GPU Gems

Chapter 34. Integrating Hardware Shading into Cinema 4D

Jörn Loviscach

Hochschule Bremen

Most 3D graphics design software uses graphics hardware to accelerate the interactive previews shown during construction. Given the tremendous computing power of current 3D chips, there are many interesting problems to solve in terms of how this hardware can be leveraged for high-quality rendering. The goal is both to accelerate offline rendering and to provide interactive display at higher quality, thus offering better feedback to the designer. This chapter outlines how the CgFX toolkit has been used to bring these capabilities to Maxon Cinema 4D and discusses the problems that had to be solved in doing so. The approaches used can easily be generalized to similar 3D graphics design software.

34.1 Introduction

To investigate hardware-based rendering, it is not necessary to develop new 3D graphics design software from scratch. Rather, one can augment an off-the-shelf product with such functions, emulating the built-in final renderer by graphics hardware. This chapter describes the implementation of this approach as a plug-in for Maxon Cinema 4D called C4Dfx. Most of this development did not depend on our choice of host software; instead, we focused on shader programming, geometry computation, and object orientation. Thus, our techniques can readily be applied to many other 3D graphics solutions that still lack support for programmable hardware shading.

Cinema 4D offers a modeling, animation, and rendering environment, which is functionally comparable to Alias Maya and discreet 3ds max. However, Cinema 4D's support for the special features of current graphics hardware has been quite restricted up to now. Given its expandability via plug-ins, this software represents a good test case of how to apply CgFX.

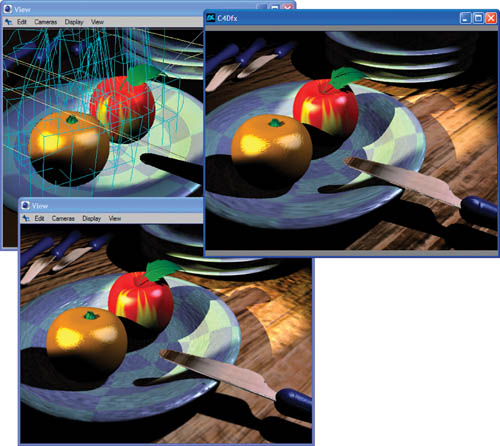

Cinema 4D's preview window, shown in the upper left of Figure 34-1, uses standard OpenGL rendering, based on Gouraud interpolation. The hardware-accelerated renderer C4Dfx (in the upper right of the figure) shows smooth, Phong-interpolated highlights, bump maps, shadows, and environment maps. Apart from the soft shadows, the quality of this rendering is nearly comparable to that of Cinema 4D's offline renderer (shown in the lower left of the figure).

Figure 34-1 Comparing Renderers

The plug-in for hardware-accelerated rendering offers a display updated at near-interactive rates as well as an offline renderer, which generates .avi files. Although the plug-in does not exactly re-create the results of the Cinema 4D offline renderer, its fast and quite accurate preview is helpful. The results of the plug-in's offline renderer may be used for fast previsualization or even as the final result.

The plug-in is built on OpenGL and the CgFX toolkit. The surface materials of the 3D software are transparently emulated by CgFX shaders, including such effects as bump maps, environment maps, and shadows. One may also load CgFX shaders from .fx files. In this case, a graphical user interface is generated on the fly, letting the designer control a shader's tweakable parameters. This approach makes it possible to use the growing number of .fx shaders not only for game development but also as regular materials for high-quality rendering. They may also cast shadows onto objects carrying the emulated Cinema 4D material. Figure 34-2 shows this result for seven of the .fx shaders included in the NVIDIA CgFX plug-in for Maya. The figure also shows that various types of 3D objects can be treated in the same manner.

Figure 34-2 CgFX Shaders and an Emulated Cinema 4D Material with Shadows

34.2 Connecting Cinema 4D to CgFX

One minor hurdle occurs when one tries to use Win32, OpenGL, and CgFX calls together with Cinema 4D's C++ API: the corresponding header files conflict and cannot be included together due to type redefinitions. This problem is solved cleanly by introducing wrapper functions and classes. They encapsulate the employed functions and classes of the API.

For third-party programmers, Cinema 4D allows only limited access to its windows. To gain full control over device contexts, the plug-in builds its own Win32 threads. Its high-quality preview and its offline renderer operate concurrently with Cinema 4D; they use off-screen buffers and deliver their results via bitmaps to Cinema 4D's dialog boxes for display. This design allows, for instance, switching between different antialiasing (multisampling) modes on the fly. Furthermore, if the user resizes one of the windows, only the displayed bitmaps are rescaled, which leads to immediate visual feedback. This is not possible when re-rendering the scene on a resize event.

Thanks to multithreading, the user can work in the construction windows without waiting for the interactive or the offline renderer to finish. However, this concurrent operation means that the plug-in has to receive a cloned copy of the scene. Otherwise, the plug-in might try, for instance, to access objects that the user has just deleted.
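The cloning requirement can be illustrated with a minimal C++ sketch. The types `Scene`, `SceneObject`, and `RenderJob` are hypothetical stand-ins rather than Cinema 4D API classes, and the thread that would call `run()` is left out; the point is only that the job reads from its own deep copy.

```cpp
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-ins for the host's scene data; not the Cinema 4D API.
struct SceneObject {
    std::string name;
    std::vector<float> vertices;
};
struct Scene {
    std::vector<SceneObject> objects;
};

// A render job takes a deep copy of the scene when it is created, so later
// edits or deletions in the live scene cannot invalidate what it reads.
class RenderJob {
public:
    explicit RenderJob(const Scene& live)
        : snapshot_(std::make_shared<const Scene>(live)) {}

    // Meant to be called from the render thread. Counting the objects that
    // carry geometry stands in for the actual drawing.
    std::size_t run() const {
        std::size_t drawn = 0;
        for (const SceneObject& obj : snapshot_->objects) {
            if (!obj.vertices.empty()) ++drawn;
        }
        return drawn;
    }

private:
    std::shared_ptr<const Scene> snapshot_;
};
```

With this arrangement the user may delete every object in the live scene right after the job has been created, and the job still sees the scene as it was at submission time.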

The plug-in uses the CgFX framework to build shaders and render scenes with them. Each time the interactive renderer is invoked for a frame or the offline renderer is invoked for a frame sequence, an instance of ICgFXEffect is built for every material, either from an .fx file or by emulating the standard Cinema 4D material (more on that below). During rendering, the corresponding ICgFXEffect is invoked for each object. Each render pass of the CgFX framework draws an OpenGL indexed vertex array, which includes normal and (multi)texture coordinate arrays.

These arrays are prepared as follows. While Cinema 4D works with spline surfaces, metaballs, and so on, the Hierarchy class of its API makes it easy for the plug-in developer to traverse the scene data structures and collect tessellated and deformed versions of all objects, together with their global transformation matrices. The vertex and polygon structures can immediately be used in OpenGL as an indexed vertex array of quadrilaterals. Note that Cinema 4D does not support arbitrary polygons, but only quadrilaterals and triangles; the latter are treated as degenerate quadrilaterals.
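As an illustration of the last point, the following C++ sketch flattens a polygon list into four indices per face. The `Polygon` record and its `isTriangle` flag are assumptions made for this example and do not reproduce the host API's polygon structure.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical polygon record; for a triangle the field d is ignored.
struct Polygon {
    std::uint32_t a, b, c, d;
    bool isTriangle;
};

// Flattens the polygons into an index list with four indices per face,
// suitable for drawing as quadrilaterals. A triangle repeats its third
// index, which turns it into a degenerate quadrilateral.
std::vector<std::uint32_t> buildQuadIndices(const std::vector<Polygon>& polys) {
    std::vector<std::uint32_t> indices;
    indices.reserve(polys.size() * 4);
    for (const Polygon& p : polys) {
        indices.push_back(p.a);
        indices.push_back(p.b);
        indices.push_back(p.c);
        indices.push_back(p.isTriangle ? p.c : p.d);
    }
    assert(indices.size() == polys.size() * 4);
    return indices;
}
```

Because every face occupies exactly four indices, the whole object can be submitted in one draw call without separating triangles from quadrilaterals.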

In addition to the spatial coordinates, typical .fx shaders require lots of additional data per vertex: normal vector, tangent vector, binormal vector, and u-v texture coordinates. The latter are immediately accessible if we require that the object be a built-in parametric type (such as a sphere or a mannequin) or require that the user assign u-v coordinates to the object.

Through the adjacency information offered by Cinema 4D, one can easily determine face normals and, by averaging the face normal vectors around a vertex, vertex normal vectors. To compute tangent and binormal vectors at a vertex, vectors pointing in the u and v directions of the texture coordinates are needed. This would be easy for spline-based objects: just take partial derivatives. However, here we have to deal with geometry that has been tessellated or was polyhedral from the very beginning. Under these circumstances, the u and v directions are not well defined. They may, for instance, be inferred for each vertex by considering the u and v coordinates of the vertex itself and those of its surrounding neighbors, trying to find a best linear approximation.
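The normal computation can be sketched in C++ as follows. This is a minimal version under stated assumptions: faces are given as four indices with triangles repeating the last one, every face contributes with equal weight, and the `Vec3` and `Quad` types are defined here only for the example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalized(Vec3 v) {
    double len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return len > 0.0 ? Vec3{v.x / len, v.y / len, v.z / len} : v;
}

// Four vertex indices per face; a triangle repeats its last index.
struct Quad { std::size_t i[4]; };

// Averages the unit face normals of all faces meeting at each vertex.
std::vector<Vec3> vertexNormals(const std::vector<Vec3>& pos,
                                const std::vector<Quad>& faces) {
    std::vector<Vec3> sum(pos.size(), Vec3{0.0, 0.0, 0.0});
    for (const Quad& f : faces) {
        for (std::size_t k = 0; k < 4; ++k) assert(f.i[k] < pos.size());
        // The cross product of the two diagonals gives the face normal and
        // still works when i[2] == i[3], that is, for a triangle.
        Vec3 n = normalized(cross(pos[f.i[2]] - pos[f.i[0]],
                                  pos[f.i[3]] - pos[f.i[1]]));
        for (std::size_t k = 0; k < 4; ++k) {
            if (k == 3 && f.i[3] == f.i[2]) continue;  // count a triangle corner once
            sum[f.i[k]] = sum[f.i[k]] + n;
        }
    }
    for (Vec3& n : sum) n = normalized(n);
    return sum;
}
```

Weighting the face normals by area or corner angle would be an equally valid choice; the equal weighting here is only the simplest one.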

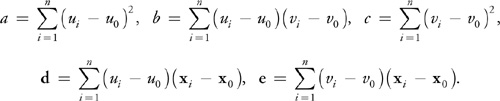

This works as follows: From the position $\mathbf{x}_i$ and the texture coordinates $u_i$, $v_i$ of each vertex $i$ adjacent to vertex 0, compute the auxiliary quantities $a$, $b$, $c$, $\mathbf{d}$, and $\mathbf{e}$ (vectors in boldface):

$$a = \sum_i (u_i - u_0)^2, \quad b = \sum_i (u_i - u_0)(v_i - v_0), \quad c = \sum_i (v_i - v_0)^2,$$

$$\mathbf{d} = \sum_i (u_i - u_0)(\mathbf{x}_i - \mathbf{x}_0), \quad \mathbf{e} = \sum_i (v_i - v_0)(\mathbf{x}_i - \mathbf{x}_0).$$

From these, determine non-normalized vectors $\mathbf{u}$, $\mathbf{v}$ in the u and v directions, possibly not yet orthogonal to the normal $\mathbf{n}$ at the central vertex 0:

$$\mathbf{u} = c\,\mathbf{d} - b\,\mathbf{e}, \quad \mathbf{v} = a\,\mathbf{e} - b\,\mathbf{d}.$$

To construct an orthogonal frame (normal, tangent, binormal), compute the tangential component of $\mathbf{u}$:

$$\mathbf{u}_t = \mathbf{u} - (\mathbf{u} \cdot \mathbf{n})\,\mathbf{n}$$

and use the following triplet of vectors: $\mathbf{n}$, $\mathbf{u}_t^0$, $\mathbf{n} \times \mathbf{u}_t^0$, where $\mathbf{u}_t^0$ denotes normalization of $\mathbf{u}_t$ and $\times$ denotes the vector product. For normal maps generated from bump maps, the tangent and binormal vectors may be replaced by other pairs, such as the vectors $(\mathbf{v} \times \mathbf{n})^0$ and $(\mathbf{n} \times \mathbf{u})^0$, which resemble the original form of bump mapping more closely and work better for u-v coordinates that are not locally orthogonal. For other choices, see Kilgard 2000.
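The frame construction can be sketched in C++. The sketch assumes that the auxiliary quantities are the sums of a least-squares fit over the neighboring vertices, namely a = Σ Δu², b = Σ Δu Δv, c = Σ Δv², d = Σ Δu Δx, and e = Σ Δv Δx, where Δ denotes the difference to vertex 0. This reading reproduces the formulas u = c d - b e and v = a e - b d, but it is an assumption, as is the choice of n × t for the binormal. All type and function names are made up for the example.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalized(Vec3 v) {
    double len = std::sqrt(dot(v, v));
    assert(len > 0.0);
    return (1.0 / len) * v;
}

struct Neighbor { Vec3 x; double u, v; };  // adjacent vertex: position and u-v
struct UvDirections { Vec3 u, v; };        // non-normalized u and v directions
struct Frame { Vec3 normal, tangent, binormal; };

// Best linear approximation of the u and v directions around vertex 0.
UvDirections uvDirections(Vec3 x0, double u0, double v0,
                          const std::vector<Neighbor>& ring) {
    double a = 0.0, b = 0.0, c = 0.0;
    Vec3 d{0.0, 0.0, 0.0}, e{0.0, 0.0, 0.0};
    for (const Neighbor& nb : ring) {
        double du = nb.u - u0, dv = nb.v - v0;
        Vec3 dx = nb.x - x0;
        a += du * du;
        b += du * dv;
        c += dv * dv;
        d = d + du * dx;
        e = e + dv * dx;
    }
    return {c * d - b * e, a * e - b * d};
}

// Orthogonal frame from the unit normal n and the u direction.
Frame orthogonalFrame(Vec3 n, Vec3 u) {
    Vec3 ut = u - dot(u, n) * n;  // tangential component of u
    Vec3 t = normalized(ut);
    return {n, t, cross(n, t)};
}
```

For a flat patch in the x-y plane whose texture coordinates coincide with x and y, this yields the tangent (1, 0, 0) and the binormal (0, 1, 0). The common denominator a c - b² of the least-squares solution is positive whenever the neighbors are not collinear in u-v space, which is why it can be dropped before normalization.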

34.3 Shader and Parameter Management

Thanks to the CgFX toolkit, it is easy to load an .fx file and use it as a shader, as described above. However, there still remains some work concerning its parameters: the user needs access to the tweakable ones, including their animation, and the untweakable ones have to be computed from the scene.

To handle .fx files, the plug-in adds a proprietary material type to Cinema 4D that can be attached to objects as usual. An instance of this material stores the name of the .fx file and the values and animations of its tweakable parameters. When the user reloads an .fx file or selects a new one, the plug-in uses an instance of ICgFXEffect to load and parse the file.

For each parameter, a special object is built, which manages the GUI of the parameter, its value, and so on. This object belongs to a subclass of a newly defined C++ base class called ParamWrapper; subclasses of this base class are used for the different types of parameters, such as color triplet or texture file name. Each ParamWrapper subclass contains virtual methods that are called to do the following:

- Build and initialize a GUI if this parameter type is tweakable

- Retrieve animated values from a GUI

- Allocate resources and initialize settings when starting to render a frame (such as loading a texture)

- Allocate resources and initialize settings when starting to render an object (for instance, hand its world matrix to CgFX)

- Release resources after an object has been rendered

- Release resources after a frame has been rendered

Furthermore, each ParamWrapper subclass can be asked to try to instantiate itself for a given parameter of an ICgFXEffect. The constructor then reads the parameter's type and annotations and reports if it was successful. On parsing an .fx file, the plug-in calls this method for each parameter until a fitting subclass is found. This approach makes it possible to encapsulate all knowledge about a parameter type inside the corresponding subclass.
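The try-each-subclass scheme might look roughly like the following C++ sketch. Only the name ParamWrapper comes from the plug-in; `ParamInfo`, the method names, the two subclasses, and the type strings are hypothetical, and the real implementation reads types and annotations from the effect object instead of a plain struct.

```cpp
#include <memory>
#include <string>

// Hypothetical summary of one effect parameter.
struct ParamInfo {
    std::string name;
    std::string type;
    bool tweakable;
};

class ParamWrapper {
public:
    virtual ~ParamWrapper() = default;
    virtual void buildGui() {}     // only meaningful for tweakable types
    virtual void beginFrame() {}   // e.g. load a texture
    virtual void beginObject() {}  // e.g. hand over the world matrix
    virtual void endObject() {}
    virtual void endFrame() {}
    virtual std::string kind() const = 0;
};

class ColorParam : public ParamWrapper {
public:
    // Returns a wrapper if this subclass fits the parameter, else nullptr.
    static std::unique_ptr<ParamWrapper> tryCreate(const ParamInfo& p) {
        if (p.type != "float3" || !p.tweakable) return nullptr;
        return std::make_unique<ColorParam>();
    }
    std::string kind() const override { return "color"; }
};

class TextureParam : public ParamWrapper {
public:
    static std::unique_ptr<ParamWrapper> tryCreate(const ParamInfo& p) {
        if (p.type != "texture") return nullptr;
        return std::make_unique<TextureParam>();
    }
    std::string kind() const override { return "texture"; }
};

using Factory = std::unique_ptr<ParamWrapper> (*)(const ParamInfo&);

// Asks each subclass in turn until one accepts the parameter.
std::unique_ptr<ParamWrapper> wrapParameter(const ParamInfo& p) {
    static const Factory factories[] = {&ColorParam::tryCreate,
                                        &TextureParam::tryCreate};
    for (Factory f : factories) {
        if (auto wrapper = f(p)) return wrapper;
    }
    return nullptr;  // unknown type: the caller reports it to the console
}
```

Supporting a new parameter type then means writing one more subclass and adding its `tryCreate` to the table; no other code needs to know about it.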

Through the subclasses, some of the tweakable parameters receive special GUI elements offered by Cinema 4D. For textures, names of .dds files are stored. Positions and directions are linked to the position or the z axis of other objects, preferably light sources; these can be dragged to and dropped onto an input field of the GUI. The untweakable and hence GUI-less matrix parameters receive special treatment. The world matrix is delivered by the API as a by-product on traversing the scene. The view and projection matrices are computed from the camera settings. The products of these matrices, perhaps inverted or transposed, are computed if the shader requires them.

For convenience, the plug-in offers to open the .fx file in a user-specified editor. Upon reloading an edited .fx file, existing parameter values are conserved if they are the correct type and in the allowed range. If the CgFX toolkit reports an error upon loading an .fx file, this file is opened in the editor with the cursor placed on the error's line. All messages about errors, as well as problems such as unknown parameter types, are written to the text console of Cinema 4D.

34.4 Emulating the Offline Renderer

Instead of loading .fx files, the user may simply use standard Cinema 4D surface materials. The plug-in converts these internally and transparently to memory-based .fx shaders, fitting well into the process described for external shader files. The plug-in employs string streams to build the internal .fx shaders.

A Cinema 4D material can contain bitmap files, movie files, or procedural shaders as maps. The plug-in reads these pixel by pixel in a user-selectable resolution (typically 256x256 texels) and builds corresponding textures. Three types of maps are converted: diffuse maps, bump maps, and environment maps. The latter are used with spherical mapping in the ray tracer; the plug-in computes cube maps from them in order to optimize resolution. The bump maps are grayscale height fields. For these, a normal map is formed from horizontal and vertical pixelwise differences of the bump map, scaled by its size to compensate for steeper gradients at smaller resolutions.
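The size compensation for bump maps can be made concrete with a small C++ sketch. It computes only the scaled differences; how they are packed into the color channels of the normal map is not shown, and wrapping around at the borders is an assumption made here for tiling textures. The names `Slope` and `bumpSlopes` are invented for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Slope { float du, dv; };  // horizontal and vertical height differences

// Forward differences of a grayscale height field, multiplied by the map
// size so that the same image yields the same slopes at any resolution.
std::vector<Slope> bumpSlopes(const std::vector<float>& height,
                              std::size_t width, std::size_t rows) {
    assert(width > 0 && rows > 0 && height.size() == width * rows);
    std::vector<Slope> out(height.size());
    for (std::size_t y = 0; y < rows; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            std::size_t xn = (x + 1) % width;  // wrap at the right border
            std::size_t yn = (y + 1) % rows;   // wrap at the bottom border
            float here = height[y * width + x];
            out[y * width + x].du =
                (height[y * width + xn] - here) * static_cast<float>(width);
            out[y * width + x].dv =
                (height[yn * width + x] - here) * static_cast<float>(rows);
        }
    }
    return out;
}
```

A linear ramp sampled with 4 texels has pixel differences of 0.25, and with 8 texels of 0.125; after scaling, both give a slope of 1, so the bump strength does not depend on the chosen resolution.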

For shadow generation, we relied on a shadow map method similar to the one used in Everitt et al. 2002. In contrast to a hardware-accelerated shadow-volume algorithm (Everitt and Kilgard 2002), it may later quite easily be extended to treat objects deformed by displacement maps or vertex shaders.

For each cone light in the scene tagged as shadow casting, a depth map of user-controllable size is generated (typically 256x256 texels). To this end, all objects of the scene, regardless of the surface material they carry, are rendered into an off-screen buffer without textures and shaders. This requires traversing the scene as in the final rendering described earlier, although neither texture coordinates nor normal, tangent, or binormal vectors are computed during this pass. In fact, the rendering of the shadow maps does not use CgFX at all. To use an off-screen buffer as a depth-map texture, it is built with the OpenGL extension WGL_NV_render_depth_texture.

Another texture is used to store Cinema 4D's proprietary shape of specular highlights. It involves several transcendental functions, such as power and arc cosine. This complex relationship is precomputed per frame as a 1D texture of 1024 texels.
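Baking such a lookup table can be sketched in C++ as follows. The function `exampleHighlight` is a made-up placeholder that merely combines a power and an arc cosine; it is not Cinema 4D's proprietary formula, and its two constants are arbitrary.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Samples shape(coord) for coord in [0, 1] into a table that can be
// uploaded as a 1D texture.
std::vector<float> bakeLut(const std::function<double(double)>& shape,
                           std::size_t texels = 1024) {
    assert(texels >= 2);
    std::vector<float> lut(texels);
    for (std::size_t i = 0; i < texels; ++i) {
        double coord = static_cast<double>(i) / static_cast<double>(texels - 1);
        lut[i] = static_cast<float>(shape(coord));
    }
    return lut;
}

// Placeholder highlight shape (an assumption, not the host's formula).
// The argument is the cosine of the angle between view and reflected ray.
double exampleHighlight(double cosAngle) {
    const double width = 0.5;    // highlight half-width in radians, arbitrary
    const double falloff = 2.0;  // exponent, arbitrary
    double angle = std::acos(cosAngle);
    double t = std::max(0.0, 1.0 - angle / width);
    return std::pow(t, falloff);
}
```

Calling `bakeLut(exampleHighlight)` produces 1024 values that start at zero and end at one. Because the table is rebuilt per frame, animated highlight settings are picked up without touching the shader.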

To allow an unlimited number of lights, one possibility is to use a single render pass for each light, possibly adding another pass for self-illumination. However, this causes a large amount of recomputation, such as for normalization of interpolated vectors. Joining several passes into one avoids this recomputation but comes at a price: because current graphics cards support only a fixed number of textures, the number of light sources that can cast shadows is strictly limited.

The vertex shader part of the generated CgFX shaders is quite simple:

- The position is transformed to normalized screen coordinates using the WorldViewProjection matrix.

- Tangent and binormal vectors are transformed to world coordinates through the World matrix.

- To preserve orthogonality, the normal vector is transformed to world coordinates through the inverse transposed World matrix.

- The position in world coordinates is computed using the World matrix.

- Finally, a unit vector from the viewer's position (which can be read off from the inverse transposed View matrix) to the vertex is determined in world coordinates.
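Why the normal needs the inverse transposed matrix can be checked with a few lines of C++. The sketch is restricted to the 3x3 linear part of the World matrix, and the helper names are invented for the example.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

struct Mat3 { Vec3 row[3]; };  // linear part of a transformation, by rows

Vec3 transform(const Mat3& m, Vec3 v) {
    return {dot(m.row[0], v), dot(m.row[1], v), dot(m.row[2], v)};
}

// The rows of the inverse transpose are the cofactor rows divided by the
// determinant: (r1 x r2, r2 x r0, r0 x r1) / det.
Mat3 inverseTranspose(const Mat3& m) {
    Vec3 c0 = cross(m.row[1], m.row[2]);
    Vec3 c1 = cross(m.row[2], m.row[0]);
    Vec3 c2 = cross(m.row[0], m.row[1]);
    double det = dot(m.row[0], c0);
    assert(det != 0.0);
    Mat3 r;
    r.row[0] = Vec3{c0.x / det, c0.y / det, c0.z / det};
    r.row[1] = Vec3{c1.x / det, c1.y / det, c1.z / det};
    r.row[2] = Vec3{c2.x / det, c2.y / det, c2.z / det};
    return r;
}
```

Take a scaling by 2 along x, a surface tangent (1, 1, 0), and its normal (1, -1, 0). The tangent becomes (2, 1, 0). Transforming the normal with the same matrix gives (2, -1, 0), which is no longer perpendicular to the tangent, whereas the inverse transpose gives (0.5, -1, 0), which is.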

Example 34-1 shows the pixel shader generated when there is one cone light with shadow. When there are more light sources, parameters such as depthSampler1 are added and the block between the braces {...} is repeated accordingly. The listing's initial part is standard; the computations done per light source deserve some explanation, however. Ld is the world-space vector from the vertex to the light source. baseCol sums the contribution of the light source: first the diffuse, and then the specular part. The latter reads the precomputed highlight shape using specShapeSampler. If the reflected ray points away from the viewer, the texture coordinate for specShapeSampler becomes negative and is clamped to zero, where the texture is zero, too.

Example 34-1. The Pixel Shader Generated for a Material in a Scene Containing a Single Shadow-Casting Cone Light

    pixelOutput mainPS(vertexOutput IN,
                       uniform sampler2D diffuseSampler,
                       uniform sampler1D specShapeSampler,
                       uniform sampler2D normalSampler,
                       uniform samplerCUBE enviSampler,
                       uniform sampler2D depthSampler0,
                       uniform float4 lumiCol,
                       uniform float4 diffCol,
                       uniform float bumpHeight,
                       uniform float4 enviCol,
                       uniform float4 specCol,
                       uniform float4 lightPos0,
                       uniform float4 lightCol0,
                       uniform float4 lightParams0,
                       uniform float4 lightUp0,
                       uniform float4 lightDir0,
                       uniform float4 lightSide0)
    {
      pixelOutput OUT;
      float3 Vn = normalize(IN.view);
      float3 Nn = normalize(IN.norm);
      float3 tangn = normalize(IN.tang);
      float3 binormn = normalize(IN.binorm);
      float3 bumps = bumpHeight * (tex2D(normalSampler, IN.uv.xy).xyz * 2.0 -
                                   float3(1.0, 1.0, 2.0));
      float3 Nb = normalize(bumps.x * tangn + bumps.y * binormn +
                            (1.0 + bumps.z) * Nn);
      float3 env = texCUBE(enviSampler, reflect(Vn, Nb)).rgb;
      float3 colorSum = lumiCol.rgb + env * enviCol.rgb;
      float3 baseDiffCol = diffCol.rgb + tex2D(diffuseSampler, IN.uv.xy).rgb;
      {
        float3 Ld = lightPos0.xyz - IN.wPos;
        float3 Ln = normalize(Ld);
        float3 baseCol = max(0.0, dot(Ln, Nb)) * baseDiffCol;
        float spec = tex1D(specShapeSampler, dot(Vn, reflect(Ln, Nb))).r;
        baseCol += specCol.rgb * spec;
        float3 L1 = (Ln / dot(Ln, lightDir0.xyz) - lightDir0.xyz) *
                    lightParams0.z;
        float shadowFactor = max(lightParams0.x,
                                 smoothstep(1.0, lightParams0.w, length(L1)));
        float d = dot(Ld, lightDir0.xyz);
        float z = 10.1010101 / d + 1.01010101;
        float2 depthUV = float2(0.5, 0.5) +
                         0.5 * float2(dot(L1, lightSide0.xyz),
                                      dot(L1, lightUp0.xyz));
        shadowFactor *= max(lightParams0.y,
                            tex2Dproj(depthSampler0,
                                      float4(depthUV.x, depthUV.y,
                                             z - 0.00005, 1.0)).x);
        colorSum += shadowFactor * baseCol * lightCol0.rgb;
      }
      OUT.col = colorSum;
      return OUT;
    }

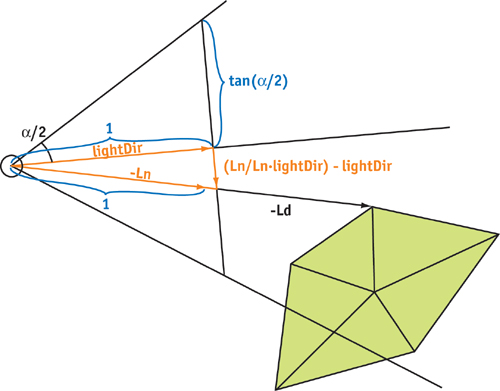

The remaining computations deal with the soft cone and the shadow, combined into the variable shadowFactor. L1 measures how far away the vertex is from the light's optical axis; this vector has length 1 if the vertex is exactly on the rim of the cone, as shown in Figure 34-3. To this end, lightParams0.z has to be set to the inverse of the tangent of the half aperture angle of the cone. To create a soft cone, lightParams0.w is set to the quotient of the tangent of the half aperture angle of the inner, fully lighted part of the cone divided by the former tangent. From this, the smoothstep function creates a soft transition. lightParams0.x is set to 1.0 for omni lights and 0.0 for cone lights, so that only the latter are restricted to a cone.
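The cone computation can be replayed on the CPU with the following C++ sketch, which mirrors the shader's expressions for L1 and the smoothstep falloff. The function names and the choice of passing angles instead of the precomputed lightParams0 components are assumptions of this example. As in the shader, vertices are assumed to lie in front of the light, and the smoothstep is evaluated by its polynomial formula even though its first edge is larger than the second.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
double length(Vec3 v) { return std::sqrt(dot(v, v)); }

double smoothstep(double edge0, double edge1, double x) {
    double t = std::min(1.0, std::max(0.0, (x - edge0) / (edge1 - edge0)));
    return t * t * (3.0 - 2.0 * t);
}

// Length of the shader's L1: 0 on the optical axis, 1 on the rim of the cone.
// lightDir must be a unit vector along the optical axis.
double coneOffset(Vec3 vertex, Vec3 lightPos, Vec3 lightDir,
                  double outerHalfAngle) {
    Vec3 ld = lightPos - vertex;
    Vec3 ln = (1.0 / length(ld)) * ld;
    double axial = dot(ln, lightDir);
    assert(axial != 0.0);
    double invTan = 1.0 / std::tan(outerHalfAngle);  // role of lightParams0.z
    return length(invTan * ((1.0 / axial) * ln - lightDir));
}

// Light factor before shadowing: 1 inside the inner cone, 0 outside the rim.
double coneFactor(double offset, double innerHalfAngle, double outerHalfAngle,
                  bool omni) {
    double inner = std::tan(innerHalfAngle) / std::tan(outerHalfAngle);  // .w
    double floorValue = omni ? 1.0 : 0.0;                                // .x
    return std::max(floorValue, smoothstep(1.0, inner, offset));
}
```

For a light at the origin shining along +z with a half aperture of 45 degrees, the vertex (0, 0, 5) has offset 0 and the vertex (5, 0, 5) has offset 1, so the first is fully lit and the second lies exactly where the light fades to zero.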

Figure 34-3 The Geometry of a Cone Light

To generate shadowing, d measures the z coordinate of the vertex in the coordinate frame of the light source. This is then converted to the depth coordinate used in the depth map and afterward is compared to the actual value found in that map. Note that for tex2Dproj to work, the texture has to be defined with glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_COMPARE_SGIX, GL_TRUE).

34.5 Results and Performance

Figure 34-1 showed that the rendered images can withstand comparison with the original offline renderer. Complex features such as transparency and reflection effects are still missing, but they may be added in a later version—for instance, through environment maps rendered on the fly and layered rendering (Everitt 2001). Further possible improvements include soft shadows (Hasenfratz et al. 2003), physically based lighting models, and indirect illumination (Kautz 2003).

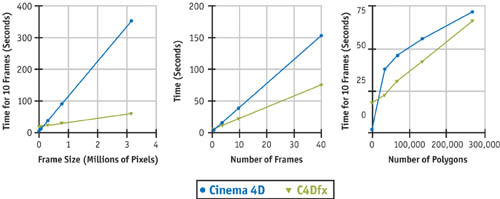

Of course, the main question is what speed the hardware-accelerated solution offers. In terms of raw megapixels per second, the graphics card can asymptotically outperform the offline renderer by nearly a factor of nine. This can be seen from the growth of render time with frame size in Figure 34-4. However, as Figure 34-4 also shows, compiling CgFX shaders on the fly and, in particular, building OpenGL textures from Cinema 4D texture maps and procedural shaders leads to some startup costs before any frame is rendered. Thus, the hardware-accelerated solution excels only for large frame sizes and frame sequences, but not for single frames. To improve on this result, an intelligent approach concerning the textures is needed. The textures need to be conserved as long as possible, rather than rebuilt each frame. This requires an automatic, in-depth examination of the animation.

Figure 34-4 Comparing Benchmark Results

The benchmarks performed use a base scene of approximately 33,000 polygons, almost all quadrilaterals, and an animation of 10 frames at 640x480 pixels (0.31 million pixels) with no antialiasing. The system used was a Pentium 4 PC at 2.5 GHz with a GeForce FX 5900, running Cinema 4D release 8.207. In each part of Figure 34-4, one of three parameters is varied: frame size, sequence length, and complexity.

With growing geometric complexity of the scene, the offline renderer of Cinema 4D obviously profits from some optimization techniques. The hardware-accelerated solution needs a similar improvement. Easiest to implement would be view-frustum culling and hardware-based occlusion culling on a per-object basis.
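Per-object view-frustum culling is not part of the plug-in as described. A minimal C++ sketch of how it could look, using one bounding sphere per object and inward-facing frustum planes, is given below; all names are hypothetical.

```cpp
#include <vector>

struct Vec3 { double x, y, z; };

double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Plane with unit normal pointing into the frustum; a point p is on the
// inner side if dot(normal, p) + offset >= 0.
struct Plane { Vec3 normal; double offset; };

struct Sphere { Vec3 center; double radius; };

// An object can be skipped if its bounding sphere lies entirely on the
// outer side of at least one frustum plane.
bool isCulled(const Sphere& s, const std::vector<Plane>& frustum) {
    for (const Plane& p : frustum) {
        if (dot(p.normal, s.center) + p.offset < -s.radius) return true;
    }
    return false;
}
```

The test is conservative: a sphere near a frustum corner may pass although the object is invisible, but no visible object is ever discarded.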

34.6 Lessons Learned

The CgFX toolkit makes it feasible to add both hardware-accelerated, high-quality displays and hardware-accelerated, offline renderers to existing software. The solution presented here fits into roughly 150 KB of C++ source code.

CgFX allows programmers to address a wide range of graphics cards from different vendors via a set of techniques defined in an .fx file. However, for optimum performance, it is a good idea to carefully count in advance how many parameters and textures will be needed simultaneously. Even the latest graphics cards may deliver only limited power in this respect.

Shaders usually form only a tiny part of a program. The remainder of the software tends to become quite specific in its demands for hardware. The project presented here started out with the large range of hardware compatibility offered by CgFX itself. But adding any slightly advanced feature, such as rendering into an off-screen buffer, multisampling, or shadow generation, cuts down the target range. Some OpenGL extensions may be missing or different on some graphics cards, or their implementation may even contain serious bugs.

Our plug-in functions only on Windows, despite the fact that Cinema 4D is available for both Windows and Mac OS. The unavailability of CgFX on platforms other than Windows (at the time of development) accounts for only part of the problem. A cross-platform API such as Cinema 4D's may hide many of the differences in the handling of windows, events, and threads. However, this design conflicts with the goal of providing enough functionality and granting enough access to the underlying structures to fully support complex OpenGL-based software.

Users of graphics design software may create arbitrarily complex scenes, but they still expect a decent response time from the software. During the development of the C4Dfx plug-in, we learned how crucial it is to understand and plan for the elaborate interworkings of threads and how data and events are shared and transmitted between them.

Another area requiring profound design consideration from the very beginning turned out to be the management of textures and shaders. Creating textures, loading them to the graphics card, and compiling shaders needs to be avoided at nearly all cost, as the benchmarks show. This restriction demands a highly intelligent design. Of course, such a design is difficult to implement as an add-on to existing software.

Real-time shaders can closely emulate many functions that usually are still being computed on the CPU. For instance, the soft rims of the light cones and the specular highlights of the plug-in described here look identical to those of the software renderer. But such a perfect reproduction requires access to the original algorithms or extensive reverse engineering. Even standard features such as bump mapping can be implemented in dozens of ways, all of which yield slightly different results. How do you form the tangent and the binormal? How do you mix the bumped normal vector with the original one, depending on the bump strength parameter? How do you compute the normal map from the bump map? Do you use the original, possibly non-power-of-two, bump-map image or some mipmap level? In this respect and many others, Cg has presented itself as an ideal tool for experimenting with computer graphics algorithms.

34.7 References

Everitt, C. 2001. "Order-Independent Transparency." Available online at http://developer.nvidia.com/view.asp?IO=order_independent_transparency

Everitt, C., and M. J. Kilgard. 2002. "Practical and Robust Stenciled Shadow Volumes for Hardware-Accelerated Rendering." Available online at http://developer.nvidia.com/object/robust_shadow_volumes.html

Everitt, C., A. Rege, and C. Cebenoyan. 2002. "Hardware Shadow Mapping." Available online at http://developer.nvidia.com

Hasenfratz, J.-M., M. Lapierre, N. Holzschuch, and F. X. Sillion. 2003. "A Survey of Real-time Soft Shadow Algorithms." Eurographics 2003 State of the Art Reports, pp. 1–20.

Kautz, J. 2003. "Hardware Lighting and Shading." Eurographics 2003 State of the Art Reports, pp. 33–57.

Kilgard, M. J. 2000. "A Practical and Robust Bump-Mapping Technique for Today's GPUs." Available online at http://developer.nvidia.com

Maxon. Cinema4D. http://www.maxon.net

Copyright

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals.

The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein.

The publisher offers discounts on this book when ordered in quantity for bulk purchases and special sales. For more information, please contact:

U.S. Corporate and Government Sales

(800) 382-3419

corpsales@pearsontechgroup.com

For sales outside of the U.S., please contact:

International Sales

international@pearsoned.com

Visit Addison-Wesley on the Web: www.awprofessional.com

Library of Congress Control Number: 2004100582

GeForce™ and NVIDIA Quadro® are trademarks or registered trademarks of NVIDIA Corporation.

RenderMan® is a registered trademark of Pixar Animation Studios.

"Shadow Map Antialiasing" © 2003 NVIDIA Corporation and Pixar Animation Studios.

"Cinematic Lighting" © 2003 Pixar Animation Studios.

Dawn images © 2002 NVIDIA Corporation. Vulcan images © 2003 NVIDIA Corporation.

Copyright © 2004 by NVIDIA Corporation.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form, or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior consent of the publisher. Printed in the United States of America. Published simultaneously in Canada.

For information on obtaining permission for use of material from this work, please submit a written request to:

Pearson Education, Inc.

Rights and Contracts Department

One Lake Street

Upper Saddle River, NJ 07458

Text printed on recycled and acid-free paper.

5 6 7 8 9 10 QWT 09 08 07

5th Printing September 2007
