GPU Gems

Chapter 32. An Introduction to Shader Interfaces

Matt Pharr

NVIDIA

The release of Cg 1.2 introduced an important new feature to the Cg programming language: shader interfaces. Shader interfaces provide functionality similar to interfaces in Java or C# and abstract base classes in C++. They allow code to be written that calls methods of an abstract interface without knowing which particular implementation of the interface will be used. Before the program can run, the application must supply the Cg runtime with objects that implement each interface the program uses; the runtime then assembles the final shader code that runs on the hardware.

Object-oriented languages provide this kind of abstraction to help structure large software systems. In Cg, the motivation for adding it was different: to make it easy to construct shaders at runtime out of multiple pieces of source code. By letting applications build shaders by composing modular pieces, each implementing a well-defined bit of functionality, shader interfaces give the application greater flexibility in creating shading effects and help hide the limitations of hardware profiles, with no reduction in the quality or efficiency of the final compiled code that runs on the GPU.

For example, consider writing a fragment program that computes a shaded color for a surface while supporting a variety of light-source types: point lights, spotlights, complex projective light sources with shadow maps and procedurally defined distance attenuation functions, and so on. A very inefficient approach would be to pass a "light type" parameter into the program and use a series of if tests to select the light-source model. Alternatively, the application could wrap each model in preprocessor #ifdef guards and manually compile a specialized version of the program for the light-source model actually in use. This approach is somewhat unwieldy, and it doesn't scale elegantly to multiple lights, especially if the number of lights isn't known ahead of time either.

Shader interfaces provide a type-safe solution to this kind of problem, and they do so in a way that reduces the burden on the programmer without compromising performance. The developer can write generic shaders, with large chunks of the functionality expressed in terms of shader interfaces (such as a basic "light source" interface). As new implementations of these interfaces are developed, existing shaders can use them without being rewritten. Applications that use this feature no longer need string-concatenation routines that synthesize Cg source code on the fly, an approach that is both error prone and unwieldy.

In this chapter, we describe the basic syntax for shader interfaces and how they are used with the Cg compiler and runtime. To give a sense of possible applications for shader interfaces, we then show three examples of how they can be used in Cg: (1) to choose between two methods of vector normalization, (2) to support variable numbers of lights in shaders, and (3) to describe materials and texture in a general, hierarchical manner.

Two other new features in Cg 1.2 complement shader interfaces, improving both the flexibility and the efficiency of shaders. The first, unsized arrays, lets the programmer declare and use arrays without a fixed size; the array size must be provided to the Cg runtime before the program can be executed. This feature is particularly handy for shaders that take a variable number of light parameter sets, skinning matrices, and so on. The second is the ability to flag shader parameters as constant values. Knowing that a parameter is constant lets the compiler optimize the code substantially better. For example, an if test that depends only on constant parameters can be fully evaluated at compile time, and the compiler can then either discard the code inside the block or execute it unconditionally, depending on the test's value.

32.1 The Basics of Shader Interfaces

We start with a simple example based on a fragment program that uses shader interfaces to choose between two different methods of normalizing vectors: one based on a cube-map texture lookup and one based on computing the normalized vector numerically. The application might want to choose between these two vector normalization methods at runtime for maximum performance, depending on the capabilities of the graphics hardware available. This is a simple example, but it helps convey some of the key ideas behind shader interfaces.

First, we need to describe a generic interface for vector normalization. The keyword interface introduces a new interface, which must be named with a valid identifier. The methods provided by the interface are then declared between the braces, and the declaration of the interface is terminated by a semicolon.

```cg
interface Normalizer {
    float3 nrm(float3 v);
};
```

Multiple methods can be declared inside an interface, and the usual function-overloading rules apply. For example, we could also have declared a half3 nrm(half3 v) method, and the appropriate version would be chosen based on the type of the vector passed to it.

Given this declaration, we can write a Cg program that takes an instance of the Normalizer interface as a parameter. Listing 32-1 shows a very simple fragment program that computes a diffuse lighting term from a single point light. The nrm() function in the Normalizer interface is called twice: once to normalize the light vector from the light position to the point being shaded, and once to normalize the interpolated normal from the vertex shader.

Listing 32-1. Simple Fragment Program Using a Shader Interface to Hide the Details of the Vector Normalization Technique

```cg
float4 main(float3 Pworld : TEXCOORD0,
            float3 Nworld : TEXCOORD1,
            float3 Kd     : COLOR0,
            uniform float3 Plight,
            uniform Normalizer normalizer) : COLOR
{
    float3 L = normalizer.nrm(Plight - Pworld);
    float3 C = Kd * max(0, dot(L, normalizer.nrm(Nworld)));
    return float4(C, 1);
}
```

Because the shader reaches normalization only through the Normalizer interface, we could write it without knowing which implementation will be bound to the normalizer parameter, and therefore without knowing how normalization will actually be done at runtime.

We next need to define an implementation or two of the interface. The first is StdNormalizer, which normalizes the vector with the Cg Standard Library routine normalize(), dividing the vector's components by its length. We define a new structure and indicate that it implements the Normalizer interface by following the structure name with a colon and the name of the interface it provides. Having promised to implement the interface, the structure must define implementations of all of the functions declared in the interface. As in Java and C#, the method implementations must appear inside the structure definition.

```cg
struct StdNormalizer : Normalizer {
    float3 nrm(float3 v) {
        return normalize(v);
    }
};
```

We might also define a shader object that normalizes vectors using a cube-map texture, where the faces of the texture have been initialized so that they hold the normalized vector for their corresponding direction. In the next snippet, CubeNormalizer declares a samplerCUBE parameter inside its structure to hold this cube map, and its implementation of nrm() uses it in the usual manner.

```cg
struct CubeNormalizer : Normalizer {
    samplerCUBE normCube;
    float3 nrm(float3 v) {
        return texCUBE(normCube, v).xyz;
    }
};
```

Given a program that has interface parameters like normalizer in the preceding code, we need to use the Cg runtime to specify a particular implementation of the interface before the program can execute. We first need to tell the runtime not to immediately try to compile the program we give it when we call cgCreateProgram() or cgCreateProgramFromFile(), as it does by default. Otherwise, the compilation will fail, because the interface hasn't yet been bound to a specific implementation. The cgSetAutoCompile() routine controls this behavior; here we will set it to not compile the program until we tell it to do so explicitly. (There are other settings available for cgSetAutoCompile() that will automatically recompile the program as needed, though possibly at a cost of excess recompilations.)

```c
cgSetAutoCompile(context, CG_COMPILE_MANUAL);
CGprogram prog = cgCreateProgramFromFile(context, CG_SOURCE, "frag.cg",
                                         profile, NULL, NULL);
```

The CGprogram handle returned by cgCreateProgramFromFile() can't yet be loaded into the GPU; we need to connect an instance of a StdNormalizer structure to the normalizer parameter of the program first. Fortunately, the runtime allows us to create an instance of a StdNormalizer structure purely through Cg runtime API calls. We turn the string type name "StdNormalizer" into a CGtype value with the cgGetNamedUserType() call, and then we use cgCreateParameter() with that type to create a StdNormalizer instance out of thin air.

```c
CGtype nrmType = cgGetNamedUserType(prog, "StdNormalizer");
CGparameter stdNorm = cgCreateParameter(context, nrmType);
```

If StdNormalizer had parameters inside the structure, we could now initialize them with the cgSetParameter*() routines or profile-specific parameter-setting routines, including those for binding texture units to samplers, although we don't need to worry about that for StdNormalizer. (We would need to bind an appropriate cube-map texture to the CubeNormalizer structure's normCube parameter, however.)

Having created the instance of the StdNormalizer, all that's left is to get a parameter handle for the interface from the main() routine's parameter list and to connect the StdNormalizer that we just created to it.

```c
CGparameter normIface = cgGetNamedParameter(prog, "normalizer");
cgConnectParameter(stdNorm, normIface);
```

We now have a fully specified program in which every interface parameter is bound to an implementation. We wrap up by compiling the program manually. After that, we can use the program in the normal fashion, loading it on the GPU and rendering with it.

```c
cgCompileProgram(prog);
```

If we later want to swap in a different Normalizer implementation for this program, we just call cgConnectParameter() again to connect the new implementation and then recompile the program. Furthermore, if we had multiple programs in the same CGcontext, they could all share a single StdNormalizer instance: we would connect it to each program's parameter with a separate call to cgConnectParameter(), rather than creating multiple instances of StdNormalizer.

32.2 A Flexible Description of Lights

A more interesting application of shader interfaces is to use them to handle the problem of writing a Cg program that supports an arbitrary type of light source. In conjunction with another new feature of Cg 1.2, unsized arrays, shader interfaces provide a clean solution to the general problem of writing a program that supports an arbitrary number of light sources, where each light source may be a totally different type of light.

Previously, one solution to this problem was to render the scene once per light source, adding together the results of each pass to compute the final image. This is an inefficient approach, because it requires running vertex programs multiple times, and because it leads to unnecessary repeated computation in the fragment programs each time through. It is also unwieldy, because adding a new type of light means having to write a new instance of every one of the existing surface shaders for the new light model. With shader interfaces, if we have a variety of different types of surface reflection models implemented using the same light-source interface, then adding a new type of light doesn't require any source code modification to the already-existing shaders.

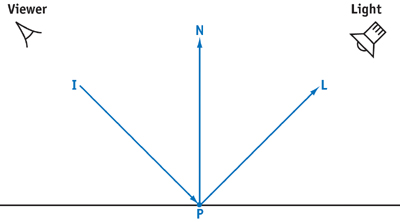

A basic, generic interface that provides a good abstraction for the behavior of diverse types of light sources passes the point being shaded P to the light source. The light source in turn is responsible for initializing a variable L, which gives the direction of incoming light at P, and returning the amount of light arriving at P. Figure 32-1 shows the basic setting for computing illumination from a light in this manner.

Figure 32-1 Basic Setting for Lighting Computation

```cg
interface Light {
    float3 illuminate(float3 P, out float3 L);
};
```

Given this interface, we might define a simple shadow-mapped spotlight that implements the interface. This spotlight holds a sampler2D for its shadow map and uses a cube-map texture to describe the angular distribution of light. The 3D position of the light and its color round out the light's definition. For this example, we assume that the light's position and the position of the point being shaded are already expressed in the same coordinate system, so that we don't have to worry about transforming them to a common coordinate system.

The implementation of this light is quite straightforward; the light direction L is easily computed using the light's position, and the amount of light arriving at P is the product of the light's color, the visibility factor from the shadow-map lookup, and the texture lookup for the spotlight's intensity in the direction to the point receiving light. See Listing 32-2.

Listing 32-2. The SpotLight Light Source, Defined As the Implementation of the Light Interface

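```cg
struct SpotLight : Light {
    sampler2D shadow;
    samplerCUBE distribution;
    float3 Plight, Clight;

    float3 illuminate(float3 P, out float3 L) {
        L = normalize(Plight - P);
        // Shadow-map visibility. As a simplification, P is used directly;
        // a complete light would first project P into shadow-map space.
        float3 visibility = tex2D(shadow, P).xxx;
        // Angular intensity, looked up in the direction from the light
        // toward the point being lit, which is -L.
        return Clight * visibility * texCUBE(distribution, -L).xyz;
    }
};
```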

One can easily implement a wide variety of light types with this interface: point lights, directional lights, lights that project a texture into the scene, lights with linear or distance-squared fall-off, lights with fall-off defined by a 1D texture map, and many others.

In the fragment program in Listing 32-3, the Cg code loops over all of the lights illuminating the surface, calls each one's illuminate() function, and accumulates a sum of basic diffuse shading terms. Cg's new unsized array feature lets us declare an array of indeterminate length by writing [] after the array name, lights. We will shortly see how the Cg runtime is used to set the number of elements in an unsized array before the shader finally executes. Note also that arrays have a length member that gives their number of elements. This lets us loop over all of the items in an unsized array even on profiles that don't natively support a variable number of loop iterations: because the array size is set before the program is compiled, the loop bound is known at compile time.

Listing 32-3. Fragment Shader That Takes an Arbitrary Number of Light Interface Parameters

```cg
float4 main(float3 Pworld : TEXCOORD0,
            float3 Nworld : TEXCOORD1,
            float2 uv     : TEXCOORD2,
            uniform sampler2D diffuse,
            uniform Light lights[]) : COLOR
{
    float3 C = float3(0, 0, 0);
    Nworld = normalize(Nworld);
    float3 Kd = tex2D(diffuse, uv).xyz;
    for (int i = 0; i < lights.length; ++i) {
        float3 L, Cl;
        Cl = lights[i].illuminate(Pworld, L);
        C += Kd * Cl * max(0, dot(Nworld, L));
    }
    return float4(C, 1);
}
```

Before this shader can be used in a program, two things must be done using the Cg runtime. First, the final length of the unsized lights[] array must be set, and second, each of the interface instances in the array must be bound to an instance of a structure that implements the Light interface. For the first problem, the Cg runtime has a routine to set the length of an unsized array:

```c
void cgSetArraySize(CGparameter param, int size);
```

The second problem is solved as in the previous example: we call cgCreateParameter() to create an instance of each light, and cgConnectParameter() to connect each instance to the corresponding element of the array of light interfaces (cgGetArrayParameter() returns the handle for an individual array element). As in that example, the same light-source instance can be shared by multiple programs in the same Cg context.

Because this shader uses shader interfaces and unsized arrays, it works with any implementation of the Light interface and with any number of light sources, none of which needs to be hard-coded. We have written a very flexible Cg program cleanly and without paying an efficiency penalty at runtime: final GPU instructions are generated for the program only after the number of lights and their individual types have been set, so there is no performance price for using shader interfaces and unsized arrays.

Note that if we had instead solved this problem by re-rendering the scene once per light, then we'd have had to renormalize Nworld and redo the texture lookup for Kd for each pass. If the fragment program did more complex texturing operations than the simple ones here, the performance cost would be correspondingly worse.

32.3 Material Trees

Our final example shows how the composition of interfaces leads to interesting ways of describing materials. One of the most successful approaches to describing complex materials and textures in graphics has been to decompose them into trees or networks (Cook 1984), in which a collection of nodes, each performing a simpler operation, is hooked up to compute a complex shading model. (Maya's HyperShade and 3ds max's material editor are two well-known examples of this approach.) Not only is this a convenient way to describe complex materials in content-creation applications, but it's also an elegant way to structure material and texture libraries in applications, giving them great flexibility in constructing surface descriptions at runtime. Before shader interfaces were added to Cg, there wasn't a clean way to express materials described in this manner in Cg programs.

In this section, we show how shader interfaces make it possible to map this method of material description cleanly to Cg. We distinguish between a material, which is the full procedural description of how a surface reacts to light, and a texture, which is a function that computes a value at a point; materials use textures to account for variation in surface properties across a surface.

First, we need to define a material interface. A material has the responsibility of computing the color of reflected light at the given point, accounting for the material properties and illumination from the light sources. It needs information about the local geometry of the surface (at a minimum, the point being shaded P, the surface normal at that position N, and the texture coordinates uv), as well as the incident viewing direction I and information about the lights illuminating that point. Note that we are passing an unsized array of Light interfaces, lights[], into the color() method of the material interface.

```cg
interface Material {
    float3 color(float3 P, float3 N, float3 I, float2 uv, Light lights[]);
};
```

A fragment program that uses a Material to compute shading is trivial; we just need a little bit of glue code that passes the right interpolated parameters into the material and returns the result as the color of the fragment, as shown in Listing 32-4.

Listing 32-4. Fragment Shader That Delegates Almost All of Its Computation to a Material Interface Implementation

```cg
float4 main(float3 Pworld : TEXCOORD0,
            float3 Nworld : TEXCOORD1,
            float3 Iworld : TEXCOORD2,
            float2 uv     : TEXCOORD3,
            uniform Light lights[],
            uniform Material material) : COLOR
{
    Nworld = normalize(Nworld);
    float3 C = material.color(Pworld, Nworld, Iworld, uv, lights);
    return float4(C, 1);
}
```

We might first implement a basic DiffuseMaterial that abstracts out the information about where diffuse color Kd comes from. So that we don't have to have different DiffuseMaterial objects for surfaces with a constant Kd, a Kd defined by a texture map, a Kd defined by a procedural checkerboard function, and so on, we define a Texture interface to hide the details of how such possibly varying color values are computed.

```cg
interface Texture {
    float3 eval(float3 P, float3 N, float2 uv);
};
```

Now the DiffuseMaterial just calls the interface's eval() function and then does the usual diffuse-reflection computation, as shown in Listing 32-5.

By designing the material in this manner, we make it easy to use the DiffuseMaterial with any kind of Texture that we might develop in the future, without needing to modify its source code. As we assemble a large collection of different Material implementations (such as materials that implement complex BRDF models), this orthogonality becomes progressively more important.

Listing 32-5. Diffuse Shading Model, Expressed As the Implementation of the Generic Material Interface. An instance of the Texture interface is used to compute the diffuse reflection coefficient at the shading point.

```cg
struct DiffuseMaterial : Material {
    Texture diffuse;

    float3 color(float3 P, float3 N, float3 I, float2 uv, Light lights[]) {
        float3 Kd = diffuse.eval(P, N, uv);
        float3 C = float3(0, 0, 0);
        for (int i = 0; i < lights.length; ++i) {
            float3 L;
            float3 Cl = lights[i].illuminate(P, L);
            C += Kd * Cl * max(0, dot(N, L));
        }
        return C;
    }
};
```

To connect this DiffuseMaterial to the material parameter of main() in Listing 32-4, we need first to create an instance of the DiffuseMaterial structure and then to connect an instance of an implementation of the Texture interface to its diffuse parameter. We can then connect the DiffuseMaterial to the material parameter of main().

A natural implementation of a Texture to have available is an ImageTexture that returns a color from a sampler2D.

```cg
struct ImageTexture : Texture {
    sampler2D map;
    float3 eval(float3 P, float3 N, float2 uv) {
        return tex2D(map, uv).xyz;
    }
};
```

For convenience, we might also want a texture that always returns a constant color. This lets us always use the general DiffuseMaterial, rather than needing a separate Material implementation for surfaces with a constant color. Because final code is generated only after a ConstantTexture has been bound, its eval() call reduces to reading the value C, so organizing the code this way carries no runtime performance penalty.

```cg
struct ConstantTexture : Texture {
    float3 C;
    float3 eval(float3 P, float3 N, float2 uv) {
        return C;
    }
};
```

Even more interesting, we can define a Texture implementation that itself uses Texture interfaces to do its work. In the following code snippet, a BlendTexture blends between two Textures according to a blend amount specified by a third Texture. Of course, each of the three Textures here could be a completely different type: one might look up a value from a 2D image map, one might always return a constant value, and the third might compute a value procedurally.

```cg
struct BlendTexture : Texture {
    Texture map1, map2, amt;
    float3 eval(float3 P, float3 N, float2 uv) {
        return lerp(map1.eval(P, N, uv), map2.eval(P, N, uv), amt.eval(P, N, uv));
    }
};
```
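A CPU-side C sketch of the same tree structure: each node implements a single eval() entry point, and a BlendTexture node evaluates its three children and blends them the way Cg's lerp() does, (1 - f)a + fb, written here as a + f(b - a). To keep it short, eval() takes only the texture coordinates, a checkerboard stands in for a procedural texture, and all names are hypothetical.

```c
typedef struct { float r, g, b; } rgb;

/* Texture "interface": every node supplies eval(). (u, v) is assumed to lie in [0, 1). */
typedef struct Texture Texture;
struct Texture {
    rgb (*eval)(const Texture *self, float u, float v);
};

/* Implementations embed Texture as their first member. */
typedef struct { Texture base; rgb C; } ConstantTexture;
typedef struct { Texture base; rgb a, b; int squares; } CheckerTexture; /* hypothetical procedural node */
typedef struct { Texture base; const Texture *map1, *map2, *amt; } BlendTexture;

rgb constant_eval(const Texture *self, float u, float v) {
    (void)u; (void)v;
    return ((const ConstantTexture *)self)->C;
}

rgb checker_eval(const Texture *self, float u, float v) {
    const CheckerTexture *t = (const CheckerTexture *)self;
    int iu = (int)(u * t->squares), iv = (int)(v * t->squares);
    return ((iu + iv) % 2 == 0) ? t->a : t->b;
}

/* Evaluate all three children, then lerp per channel: a + f * (b - a). */
rgb blend_eval(const Texture *self, float u, float v) {
    const BlendTexture *t = (const BlendTexture *)self;
    rgb a = t->map1->eval(t->map1, u, v);
    rgb b = t->map2->eval(t->map2, u, v);
    rgb f = t->amt->eval(t->amt, u, v);
    rgb r = { a.r + f.r * (b.r - a.r), a.g + f.g * (b.g - a.g), a.b + f.b * (b.b - a.b) };
    return r;
}
```

Because blend_eval() sees only the Texture entry point, any node, including another BlendTexture, can serve as map1, map2, or amt; that is what lets such trees grow without modifying existing node types.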

Shader interfaces allow us to implement the BlendTexture in a generic fashion, without needing to know ahead of time which particular types of Texture will be used for any particular instance of a BlendTexture. As with initializing the Texture interface parameter in the DiffuseMaterial, we need to connect instances of structures that implement the Texture interface to map1, map2, and amt using the Cg runtime. It's easy to extend the types of textures available in a system by just implementing new Texture types and extending the application to create them and connect them to interface parameters as appropriate. No preexisting types need to be modified.

Having begun writing implementations of interfaces that themselves hold interfaces, we can apply this idea in many other ways. For example, we can implement a Material that applies a fog atmospheric model to any other Material, as we've done in Listing 32-6.

Thus, we don't need to add fog support to every material. We can do it once with FogMaterial: we connect a FogMaterial instance to the material parameter of our program's main() function and set the FogMaterial's base member to our original, unfogged material.

Listing 32-6. Material That Modifies the Value Returned by Another Material, Blending in a Fog Term

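```cg
struct FogMaterial : Material {
    Material base;
    float3 fogAtten, fogColor;

    float3 color(float3 P, float3 N, float3 I, float2 uv, Light lights[]) {
        float3 C = base.color(P, N, I, uv, lights);
        // Per-channel exponential fog factor: 1 at P.z == 0, falling toward 0 as P.z grows.
        float3 fogFactor = exp(-P.z * fogAtten);
        // Keep the surface color up close and fade toward fogColor with distance.
        return lerp(fogColor, C, fogFactor);
    }
};
```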

32.4 Conclusion

Shader interfaces and unsized arrays don't make it possible to write any Cg program that couldn't be written before they were added to the language. However, by making it easier for applications to build shaders out of pieces of code at runtime, they make it substantially easier to implement a number of classic approaches to procedural shading. They provide this functionality with no performance penalty on the GPU; the only cost is some extra work in the runtime and the compiler when the program is compiled.

This chapter has described a few examples of this functionality. An application might demand a more complex light-source interface—for example, including a way to express the ideas of a light source that contributes only to diffuse reflection and doesn't cast specular highlights, as described in Barzel 1997. An application might require a more complex texture interface, with information about the surface's partial derivatives at the point being shaded. Many generalizations could be added to improve the interfaces we have used in the examples here.

For example, the Texture interface could be generalized to abstract out where texture coordinates come from, by adding an interface that describes texture-coordinate generation (spherical mapping, cylindrical mapping, and so on). A texture-coordinate transformation implementation of this interface could then transform the generated coordinates with a matrix, a procedure, or a texture-map lookup.

Many useful Material implementations could also be written, such as a double-sided material that chooses between two other materials depending on which side of the surface is seen, or a blend material that blends between two materials according to a Texture or a Fresnel reflection term. It would also likely be useful to be able to express bump mapping in a generic way with these interfaces.
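A double-sided material, as suggested above, needs only a choice between two child materials. This C sketch assumes I points from the eye toward the surface, so the front side is visible when dot(N, I) < 0, and it shades the back side with the flipped normal. The color() signature is trimmed to (N, I), FlatMaterial is a hypothetical stand-in for any real material, and all names are hypothetical.

```c
typedef struct { float x, y, z; } vec3;
typedef struct { float r, g, b; } rgb;

/* Material "interface", trimmed to the inputs this example needs. */
typedef struct Material Material;
struct Material {
    rgb (*color)(const Material *self, vec3 N, vec3 I);
};

typedef struct { Material base; rgb C; } FlatMaterial; /* hypothetical constant-color material */
typedef struct { Material base; const Material *front, *back; } DoubleSidedMaterial;

static float dot3(vec3 a, vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

rgb flat_color(const Material *self, vec3 N, vec3 I) {
    (void)N; (void)I;
    return ((const FlatMaterial *)self)->C;
}

/* Route the call to the front or back material depending on the visible side. */
rgb double_sided_color(const Material *self, vec3 N, vec3 I) {
    const DoubleSidedMaterial *m = (const DoubleSidedMaterial *)self;
    if (dot3(N, I) < 0.0f)
        return m->front->color(m->front, N, I);
    vec3 flipped = { -N.x, -N.y, -N.z }; /* the back side's outward normal */
    return m->back->color(m->back, flipped, I);
}
```

Like FogMaterial, this material never inspects what its children compute; it only decides which one to call.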

The Cg 1.2 User's Manual has extensive information about the language syntax for shader interfaces and unsized arrays, as well as a description of the new Cg runtime calls that were added for these features.

32.5 References

Barzel, Ronen. 1997. "Lighting Controls for Computer Cinematography." Journal of Graphics Tools 2(1), pp. 1–20. This article describes a complex light-source model with many useful parameters.

Cook, Robert. 1984. "Shade Trees." Computer Graphics (Proceedings of SIGGRAPH 84) 18(3), pp. 223–231. This paper was the first to present the idea of hierarchical description of shading models with a tree of shading nodes.

Copyright

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals.

The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein.

The publisher offers discounts on this book when ordered in quantity for bulk purchases and special sales. For more information, please contact:

U.S. Corporate and Government Sales

(800) 382-3419

corpsales@pearsontechgroup.com

For sales outside of the U.S., please contact:

International Sales

international@pearsoned.com

Visit Addison-Wesley on the Web: www.awprofessional.com

Library of Congress Control Number: 2004100582

GeForce™ and NVIDIA Quadro® are trademarks or registered trademarks of NVIDIA Corporation.

RenderMan® is a registered trademark of Pixar Animation Studios.

"Shadow Map Antialiasing" © 2003 NVIDIA Corporation and Pixar Animation Studios.

"Cinematic Lighting" © 2003 Pixar Animation Studios.

Dawn images © 2002 NVIDIA Corporation. Vulcan images © 2003 NVIDIA Corporation.

Copyright © 2004 by NVIDIA Corporation.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form, or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior consent of the publisher. Printed in the United States of America. Published simultaneously in Canada.

For information on obtaining permission for use of material from this work, please submit a written request to:

Pearson Education, Inc.

Rights and Contracts Department

One Lake Street

Upper Saddle River, NJ 07458

Text printed on recycled and acid-free paper.

5 6 7 8 9 10 QWT 09 08 07

5th Printing September 2007
