Tutorials

March 05, 2026

NVIDIA Blackwell Sets STAC-AI Record for LLM Inference in Finance

March 05, 2026

Tuning Flash Attention for Peak Performance in NVIDIA CUDA Tile

March 05, 2026

Controlling Floating-Point Determinism in NVIDIA CCCL

News

February 10, 2026

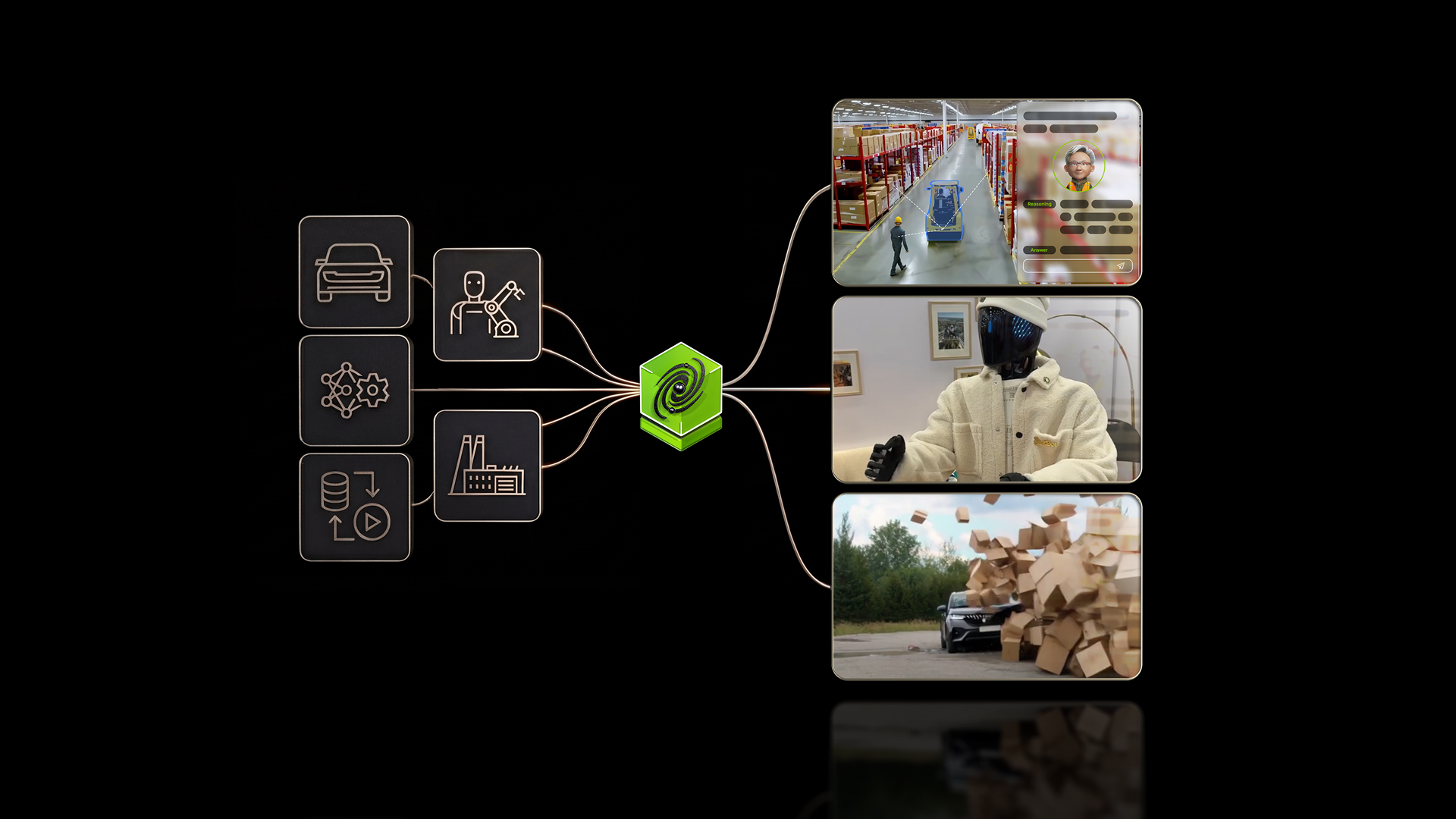

R²D²: Scaling Multimodal Robot Learning with NVIDIA Isaac Lab

February 04, 2026

How Painkiller RTX Uses Generative AI to Modernize Game Assets at Scale

January 27, 2026

Accelerating Diffusion Models with an Open, Plug-and-Play Offering

January 13, 2026

NVIDIA DLSS 4.5 Delivers Super Resolution Upgrades and New Dynamic Multi Frame Generation

Training

The NVIDIA Cosmos Cookoff Is Here

Global Webinar: Get Ready for AI Infrastructure Certification