GPU Gems 3

GPU Gems 3 is now available for free online!

The CD content, including demos and content, is available on the web and for download.

You can also subscribe to our Developer News Feed to get notifications of new material on the site.

Chapter 9. Interactive Cinematic Relighting with Global Illumination

Fabio Pellacini

Dartmouth College

Milo

Ha

an

Cornell University

Kavita Bala

Cornell University

9.1 Introduction

Cinematic lighting design is the process artists use for defining the look of each frame in a feature film by carefully setting all the parameters of the light shaders. Rendering high-quality images interactively for cinematic lighting is becoming a necessity, but it remains a challenge due to the complex data sets used in production rendering.

For faster user feedback, relighting engines were developed to take advantage of the fact that lighting artists are often interested in changing light parameters during lighting design—not the camera, objects, or materials. Relighting engines use a deep frame buffer to cache position, normals, and material parameters of all visible points and then recompute lighting on them interactively. This approach is similar to deferred shading techniques used in games, but it differs because the caches are computed offline. While deep frame buffers have been common in most relighting engines, the use of GPUs to interactively compute the final lighting was first introduced by Gershbein and Hanrahan 2000 and was extended to support a production scene's geometric and shading complexity in the Lpics system (Pellacini et al. 2005). Even though these systems show interactive relighting for cinematic scenes, they remain limited to direct illumination and cannot support indirect effects.

The direct-to-indirect transfer algorithm

(Haan

et al. 2006) extends relighting engines to support cinematic relighting with multiple-bounce indirect

illumination. The relighting is achieved by transferring the direct illumination of a set of gather samples to

the visible samples, which can be computed using a large transfer matrix precomputed offline. Our implementation

achieves high-quality multiple-bounce indirect illumination at 10 to 20 frames per second for scenes with up to

two million polygons, with diffuse and glossy materials and arbitrary direct illumination shaders.

Precomputation takes up to 3 hours. We review the principles behind this framework here, describe the details of

the implementation, and discuss the trade-offs.

9.10 Conclusion

In this chapter we presented a GPU relighting engine for cinematic lighting design, using an engine that extends traditional frame-buffer approaches. This engine supports multiple-bounce indirect illumination for scenes with high geometric complexity, glossy materials, and flexible direct lighting models expressed using procedural shaders.

9.11 References

Gershbein, Reid, and Patrick M. Hanrahan. 2000. "A Fast Relighting Engine for Interactive Cinematic Lighting Design." In Proceedings of SIGGRAPH 2000.

Haan,

Milo

,

Fabio Pellacini, and Kavita Bala. 2006. "Direct-to-Indirect Transfer for Cinematic Relighting." In

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2006) 25(3).

Jensen, Henrik W. 2001. Realistic Image Synthesis Using Photon Mapping. AK Peters.

Ng, Ren, Ravi Ramamoorthi, and Pat Hanrahan. 2003. "All-Frequency Shadows Using Non-linear Wavelet Lighting Approximation." In ACM Transactions on Graphics (Proceedings of SIGGRAPH 2003) 22(3).

Pellacini, Fabio, and Kiril

Vidime.

2004. "Cinematic Lighting." In GPU Gems, edited by Randima Fernando, pp. 167–183. Addison-Wesley.

Pellacini, Fabio, Kiril

Vidime,

Aaron Lefohn, Alex Mohr, Mark Leone, and JohnWarren. 2005. "Lpics: A Hybrid Hardware-Accelerated Relighting

Engine for Computer Cinematography." In ACM Transactions on Graphics (Proceedings of SIGGRAPH 2005)

24(3).

Stollnitz, Eric, Tony DeRose, and David Salesin. 1995. "Wavelets for Computer Graphics: A Primer. Part 1." Available online at http://grail.cs.washington.edu/pub/stoll/wavelet1.pdf.

9.2 An Overview of the Algorithm

We would like to compute the direct and indirect illumination on a set of points visible from the current camera (the pixels in the deep frame buffer), which we call view samples. Computing the direct illumination component is straightforward. The deep frame buffer contains the positions, normals, and material parameters for each view sample. Shadows are computed using shadow mapping.

To compute indirect illumination, we will need a set of points distributed throughout the scene, called gather samples. Note that gather samples are everywhere, not just in the visible part of the scene, because indirect illumination can come from surfaces that are not directly visible.

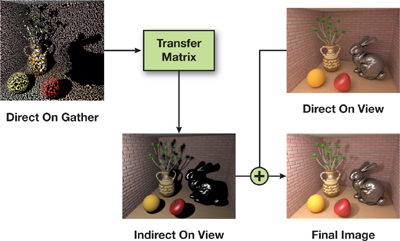

The basic idea behind the algorithm is that indirect illumination on the view samples can be computed by a linear transformation from the direct illumination on the gather samples—thus the name direct-to-indirect transfer. If we arrange the view and gather samples into vectors, this linear transformation can be thought of as a large matrix, which can be precomputed offline and multiplied by the direct illumination every time the lighting changes. Figure 9-1 shows a high-level overview of the overall algorithm, which can be summarized with the following equation:

|

V f = V d + V i = V d + T · g d , |

Figure 9-1 An Overview of Direct-to-Indirect Transfer

where v f is the final solution, v d is the direct illumination on view samples, v i is the indirect illumination on view samples, T is the transfer matrix, and g d is the direct illumination on the gather cloud. Note that the gather samples are diffuse-only, so this algorithm cannot handle caustics.

Unfortunately, the algorithm is not practical in this simplistic form. One reason is that the transfer matrix is very large. If we use a 640x480 image with 65,536 gather samples, it will produce a matrix with 19 billion elements, which is difficult to store and multiply with. Worse, every column of the matrix is essentially an image with one point light and full global illumination, so a naive approach to precomputation would take a long time. Therefore, we introduce several new ideas. First, we split the transfer T into a final gather component F and a multibounce component M so that the indirect illumination will be computed as

|

V i = F · (M + I)· g d . |

The advantage of using this equation is that elements of the final gather matrix F can be defined analytically and stored in high precision; the multibounce matrix M can be precomputed and stored with lower precision because it does not influence image quality as directly as the final gather. Next, we apply the Haar wavelet transform (Stollnitz et al. 1995) to the rows of the matrices and cull unimportant coefficients, achieving a sparse representation. Finally, we map the sparse matrix multiplications to the GPU, achieving a real-time update of indirect illumination as the direct lighting changes.

In the rest of this chapter, we explain in more detail how to implement these ideas.

9.3 Gather Samples

Here we discuss how to compute the gather samples and map them into a square texture. Each gather sample includes a position, a normal, and a diffuse color. The surface area it represents should also be stored, but we need it only for precomputation, so we don't have to send it to the GPU. It is advantageous to choose the number of gather samples as a power of four, because we will need to map them to a square power-of-two texture for wavelet projection. Our implementation uses 65,536 (256x256) samples; we found this to be the lowest number that gives enough quality.

A simple divide-and-conquer approach to creating the gather samples is presented in Listing 9-1. The idea is to

distribute a budget of samples onto a set of mesh triangles by recursively splitting the budget and the set

until there is only one sample or only one triangle left. The simple divide-and-conquer method works well for

scenes such as rooms, but it becomes less efficient for large environments, where choosing gather samples in a

nonuniform way can improve the accuracy of the overall algorithm.

Haan

et al. 2006 presents a more complex importance-sampling variation of gather selection that is robust enough even

for large environments.

Example 9-1. Pseudocode for Divide-and-Conquer Gather Sample Computation

GatherSample[] createGather(Triangle[] triangles, int budget)

{

if (triangles.length == 1)

{

// Pick all samples within one triangle.

// Distribute the area across the samples.

float area = triangles[0].area / budget;

return sampleTriangle(triangles[0], budget, area);

}

else if (budget == 1)

{

// Pick a random triangle and pick one sample on it.

Triangle t = pickRandomAreaWeighted(triangles);

float area = getTotalArea(triangles);

return sampleTriangle(t, 1, area);

}

else

{

// Split triangles (e.g., by some plane).

(ts1, ts2) = split(triangles);

// Split budget based on total areas.

area1 = getTotalArea(ts1);

area2 = getTotalArea(ts2);

int b1 = (int)(budget * area1 / (area1 + area2));

b1 = max(b1, 1);

b1 = min(b1, budget - 1);

int b2 = budget - b1;

// Continue recursively.

return union(createGather(ts1, b1), createGather(ts2, b2));

}

}We have created the set of gather samples, but we still have to "flatten" them—that is, define the mapping to

the power-of-two texture. (In this mapping, sub-block samples that correspond to Haar wavelet basis functions

should be similar to each other; this will become important later.) The solution is another divide-and-conquer

algorithm. We will need a function, splitSamples() that takes a set of gather samples (of a size

divisible by two) and splits it coherently into two subsets of equal size with respect to positions and normals

of the samples. A sample implementation of this function, based on "k-means clustering," can be found in

Haan

et al. 2006. However, a simpler technique should work fine, as long as it takes both positions and normals into

account. Assuming we have such a function, we can implement the mapping easily, as shown in Listing 9-2.

Example 9-2. Pseudocode for Flattening the Gather Samples into a Texture

float dist(GatherSample x, GatherSample y)

{

// Distance should depend on both positions and normals.

// The constant "sceneBoundingBoxDiagonal" should be precomputed.

return 400 * length(x.pos - y.pos) ^ 2 + sceneBoundingBoxDiagonal ^

2 * length(x.normal - y.normal) ^ 2;

}

(Gathersample[], GatherSample[]) splitSamples(GatherSample[] samples)

{

// Split into 2 clusters using the distance function above.

// Use (for example) the k-means algorithm.

return kMeans(samples, 2, dist);

}

SquareTexture flattenGather(GatherSample[] samples)

{

int n = samples.length;

assert isPowerOf4(n);

// Has to be power of 4

SquareTexture result = new SquareTexture();

map(samples, result, 0, 0, n);

return result;

}

void map(GatherSample[] smp, SquareTexture t, int x, int y, int a)

{

// Trivial case

if (a == 1)

{

t(x, y) = smp[0];

return;

}

// Split into 4 subsets.

(s1, s2) = splitSamples(smp);

(s11, s12) = splitSamples(s1);

(s21, s22) = splitSamples(s2);

// Map recursively.

map(s11, x, y, a / 2);

map(s12, x + a / 2, y, a / 2);

map(s21, x, y + a / 2, a / 2);

map(s22, x + a / 2, y + a / 2, a / 2);

}9.4 One-Bounce Indirect Illumination

As given, the matrix T contains multiple bounces of indirect illumination for each of its elements. However, such a matrix is very expensive to precompute directly. For simplicity, we first aim for one-bounce indirect, and later we show how to handle multiple bounces. For one bounce, precomputing the elements of T is relatively simple, as shown in Listing 9-3.

At this point we could try out the algorithm, using a 256x256 image and 1,024 gather samples. If we store each matrix element as three floats, the matrix size will be 256 x 256 x 1024 x 3 x 4 = 768 MB—too heavyweight for real-time performance. However, for higher quality, we might want 65,536 gather samples and a 640x480 view resolution, which requires a transfer matrix of roughly 19 billion elements. Clearly, we need a good compression approach, which we discuss next.

Example 9-3. Pseudocode for Matrix Element Evaluation

RgbColor evalElement(ViewSample v, GatherSample g, Vector3 cameraPos)

{

Vector3 out = (cameraPos - v.position).normalize();

Vector3 in = (g.position - v.position).normalize();

// Evaluate the surface shader (or "brdf").

// The cosine term is assumed to be included.

RgbColor color = v.evalBrdfCosine(in, out);

// The gather sample is treated as a cosine-emitting light.

color *= max(dot(g.normal, -in), 0);

color *= g.area;

// The 1/r^2 distance falloff term

color /= (g.position - v.position).lengthSquared();

// Trace a shadow ray to check visibility.

if (!color.isZero()) color *= visibility(v.position, g.position);

// Clamping is needed to prevent blowup of 1/r^2 term in corners

color.clamp(CLAMP_CONST);

return color;

}9.5 Wavelets for Compression

There are several approaches for compressing the large matrices that arise in lighting. For example, precomputed radiance transfer techniques have introduced spherical harmonics and wavelets to solve this problem, but they traditionally considered only distant illumination, not truly local lighting. Our algorithm extends these techniques to direct-to-indirect transfer matrices. We decided to use wavelets because they work well when sharp lighting details need to be maintained. In our case, these details may be sharp, secondary shadows or glossy reflections. We suggest that readers unfamiliar with wavelets consult Stollnitz et al. 1995.

As in Ng et al. 2003, we project the rows of T into 2D Haar wavelets, which results in many coefficients close to zero. This allows us to discard most of these coefficients, keeping only a few per row (let's say 100 per row), which makes the matrix sparse.

The question is which coefficients to keep. Should we preserve the ones with the largest absolute values? We found that this is not optimal, and we should instead weight the coefficients by the area of support of the corresponding wavelet basis function (the number of its nonzero elements). The wavelet transform code is presented in Listing 9-4.

Once a row of the matrix is computed and transformed, we need to preserve the most important coefficients and store the result as a sparse vector, as in Listing 9-5.

Now we have everything we need to precompute the whole sparse matrix for onebounce indirect illumination. For each view sample, we compute the corresponding row of T, transform it, cull unimportant coefficients, and output the resulting sparse vector to a file (so that we do not have to store it in memory).

Example 9-4. Pseudocode for the 2D Haar Wavelet Transform

SquareTexture waveletTransform(SquareTexture a)

{

int n = a.size(); // Texture is n x n

assert isPowerOf2(n); // Only works for power-of-two textures

SquareTexture b = new SquareTexture(n);

for (int side = n; side >= 2; side /= 2)

for (int i = 0; i < side; i += 2)

for (int j = 0; j < side; j += 2)

{

b(i/2, j/2) = 0.5 * // Box filter

(a(i,j) + a(i+1, j) + a(i, j+1) +

a(i+1, j+1));

b(i/2, j/2 + side/2) = 0.5 * // Horizontal

(a(i,j) - a(i+1, j) + a(i, j+1) -

a(i+1, j+1));

b(i/2 + side/2, j/2) = 0.5 * // Vertical

(a(i,j) + a(i+1, j) - a(i, j+1) -

a(i+1, j+1));

b(i/2 + side/2, j/2 + side/2) = 0.5 * // Diagonal

(a(i,j) - a(i+1, j) -

a(i, j+1) + a(i+1, j+1));

}

}

return b;

}Example 9-5. Pseudocode for Wavelet Coefficient Culling

struct WaveletCoefficient

{

int index,

RgbColor value;

// Assuming 64k gather samples

int getX() { return index % 256; }

int getY() { return index / 256; }

// You might want to use lookup tables instead.

int getLevel() { return ceil(log(1 + max(getX(), getY()))); }

int getArea() { return 4^(9 - getLevel()); }

}

type SparseVector = List<WaveletCoefficient>;

SparseVector keepImportantCoeffs(SquareTexture a)

{

int n = a.size(); // Texture is n x n.

SparseVector sv = new SparseVector();

// Turn the 2D array into a list of coefficients.

for (int i = 0; i < n; i++)

for (int j = 0; j < n; j++)

{

WaveletCoefficient w = new WaveletCoefficient();

w.index = i * 256 + j;

w.value = a(i, j) * w.getArea(); // Weight by area

sv.add(w);

}

sortDescendingByAbsoluteValue(sv);

sv.keepSubList(0, 100); // Keep 100 most important coefficients.

// Undo the area weighting.

for (WaveletCoefficient w: sv) w.value /= w.getArea();

return result;

}9.6 Adding Multiple Bounces

To make multiple bounces manageable, we split the transfer matrix into two components: a multibounce matrix to encode the direct-to-indirect transfer within the gather cloud, and a final gather matrix to encode the transfer from gather samples to view samples. The advantage of this split is that we can compute the multibounce matrix at lower accuracy. This split can be summarized by the following equation:

|

V i = F . (M + I)· g d , |

where F is the final gather matrix, M is the multiple-bounce matrix, and I is the identity matrix. If we denote by g i the (multibounce) indirect illumination on the gather cloud, we can also write this equation as

|

g i |

= |

M · g d |

|

V i |

= |

F · (g d + g i )· |

We can afford to compute the matrix M at lower accuracy because its contribution to the image

is not often directly visible. We do so by using a modified version of the photon-mapping algorithm (Jensen

2001). We treat each gather sample as a small area light with Lambertian emission. We then shoot about one

million photons from the gather samples (about 15 photons from each). A larger number of photons might improve

accuracy at the price of longer precomputation time; however, as noted before, this matrix does not require very

high accuracy. Each photon carries the ID of the gather sample from which it originated. In a second pass, a

k-nearest-neighbor computation is done on each gather sample (k is about 1,000), and these

k photons are treated as a sparse, k-element approximation to the corresponding row of the

matrix M. More details can be found in

Haan

et al. 2006.

The multibounce matrix is already sparse, so we might choose not to compress it at all. However, we can improve performance by applying the same wavelet compression technique we used for the final gather matrix. We can use a smaller number of coefficients per row (we use 40, but again note that this number does not affect image quality much because the final gather will blur out errors). This means that each row of the matrix will have 40 nonzero elements instead of 1,000. The complete relighting algorithm, with multiple bounces and wavelet compression included, can be summarized as

|

V i = F · (M + I) · g d = F w · wt (M w · wt(g d ) + g d ), |

where F w and M w are the wavelet-projected final gather and multiple-bounce matrixes, respectively, and wt is the wavelet transform operator. Listing 9-6 shows the full relighting algorithm (for one light) summarized in pseudocode, and Figure 9-2 shows the algorithm applied.

Figure 9-2 The Complete Relighting Algorithm

Example 9-6. Pseudocode for the Complete Relighting Algorithm

Texture computeLighting(light, viewSamples, gatherSamples)

{

// Direct illumination on view and gather samples

viewDirect = computeDirectIllumination(light, viewSamples);

gatherDirect = computeDirectIllumination(light, gatherSamples);

// Multibounce indirect illumination on gather samples

gatherDirectWavelet = waveletTransform(gatherDirect);

gatherIndirect =

sparseMultiply(multiBounceWaveletMatrix, gatherDirectWavelet);

gatherFull = gatherDirect + gatherIndirect;

// Final bounce from gather to view samples

gatherFullWavelet = waveletTransform(gatherFull);

viewIndirect = sparseMultiply(finalGatherWaveletMatrix, gatherFullWavelet);

// Combine into final image.

viewFull = viewDirect + viewIndirect;

return viewFull;

}9.7 Packing Sparse Matrix Data

There are many ways to store a sparse matrix. The three most common methods are these:

- Row-based—store a list of nonzero elements for each row

- Column-based—store a list of nonzero elements for each column

- An unordered list of triples—row, column, value

None of these methods is particularly efficient for GPU implementation, so the question is whether we can do better.

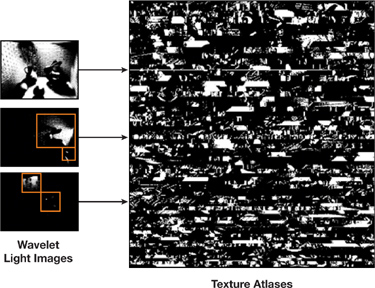

Figure 9-3 shows the columns of the compressed final gather matrix. You can think of these columns as images lit by "wavelet lights." We can see that many of these are zero, and that the nonzero elements tend to cluster together. This scenario suggests that we should use a simple image-based technique to store the data; that is, cut out the nonzero elements from each of these images into one or more rectangles. The easiest approach is to cut out a single rectangle that fits most tightly around the nonzero elements, but we can use more than one rectangle, to improve the storage efficiency.

Figure 9-3 Example Columns from the Transformed One-Bounce Matrix

Finally, we pack all these rectangular blocks into 4,096x4,096 texture atlases. This is much more efficient than using a separate texture for each block, because the number of state changes is much lower. Figure 9-4 illustrates this packing.

Figure 9-4 Packing the Sparse Final Gather Matrix

9.8 A GPU-Based Relighting Engine

While we ran all the precomputation on the CPU, we implemented the interactive relighting engine entirely on the GPU, using the OpenGL API and GLSL running on a Shader Model 3 graphics card (a GeForce 7800, in our case). The complete algorithm for one light is summarized in pseudocode in Listing 9-7, in Section 9.8.2. The algorithm appears complex, but only three operations need to be implemented: direct illumination computation, wavelet transform of a square image, and sparse-matrix multiplication. In the rest of this chapter, we describe our implementation for each operation.

9.8.1 Direct Illumination

We evaluate direct illumination in the same way we did on the Lpics relighting engine (Pellacini et al. 2005). We use deferred shading of deep frame-buffer data that is stored using four texture rectangles encoding positions, normals, and diffuse and specular coefficients, respectively. We use two sets of textures for view and gather samples. To save GPU memory, we encode normals and diffuse components by using 8 bits per channels (bpc), while keeping positions and specular coefficients in floating point at 16 bpc.

We shade the deep frame buffer by drawing a quad that covers the required resolution. A GLSL shader is used to

define the direct illumination model. In our implementation, we used Phong shading for surface reflection;

alternatively, a more complex uber-shader can be used for material representation. We tested our algorithm with

many different light models, including point and spotlights, a projector light, and a light inspired by the

uber-light cinematic light shader (Pellacini and

Vidime

2004). To avoid code duplication, we include all possible light types by using preprocessor macros, and then we

bind the proper shader variant for each light.

We support shadowing by using shadow mapping for sharp shadows. For spot and projector lights, we use a standard shadow map, and we use six maps for point lights. We have also implemented a slower method for area lights that samples their shadows uniformly with multiple shadow maps. We render shadow maps by using frame-buffer objects. For each object in the scene, we store triangles by using vertex-buffer objects directly in video memory. View-frustum culling of objects further speeds up rendering shadow maps. Although this implementation could be improved substantially, we found it sufficient in our case because most of the computation time remains in the multiplication.

To increase image quality, we antialias direct illumination by supersampling it at 2x2 samples per pixel. We compute indirect illumination per pixel and upsample it to the direct illumination's resolution, while trying to avoid light leaking across the edges. We do this by assigning the closest neighbor (based on normal and position) searched in a 3x3 region around each pixel. We finally sum the direct and indirect contributions and downsample using a 2x2 box filter.

9.8.2 Wavelet Transform

We perform the Haar 2D wavelet transform of square images by using a simple recursive algorithm. As shown earlier in Listing 9-4, the transform is essentially a sequence of filtering operations. To implement this on the GPU, we convert the outer for-loop to a multipass approach, and we use a fragment shader to implement the filtering operation in each pass. Listing 9-7 shows the pseudocode for the recursive algorithm, and Listing 9-8 shows the fragment shader code.

Example 9-7. Pseudocode for the CPU-Side Wavelet Transform Loop

SquareTexture waveletTransform(SquareTexture source)

{

int size = source.size();

// 256 in our implementation

SquareTexture result = new SquareTexture(size);

bindBuffer(result);

while (size > = 2)

{

glViewport(0, 0, size, size);

bindProgram(waveletFragmentShader, source);

drawQuadWithTextureCoordinates(0, 0, size, size);

unbindProgram();

size /= 2;

// Size is halved in each pass

copyToTexture(source);

}

unbindBuffer();

return result;

}Example 9-8. The Wavelet Transform Fragment Shader

void main(uniform samplerRect source, uniform float size)

{

vec2 loc = 0;

vec4 weights = 0;

vec2 curLoc = floor(gl_TexCoord[0].xy);

float halfSize = floor(size / 2);

// Determine which coefficient to compute

// and set appropriate weights.

if (curLoc.x > = halfSize)

{

if (curLoc.y > = halfSize)

{

loc = curLoc - vec2(halfSize, halfSize);

weights = vec4(+1, -1, -1, +1);

}

else

{

loc = curLoc - vec2(halfSize, 0);

weights = vec4(+1, -1, +1, -1);

}

}

else

{

if (curLoc.y > = halfSize)

{

loc = curLoc - vec2(0, halfSize);

weights = vec4(+1, +1, -1, -1);

}

else

{

loc = curLoc;

weights = vec4(+1, +1, +1, +1);

}

}

// Look up source pixels.

vec4 s00 = textureRect(source, 2 * loc + vec2(0, 0));

vec4 s01 = textureRect(source, 2 * loc + vec2(0, 1));

vec4 s10 = textureRect(source, 2 * loc + vec2(1, 0));

vec4 s11 = textureRect(source, 2 * loc + vec2(1, 1));

// Compute the weighted average for the coefficient.

vec4 c = 0.5 * (weights.x * s00 + weights.y * s10 + weights.z * s01 +

weights.w * s11);

gl_FragColor = c;

}9.8.3 Sparse Matrix Multiplication

Sparse matrix multiplication is the most expensive component of the relighting algorithm in terms of speed and memory. As shown in the previous section, we store matrix coefficients by using rectangular image blocks packed in 4,096x4,096 texture atlases. To obtain maximum performance, we convert the multiplication to a (large) sequence of blending operations by interpreting the multiplication as column (image) accumulation. In particular, we accumulate the values in each block, multiplied by the intensity of the corresponding wavelet light in the final image. Furthermore, this image-based strategy lets us take advantage of the sparsity in the light vector by skipping all blocks whose light intensity is smaller than a threshold. We call this on-the-fly culling.

For each block, we construct a camera-aligned quad whose vertices are set to the block image coordinates. At each quad vertex, we store the atlas texture coordinates. Texture data is quantized using 8 bpc together with a scale and an offset value stored at the vertices of each quad. We store all quads in a vertex-buffer object to avoid transferring to the GPU during rendering. We evaluate the sparse matrix multiply by drawing all the quads and using shaders to multiply the wavelet light coefficients (constant per quad) by the matrix coefficients (after scaling and offsetting) stored in the texture atlases. Using frame-buffer blending, we accumulate the results by setting the source and destination weights to 1. Listing 9-9 lists the vertex and fragment shader code used for the multiply.

To support on-the-fly culling of wavelet lights, we store image norms in quad vertex data and multiply them by the wavelet light coefficient in the vertex shader. If such a product is smaller than a given epsilon, we cull the quad by sending it off-screen directly in the vertex shader. This allows us to speed up the operation considerably without requiring any CPU intervention.

Example 9-9. Vertex and Fragment Shader Code for Sparse Matrix Multiplication

// Vertex Shader ----------------------------------------

void main(uniform float waveletEpsilon, // For on-the-fly culling

uniform sampler2D waveletLightCoefficients,

uniform vec2 waveletLightCoefficientsSize)

{

gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;

gl_TexCoord[0] = gl_MultiTexCoord0;

// Block atlas texture coord

gl_TexCoord[1] = gl_MultiTexCoord1;

// Light coefficient coord

gl_TexCoord[2] = gl_MultiTexCoord2;

// Scale/offset of block

float boxNorm = gl_MultiTexCoord2.y; // Column norm of the block

vec3 waveletCoefficient =

texture2D(sourceVertex, gl_MultiTexCoord1.xy / sourceVertexSize).xyz;

float sourceNorm = dot(waveletCoefficient, waveletCoefficient);

// Test the culling threshold against the product of

// wavelet light coefficient times column norm

if (boxNorm * sourceNorm < waveletEpsilon)

{

gl_Position = vec4(-10, 0, 0, 1); // Toss out the vertex

}

} // Fragment shader --------------------------------------

void main(uniform samplerRect waveletLightCoefficients,

uniform samplerRect transferCoefficients)

{

// Read wavelet light intensity.

vec3 l = textureRect(waveletLightCoefficients, gl_TexCoord[1].xy).xyz;

// Read matrix coefficient.

vec3 w = textureRect(transferCoefficients, gl_TexCoord[0].xy).xyz;

// Scale and offset the matrix coefficient to support 8 bits compression.

w = gl_TexCoord[2].z + (gl_TexCoord[2].w - gl_TexCoord[2].z) * w;

// Scale the result and return.

gl_FragColor = vec4(l * w, 1);

}9.9 Results

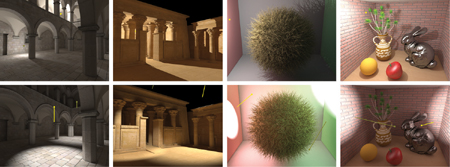

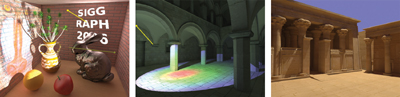

We tested our algorithm on a 3.2 GHz Pentium 4 with 2 GB of RAM and an NVIDIA GeForce 7800 graphics accelerator with 256 MB of RAM. We ran our tests at a video resolution of 640x480, with per-pixel indirect illumination computed from 65,536 gather samples and 2x2 supersampled direct illumination. Images of the scenes we tested, lit by omnilights and spotlights, are presented in Figure 9-5 and show large areas with pure indirect illumination. Figure 9-6 shows the same scenes lit using arbitrary direct lighting shaders, while still providing interactive feedback to light designers with global illumination.

Figure 9-5 Scenes Rendered in Our Systems Using Point and Spot Lighting

Figure 9-6 Arbitrary Lighting Effects Can Be Supported by Our Algorithm

For all tested scenes, ranging from roughly 60,000 to 2.1 million triangles, we were able to compute accurate indirect illumination at interactive rates of about 7 to 15 frames per second without on-the-fly wavelet culling, and about 9 to 25 frames per second with culling, with almost no perceptible difference. Because the algorithm runs entirely on the GPU, we found that the performance depends mostly on the GPU. Relighting times are dominated by the transfer computation and occasionally by the shadow-map rendering. The bottleneck of the CPU precomputation is the final gather matrix, which took at most 2.6 hours; all other precomputation phases combined should be well under 20 minutes. GPU memory requirements are dominated by the transfer matrices, which took about 100 MB (using 100 coefficients for final gather and 40 for the multibounce matrix) after quantization.

- Contributors

- Foreword

- Part I: Geometry

-

- Chapter 1. Generating Complex Procedural Terrains Using the GPU

- Chapter 2. Animated Crowd Rendering

- Chapter 3. DirectX 10 Blend Shapes: Breaking the Limits

- Chapter 4. Next-Generation SpeedTree Rendering

- Chapter 5. Generic Adaptive Mesh Refinement

- Chapter 6. GPU-Generated Procedural Wind Animations for Trees

- Chapter 7. Point-Based Visualization of Metaballs on a GPU

- Part II: Light and Shadows

-

- Chapter 8. Summed-Area Variance Shadow Maps

- Chapter 9. Interactive Cinematic Relighting with Global Illumination

- Chapter 10. Parallel-Split Shadow Maps on Programmable GPUs

- Chapter 11. Efficient and Robust Shadow Volumes Using Hierarchical Occlusion Culling and Geometry Shaders

- Chapter 12. High-Quality Ambient Occlusion

- Chapter 13. Volumetric Light Scattering as a Post-Process

- Part III: Rendering

-

- Chapter 14. Advanced Techniques for Realistic Real-Time Skin Rendering

- Chapter 15. Playable Universal Capture

- Chapter 16. Vegetation Procedural Animation and Shading in Crysis

- Chapter 17. Robust Multiple Specular Reflections and Refractions

- Chapter 18. Relaxed Cone Stepping for Relief Mapping

- Chapter 19. Deferred Shading in Tabula Rasa

- Chapter 20. GPU-Based Importance Sampling

- Part IV: Image Effects

-

- Chapter 21. True Impostors

- Chapter 22. Baking Normal Maps on the GPU

- Chapter 23. High-Speed, Off-Screen Particles

- Chapter 24. The Importance of Being Linear

- Chapter 25. Rendering Vector Art on the GPU

- Chapter 26. Object Detection by Color: Using the GPU for Real-Time Video Image Processing

- Chapter 27. Motion Blur as a Post-Processing Effect

- Chapter 28. Practical Post-Process Depth of Field

- Part V: Physics Simulation

-

- Chapter 29. Real-Time Rigid Body Simulation on GPUs

- Chapter 30. Real-Time Simulation and Rendering of 3D Fluids

- Chapter 31. Fast N-Body Simulation with CUDA

- Chapter 32. Broad-Phase Collision Detection with CUDA

- Chapter 33. LCP Algorithms for Collision Detection Using CUDA

- Chapter 34. Signed Distance Fields Using Single-Pass GPU Scan Conversion of Tetrahedra

- Chapter 35. Fast Virus Signature Matching on the GPU

- Part VI: GPU Computing

-

- Chapter 36. AES Encryption and Decryption on the GPU

- Chapter 37. Efficient Random Number Generation and Application Using CUDA

- Chapter 38. Imaging Earth's Subsurface Using CUDA

- Chapter 39. Parallel Prefix Sum (Scan) with CUDA

- Chapter 40. Incremental Computation of the Gaussian

- Chapter 41. Using the Geometry Shader for Compact and Variable-Length GPU Feedback

- Preface