At NVIDIA, we are driving change in data science, machine learning, and artificial intelligence. Some of the key trends that drive us are as follows:

- The rise of Python as the most-used language for data analytics

- Increased demand for highly usable distributed computing

- The need for more computational power

- Open-source software becoming mainstream in industry

At the intersection of these trends is Dask, an open-source library designed to provide parallelism to the existing Python stack. In this post, we talk about Dask, what it is, how we use it at NVIDIA, and why it has so much potential at large enterprises. We conclude by highlighting the growing need for enterprise Dask support and the companies, like Coiled, Anaconda, and Quansight, that are addressing those needs for small- and large-scale customers.

Dask: Answering the historical challenges of scaling Python

Python is slow. Originally developed by Guido van Rossum as a holiday hobby project in 1989, Python was not intended to handle the terabyte-scale production workloads that it does today in some of the most compute-heavy organizations. What happened?

Python is highly usable, which transformed it into a “glue language.” It connects high-performance languages and APIs like Fortran and CUDA to lightweight, user-friendly APIs. By mixing accessibility with performance, it has seen significant adoption by scientists, subject matter experts, and other data practitioners who may not have a traditional computer science background. Successful projects like NumPy, scikit-learn, and especially pandas changed how we think about accessibility in data science and machine learning.

Developed before big data use cases became so prevalent, these projects didn’t have a strong solution for parallelism. Python was the choice for single-core computing, but users were forced to find other solutions for multi-core or multi-machine parallelism. This broke the user experience and caused frustration.

Many great developers attempted to address this frustration. Libraries like mrjob for Hadoop and PySpark for Apache Spark allowed you to parallelize computation with Python, but the user experience was not the same as with favorites like NumPy, pandas, and scikit-learn. This created a pattern where work had to be done twice: develop your ideas in pandas and scikit-learn and then refactor in PySpark and MLlib to work at scale. Often, this work is done by two separate teams, slowing deployment and creating overhead as the different teams communicate to troubleshoot errors.

Enter Dask. This growing need to scale workloads in Python has led to the natural growth of Dask over the last five years. Also popular with web developers, Python has a robust networking stack that Dask leverages to build a flexible, performant, distributed computing system capable of scaling a wide variety of workloads. Dask’s flexibility helps it to stand out against other big data solutions like Hadoop or Apache Spark. Its support of native code makes it particularly easy to work with for Python users and C/C++/CUDA developers.
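To make the idea of scaling a workload concrete, here is a minimal conceptual sketch of the partition, compute, and combine pattern that parallel dataframe and array libraries rely on. It uses only the Python standard library and is not Dask's API or implementation. The helper names `partition`, `partial_stats`, and `parallel_mean` are hypothetical, chosen for illustration, and threads stand in for the processes or machines that a real distributed scheduler would use.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(values, n_partitions):
    """Split a list into n_partitions contiguous chunks of near-equal size."""
    size, extra = divmod(len(values), n_partitions)
    chunks, start = [], 0
    for i in range(n_partitions):
        # The first `extra` chunks each take one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def partial_stats(chunk):
    """Per-partition work: return (sum, count) so partial results combine exactly."""
    return sum(chunk), len(chunk)


def parallel_mean(values, n_partitions=4):
    """Compute the mean of a non-empty list by combining per-partition results."""
    chunks = partition(values, n_partitions)
    # Threads are an illustrative stand-in for workers on other cores or machines.
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(partial_stats, chunks))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


values = list(range(1, 101))
mean = parallel_mean(values)
```

The key design point is that each partition returns a small summary (a sum and a count) rather than a finished answer, so the combine step stays cheap and exact no matter how many partitions there are. The caller still works with one function call, which is the kind of user experience that lets code written for a single core carry over to larger workloads.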

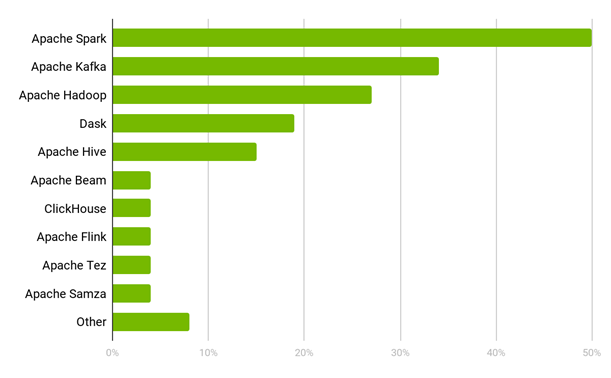

Dask has been quickly adopted by the Python developer community. Today, Dask is managed by a community of developers that spans dozens of institutions and PyData projects such as pandas, Jupyter, and scikit-learn. Dask’s integration with these popular tools has driven rapid growth: about 20% of developers who need Pythonic big data tools now use it.

Dask and NVIDIA: Driving accessible accelerated analytics

NVIDIA understands the power that GPUs offer to data analytics. That’s why we have tried to help you get the most out of your data. After seeing the power and accessibility of Dask, we started using it on the RAPIDS project with the goal of horizontally scaling accelerated data analytics workloads to multiple GPUs and GPU-based systems.

Due to the accessible Python interface and versatility beyond data science, Dask grew to other projects throughout NVIDIA, becoming a natural choice in new applications ranging from parsing JSON to managing end-to-end deep learning workflows. Here are a few of our many ongoing projects and collaborations using Dask.

RAPIDS

RAPIDS is a suite of open-source software libraries and APIs for executing data science pipelines entirely on GPUs, often reducing training times from days to minutes. Built on NVIDIA CUDA-X AI, RAPIDS unites years of development in graphics, machine learning, high-performance computing (HPC), and more.

While CUDA-X is incredibly powerful, most data analytics practitioners prefer experimenting, building, and training models with a Python toolset, like NumPy, pandas, and scikit-learn. Dask is a critical component of the RAPIDS ecosystem, making it even easier for you to take advantage of accelerated computing through a comfortable Python-based user experience.

NVTabular

NVTabular is a feature engineering and preprocessing library designed to manipulate terabytes of tabular datasets quickly and easily. Built on the Dask-cuDF library, it provides a high-level abstraction layer, simplifying the creation of high-performance ETL operations at massive scale. NVTabular can scale to thousands of GPUs by using RAPIDS and Dask, eliminating the bottleneck of waiting for ETL processes to finish.

BlazingSQL

BlazingSQL is an incredibly fast, distributed SQL engine on GPUs also built upon Dask-cuDF. It enables data scientists to easily connect large-scale data lakes to GPU-accelerated analytics. With a few lines of code, you can directly query raw file formats such as CSV and Apache Parquet inside data lakes like HDFS and Amazon S3 and then directly pipe the results into GPU memory.

BlazingDB, Inc., the company behind BlazingSQL, is a core contributor to RAPIDS and collaborates heavily with NVIDIA.

cuStreamz

At NVIDIA, we’re using Dask internally to fuel parts of our products and business operations. Using Streamz, Dask, and RAPIDS, we’ve built cuStreamz, an accelerated streaming data platform using 100% native Python. With cuStreamz, we’re able to conduct real-time analytics for some of our most demanding applications like GeForce NOW, NVIDIA GPU Cloud (NGC), and NVIDIA Drive SIM. While it’s a young project, we’ve already seen impressive reductions to total cost of ownership over other streaming data platforms using the Dask-enabled cuStreamz.

Dask and RAPIDS: Enabling innovation in the enterprise

Many companies are adopting both Dask and RAPIDS to scale some of their most important operations. Some of NVIDIA’s biggest partners, leaders in their industries, are using Dask and RAPIDS to power their data analytics. Here are some recent exciting examples.

Capital One

On a mission to “change banking for good,” Capital One has invested heavily in large-scale data analytics to provide better products and services to its customers and improve operational efficiencies across its enterprise. With a large community of Python-friendly data scientists, Capital One uses Dask and RAPIDS to scale and accelerate traditionally hard-to-parallelize Python workloads and significantly lessen the learning curve for big data analytics.

National Energy Research Scientific Computing Center

Devoted to providing computational resources and expertise for basic scientific research, NERSC is a world leader in accelerating scientific discovery through computation. Part of that mission is making supercomputing accessible to researchers to fuel scientific exploration. With Dask and RAPIDS, the incredible power of their latest supercomputer “Perlmutter” becomes easily accessible to researchers and scientists with limited background in supercomputing. By using Dask to create a familiar interface, they put the power of supercomputing into the hands of scientists driving potential breakthroughs across fields.

Oak Ridge National Laboratory

Amid a global pandemic, Oak Ridge National Laboratory (ORNL) is pushing the boundaries of innovation by building a “virtual lab” for drug discovery in the fight against COVID-19. Using Dask, RAPIDS, BlazingSQL, and NVIDIA GPUs, researchers can use the power of the Summit supercomputer from their laptops to screen small-molecule compounds for their ability to bind with the SARS-CoV-2 main protease. With such a flexible toolset, engineers were able to get this custom workflow up and running in less than two weeks and see subsecond query results.

Walmart Labs

A giant in the retail space, Walmart uses massive datasets to better serve its customers, predict product needs, and improve internal efficiencies. Relying on large-scale data analytics to accomplish these goals, Walmart Labs has turned to Dask, XGBoost, and RAPIDS to reduce training times by 100X, enabling fast model iteration and accuracy improvements to further its business. With Dask, Walmart Labs opens up the power of NVIDIA GPUs to data scientists to solve their hardest problems.

Dask in the enterprise: A growing market

While it’s often easy for practitioners across an enterprise to experiment with open-source software, it’s much more challenging to use that software in production. With budding, promising open-source technologies, enterprises may roll their own deployments to address real-world business problems. As that open-source software matures and gains traction, companies pop up and begin filling the need for enterprise-grade deployments, integration, and support.

With its growing success in large institutions, we’ve begun to see more companies fill the need for Dask products and services in the enterprise. Here are some companies that are addressing enterprise Dask needs, signaling the beginnings of a maturing market.

Anaconda

Like a large portion of the SciPy ecosystem, Dask began at Anaconda Inc., where it gained traction and matured into a larger open-source community. As the community grew and enterprises began adopting Dask, Anaconda began providing consulting services, training, and open-source support to ease enterprise usage. A major proponent of open-source software, Anaconda also employs many Dask maintainers, providing a deep understanding of the software to enterprise customers.

Coiled

Founded by Dask maintainers, including Dask project lead and former NVIDIA employee Matthew Rocklin, Coiled provides a managed solution around Dask that makes it easy to use in both cloud and enterprise environments, as well as enterprise support to help optimize Python analytics within institutions. Recently released for general availability, their publicly hosted managed deployment product provides a robust yet intuitive way to use both Dask and RAPIDS today.

Quansight

Dedicated to helping enterprises create value out of their data, Quansight provides a variety of services to propel data analytics across industries. Like Anaconda, Quansight provides consulting services and training to enterprises using Dask. Ingrained in the PyData and NumFOCUS ecosystems, Quansight also provides support for enterprises that need enhancements or bug fixes in open-source software.

Conclusion

Dask is a powerful and accessible open-source project that allows data analytics practitioners to easily scale Python workloads. Due to its promise and ease of use, Dask has seen substantial traction among data scientists and is beginning to show amazing results in enterprise settings. At NVIDIA, we believe in Dask’s transformative power enough that we made it a major component of the RAPIDS suite, allowing the power of accelerated computing to be accessed through a Python interface.

As Dask continues to mature, we’re beginning to see more companies addressing the need for managed Dask deployments and support for enterprises. This maturation marks major progress in the data analytics industry, driving accessible high-performance analytics to a broader audience and making game-changing, data-driven innovation inevitable.

To learn more about the growing innovations around Dask, keep your eyes on dask.org, follow the Dask blog, and join us at GTC 2020 to hear more about deploying Dask and RAPIDS at scale.