AI will soon massively empower architects in their day-to-day practice. This potential is around the corner and my work provides a proof of concept. The framework used in my work offers a springboard for discussion, inviting architects to start engaging with AI, and data scientists to consider Architecture as a field of investigation. In this post, I summarize a part of my thesis, submitted at Harvard in May 2019, in which Generative Adversarial Networks (GANs) are used to design floor plans and entire buildings.

I believe a statistical approach to design conception will shape AI’s potential for Architecture. This approach is less deterministic and more holistic in character. Relying on machines to extract significant qualities and mimic them throughout the design process, rather than using them to optimize a set of variables, represents a paradigm shift.

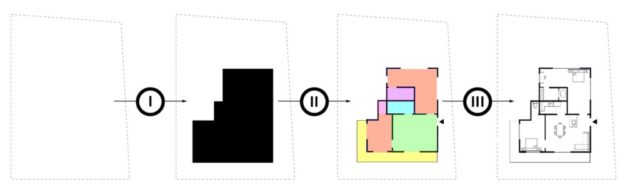

Let’s unpack floor plan design into 3 distinct steps:

- (I) building footprint massing

- (II) program repartition

- (III) furniture layout

Each step corresponds to a Pix2Pix GAN-model trained to perform one of the 3 tasks above. By nesting these models one after the other, I create an entire apartment building “generation stack” while allowing for user input at each step. Additionally, by tackling multi-apartment processing, this project scales beyond the simplicity of single-family houses.

Beyond the mere development of a generation pipeline, this attempt aims to demonstrate the potential of GANs for any design process. By nesting GAN models and allowing user input between them, I try to achieve a back and forth between humans and machines, between disciplinary intuition and technical innovation.

Representation, Learning, and Framework

Pix2Pix uses a conditional generative adversarial network (cGAN) to learn a mapping from an input image to an output image. The network consists of two main pieces, the Generator and the Discriminator. The Generator transforms the input image into an output image; the Discriminator tries to guess whether an image was produced by the Generator or is the original image. The two parts of the network challenge each other, resulting in higher-quality outputs that are difficult to differentiate from the original images.
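To make this adversarial setup concrete, here is a minimal sketch of the two losses in plain Python. It assumes the Discriminator outputs a probability between 0 and 1, and it combines the adversarial term with a weighted L1 term as in the Pix2Pix objective. The function names are illustrative, and the weight of 100 should be read as a tunable assumption rather than the setting used in this project.

```python
import math

EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the Discriminator.

    d_real: probabilities assigned to (input, original image) pairs.
    d_fake: probabilities assigned to (input, generated image) pairs.
    """
    total = 0.0
    for real, fake in zip(d_real, d_fake):
        total += -(math.log(real + EPS) + math.log(1.0 - fake + EPS))
    return total / len(d_real)


def generator_loss(d_fake, generated, target, l1_weight=100.0):
    """Adversarial term (fool the Discriminator) plus a weighted L1 term."""
    adversarial = -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)
    l1 = sum(abs(g - t) for g, t in zip(generated, target)) / len(target)
    return adversarial + l1_weight * l1
```

The Discriminator's loss falls when it scores original pairs near 1 and generated pairs near 0, while the Generator's loss falls when its outputs are scored near 1 and stay close to the target pixels.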

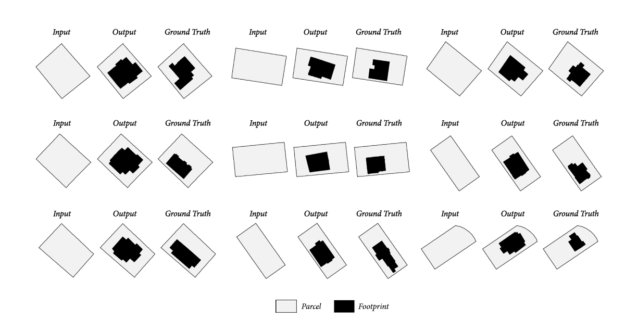

This ability to learn image mappings lets our models learn topological features and space organization directly from floor plan images. We control the type of information that the model learns by formatting images. As an example, just showing our model the shape of a parcel and its associated building footprint yields a model able to create typical building footprints given a parcel’s shape.

I used Christopher Hesse’s implementation of pix2pix. His code uses TensorFlow, as opposed to the original version, which is based on Torch, and has proven to be easy to deploy. I preferred TensorFlow because its large user base and knowledge base gave me confidence that I could easily find answers if I ran into an issue.

I ran fast iterations and tests using an NVIDIA Tesla V100 GPU for the training process on Google Cloud Platform (GCP). The NVIDIA GPU Cloud Image for Deep Learning offered on GCP allowed a seamless deployment by installing all the necessary libraries for Pix2Pix (TensorFlow, Keras, etc.) and the packages needed to run this code on the machine’s GPU (CUDA and cuDNN). I used TensorFlow 1.4.1, but a newer version of pix2pix with TensorFlow 2.0 is available [here].

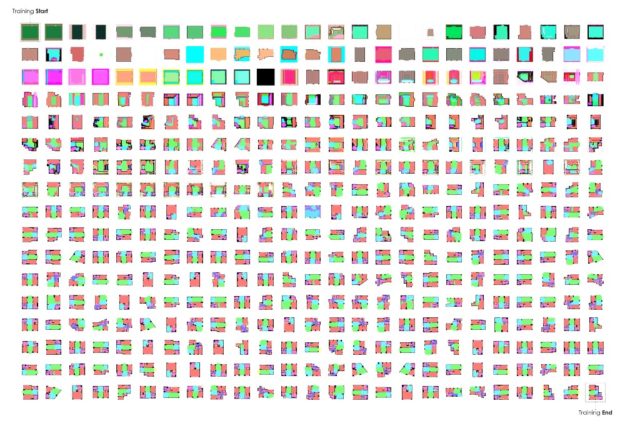

Figure 2 displays the results of a typical training. This sequence first took over a day and a half to train. It eventually took under 2 hours on a Tesla V100 in GCP, allowing for more tests and iterations than by running the same training locally.

The sequence in Figure 2 shows how one of my GAN models progressively learns to lay out rooms and to position doors and windows, also called fenestration, for a given apartment unit.

Although the initial attempts proved imprecise, the machine builds some form of intuition after 250 iterations.

Precedents

The early work of Isola et al. in November 2016, enabling image-to-image translation with their model Pix2Pix, has paved the way for my research.

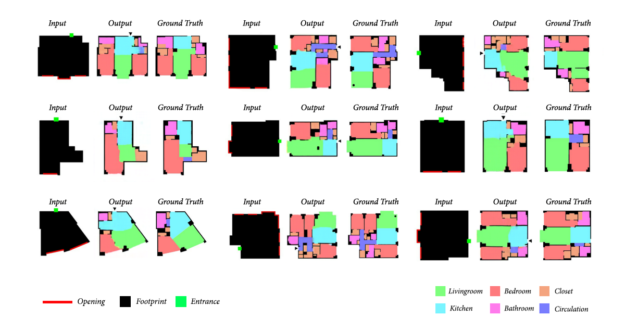

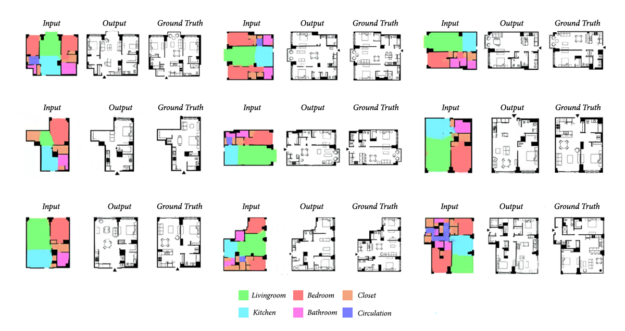

Zheng and Huang in 2018 [1] first studied floor plan analysis using GANs. The authors proposed to use GANs for floor plan recognition and generation using Pix2PixHD. Floor plan images processed by their GAN architecture get translated into programmatic patches of colors. Inversely, patches of colors in their work turn into drawn rooms. If the user specifies the position of openings and rooms, the elements laid out by the network become furniture. Nathan Peters’ thesis [2] at the Harvard Graduate School of Design in the same year tackled the possibility of laying out rooms across a single-family home footprint. Peters’ work turns an empty footprint into programmatic patches of color without specified fenestration.

Regarding GANs as design assistants, Nono Martinez’ thesis [3] at the Harvard GSD in 2017 investigated the idea of a loop between the machine and the designer to refine the very notion of “design process”.

Stack and Models

I build upon the previously described precedents to create a 3-step generation stack. As described in Figure 3, each model of the stack handles a specific task of the workflow: (I) footprint massing, (II) program repartition, (III) furniture layout.

An architect is able to modify or fine-tune the model’s output between steps, thereby achieving the expected machine-human interaction.
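The idea of a stack with a human in the loop can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_stack`, the stand-in models, and the `move_window` edit are hypothetical names, and lists of strings stand in for the images the real models would exchange.

```python
from typing import Callable, List, Optional, Sequence

Image = List[str]  # stand-in type; real models would pass pixel arrays
Step = Callable[[Image], Image]


def run_stack(start: Image, models: Sequence[Step],
              edits: Sequence[Optional[Step]]) -> List[Image]:
    """Run each model in order, applying an optional user edit after each one.

    Returns the image produced at every step so the designer can inspect them.
    """
    if len(models) != len(edits):
        raise ValueError("provide one edit slot (or None) per model")
    history = []
    current = start
    for model, edit in zip(models, edits):
        current = model(current)
        if edit is not None:
            current = edit(current)  # human adjustment before the next model
        history.append(current)
    return history


# Stand-in models that only record which step ran.
footprint = lambda img: img + ["footprint"]
program = lambda img: img + ["program"]
furnish = lambda img: img + ["furniture"]
move_window = lambda img: img + ["user moved a window"]

steps = run_stack(["parcel"], [footprint, program, furnish],
                  [None, move_window, None])
```

Keeping every intermediate result in `steps` mirrors the intent of the stack: the designer can step back to any stage, adjust it, and rerun the remaining models.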

Model I: Footprint

Building footprints significantly define the internal organization of floor plans. Their shape is heavily conditioned by their surroundings and, more specifically, the shape of their parcel. Since the design of a housing building’s footprint can be inferred from the shape of the piece of land it stands on, I have trained a model to generate typical footprints, using GIS (Geographic Information System) data from the city of Boston. During training, we feed the network pairs of images in a format suitable for Pix2Pix, displaying the raw parcel (left image) and the same parcel with a given building drawn over it (right image). We show some typical results in Figure 4.
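The left/right pairing described above can be sketched as a simple side-by-side concatenation. This is a toy illustration, not the preprocessing used for the thesis: images are nested lists, and the pixel codes (0, 1, 2) and the function name `make_training_pair` are assumptions made for the example.

```python
def make_training_pair(parcel, footprint):
    """Place two equally sized images side by side (left: input, right: target).

    Images are lists of pixel rows; each row is a list of pixel values.
    """
    if len(parcel) != len(footprint):
        raise ValueError("images must have the same height")
    pair = []
    for left_row, right_row in zip(parcel, footprint):
        if len(left_row) != len(right_row):
            raise ValueError("images must have the same width")
        pair.append(left_row + right_row)
    return pair


# Toy 3x3 example: 0 = background, 1 = parcel, 2 = building (hypothetical codes).
parcel = [[0, 1, 1], [1, 1, 1], [1, 1, 0]]
footprint = [[0, 1, 1], [1, 2, 2], [1, 2, 0]]
pair = make_training_pair(parcel, footprint)
```

Each row of `pair` is twice as wide as the originals, with the raw parcel on the left and the parcel with its building on the right.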

Model II: Program

Model II handles repartition and fenestration. The network takes as input the footprint of a given housing unit produced by Model I, the position of its entrance door (green square), and the position of the main windows specified by the user. The plans used to train the network derive from a database of 800+ plans of apartments, properly annotated and given in pairs to the model during training. In the output, the program encodes rooms using colors while representing the wall structure and its fenestration using a black patch. Some typical results are displayed in Figure 5.
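Because rooms are encoded as colors, reading a generated plan back means mapping each pixel to a program. A minimal sketch of that decoding follows. The palette is hypothetical (the colors used for training are not listed here, apart from black for walls and fenestration), and snapping to the nearest reference color is an assumption about how one might absorb the slight color noise of a generated image.

```python
# Hypothetical palette: the colors actually used for training are not given here.
PROGRAM_COLORS = {
    "wall": (0, 0, 0),  # walls and fenestration are drawn in black
    "living room": (255, 200, 0),
    "bedroom": (255, 0, 0),
    "bathroom": (0, 0, 255),
    "kitchen": (255, 0, 255),
}


def nearest_program(pixel):
    """Return the program whose reference color is closest to an RGB pixel."""
    def squared_distance(color):
        return sum((a - b) ** 2 for a, b in zip(pixel, color))
    return min(PROGRAM_COLORS,
               key=lambda name: squared_distance(PROGRAM_COLORS[name]))


def decode(image):
    """Convert an RGB image (rows of (r, g, b) tuples) into a grid of labels."""
    return [[nearest_program(pixel) for pixel in row] for row in image]
```

For instance, a slightly off-red pixel such as `(250, 10, 5)` is read as a bedroom under this palette.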

Model III: Furnishing

Finally, Model III tackles the challenge of furniture layout using the output of Model II. This model trains on pairs of images, mapping room programs in color to adequate furniture layouts. The program retains wall structure and fenestration during image translation while filling the rooms with relevant furniture, specified by each room’s program. Figure 6 displays some typical results.

UI and Experience

I provide the user with a simple interface for each step throughout our pipeline. On the left, they can input a set of constraints and boundaries to generate the resulting plan on the right. The designer can then iteratively modify the input on the left to refine the result on the right. The animations in Figure 7 showcase this type of interface & process set up for Model II.

You can also try out this interface yourself. (Performance depends on screen resolution and browser version; Chrome is advised.)

Model Chaining and Apartment-Building Generation

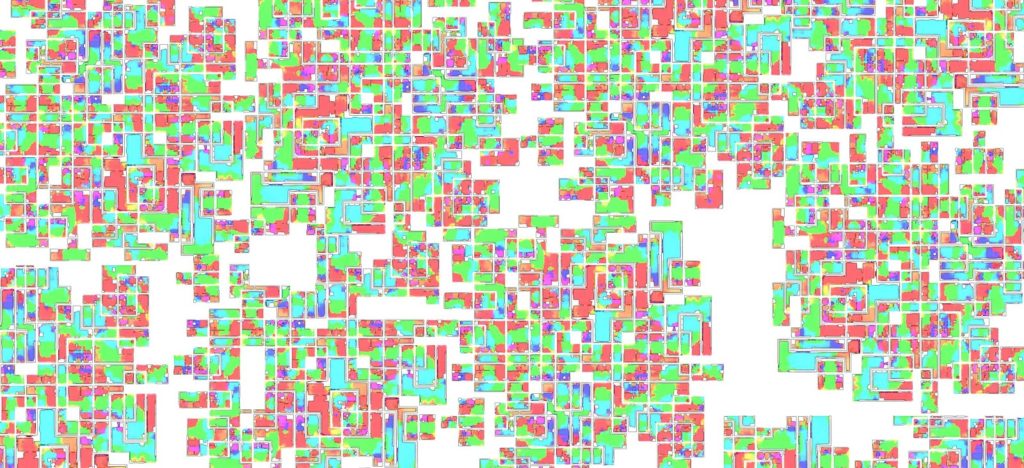

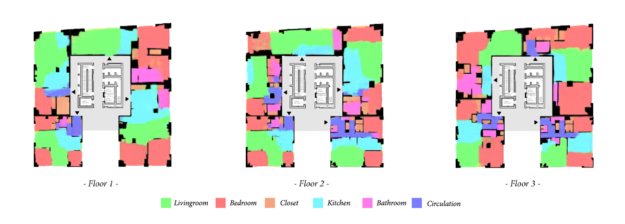

In this part, I scale the use of GANs to the design of entire apartment buildings. The project uses an algorithm to chain Models I, II and III, one after the other, processing multiple units as single images at each step. Figure 8 shows this pipeline.

The challenge of drawing floor plates hosting multiple units marks the difference between single-family houses and apartment buildings. Strategically, the ability to control the position of windows and units’ entrances is key to enabling unit placement while ensuring each apartment’s quality. Since Model II takes the position of doors and windows as input, the generation stack described above can scale to the generation of entire floor plates.

The user is invited to specify the unit split between Model I and Model II, in other words, to specify how each floor plate divides into apartments and to position each unit’s entrance door and windows, as well as potential vertical circulations (staircases, cores, etc.). The proposed algorithm then feeds each resulting unit to Model II (results shown in Figure 9), and then to Model III (results in Figure 10), to finally reassemble each floor plate of the initial building. The algorithm finally outputs all floor plates of the generated building as individual images.
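The chaining logic described above can be outlined as follows. This is a hedged sketch, not the algorithm from the thesis: every callable (`split_units`, `model_ii`, `model_iii`, `reassemble`) is a hypothetical stand-in, and strings play the role of images so the flow is easy to follow.

```python
def generate_building(floor_plates, split_units, model_ii, model_iii, reassemble):
    """Chain Models II and III over every unit of every floor plate.

    floor_plates: footprints from Model I, one per floor.
    split_units:  user-driven step that cuts a floor plate into unit inputs
                  (entrance door and windows already positioned).
    reassemble:   puts the processed units back into one floor plate image.
    """
    outputs = []
    for plate in floor_plates:
        units = split_units(plate)
        furnished = [model_iii(model_ii(unit)) for unit in units]
        outputs.append(reassemble(plate, furnished))
    return outputs


# Stand-ins: strings play the role of images.
split_units = lambda plate: [f"{plate}/unit{i}" for i in range(2)]
model_ii = lambda unit: unit + "+program"
model_iii = lambda unit: unit + "+furniture"
reassemble = lambda plate, units: {"plate": plate, "units": units}

building = generate_building(["floor0", "floor1"], split_units,
                             model_ii, model_iii, reassemble)
```

The result holds one entry per floor plate, matching the description of the algorithm returning each floor of the generated building as an individual image.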

Going Further

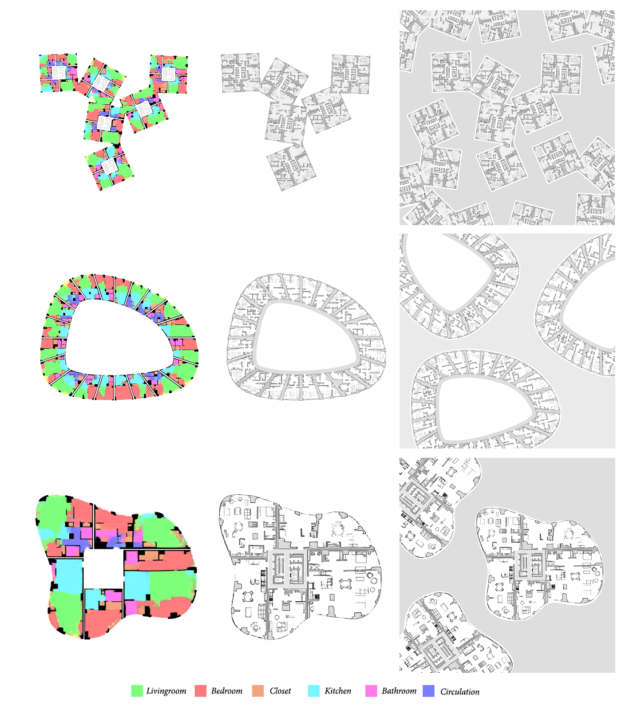

If generating standard apartments can be achieved using this technique, pushing the boundaries of my models is the natural next step. GANs offer remarkable flexibility to solve seemingly highly constrained problems. In the case of floor plan layout, partitioning and furnishing the space by hand can be a challenging process as the footprint changes in dimension and shape. My models prove to be quite “smart” in their ability to adapt to changing constraints, as evidenced in Figure 11.

The ability to control the position of each unit’s entrance door and windows, coupled with the flexibility of my models, allows us to tackle space planning at a larger scale, beyond the logic of a single unit. In Figure 12, I scale the pipeline to the generation of entire buildings while investigating my models’ reaction to odd apartment shapes and contextual constraints.

Limitations and Future Improvements

If the above results lay down the premise of GANs’ potential for Architecture, some clear limitations will drive further investigations in the future.

First, as apartment units stack up in a multi-story building, we cannot guarantee for now the continuity of load-bearing walls from one floor to the next. Since the internal structure is laid out differently for each unit, load-bearing walls might not be aligned. For now, we consider the façade to be load-bearing. However, the ability to specify the position of load-bearing elements in the input of Model II could potentially help address this issue.
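One way to at least quantify this misalignment, which is not part of the pipeline described here, is to compare wall masks between two consecutive floors. The sketch below assumes each floor has already been reduced to a grid of 0/1 values (1 meaning wall); the function name and the metric are illustrative choices.

```python
def wall_continuity(lower_walls, upper_walls):
    """Fraction of wall cells on the upper floor that sit directly above a wall cell.

    Both arguments are equally sized grids of 0/1 values (1 = wall).
    Returns 1.0 when the upper floor has no walls.
    """
    supported = 0
    total = 0
    for lower_row, upper_row in zip(lower_walls, upper_walls):
        for below, above in zip(lower_row, upper_row):
            if above:
                total += 1
                supported += 1 if below else 0
    return supported / total if total else 1.0
```

A score of 1.0 means every upper wall cell rests on a wall below; lower scores flag floors whose internal layout would need structural attention.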

Additionally, increasing the size of the output layer to obtain larger images with better definition is a natural next step. We want to deploy the Pix2PixHD project developed by NVIDIA in August 2018 to achieve this. We hope to leverage TensorRT to meet the increased computational demand.

Finally, a major challenge comes from the data format of our outputs. GANs like Pix2Pix handle only pixel information. The resulting images produced in our pipeline cannot, for now, be used directly by architects & designers. Transforming this output from a raster image to a vector format is a crucial step for allowing the above pipeline to integrate with common tools & practices.
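As a very rough illustration of what a first raster-to-vector step might look like, the sketch below reduces a grid of room labels to one bounding rectangle per room. It assumes the image has already been decoded into labels, that each label appears as a single region, and that rooms are close to axis-aligned rectangles. A usable converter would need proper contour tracing, which this example does not attempt.

```python
def bounding_boxes(label_grid):
    """Return {label: (x_min, y_min, x_max, y_max)} in pixel coordinates.

    label_grid is a list of rows; cells holding None are ignored.
    """
    boxes = {}
    for y, row in enumerate(label_grid):
        for x, label in enumerate(row):
            if label is None:
                continue
            x0, y0, x1, y1 = boxes.get(label, (x, y, x, y))
            boxes[label] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes


# Toy 3x3 plan with three rooms (hypothetical labels).
grid = [
    ["bedroom", "bedroom", "bathroom"],
    ["bedroom", "bedroom", "bathroom"],
    ["living room", "living room", "living room"],
]
rooms = bounding_boxes(grid)
```

Rectangles like these could then be written out as vector primitives, though irregular rooms would be poorly described by a single box.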

Future for GANs in Architecture?

I am convinced that our ability to design the right pipeline will determine AI’s success as a new architectural toolset. Breaking out this pipeline into discrete steps will ultimately permit the user to participate along the way. I believe their control over the machine is the ultimate guarantee of the design process quality and relevance.

At a more technical level, even if GANs cannot create entirely fit design options, their “intuition” remains a game-changer, especially as their output can offer a tremendous starting point for standard optimization techniques. By coupling GANs’ results with optimization algorithms, I think we could get the best of both worlds, achieving both architectural quality and efficiency.

Want to know more about these topics? I have recently published a sequence of articles, laying down the premise of AI’s intersection with Architecture. Read here about the historical background behind this significant evolution, to be followed by AI’s potential for floor plan design, and for architectural style analysis & generation.

References

[1] Hao Zheng, Weixin Huang. 2018. “Architectural Drawings Recognition and Generation through Machine Learning”. Cambridge, MA, ACADIA.

[2] Nathan Peters. 2017. Master Thesis: “Enabling Alternative Architectures: Collaborative Frameworks for Participatory Design”. Harvard Graduate School of Design, Cambridge, MA.

[3] Nono Martinez. 2016. “Suggestive Drawing Among Human and Artificial Intelligences”, Harvard Graduate School of Design, Cambridge, MA.