Explore Computer Vision SDKs and Libraries

Get the flexibility and reliability you need to integrate powerful visual perception into your application.

Whether you're developing an autonomous vehicle's driver assistance system or a sophisticated industrial system, your computer vision pipeline needs to be versatile. This means supporting deployment from the cloud to the edge, while remaining stable and production-ready. NVIDIA SDKs and libraries deliver the right solution for your unique needs.

Benefits of NVIDIA Computer Vision Software

High Accuracy

Tap into production-ready, pretrained models and low-code tools for fine-tuning on custom data sets.

High Throughput

Achieve real-time processing with high-throughput SDKs and libraries.

Versatile Deployment

Scale your systems in deployment environments from the edge to the cloud and data center.

Flexible Interoperability

NVIDIA SDKs are interoperable with each other and with popular frameworks like TensorFlow and PyTorch.

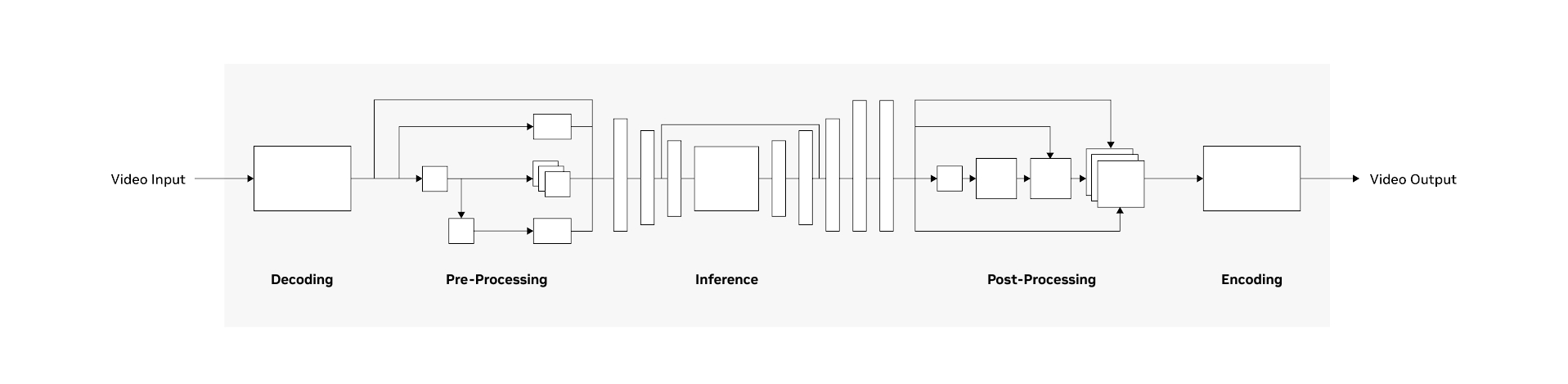

Computer Vision Pipelines

A computer vision pipeline begins by decoding the image or video input into a form suitable for analysis. Pre-processing follows: the data is transformed, normalized, and enhanced to improve the accuracy of later computations. Next, the inference stage analyzes and interprets the data to extract meaningful information, such as object detections or image classifications. Post-processing then refines and organizes the inference output. Finally, the processed image or video is encoded into the appropriate format and output from the pipeline, ready for further use or presentation.

This complete pipeline, supported by our versatile SDKs and libraries, seamlessly guides you through every step of the computer vision process.
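To make the five stages concrete, here is a minimal CPU-only sketch of how data flows from decode to encode. It is not NVIDIA code. Every stage is a hypothetical stand-in written in plain Python. The "codec" treats input bytes as uncompressed pixels, the "model" is a brightness threshold, and the "encoded" output is a JSON byte string rather than a compressed image. In a real deployment, each function would be replaced by a GPU-accelerated library call.

```python
import json
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame: rows of 0-255 pixel values

def decode(raw: bytes, width: int) -> Frame:
    # Hypothetical "codec": input is uncompressed, row-major 8-bit pixels.
    return [list(raw[i:i + width]) for i in range(0, len(raw), width)]

def preprocess(frame: Frame) -> List[List[float]]:
    # Normalize pixel values from [0, 255] to [0.0, 1.0].
    return [[p / 255.0 for p in row] for row in frame]

def infer(tensor: List[List[float]]) -> List[Tuple[int, int]]:
    # Hypothetical "model": reports every pixel brighter than 0.5 as a hit.
    return [(r, c) for r, row in enumerate(tensor)
            for c, v in enumerate(row) if v > 0.5]

def postprocess(hits: List[Tuple[int, int]]) -> dict:
    # Refine raw hits into a count and one box (top, left, bottom, right).
    if not hits:
        return {"count": 0, "box": None}
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return {"count": len(hits),
            "box": (min(rows), min(cols), max(rows), max(cols))}

def encode(result: dict) -> bytes:
    # Serialize the result for downstream use (JSON, not a compressed image).
    return json.dumps(result).encode("utf-8")

def run_pipeline(raw: bytes, width: int) -> bytes:
    # decode -> pre-process -> inference -> post-process -> encode
    return encode(postprocess(infer(preprocess(decode(raw, width)))))
```

For example, `run_pipeline(bytes([0, 0, 0, 0, 0, 200, 210, 0, 0, 220, 0, 0]), 4)` decodes a 3 × 4 frame, finds three bright pixels, and returns their bounding box. Each stage has a single input and a single output, so any one of them can be swapped for a faster implementation without touching the others.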

Encoding and Decoding

Achieve 4X faster lossless image decoding and up to 7X higher video-encoding throughput with GPU-accelerated encoding and decoding libraries.

Image Encoding and Decoding Libraries

nvJPEG and nvJPEG 2000

C++ libraries for decoding, encoding, and transcoding JPEG and JPEG 2000 format images.

Hardware-Accelerated Video Encoding and Decoding

Video Codec SDK

A set of APIs for hardware-accelerated video encode, transcode, and decode on Windows and Linux.

Hardware-Accelerated Video Processing in Python

Video Processing Framework (VPF)

Python bindings for the C++ libraries in the Video Codec SDK.

Pre- and Post-Processing

Move your pre- and post-processing pipelines that are bottlenecked on the CPU to the GPU and achieve up to a 49X end-to-end speedup.
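Two of the most common CPU-bound steps are per-channel normalization before inference and non-maximum suppression (NMS) of overlapping detections after it. The sketch below shows the arithmetic of both in plain Python, only to illustrate what these stages compute. It does not use the API of CV-CUDA or any other NVIDIA library. The mean and standard-deviation values, the (x1, y1, x2, y2) box layout, and the 0.5 IoU threshold are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), x2 > x1, y2 > y1

def normalize_hwc_to_chw(image, mean: Sequence[float], std: Sequence[float]):
    """Pre-processing: scale an H x W x C image of 0-255 values to [0, 1],
    apply per-channel (x - mean) / std, and reorder to C x H x W."""
    h, w, c = len(image), len(image[0]), len(image[0][0])
    return [[[(image[y][x][ch] / 255.0 - mean[ch]) / std[ch]
              for x in range(w)]
             for y in range(h)]
            for ch in range(c)]

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Post-processing: greedy non-maximum suppression.
    Returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

With boxes `(0, 0, 10, 10)`, `(1, 1, 11, 11)`, and `(20, 20, 30, 30)` scored 0.9, 0.8, and 0.7, the first two overlap with an IoU of about 0.68, so `nms` keeps indices `[0, 2]`. Both loops touch every pixel or every box pair independently. That data-parallel structure is why these steps benefit from moving to the GPU.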


Open-Source Pre- and Post-Processing Library

CV-CUDA

An open-source, low-level library that easily integrates into existing custom CV applications to accelerate video and image processing.

Portable Data Processing Pipeline

Data Loading Library (DALI)

Load and pre-process image, audio, and video data using GPUs. DALI pipelines plug directly into TensorFlow, PyTorch, MXNet, and PaddlePaddle training workflows.

Embedded Computer Vision and Image Processing Library

Vision Programming Interface (VPI)

A low-level library of highly optimized algorithms for building processing pipelines on embedded devices. Efficiently distribute workloads across multiple compute engines, with support for both C and Python.

Image and Signal-Processing Library

NVIDIA Performance Primitives (NPP)

A comprehensive set of image, video, and signal-processing functions for high-performance, low-level processing, including very large images (e.g., 20k × 20k pixels).

Motion Flow Generation

Optical Flow SDK

Compute flow vectors between successive video frames to detect and track objects, and interpolate or extrapolate frames to make video playback smoother.
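A flow vector records how far a patch of pixels moved between two frames. The sketch below illustrates that idea with simple exhaustive block matching: it searches a small window for the displacement that minimizes the sum of absolute differences (SAD). This is an assumed textbook technique chosen for clarity. It is not the algorithm or API of the Optical Flow SDK, which computes flow with dedicated GPU hardware. The block size and search radius are illustrative defaults.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame: rows of pixel intensities

def sad(prev: Frame, curr: Frame, y: int, x: int,
        dy: int, dx: int, size: int) -> int:
    """Sum of absolute differences between the block in prev at (y, x)
    and the same-sized block in curr displaced by (dy, dx)."""
    return sum(abs(prev[y + r][x + c] - curr[y + dy + r][x + dx + c])
               for r in range(size) for c in range(size))

def block_flow(prev: Frame, curr: Frame, y: int, x: int,
               size: int = 2, radius: int = 2) -> Tuple[int, int]:
    """Exhaustive block matching: return the (dx, dy) displacement, within
    +/- radius pixels, that best matches the block at (y, x)."""
    h, w = len(curr), len(curr[0])
    best = None  # (cost, dx, dy)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip candidate blocks that fall outside the current frame.
            if not (0 <= y + dy and y + dy + size <= h
                    and 0 <= x + dx and x + dx + size <= w):
                continue
            cost = sad(prev, curr, y, x, dy, dx, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2]
```

If a bright 2 × 2 block moves one pixel right and one pixel down between frames, `block_flow` returns `(1, 1)` for that block. An interpolated frame halfway between the two would place the block at half that displacement. This is how flow vectors support frame interpolation for smoother playback.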

Training

Simplify model training and create customized AI models in a fraction of the time with GPU-accelerated transfer learning and synthetic data.
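Transfer learning saves time because most of a pretrained model is reused. A common form keeps the feature extractor (the backbone) frozen and trains only a small task-specific head on the custom data. The sketch below shows that idea at toy scale in plain Python. The "pretrained" backbone weights, the logistic-regression head, and the six-sample data set are all hypothetical. This is not how TAO Toolkit is implemented or invoked.

```python
import math

# Hypothetical "pretrained" backbone weights. They are never updated
# during training, which is what "frozen backbone" means.
BACKBONE_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

def backbone(x):
    """Map a 2-D input to 3 features with a fixed linear layer + ReLU."""
    return [max(0.0, row[0] * x[0] + row[1] * x[1])
            for row in BACKBONE_WEIGHTS]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(samples, labels, epochs=200, lr=0.1):
    """Train only a logistic-regression head on frozen backbone features."""
    feats = [backbone(x) for x in samples]  # computed once: backbone is frozen
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            # Gradient of the log loss with respect to the logit is (p - y).
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    f = backbone(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# Hypothetical "custom data set": small inputs are class 0, large are class 1.
samples = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (2.0, 2.0), (3.0, 1.0), (1.0, 3.0)]
labels = [0, 0, 0, 1, 1, 1]
w, b = train_head(samples, labels)
```

Only four numbers (three head weights and a bias) are learned here. The backbone is evaluated once per sample and reused across every epoch. The same principle, applied to large pretrained networks on GPUs, is what lets customized models be trained far faster than models trained from scratch.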

Synthetic Data Generation

NVIDIA Omniverse™ Replicator

Fine-tune pretrained models with custom, physically accurate 3D synthetic visual data generated with OpenUSD in minutes or hours rather than months.

Open-Source Training Toolkit

NVIDIA TAO Toolkit

Create highly accurate, customized, and enterprise-ready AI models with this low-code toolkit and deploy them on any device, at the edge or in the cloud.

Inference

Optimize inference and speed it up by as much as 36X compared with CPU-only platforms.

Inference Optimizer and Runtime

NVIDIA TensorRT™

A high-performance deep learning inference SDK that delivers low latency and high throughput for inference applications.

Open-Source Inference Serving Software

NVIDIA Triton™

Streamlines and standardizes AI inference by enabling teams to deploy, run, and scale trained ML or DL models from any framework on any GPU- or CPU-based infrastructure.
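Clients reach a deployed model through a standard request format. Triton supports the KServe v2 inference protocol over HTTP/REST and gRPC, in which a client POSTs a JSON body to `/v2/models/<model_name>/infer`. The sketch below only builds that JSON body with the Python standard library and does not send it. The model name `my_detector`, the tensor names `INPUT0`/`OUTPUT0`, the shape, and the `localhost:8000` address are assumptions. Real names and shapes must match the deployed model's configuration.

```python
import json
import math
from typing import List

def build_infer_request(input_name: str, shape: List[int],
                        data: List[float], output_name: str) -> str:
    """Build a JSON body for a KServe v2 style inference request,
    as accepted by Triton's HTTP/REST endpoint."""
    # "data" holds the tensor flattened in row-major order, so its length
    # must equal the product of the shape dimensions.
    if len(data) != math.prod(shape):
        raise ValueError("data length does not match shape")
    body = {
        "inputs": [{"name": input_name, "shape": shape,
                    "datatype": "FP32", "data": data}],
        "outputs": [{"name": output_name}],
    }
    return json.dumps(body)

# Hypothetical model and tensor names; port 8000 is Triton's default HTTP port.
MODEL_NAME = "my_detector"
URL = f"http://localhost:8000/v2/models/{MODEL_NAME}/infer"
payload = build_infer_request("INPUT0", [1, 4], [0.1, 0.2, 0.3, 0.4], "OUTPUT0")
```

Any HTTP client can POST `payload` to `URL`. Because the request format does not depend on the framework the model was trained in, the same client code works whether Triton is serving a TensorRT, PyTorch, or TensorFlow model.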

Streaming Analytics Toolkit

NVIDIA DeepStream SDK

AI-based multi-sensor processing and video, audio, and image understanding for real-time streaming analytics.

The world of computer vision solutions is powered by NVIDIA.