A picture is worth a thousand words and videos have thousands of pictures. Both contain an incredible amount of insight that is only revealed through the power of intelligent video analytics (IVA).

The NVIDIA DeepStream SDK accelerates development of scalable IVA applications, making it easier for developers to build core deep learning networks instead of designing end-to-end applications from scratch.

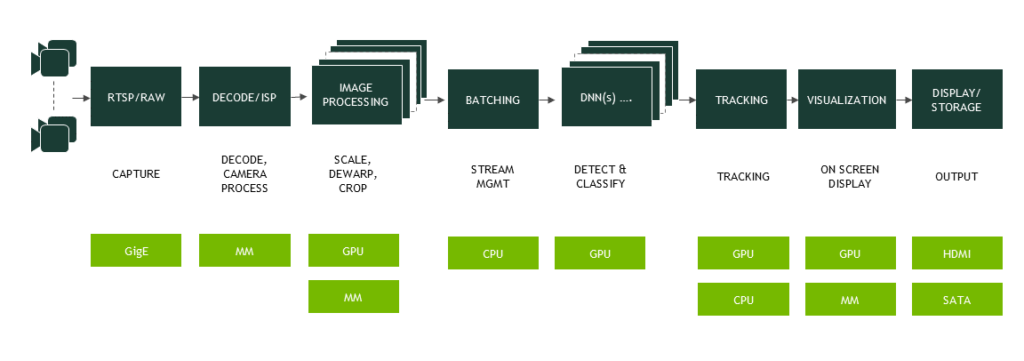

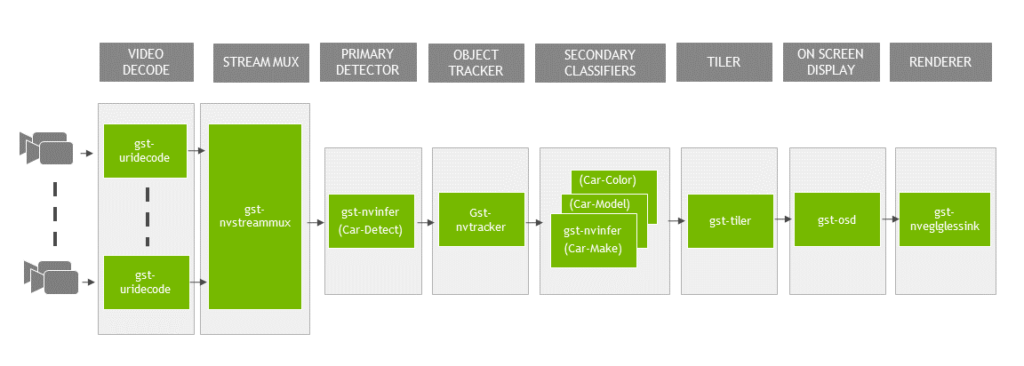

The DeepStream SDK 2.0, described in depth in a previous post, enables implementation of IVA applications as a pipeline of hardware-accelerated plugins based on the GStreamer multimedia framework, shown in figure 1. These plugins support video input, video decode, image pre-processing, TensorRT-based inference, tracking, and display. The SDK provides out-of-the-box capabilities to quickly assemble flexible, multi-stream video analytics applications.

The latest DeepStream SDK 3.0 extends these capabilities by providing many new features to see beyond the pixels. This includes support for TensorRT 5, CUDA 10, and Turing GPUs. DeepStream 3.0 applications can be deployed as part of a larger multi-GPU cluster or a microservice in containers. This allows highly flexible system architectures and opens up new application capabilities.

The new SDK also supports the following new features:

- Dynamic stream management enabling the addition and removal of streams, along with frame rate and resolution changes.

- Enhanced inferencing capabilities within video pipelines, including support for custom layers, transfer learning, and user-defined parsing of detector outputs.

- Support for 360-degree camera using GPU-accelerated dewarping libraries.

- Custom metadata definition enabling application-specific rich insights.

- Ease of integration with stream and batch analytics systems for metadata processing.

- Robust set of ready-to-use samples and reference applications in source format.

- Pruned and efficient model support from NVIDIA TAO Toolkit.

- Ability to get detailed performance analysis with the NVIDIA Nsight system profiler tool.

This post takes a closer look at the new features and how they can be used to build scalable video analytics applications. The latest DeepStream 3.0 SDK provides the following hardware-accelerated plugins to make implementation easy for developers, shown in table 1.

| Plugin Name | Functionality |
| --- | --- |
| gst-nvvideocodecs | H.264 and H.265 video decoding |
| gst-nvstreammux | Stream aggregation and batching |
| gst-nvinfer | TensorRT-based inferencing for detection and classification |
| gst-nvtracker | Object tracking reference implementation |
| gst-nvosd | On-screen display for highlighting objects and text overlay |
| gst-tiler | Frame rendering from multi-source into a 2D grid array |
| gst-eglglessink | Accelerated X11/EGL-based rendering |
| gst-nvvidconv | Scaling, format conversion, and rotation |
| gst-nvdewarp | Dewarping for 360-degree camera input |
| gst-nvmsgconv | Metadata generation and encoding |
| gst-nvmsgbroker | Messaging to cloud |

Video Stream Processing & Management

DeepStream 3.0 adds features to facilitate flexible stream management. It enables multi-GPU support, allowing applications to select different GPUs for specific workloads. The capability allows static and adaptive scheduling of video processing among various GPUs available in a system. Workloads can be distributed based on the number of streams, video format, grouping of deep learning networks for analytics, and memory.
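To make the idea of static scheduling concrete, here is a minimal sketch of distributing streams across GPUs by estimated load. It is not part of the SDK: the function name, the relative cost values, and the greedy least-loaded policy are all assumptions chosen for illustration. An application would then apply the resulting placement through whatever per-element GPU selection it uses.

```python
from collections import defaultdict


def assign_streams_to_gpus(stream_costs, num_gpus):
    """Greedy static scheduling: place each stream on the least-loaded GPU.

    stream_costs maps a stream name to a relative cost (a hypothetical unit
    standing in for decode plus inference load).
    Returns ({gpu_id: [stream names]}, [load per GPU]).
    """
    loads = [0.0] * num_gpus
    placement = defaultdict(list)
    # Place the most expensive streams first so they spread out evenly.
    ordered = sorted(stream_costs.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, cost in ordered:
        gpu_id = min(range(num_gpus), key=lambda g: loads[g])
        loads[gpu_id] += cost
        placement[gpu_id].append(name)
    return dict(placement), loads


# Hypothetical workload: five cameras with different relative costs.
streams = {"cam0": 4.0, "cam1": 2.0, "cam2": 2.0, "cam3": 1.0, "cam4": 1.0}
placement, loads = assign_streams_to_gpus(streams, num_gpus=2)
```

With these example costs, both GPUs end up with the same total load. An adaptive scheduler would rerun a similar assignment as streams come and go.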

On-the-Fly Addition and Deletion of Input Sources

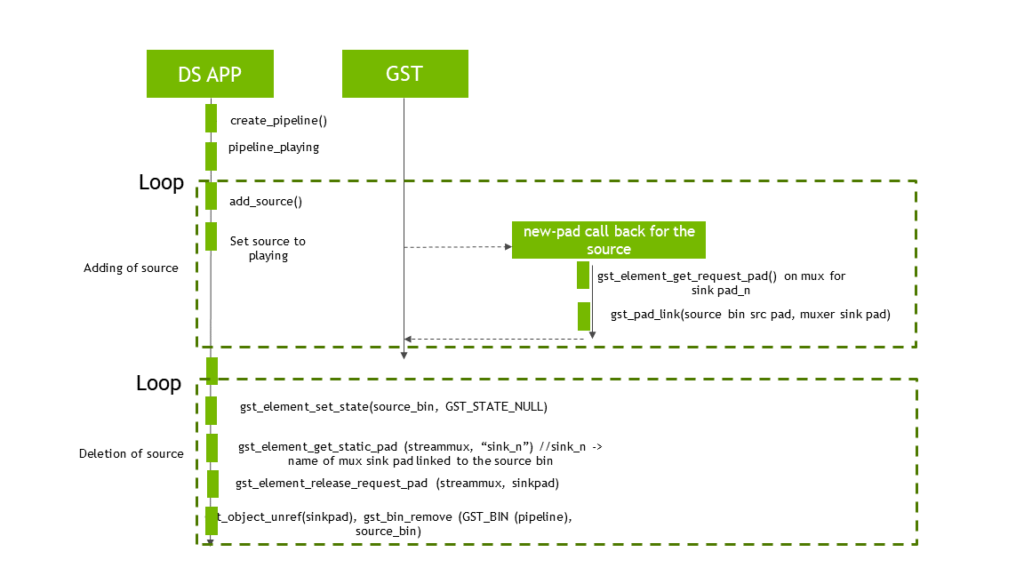

DeepStream 3.0 supports the addition and removal of input sources on the fly.

Developers can easily create new source bins to add streams and integrate them into the application. These source bins are then connected to the nvstreammux plugin’s sink pads.

The nvstreammux plugin can now create an event to notify downstream components of the new addition. The plugin supports the inverse flow for deleting a source from a live pipeline. Figure 2 shows this process.

Dynamic Resolution Change, Variable Frame Rate, Dewarping

DeepStream 3.0 can handle resolution changes during runtime. The video decoder reconfigures itself and notifies downstream components of the change so that they can reinitialize as needed for the new resolution.

The application supports variable frame rate through a batched push timeout property value. The nvstreammux plugin can time out for slow sources and collect more buffers from fast sources.
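The effect of a batched push timeout can be illustrated with a small simulation. This is only a sketch of the general idea, not the nvstreammux implementation: the function name, the millisecond timestamps, and the rule that the timeout starts at the first buffer of a batch are assumptions.

```python
def form_batches(arrivals, batch_size, timeout_ms):
    """Group buffers into batches.

    arrivals: list of (timestamp_ms, source_id), sorted by timestamp.
    A batch is pushed when it is full, or when timeout_ms has elapsed
    since its first buffer arrived (assumed policy for this sketch).
    """
    batches = []
    current = []
    deadline = None
    for ts, src in arrivals:
        # Push a partial batch whose timeout expired before this buffer.
        if current and ts >= deadline:
            batches.append(current)
            current = []
        if not current:
            deadline = ts + timeout_ms
        current.append((ts, src))
        if len(current) == batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


fast = [(t, 0) for t in range(0, 60, 10)]  # source 0: a buffer every 10 ms
slow = [(t, 1) for t in range(0, 60, 40)]  # source 1: a buffer every 40 ms
batches = form_batches(sorted(fast + slow), batch_size=4, timeout_ms=15)
batch_sizes = [len(b) for b in batches]
```

In this run, no batch waits for the slow source: partial batches are pushed when the timeout expires, and the fast source contributes several buffers to each one.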

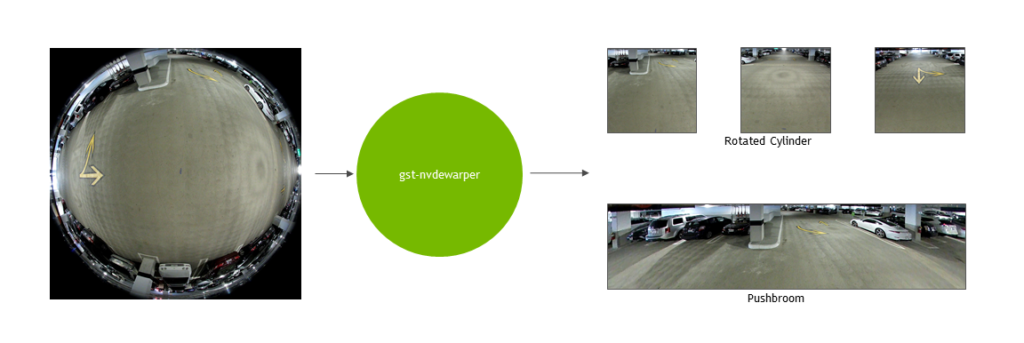

DeepStream 3.0 SDK also supports the use of 360-degree cameras with fisheye lenses. The gst-nvdewarper plugin included in the SDK provides hardware-accelerated solutions to dewarp and transform an image to a planar projection, as figure 3 shows. Reducing distortion makes these images more suitable for viewing and for processing with existing deep learning models. The current plugin supports pushbroom and vertically panned radial cylinder projections.

Inferencing Capability

DeepStream 3.0 builds on heterogeneous concurrent deep neural network capabilities for even more complex use cases. The gst-nvinfer plugin that implements TensorRT-based inferencing now allows an unrestricted number of items in output layers. The number of output classes is configurable to suit specific application needs. Grouping using an algorithm based on density-based spatial clustering of applications with noise (DBSCAN) has been added to cluster bounding box outputs from detectors.
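For readers unfamiliar with DBSCAN, the following is a minimal pure-Python sketch of how it can group overlapping detector boxes. It is an illustration only: clustering on box centers, averaging the members of each cluster, and the `eps` and `min_samples` values are assumptions, not a description of how gst-nvinfer does it.

```python
import math


def dbscan(points, eps, min_samples):
    """Return a cluster id (0, 1, ...) per point, or -1 for noise."""
    unvisited, noise = None, -1
    labels = [unvisited] * len(points)

    def neighbors(i):
        return [j for j, q in enumerate(points)
                if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not unvisited:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = noise
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == noise:
                labels[j] = cluster  # noise reachable from a core point
            if labels[j] is not unvisited:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_samples:  # j is a core point: expand
                queue.extend(reachable)
        cluster += 1
    return labels


def cluster_boxes(boxes, eps, min_samples):
    """boxes: (left, top, width, height) tuples. One averaged box per cluster."""
    centers = [(l + w / 2, t + h / 2) for l, t, w, h in boxes]
    labels = dbscan(centers, eps, min_samples)
    merged = []
    for c in sorted(set(labels) - {-1}):
        members = [b for b, lab in zip(boxes, labels) if lab == c]
        merged.append(tuple(sum(v) / len(members) for v in zip(*members)))
    return merged


# Three near-duplicate boxes, two near-duplicate boxes, and one outlier.
boxes = [(10, 10, 20, 20), (12, 10, 20, 20), (11, 12, 20, 20),
         (100, 100, 30, 30), (102, 100, 30, 30), (300, 300, 10, 10)]
merged = cluster_boxes(boxes, eps=5, min_samples=2)
```

Here the six raw boxes collapse into two merged boxes, and the isolated box is discarded as noise. Unlike a fixed-count grouping, DBSCAN does not need to know the number of objects in advance.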

Applications can now access the input and output buffers from any inference layer from the gst-nvinfer plugin. This allows extracting features from intermediate layers of the networks to connect to downstream deep learning networks or implement custom plugins to visualize the activation maps across the network.

The SDK also allows users to define custom functions for parsing the outputs of object detectors. This helps when post-processing the results of a new object detection model that uses a different output format.
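As a rough illustration of what such a parsing function does, the sketch below converts a flat output buffer into bounding boxes. The buffer layout (six floats per detection with normalized corner coordinates), the `Detection` class, and the function name are hypothetical and stand in for whatever format a given detector produces. The actual SDK hook is a native function, so this Python version only shows the logic.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    class_id: int
    confidence: float
    left: float
    top: float
    width: float
    height: float


def parse_flat_detections(raw, net_width, net_height, threshold=0.5):
    """Parse a flat list of floats into Detection objects.

    Assumed (hypothetical) layout per detection:
    class_id, confidence, x1, y1, x2, y2 with coordinates in [0, 1].
    """
    stride = 6
    detections = []
    for i in range(0, len(raw) - stride + 1, stride):
        class_id, conf, x1, y1, x2, y2 = raw[i:i + stride]
        if conf < threshold:
            continue  # drop low-confidence candidates
        detections.append(Detection(
            class_id=int(class_id),
            confidence=conf,
            left=x1 * net_width,
            top=y1 * net_height,
            width=(x2 - x1) * net_width,
            height=(y2 - y1) * net_height,
        ))
    return detections


# Two candidates: one confident, one below the threshold.
raw_output = [0, 0.9, 0.25, 0.25, 0.75, 0.5,
              2, 0.3, 0.0, 0.0, 1.0, 1.0]
detections = parse_flat_detections(raw_output, net_width=640, net_height=480)
```

Supporting a different model then means swapping only this function, while the rest of the pipeline keeps consuming the same box representation.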

Adding custom layers using the IPluginV2 interface defined by TensorRT 5.0 enhances the SDK’s inferencing flexibility. Use of this feature is illustrated through implementations of SSD and Faster R-CNN-based networks. The inference plugin can also accept models in the ONNX format and those generated by the TAO Toolkit.

Metadata Generation and Customization

As DeepStream applications analyze each video frame, plugins extract information and store it as part of cascaded metadata records, maintaining the record’s association with the source frame. The full metadata collection at the end of the pipeline represents the complete set of information extracted from the frame by the deep learning models and other analytics plugins. This information can be used by the DeepStream application for display or transmitted externally as part of a message for further analysis or long-term archival.

DeepStream 3.0 supports two main metadata types:

- NvDsObjectParams: metadata for objects identified by the object detection networks.

- NvDsEventMsgMeta: information about events.

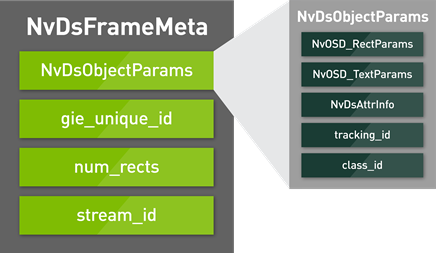

NvDsObjectParams is part of the per-frame NvDsFrameMeta structure as shown in Figure 4. It defines various attributes associated with detected objects in the frame, including:

- Bounding box to mark the coordinates and size of an object.

- Text label used to overlay the object’s class and attribute information on the screen.

- An open-ended field for the application to populate attributes about the detected object, such as make, model and color of a detected car.

NvDsEventMsgMeta defines various event attributes, including event type, timing, location, and source.
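The relationship between per-frame and per-object metadata can be pictured with a small model. This is a conceptual sketch only: the class and field names below are hypothetical Python stand-ins and do not reproduce the SDK's actual C structure layouts.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ObjectParams:
    # Bounding box: coordinates and size of the detected object.
    left: float
    top: float
    width: float
    height: float
    # Text label overlaid on the screen.
    label: str
    # Open-ended attributes populated by the application.
    attributes: Dict[str, str] = field(default_factory=dict)


@dataclass
class FrameMeta:
    frame_number: int
    source_id: int
    objects: List[ObjectParams] = field(default_factory=list)


def overlay_text(obj):
    """Build on-screen text from the label plus any attached attributes."""
    extras = " ".join(obj.attributes[key] for key in sorted(obj.attributes))
    return f"{obj.label} {extras}".strip()


# A detector adds the object; later stages attach attributes to it.
frame = FrameMeta(frame_number=42, source_id=0)
car = ObjectParams(left=100, top=50, width=200, height=120, label="car")
frame.objects.append(car)
car.attributes["color"] = "blue"
car.attributes["type"] = "sedan"
text = overlay_text(car)
```

Because each stage appends to the same record, the metadata accumulates as the frame moves down the pipeline while staying tied to its source frame.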

A key feature of DeepStream 3.0 is a customizable metadata definition supported by the SDK, enabling user-defined extensions for the developer’s custom neural networks and proprietary algorithms. This custom data is plug-and-play with DeepStream’s pipeline architecture.

We recommend a couple of techniques for implementing metadata:

- Use the DeepStream-defined NvDsAttrInfo structure for simple appends such as string and integer metadata.

- Use the DeepStream-defined metadata API functions to create and attach custom metadata. Add a new custom metadata type to the NvDsMetaType enum and define a new structure for the custom metadata. Then allocate memory for the custom metadata, fill in the metadata information, and attach it to the GStreamer buffer using the gst_buffer_add_nvds_meta API.

Details for these can be found in the DeepStream 3.0 Plugin manual.

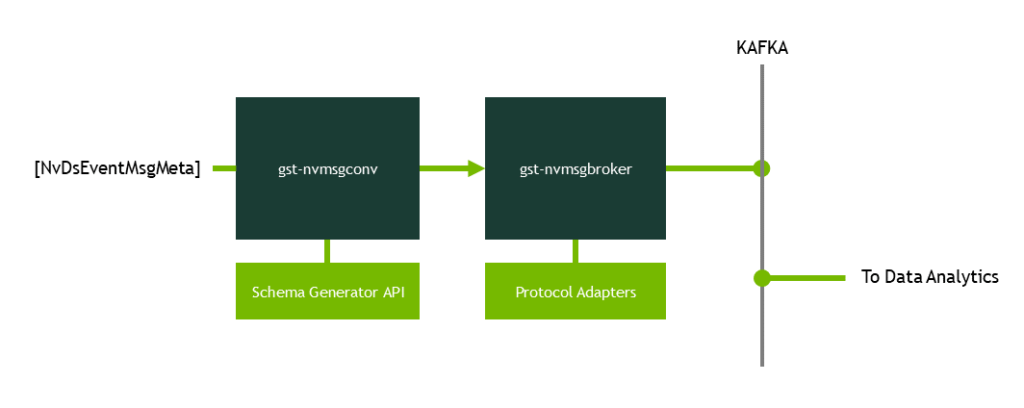

Metadata to Message Conversion

DeepStream 3.0 provides the ability to encapsulate generated metadata as messages and send them for further analysis. This analysis capability is useful for detecting anomalies, building long-term trends on location and movement, powering information dashboards in the cloud for remote viewing, and more.

Two new plugins are provided as part of the SDK for this purpose: gst-nvmsgconv and gst-nvmsgbroker. The gst-nvmsgconv plugin accepts a metadata structure of NvDsEventMsgMeta type and generates the corresponding message payload. A comprehensive JSON-based schema description has been defined that specifies events based on associated objects, location and time of occurrence, and underlying sensor information, while specifying attributes for each of these event properties.

By default, the gst-nvmsgconv plugin generates messages based on the DeepStream schema description. However, it also allows users to register their own metadata-to-payload converter functions for additional customizability. Using custom metadata descriptions in combination with user-defined conversion functions lets users implement a fully custom event description and messaging capability tailored to their needs.
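The pattern of a default converter that can be replaced by a registered user function might look like the sketch below. Everything here is hypothetical: the `EventMsgMeta` fields, the JSON layout (which is not the DeepStream schema), and the `MessageConverter` class are stand-ins that only demonstrate the registration idea.

```python
import json
from dataclasses import dataclass


@dataclass
class EventMsgMeta:
    # Hypothetical subset of event attributes.
    event_type: str
    timestamp: str
    location: str
    sensor_id: str
    object_class: str


def default_payload(meta):
    """Default conversion: a JSON document (illustrative layout only)."""
    return json.dumps({
        "event": {"type": meta.event_type},
        "object": {"class": meta.object_class},
        "place": {"name": meta.location},
        "sensor": {"id": meta.sensor_id},
        "timestamp": meta.timestamp,
    }, sort_keys=True)


class MessageConverter:
    """Turns event metadata into a payload using a replaceable function."""

    def __init__(self):
        self._convert = default_payload

    def register_converter(self, fn):
        # fn takes the metadata object and returns the payload string.
        self._convert = fn

    def generate(self, meta):
        return self._convert(meta)


meta = EventMsgMeta("entry", "2018-11-01T10:15:00Z",
                    "garage-level-1", "cam-01", "car")
converter = MessageConverter()
default_msg = converter.generate(meta)

# Swap in a compact CSV-style payload without touching the rest of the flow.
converter.register_converter(
    lambda m: f"{m.timestamp},{m.sensor_id},{m.event_type}")
custom_msg = converter.generate(meta)
```

Keeping the conversion behind one function means the component that sends messages never needs to know which payload format is in use.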

Scalable Messaging with Backend Analysis Systems

Complementing message generation is the gst-nvmsgbroker plugin, which provides out-of-the-box message delivery using the Apache Kafka protocol to Kafka message brokers. Kafka serves as a conduit into backend event analysis systems, including those executing in the cloud. The high message throughput support, combined with the reliability offered by the Kafka framework, enables the backend architecture to scale to support numerous DeepStream applications continuously sending messages.

The gst-nvmsgbroker plugin is implemented in a protocol-agnostic manner and leverages protocol adapters in the form of shared libraries. These can be modified to support any user-defined protocol. The SDK’s open interface makes it very flexible for scaling and adapting to specific deployment requirements.
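The protocol-agnostic design can be sketched as a broker that talks only to an abstract adapter. The real adapters are native shared libraries, so the Python classes, method names, and the in-memory adapter below are assumptions made purely to show the separation of concerns.

```python
from abc import ABC, abstractmethod


class ProtocolAdapter(ABC):
    """Hypothetical adapter interface: one implementation per protocol."""

    @abstractmethod
    def connect(self, address):
        ...

    @abstractmethod
    def send(self, topic, payload):
        ...

    @abstractmethod
    def disconnect(self):
        ...


class InMemoryAdapter(ProtocolAdapter):
    """Stand-in for a real protocol: records messages instead of sending."""

    def __init__(self):
        self.connected_to = None
        self.sent = []

    def connect(self, address):
        self.connected_to = address

    def send(self, topic, payload):
        if self.connected_to is None:
            raise RuntimeError("adapter is not connected")
        self.sent.append((topic, payload))

    def disconnect(self):
        self.connected_to = None


class MessageBroker:
    """Protocol-agnostic sender: only ever calls the adapter interface."""

    def __init__(self, adapter, address, topic):
        self._adapter = adapter
        self._topic = topic
        self._adapter.connect(address)

    def publish(self, payload):
        self._adapter.send(self._topic, payload)

    def close(self):
        self._adapter.disconnect()


adapter = InMemoryAdapter()
broker = MessageBroker(adapter, address="broker.example:9092", topic="events")
broker.publish('{"event": "entry"}')
broker.close()
```

Supporting another protocol then amounts to writing one more adapter class, with no change to the broker itself.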

Figure 5 illustrates the message transformation and broker plugins working in combination to deliver messages encapsulating detected events to backend analysis systems.

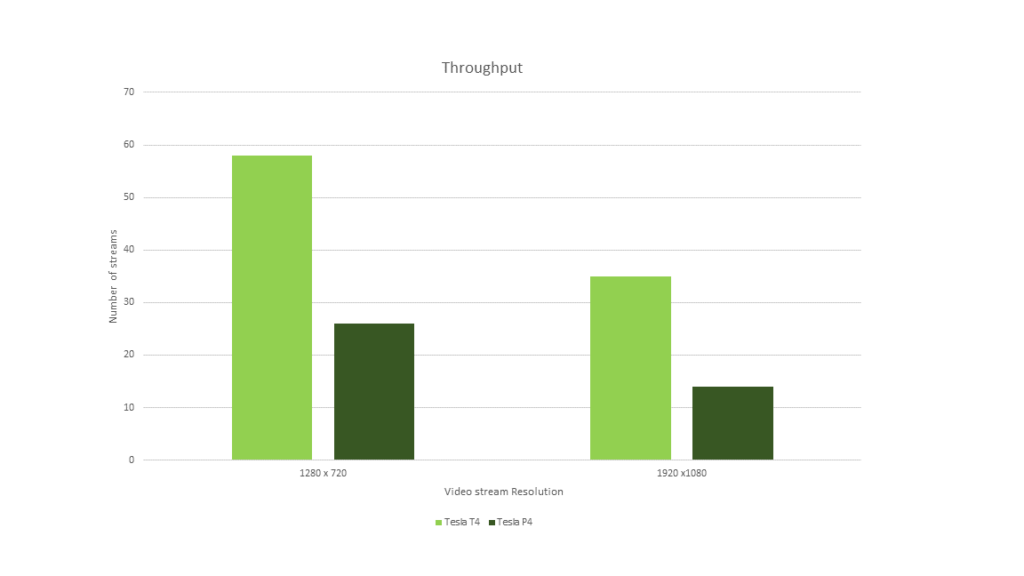

Performance

Performance results for the deepstream-app reference application included as part of the release package highlight performance improvements with Turing. Figure 6 shows that DeepStream 3.0-based applications on the Tesla T4 platform can deliver more than double the performance of the previous-generation Tesla P4 while consuming the same amount of power.

The application includes a primary detector, three classifiers, and a tracker, shown in the flow diagram in figure 7. The batch size for primary detection is equal to the number of streams.

Scalable Video Analytics

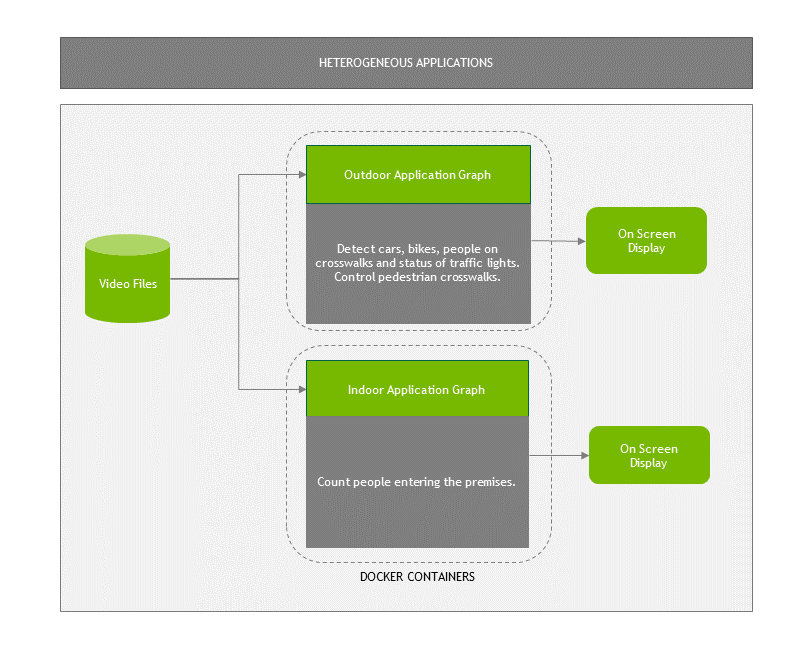

Deployment at scale is achieved at various levels of the system hierarchy with DeepStream-based applications. The latest SDK offers real-time multi-stream processing capability with the latest Tesla T4, further increasing the number of supported streams. Multi-GPU capability enables DeepStream to target all the available GPUs within a system, further scaling the overall number of streams and the complexity of use cases supported by a system. The superior decoding capabilities of Tesla GPUs, together with their low power consumption, enable high stream densities within a datacenter.

DeepStream in containers offers flexible deployment on the edge and in the datacenter, dynamically responding to demand as video streams are added or as the analytics workload for those streams changes, as shown in Figure 8.

Deployment in Containers

Applications built with DeepStream can now be deployed via a Docker container, enabling incredibly flexible system architectures, straightforward upgrades, and improved system manageability. These containers are available on the NVIDIA GPU Cloud (NGC).

DeepStream 3.0 for Your IVA Applications

Leveraging a data analytics backbone to implement sophisticated analysis based on larger time windows and more complicated models opens up opportunities to build data services. You can have multiple instances of a DeepStream application streaming events to a central backend system. This enables seamless deployment of large camera networks and correlation of their streams for better situational awareness.

Download DeepStream SDK 3.0 today to start building the next generation of powerful intelligent video applications.