NVIDIA VRWorks 360 is no longer available or supported. For more information about other VRWorks products, see NVIDIA VRWorks Graphics.

An ecosystem of camera systems and video processing applications surrounds us today, for professional and consumer use alike, whether for film or home video. The ability to enhance and optimize this omnipresent stream of videos and photos has become an important focus in consumer and prosumer circles. The real-world use cases associated with 3DoF 360 video have grown in the past few years, requiring more robust platform support. YouTube (including live streaming), Facebook, and Vimeo all support uploading and sharing 360-degree surround video.

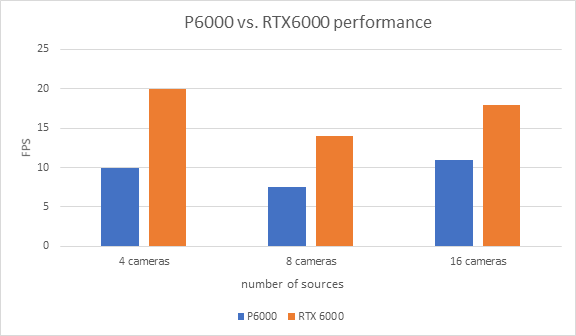

The VRWorks 360 Video SDK 2.0 addresses the need for acceleration in these use cases while adding the enhancements most requested by the developer community. The updated SDK delivers up to 90% faster stereo stitching and 2x the performance for real-time mono stitching on the new NVIDIA RTX GPUs (see figure 1), breathing new life into immersive video applications that demand high performance.

Let’s take a closer look at some of the newer features.

Depth-Aligned Mode for Monoscopic Stitch

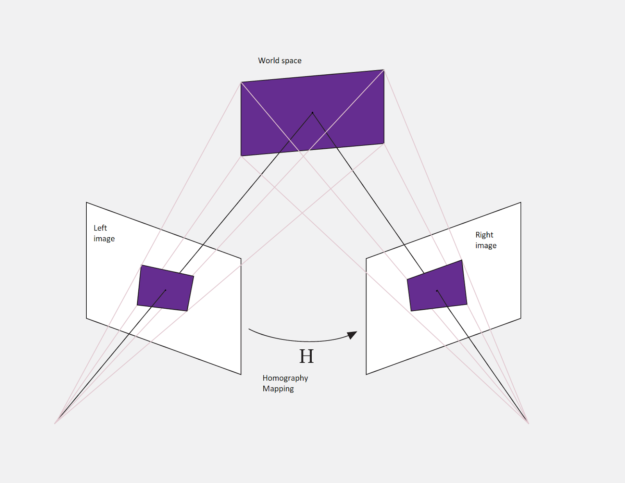

The biggest challenge with stitching monoscopic images is perfecting the alignment between camera image pairs. This alignment can be achieved by accurately estimating the 2D transformation between images, typically represented by a 3x3 matrix called a homography, as shown in figure 2.
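To make the homography concrete, here is a minimal C++ sketch that maps a pixel through a 3x3 homography using homogeneous coordinates. It is not VRWorks code. The type and function names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Illustrative types: a row-major 3x3 homography and a 2D point.
using Homography = std::array<std::array<double, 3>, 3>;

struct Point2 {
    double x;
    double y;
};

// Maps (x, y) from one image into the other:
// [u v w]^T = H * [x y 1]^T, then (x', y') = (u / w, v / w).
Point2 applyHomography(const Homography& H, Point2 p) {
    const double u = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double v = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(std::abs(w) > 1e-12 && "point maps to infinity");
    return {u / w, v / w};
}
```

The final division by w makes the mapping projective rather than affine. That division is what lets a homography model perspective changes between two views of a plane.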

Methods used to estimate the homography rely on accurately identifying and matching distinct correspondences (key points or features) between images. This accuracy requirement often conflicts with practical constraints, because the physical properties of the cameras and environmental factors cannot always be controlled. For instance, exposure differences, occlusions, and other factors can cause features to be matched incorrectly. These mismatches introduce errors into the estimated homography and result in misaligned images.

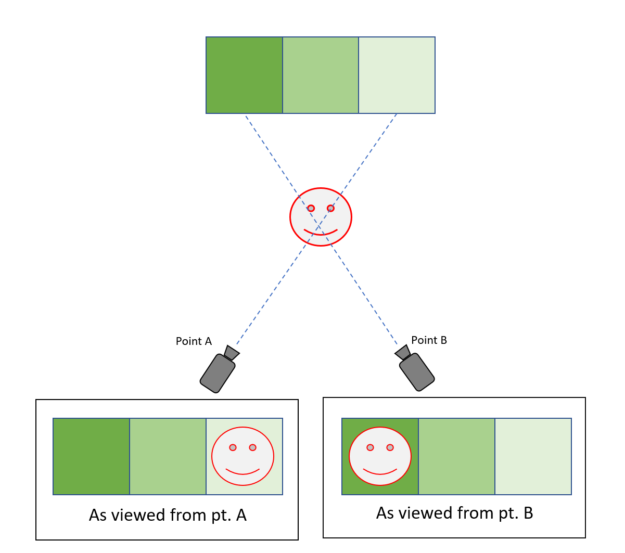

In addition, a homography by itself does not account for the fact that the images may not have been captured from the same viewpoint. The shift in viewpoint introduces parallax, as demonstrated in figure 3. Blending these images without accounting for parallax results in ghosting and other artifacts, and the greater the parallax, the more severe the artifacts.
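The parallax described above can be quantified. Assume two cameras separated by a baseline b and a scene point at distance d on the perpendicular bisector of that baseline. The two viewing directions to the point then differ by θ = 2·atan(b / (2d)). The sketch below converts that angle into an approximate pixel misalignment in an equirectangular panorama. The function names, the 10 cm baseline and the 3840-pixel panorama width are illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>

// Angular parallax (radians) between two camera centers `baseline` apart,
// for a point at `distance` on the perpendicular bisector of the baseline.
// theta = 2 * atan(baseline / (2 * distance))
double parallaxAngle(double baseline, double distance) {
    assert(baseline >= 0.0 && distance > 0.0);
    return 2.0 * std::atan(baseline / (2.0 * distance));
}

// Converts an angle to a horizontal pixel offset in an equirectangular
// panorama whose width covers 2*pi radians.
double parallaxPixels(double angle, int panoWidth) {
    constexpr double kTwoPi = 6.283185307179586;
    return angle / kTwoPi * panoWidth;
}
```

Take a 10 cm baseline and a 3840-pixel-wide panorama. A point 1 m away is misaligned by roughly 61 pixels, while a point 10 m away is misaligned by only about 6 pixels. This is why nearby objects show the most ghosting.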


Methods such as seam cutting, which rely on energy minimization, are often used to avoid these artifacts, but they can be computationally expensive. The most effective methods take a multi-tiered approach. Global alignment ensures that distant objects, which exhibit minimal parallax, are aligned. Local alignment then selectively aligns nearby objects, which suffer more noticeable parallax.
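Seam cutting can be illustrated with a simple dynamic-programming formulation of the energy-minimization problem. This C++ sketch is a generic textbook technique, not the VRWorks implementation. It assumes a precomputed per-pixel cost map over the overlap region, for example the absolute color difference between the two aligned images. From that map it finds the connected top-to-bottom seam with the lowest total cost.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Finds a minimum-cost top-to-bottom seam through `cost` (rows x cols, all
// rows the same width) with dynamic programming. Between consecutive rows the
// seam may move at most one column left or right. Returns the seam column for
// each row.
std::vector<int> findMinCostSeam(const std::vector<std::vector<double>>& cost) {
    const std::size_t rows = cost.size();
    assert(rows > 0 && !cost[0].empty());
    const int cols = static_cast<int>(cost[0].size());

    // acc[r][c] = lowest accumulated cost of any seam ending at (r, c).
    std::vector<std::vector<double>> acc(cost);
    for (std::size_t r = 1; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            double best = acc[r - 1][c];
            if (c > 0) best = std::min(best, acc[r - 1][c - 1]);
            if (c + 1 < cols) best = std::min(best, acc[r - 1][c + 1]);
            acc[r][c] += best;
        }
    }

    // Start from the cheapest cell in the last row and walk back up,
    // choosing the cheapest of the (up to) three predecessors each time.
    std::vector<int> seam(rows);
    const auto& last = acc[rows - 1];
    seam[rows - 1] =
        static_cast<int>(std::min_element(last.begin(), last.end()) - last.begin());
    for (std::size_t r = rows - 1; r > 0; --r) {
        const int c = seam[r];
        int bestCol = c;
        if (c > 0 && acc[r - 1][c - 1] < acc[r - 1][bestCol]) bestCol = c - 1;
        if (c + 1 < cols && acc[r - 1][c + 1] < acc[r - 1][bestCol]) bestCol = c + 1;
        seam[r - 1] = bestCol;
    }
    return seam;
}
```

The cost is O(rows x cols) per overlap and per frame. More robust seam finders, such as graph-cut methods, cost more than this. That expense is the trade-off the paragraph above refers to.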

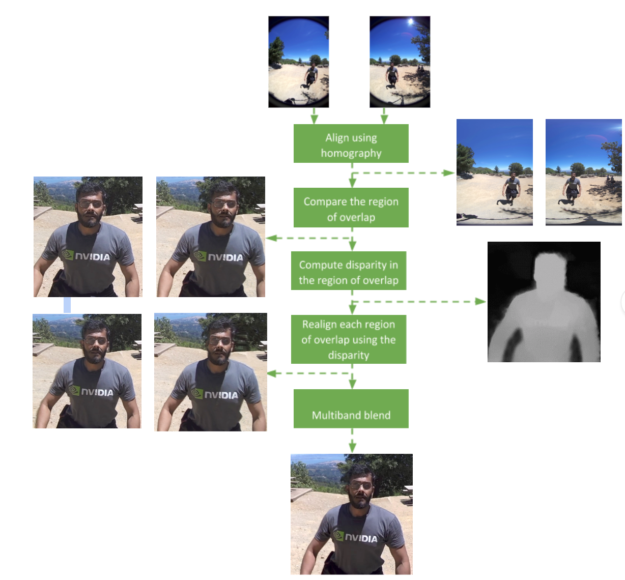

VRWorks 360 Video SDK 2.0 introduces a depth-based alignment stitch mode. This mode uses scene depth together with the homography to account for parallax when aligning images before stitching, as shown in figure 4.

Figure 5 highlights the logical structure behind depth-based alignment. Depth is estimated with our proprietary search algorithm, the same one used to estimate stereo disparity in stereoscopic stitching. The computed disparity drives a spatially varying warp in the overlap region that better aligns the images. The improved alignment yields a higher-quality stitch when objects are close to the camera, or when non-co-located cameras make parallax a concern.

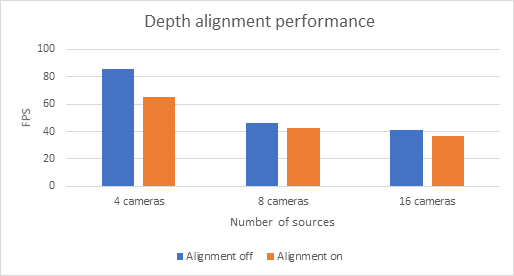

The depth estimation and alignment step does increase the per-frame-set stitch execution time relative to multiband blending. However, optimizations in CUDA 10 and the Turing architecture limit the impact on real-time stitching performance. As figure 6 shows, depth alignment has a minimal effect on performance, and the gap narrows as the number of cameras increases.

To enable this mode, you must use the mono_eq pipeline and set the depth-based alignment flag as shown below:

nvssVideoStitcherProperties_t stitcher_props{};

stitcher_props.version = NVSTITCH_VERSION;

...

stitcher_props.pipeline = NVSTITCH_STITCHER_PIPELINE_MONO_EQ;

stitcher_props.mono_flags |= NVSTITCH_MONO_FLAGS_ENABLE_DEPTH_ALIGNMENT;

Moveable Seams

Figure 7 shows that parallax increases as the distance from the source viewpoint decreases, so misalignment caused by parallax is most apparent on nearby objects. Placing the seam line judiciously within the overlap region, so that it avoids nearby objects, can reduce or eliminate such artifacts in the stitched output.

The VRWorks 360 Video SDK 2.0 facilitates dynamic seam placement with an API that allows developers to:

- Query the current placement of the seam lines

- Query the size and location of the overlaps in the equirectangular output panorama

- Specify the seam placement in the region of overlap for each camera pair

The current implementation supports straight seam lines, which are specified by horizontal and vertical offsets. The code snippet below moves all seams by the amount specified in seam_offset (in pixels).

#include <algorithm>  // std::clamp (C++17)

// Get the number of overlaps in the current configuration
uint32_t num_overlaps{};
nvstitchGetOverlapCount(stitcher, &num_overlaps);
for (auto overlap_idx = 0u; overlap_idx < num_overlaps; ++overlap_idx) {
    // Get the current seam and overlap info
    nvstitchSeam_t seam{};
    nvstitchOverlap_t overlap{};
    nvstitchGetOverlapInfo(stitcher, overlap_idx, &overlap, &seam);
    // Shift seam by seam_offset and clamp to [0, width]
    const auto width = int(overlap.overlap_rect.width);
    const auto offset = int(seam.properties.vertical.x_offset) + seam_offset;
    seam.properties.vertical.x_offset = uint32_t(std::clamp(offset, 0, width));
    nvstitchSetSeam(stitcher, overlap_idx, &seam);
}

Seams can be moved any number of times during stitching when using the low-level API. Applications built on the VRWorks 360 Video SDK 2.0 can use this feature to define the optimal seam location for each pair of frames, or to let users adjust the seams interactively as the captured scene changes.
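One simple way an application might pick the "optimal" straight seam for a frame pair is to score every candidate column in the overlap and choose the cheapest. In this sketch the function name is hypothetical. The cost map is an assumption: for example, the per-pixel color difference between the two warped inputs, which is high where nearby objects are misaligned. An application could write the returned value into seam.properties.vertical.x_offset before calling nvstitchSetSeam.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Picks the x offset of a straight vertical seam inside an overlap region by
// minimizing the summed cost along each candidate column.
// `cost` is row-major with dimensions height x width.
std::uint32_t chooseStraightSeamOffset(const std::vector<float>& cost,
                                       std::uint32_t width, std::uint32_t height) {
    assert(width > 0 && cost.size() == static_cast<std::size_t>(width) * height);
    std::uint32_t bestX = 0;
    double bestCost = std::numeric_limits<double>::infinity();
    for (std::uint32_t x = 0; x < width; ++x) {
        double columnCost = 0.0;
        for (std::uint32_t y = 0; y < height; ++y) {
            columnCost += cost[static_cast<std::size_t>(y) * width + x];
        }
        if (columnCost < bestCost) {
            bestCost = columnCost;
            bestX = x;
        }
    }
    return bestX;
}
```

Running this once per frame pair gives a seam that adapts to the scene. Smoothing the chosen offset over time would be a reasonable addition to avoid visible seam jitter.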

Improved Mono Stitch Pipeline with Multi-GPU Scaling

Most camera systems compress captured footage with H.264 or other codecs to reduce ingest or streaming bandwidth. As a result, decode is often the first stage in a video stitching pipeline. Per-stream decode execution time is directly proportional to the resolution and frame rate of the encoded source video. Total decode time also depends on the number of source streams and the compression format.

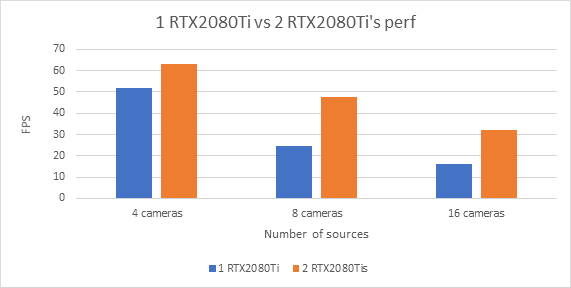

As the number of cameras in 360-degree video capture systems increases, the decoding stage can become a bottleneck. The VRWorks SDK leverages NVIDIA's hardware decode engine to optimize and parallelize decode workloads. VRWorks 360 Video SDK 2.0 also introduces a new monoscopic stitching pipeline for rigs with cameras in an equatorial configuration. This pipeline supports multi-GPU scaling for the first time, so both decode and compute workloads can scale across multiple GPUs. As figure 8 shows, workloads that process more than four video streams simultaneously achieve up to 2x performance scaling when a second GeForce RTX 2080 Ti is added.

Figure 8: mono_eq stitching pipeline performance with single and dual RTX 2080 Ti GPUs.

Custom Region of Interest Stitch

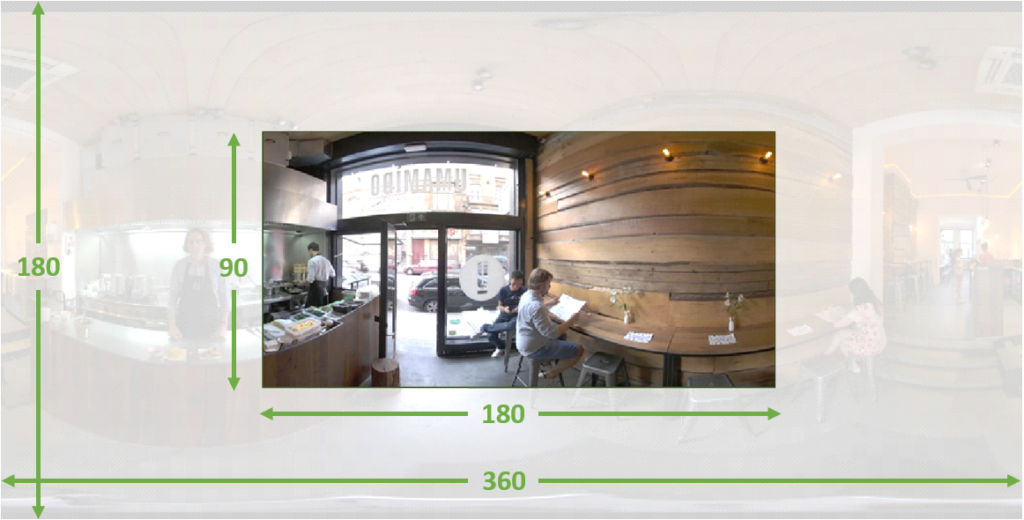

Human vision spans a total horizontal field of view (FOV) of about 200-220 degrees, of which about 114 degrees is binocular. These characteristics of human vision have guided research into maintaining visual fidelity within practical rendering limits, given the FOV of head-mounted displays (HMDs). VR videos with less than a 360-degree FOV apply the same principles to save processing time and, depending on the use case, to improve streaming bandwidth efficiency. VRWorks 360 Video SDK 2.0 lets you explicitly specify a region of interest and stitch only the relevant part of the source input, as shown in figure 9. This avoids redundant computation, minimizes the memory footprint and improves end-to-end execution time.

The custom region of interest can also be used to improve the quality of 180-degree video by stitching the cropped region at a higher resolution (increased angular pixel density) without the overhead of keeping the full 360 panorama in memory. This also benefits cases in which the output resolution is limited.

The nvss_video API has been expanded to allow users to specify the output viewport of the panorama. The nvssVideoStitcherProperties_t structure now includes an output_roi data member, which specifies the viewport coordinates. This must be specified at initialization, when the stitcher instance is created. The region cannot be changed during a stitch session with the current implementation.

The code below shows how to use this property to create a stitcher instance that outputs a 180 x 90-degree viewport centered in the full 360-degree panorama, as depicted in figure 9 above.

nvssVideoStitcherProperties_t stitcher_props{ NVSTITCH_VERSION };

stitcher_props.pano_width = 3840;

stitcher_props.pano_height = 1920;

...

stitcher_props.output_roi = nvstitchRect_t{960, 480, 1920, 960}; // left, top, width, height

nvssVideoHandle stitcher;

nvssVideoCreateInstance(&stitcher_props, &params->rig_properties, &stitcher);

Ambisonic Audio

Alongside stereoscopic video, audio in an HMD that imitates real-world sound further increases immersion. The human brain relies on differences in the intensity and timing of sound reaching each ear to localize a source, which gives a sense of direction in the real world. For instance, you turn around when someone behind you calls your name, even before you see them. Ambisonic audio is a format that preserves the directionality of sounds in the environment after they have been captured.
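The size of the timing cue can be estimated with Woodworth's classic spherical-head approximation, ITD = (r / c)(θ + sin θ). This sketch assumes a head radius of 8.75 cm and a speed of sound of 343 m/s, which are typical textbook values. It is not part of the SDK and only shows the scale involved.

```cpp
#include <cassert>
#include <cmath>

// Woodworth's spherical-head approximation of the interaural time difference
// (seconds) for a distant source at `azimuth` radians from straight ahead,
// valid for 0 <= azimuth <= pi/2: ITD = (r / c) * (azimuth + sin(azimuth)).
double interauralTimeDifference(double azimuth, double headRadius = 0.0875,
                                double speedOfSound = 343.0) {
    assert(azimuth >= 0.0 && azimuth <= 1.5707963267948966);
    return headRadius / speedOfSound * (azimuth + std::sin(azimuth));
}
```

A source directly to one side reaches the far ear roughly 0.66 ms later than the near ear. Sub-millisecond differences like this are what spatial audio must preserve.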

VRWorks 360 Video SDK 2.0 takes immersion to a new level with support for first-order B-format ambisonic audio. The directionality of each sound source relative to the user is preserved in the output stitched video (see figure 10). This paves the way for better sound perception and allows audio cues that lead and guide the user's experience in VR. Head-locked stereo cannot do this, because listeners perceive head-locked stereo sound as emanating from inside their heads. Spatial audio support lets users experience sound sources as part of the environment, similar to sounds in the real world.
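Because an ambisonic signal encodes direction explicitly, a player can keep sources fixed in the world by counter-rotating the sound field as the head turns. This sketch shows a yaw rotation of one first-order sample frame. It assumes ACN channel order (W, Y, Z, X) with +X forward and +Y to the left. It is an illustration, not part of the VRWorks or NVSF API.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// One first-order ambisonic sample frame in ACN order: W, Y, Z, X.
using FoaFrame = std::array<float, 4>;

// Rotates the sound field about the vertical axis by `yaw` radians
// (positive = counterclockwise seen from above, i.e. from +X toward +Y).
// W (omnidirectional) and Z (vertical) do not change under this rotation.
FoaFrame rotateYaw(const FoaFrame& in, float yaw) {
    assert(std::isfinite(yaw));
    const float c = std::cos(yaw);
    const float s = std::sin(yaw);
    const float w = in[0], y = in[1], z = in[2], x = in[3];
    return {w, x * s + y * c, z, x * c - y * s};
}
```

To compensate for a head yaw of θ, a player would call rotateYaw(frame, -θ) before decoding to headphones. The sound then stays anchored to the scene instead of moving with the head.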

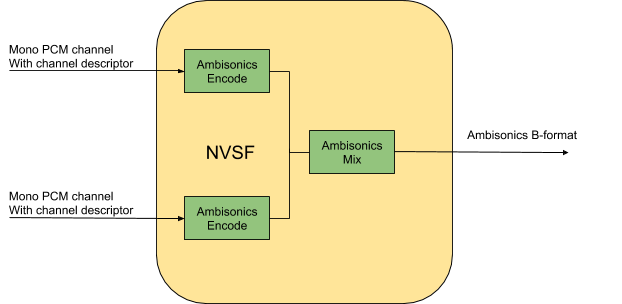

With this release, the SDK supports first-order B-format ambisonics in the NVSF library. The microphone array used to capture the input may be part of the camera rig, or it may be a separate device such as a TetraMic or an Eigenmike. Ambisonic encode in NVSF converts each input into its ambisonic representation. Ambisonic mix then combines two or more of these signals in their ambisonic representation. The video clip highlights the effect of 360-degree video with first-order B-format ambisonic audio (ACN ordering).
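Ambisonic encoding and mixing can be sketched in a few lines. This sketch treats each input as a mono point source, which is a simplification. It also assumes SN3D normalization, the AmbiX convention that pairs with ACN ordering; the text above specifies ACN ordering but not the normalization. The function names are illustrative, not NVSF API. Each input is weighted by first-order spherical-harmonic gains for its direction. Because the representation is linear, a mix is a channel-wise sum.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One first-order ambisonic sample frame in ACN order: W, Y, Z, X.
using FoaFrame = std::array<float, 4>;

// Encodes a mono sample arriving from (azimuth, elevation) radians into
// first-order ambisonics with SN3D normalization.
FoaFrame encodeFoa(float sample, float azimuth, float elevation) {
    assert(std::abs(elevation) <= 1.5707964f);
    const float cosEl = std::cos(elevation);
    return {sample,                                  // W (ACN 0)
            sample * std::sin(azimuth) * cosEl,      // Y (ACN 1)
            sample * std::sin(elevation),            // Z (ACN 2)
            sample * std::cos(azimuth) * cosEl};     // X (ACN 3)
}

// Mixes ambisonic signals by summing them channel by channel.
FoaFrame mixFoa(const std::vector<FoaFrame>& inputs) {
    FoaFrame out{};
    for (const auto& in : inputs) {
        for (std::size_t ch = 0; ch < out.size(); ++ch) out[ch] += in[ch];
    }
    return out;
}
```

In a real pipeline these functions would run per sample, or per block of samples, across the whole audio stream. Gain staging after the mix would prevent clipping when many sources are summed.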

Warp 360

In prior releases, VRWorks 360 Video SDK supported the equirectangular format for 360-degree panoramas. That format works well for 360-degree video, but other use cases such as streaming and VR 180 could benefit from formats that better meet their performance and quality needs. Warp 360, a new capability in VRWorks 360 Video SDK 2.0, can address a wide variety of use-case-specific customizations. It provides CUDA-accelerated conversion from Equirectangular, Fisheye, and Perspective input formats to output formats such as Equirectangular, Fisheye, Perspective, Cylindrical, Rotated Cylindrical, Panini, Stereographic, and Pushbroom. The projection format, the camera parameters and the output viewport can all be updated to generate interesting views. Figure 11 shows two examples of what you can do with Warp 360 and an equirectangular panorama.
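The core of such a conversion is an inverse mapping. For each output pixel, compute the viewing ray, convert it to longitude and latitude, and sample the source panorama at that location. This generic C++ sketch shows the mapping for an equirectangular source and a perspective output. It is not the Warp 360 API. The coordinate conventions, function name and parameters are assumptions.

```cpp
#include <cassert>
#include <cmath>

// Pixel coordinates in the equirectangular source panorama.
struct PanoCoord {
    double u, v;
};

// Maps pixel (px, py) of a pinhole (perspective) output view of size
// outW x outH with horizontal field of view hFov (radians), looking along
// yaw/pitch (radians), to the equirectangular pixel it should sample.
// Assumed conventions: longitude grows to the right, latitude grows upward,
// and the panorama center corresponds to yaw 0, pitch 0.
PanoCoord perspectiveToEquirect(double px, double py, int outW, int outH, double hFov,
                                double yaw, double pitch, int panoW, int panoH) {
    constexpr double kPi = 3.14159265358979323846;
    assert(hFov > 0.0 && hFov < kPi);
    const double f = (outW / 2.0) / std::tan(hFov / 2.0);  // focal length in pixels
    // Camera-space ray through the pixel center: x right, y up, z forward.
    const double x = px + 0.5 - outW / 2.0;
    const double y = outH / 2.0 - (py + 0.5);
    const double z = f;
    // Tilt by pitch (about the x axis), then pan by yaw (about the y axis).
    const double y1 = y * std::cos(pitch) + z * std::sin(pitch);
    const double z1 = -y * std::sin(pitch) + z * std::cos(pitch);
    const double x2 = x * std::cos(yaw) + z1 * std::sin(yaw);
    const double z2 = -x * std::sin(yaw) + z1 * std::cos(yaw);
    const double lon = std::atan2(x2, z2);                   // [-pi, pi]
    const double lat = std::atan2(y1, std::hypot(x2, z2));   // [-pi/2, pi/2]
    return {(lon / (2.0 * kPi) + 0.5) * panoW, (0.5 - lat / kPi) * panoH};
}
```

A GPU implementation would typically evaluate this mapping once per output pixel and then interpolate the source, for example bilinearly. Swapping in a different forward projection yields the other output formats. Cylindrical, stereographic and Panini outputs each define their own pixel-to-ray step.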

The videos below showcase Warp 360 in action. The first video shows a stereo 360-degree stitch with a wide field of view (click and drag to look around).

The next video shows a guided tour with a fixed viewport. It is no longer 360 degrees, which suits different delivery targets and use cases.

Warp 360 includes a library and header, source code and a binary for a sample app, and script files that illustrate how to use the sample app.

Try Out VRWorks 360 Video

VRWorks 360 Video SDK 2.0 is an exciting update which significantly benefits immersive video applications. Learn more about this new technology on the VRWorks 360 Video SDK developer page.

The VRWorks 360 Video SDK 2.0 requires NVIDIA graphics driver version 411.63 or higher. The VRWorks 360 Video SDK 2.0 release includes the API and sample applications along with programming guides for NVIDIA developers.

If you’re not currently an NVIDIA developer and want to check out VRWorks, signing up is easy: just click the “join” button at the top of the main NVIDIA Developer Page.