NVIDIA VRWorks 360 is no longer available or supported. For more information about other VRWorks products, see NVIDIA VRWorks Graphics.

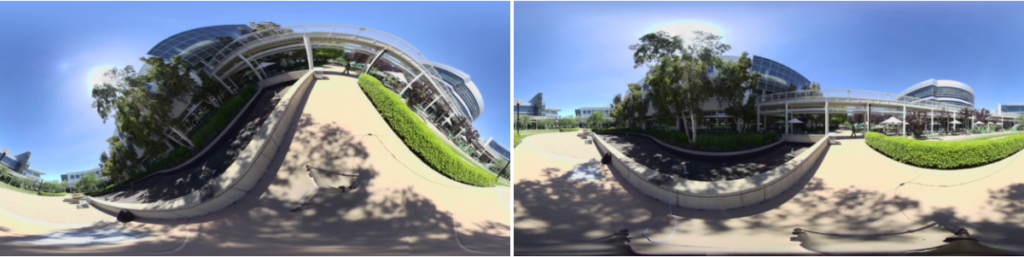

There are over one million VR headsets in use this year, and the popularity of 360 video is growing fast. From YouTube to Facebook, most social media platforms support 360 video, and many cameras on the market simplify capturing these videos. Figure 1 shows an example still image, and an interactive 360 video result appears at the end of this post.

The quality of 360 videos varies widely depending on the resolution of the cameras used to capture them. The need for higher-quality captures has given rise to many solutions, from 3D-printed rigs for GoPro cameras all the way up to professional custom-built 360 camera systems. One particularly difficult task is aligning multiple videos from an array of cameras and stitching them into a perfectly uniform sphere, while removing lens distortion and matching color and tone. To simplify the intricacies of generating video with 360 camera systems, NVIDIA provides the free NVIDIA VRWorks 360 Video SDK, which is being adopted in many production applications from 360 camera makers. This post introduces 360 video camera calibration and the new features in the latest version of the SDK.

The NVIDIA VRWorks 360 Video SDK version 1.1 offers a calibration module that determines the configuration parameters of a multi-camera rig. Calibration is designed to work in real-world settings, not just in the lab. It uses the correlation between scenes observed by several cameras to produce an accurate mapping between the camera coordinate system and the world coordinate system. The SDK supports a wide range of cameras and rig configurations, as long as the rig is reasonably compact (less than 0.5 meters in diameter).

In addition to multi-camera video calibration, version 1.1 of the VRWorks 360 Video SDK includes a number of other improvements.

- The SDK can accept user-supplied estimates of camera parameters and rig properties to speed up calibration convergence.

- Automatic calibration mode: calibration with few or no estimates of rig parameters including camera orientations and lens properties.

- Nonhomogeneous rig support: different camera resolutions or lenses in a single rig.

- Support for normal perspective cameras and wide-angle fisheye camera lenses.

- Built-in rig balancing along the horizon for equatorial ring camera rigs.

- Built-in cross-correlation-based quality measurement of calibration accuracy.

What is Camera Calibration?

Camera rig calibration is the process of deriving the mapping between points in the camera coordinate system and corresponding points in the world coordinate system. This mapping is represented by two types of parameters: intrinsics, which describe the lens of a single camera, and extrinsics, which describe the orientation and position of each camera in the rig. The mapping is derived iteratively, using an error metric to measure convergence.

Camera Intrinsics

The camera intrinsics include:

- Focal length (angular pixel resolution)

- Principal point (center of projection)

- Lens distortion (deviation from ideal perspective or fisheye)

VRWorks intrinsics calibration optimizes a separate focal length and principal point for each camera. It models lens distortion with two radial coefficients for fisheye cameras, and with three radial and two tangential coefficients (the Brown model) for perspective cameras. Cameras of the same model have essentially identical distortion characteristics; VRWorks assumes this holds, which makes the optimization more robust.
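To make the perspective case concrete, here is a minimal C sketch of how focal length, principal point, and Brown distortion combine to project a point from camera coordinates to pixels. It uses the common Brown-Conrady form with radial coefficients k1, k2, k3 and tangential coefficients p1, p2. The struct and function names are hypothetical and not part of the VRWorks API, and the fisheye model, which the post does not spell out, is not covered.

```c
/* Hypothetical container for perspective intrinsics (not a VRWorks type). */
typedef struct {
    double fx, fy;     /* focal length in pixels */
    double cx, cy;     /* principal point in pixels */
    double k1, k2, k3; /* radial distortion coefficients */
    double p1, p2;     /* tangential distortion coefficients */
} PerspectiveIntrinsics;

/* Projects a point (X, Y, Z) in camera coordinates (Z > 0, looking down +Z)
 * to pixel coordinates (u, v) using the Brown-Conrady distortion model. */
static void project_brown(const PerspectiveIntrinsics *in,
                          double X, double Y, double Z,
                          double *u, double *v)
{
    /* Ideal perspective projection onto the normalized image plane. */
    double x = X / Z, y = Y / Z;
    double r2 = x * x + y * y;

    /* Radial term: 1 + k1 r^2 + k2 r^4 + k3 r^6 (Horner form). */
    double radial = 1.0 + r2 * (in->k1 + r2 * (in->k2 + r2 * in->k3));

    /* Radial scaling plus tangential (decentering) terms. */
    double xd = x * radial + 2.0 * in->p1 * x * y + in->p2 * (r2 + 2.0 * x * x);
    double yd = y * radial + in->p1 * (r2 + 2.0 * y * y) + 2.0 * in->p2 * x * y;

    /* Focal length scales to pixels; the principal point shifts the origin. */
    *u = in->fx * xd + in->cx;
    *v = in->fy * yd + in->cy;
}
```

For example, with fx = fy = 1000, a principal point of (960, 540), and k1 = 0.1, the normalized point (0.1, 0.2) lands at pixel (1060.5, 741.0) instead of the undistorted (1060, 740).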

Camera Extrinsics

The extrinsic parameters are the orientation and position of the camera with respect to the rig. The orientation is represented either by Euler angles (yaw, pitch, roll) or by a rotation matrix. The relative position is represented by an (X, Y, Z) translation from the rig center.

VRWorks extrinsics calibration optimizes the orientation (yaw, pitch, and roll) of each camera. It accepts a translation for each camera but does not currently optimize it.

How Calibration Works

For known rig configurations, theoretical estimates are available for some or all of the camera parameters. The VRWorks SDK can consume these estimates for faster and more accurate convergence, and it includes documentation, sample code, and helper functions that demonstrate how to feed these estimates into the pipeline.

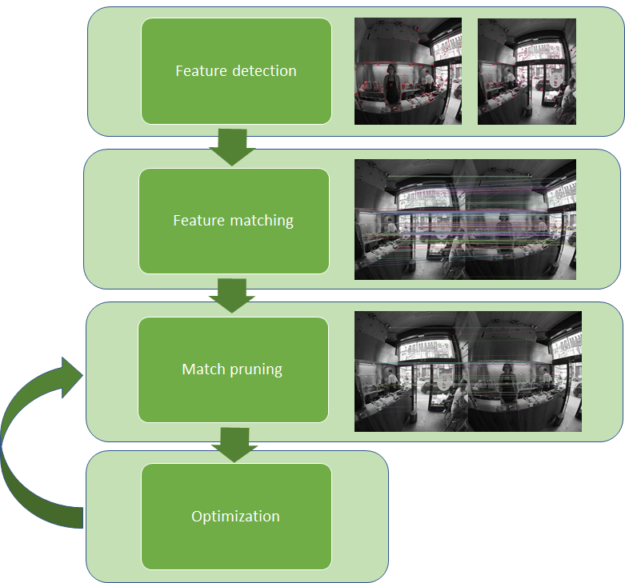

The pipeline implements classic feature-based calibration, as Figure 2 shows.

Step 1: Feature Detection

This stage finds features: strong patterns or corners that can be easily identified and tracked across multiple camera images.

Images from different cameras often differ in exposure and contain radial and perspective distortion, so the same scene can look different across cameras in the rig. VRWorks uses robust feature detection algorithms (in particular, ORB), which tolerate changes in brightness, contrast, rotation, and scale, and to some extent distortion. VRWorks 360 calibration uses a GPU-accelerated feature detector that is both fast and accurate.
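ORB finds its keypoints with the FAST corner test and then adds an orientation and a rotated BRIEF descriptor. As an illustration of the detection step only, here is a plain CPU sketch of the classic FAST-9 segment test. It is not VRWorks' GPU-accelerated detector, and the function name and threshold are hypothetical.

```c
/* Offsets of the 16 pixels on a Bresenham circle of radius 3 around the candidate. */
static const int CIRCLE_DX[16] = {0, 1, 2, 3, 3, 3, 2, 1, 0, -1, -2, -3, -3, -3, -2, -1};
static const int CIRCLE_DY[16] = {-3, -3, -2, -1, 0, 1, 2, 3, 3, 3, 2, 1, 0, -1, -2, -3};

/* FAST-9 segment test: (x, y) is a corner if at least 9 contiguous circle pixels
 * are all brighter than center + t or all darker than center - t.
 * img is a row-major 8-bit grayscale image; (x, y) must be >= 3 pixels from the border. */
static int fast9_is_corner(const unsigned char *img, int width, int x, int y, int t)
{
    int center = img[y * width + x];
    int state[16]; /* +1 brighter, -1 darker, 0 similar */

    for (int i = 0; i < 16; i++) {
        int p = img[(y + CIRCLE_DY[i]) * width + (x + CIRCLE_DX[i])];
        state[i] = (p > center + t) ? 1 : (p < center - t) ? -1 : 0;
    }

    /* Look for a run of 9 equal, nonzero states, wrapping around the circle. */
    for (int start = 0; start < 16; start++) {
        int s = state[start];
        if (s == 0)
            continue;
        int run = 1;
        while (run < 9 && state[(start + run) % 16] == s)
            run++;
        if (run == 9)
            return 1;
    }
    return 0;
}
```

A straight edge fails the test because fewer than 9 contiguous circle pixels differ from the center, while a corner or an isolated blob produces a long contiguous arc.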

Each detected feature is characterized by its keypoint location, radius, orientation, and descriptor (explained below), which are used to match and prune features.

Step 2: Feature Matching

Each detected feature has a descriptor that stores the information relevant to the feature point. The descriptor is a vector of binary or floating-point numbers and ranges in size from 32 to 256 bytes. Feature descriptors are compared using the Hamming distance for binary descriptors and the normalized L2 distance for floating-point descriptors. The best and second-best matches (or correspondences) are extracted for each feature, and matches whose descriptors are far apart are discarded. As Figure 4 shows, incorrect matches (outliers) may remain and need further pruning.
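For binary descriptors such as ORB's 32-byte (256-bit) descriptors, the Hamming distance is simply the number of differing bits. The sketch below does a brute-force search for the best and second-best candidates and rejects the match when the best distance exceeds a threshold. The names and the threshold are hypothetical; the post does not say how VRWorks sets its distance cutoff.

```c
#include <stdint.h>

#define DESC_BYTES 32 /* 256-bit binary descriptor, the size ORB produces */

/* Number of differing bits between two binary descriptors. */
static int hamming_distance(const uint8_t *a, const uint8_t *b)
{
    int dist = 0;
    for (int i = 0; i < DESC_BYTES; i++) {
        uint8_t x = a[i] ^ b[i];
        while (x) { /* clear the lowest set bit until none remain */
            x &= (uint8_t)(x - 1);
            dist++;
        }
    }
    return dist;
}

/* Brute-force match of one query against n candidates stored back to back.
 * Writes the best and second-best distances; returns the best candidate's index,
 * or -1 if even the best distance exceeds max_dist. */
static int match_descriptor(const uint8_t *query, const uint8_t *cands, int n,
                            int max_dist, int *best, int *second)
{
    int best_idx = -1;
    *best = *second = 8 * DESC_BYTES + 1; /* larger than any possible distance */
    for (int i = 0; i < n; i++) {
        int d = hamming_distance(query, cands + i * DESC_BYTES);
        if (d < *best) {
            *second = *best;
            *best = d;
            best_idx = i;
        } else if (d < *second) {
            *second = d;
        }
    }
    return (*best <= max_dist) ? best_idx : -1;
}
```

Returning the second-best distance lets a caller apply a ratio test (keeping a match only when the best distance is clearly smaller than the second best), a common heuristic; whether VRWorks uses it is not stated in the post.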

Step 3: Match Pruning

Matches selected purely on descriptor distance are prone to false matches, because the scene may contain patterns or regions that look similar. The VRWorks SDK prunes these outliers using homography constraints and heuristics. For instance, if estimates of the camera parameters are available and the relative spatial positions of the cameras are known, spurious matches between cameras with no overlap can be pruned. Similarly, when reliable estimates are known, the epipolar constraint can be used to efficiently weed out gross outliers.

The outcome of the pipeline at this stage is a pruned set of reliable correspondences and their associated descriptors, as Figure 5 shows. The next stage is iterative optimization. Because the initial parameter estimates may not be optimal, the match pruning step can be repeated as optimization produces more accurate camera parameters.

Step 4: Parameter Optimization

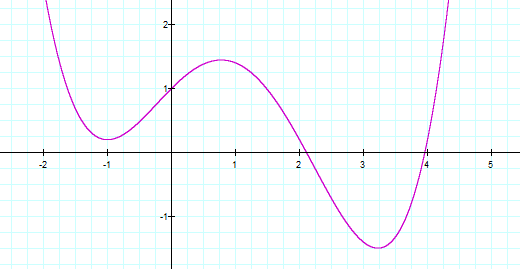

Optimization is part art and part science. The science is the mathematics of following derivatives (gradients) in the direction that reduces error. The error is a function of several parameters (orientation, focal length, and so on), and together the error and its parameters form an error landscape. The optimization proceeds in multiple steps, changing direction in parameter space at every step to descend downhill in the error landscape.

A common problem is local minima, as Figure 6 shows. If we start going downhill from the left, we get stuck in the local minimum at -1 instead of proceeding to the global minimum around +3.
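The local-minimum trap is easy to reproduce with plain gradient descent on a made-up one-dimensional error function, f(x) = x^4/4 - 2x^3/3 - 3x^2/2. Its gradient x(x + 1)(x - 3) vanishes at a local minimum at -1, a local maximum at 0, and a lower, global minimum at 3. The function only mirrors the description; it is not the curve plotted in Figure 6.

```c
#include <math.h>

/* Toy error function: local minimum at x = -1, global minimum at x = 3. */
static double toy_error(double x)
{
    return x * x * x * x / 4.0 - 2.0 * x * x * x / 3.0 - 1.5 * x * x;
}

/* Its derivative, f'(x) = x (x + 1) (x - 3). */
static double toy_gradient(double x)
{
    return x * (x + 1.0) * (x - 3.0);
}

/* Plain gradient descent: repeatedly step against the gradient until it is ~0. */
static double gradient_descent(double x, double step, int max_iters)
{
    for (int i = 0; i < max_iters; i++) {
        double g = toy_gradient(x);
        if (fabs(g) < 1e-12) /* flat enough: at a stationary point */
            break;
        x -= step * g;
    }
    return x;
}
```

Starting at x = -2 with a step of 0.01, the descent settles at -1; starting at x = 1, it reaches the global minimum at 3. The outcome depends entirely on the starting point, which is why good initial estimates matter.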

This is where the “art” comes into play. There are various heuristics that can avoid these problems. One method is to start by optimizing over a small number of parameters in one phase, then increase the number or flexibility of parameters in the subsequent phases. This is called relaxation.
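A relaxation schedule can be sketched as gradient descent in phases, where each phase frees more parameters and the rest stay frozen at their current values. The three-parameter quadratic below is a stand-in for the real calibration error, and ordering parameters from most to least robust (for example, yaw before focal length) is an assumption for illustration; all names are hypothetical.

```c
#define NPARAMS 3

/* Gradient of a toy coupled quadratic standing in for the calibration error:
 * f = (a-1)^2 + 2(b-2)^2 + 3(c+1)^2 + (a-1)(b-2), minimum at (1, 2, -1). */
static void toy_grad(const double p[NPARAMS], double g[NPARAMS])
{
    g[0] = 2.0 * (p[0] - 1.0) + (p[1] - 2.0);
    g[1] = 4.0 * (p[1] - 2.0) + (p[0] - 1.0);
    g[2] = 6.0 * (p[2] + 1.0);
}

/* Relaxation: in phase k only the first n_free[k] parameters move;
 * later parameters stay frozen until a later phase frees them. */
static void relaxed_optimize(double p[NPARAMS], const int *n_free, int nphases,
                             double step, int iters_per_phase)
{
    double g[NPARAMS];
    for (int phase = 0; phase < nphases; phase++) {
        for (int it = 0; it < iters_per_phase; it++) {
            toy_grad(p, g);
            for (int i = 0; i < n_free[phase]; i++)
                p[i] -= step * g[i];
        }
    }
}
```

With the schedule {1, 2, 3}, the first phase moves only the first parameter, the second adds the next one, and the final phase optimizes all three, ending at the minimum (1, 2, -1).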

Another heuristic is to use alternation, where we alternate between optimization of one set of parameters and another. This avoids the interference that can sometimes occur between the two sets when they are far from the optimum.
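Alternation can be illustrated with a toy error f(a, b) = (a - 1)^2 + (b - 2)^2 + (a - 1)(b - 2), where a and b stand in for two coupled parameter sets. Each half-step minimizes exactly over one variable while the other is held fixed (block coordinate descent). The function and names are hypothetical.

```c
/* Toy coupled error with its minimum at (a, b) = (1, 2). */
static double toy_f(double a, double b)
{
    return (a - 1) * (a - 1) + (b - 2) * (b - 2) + (a - 1) * (b - 2);
}

/* Alternation: minimize over a with b fixed, then over b with a fixed, and repeat.
 * Setting df/da = 2(a - 1) + (b - 2) = 0 gives a = 1 - (b - 2) / 2,
 * and df/db = 2(b - 2) + (a - 1) = 0 gives b = 2 - (a - 1) / 2. */
static void alternate(double *a, double *b, int rounds)
{
    for (int r = 0; r < rounds; r++) {
        *a = 1.0 - 0.5 * (*b - 2.0); /* optimize set A, set B frozen */
        *b = 2.0 - 0.5 * (*a - 1.0); /* optimize set B, set A frozen */
    }
}
```

Each round shrinks the remaining error by a factor of four here, so starting from (0, 0) the iterates approach the minimum at (1, 2) within a few dozen rounds.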

VRWorks calibration tries to optimize the rig parameters so that the feature correspondences coincide when individual camera images are stitched together into a spherical image. The error for each correspondence is the angular difference between rays. The error function (or objective function in optimization parlance) is the sum of the angular differences over all correspondences.

Step 5: Iterate Over Pruning and Optimization

To ensure that the most accurate set of matches is selected for optimization, VRWorks calibration performs multiple iterations of match pruning followed by parameter optimization.
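The alternation between pruning and optimization can be shown with a one-dimensional toy: each match votes for the yaw offset between two cameras, the optimization step takes the mean over the current inliers, and the pruning step drops votes farther than a threshold from that mean, repeating until the inlier set stops changing. The data model, threshold, and names are hypothetical stand-ins, not the SDK's procedure.

```c
#include <math.h>

/* Iterative prune/optimize on 1-D yaw votes (degrees).
 * inlier must hold n ints; on return it flags the surviving matches.
 * Returns the final estimate; *n_inliers receives the inlier count. */
static double estimate_yaw_offset(const double *votes, int n, double threshold,
                                  int *inlier, int *n_inliers)
{
    double estimate = 0.0;
    for (int i = 0; i < n; i++)
        inlier[i] = 1; /* start with every match */

    for (;;) {
        /* Optimization step: least-squares estimate (the mean) over current inliers. */
        double sum = 0.0;
        int count = 0;
        for (int i = 0; i < n; i++)
            if (inlier[i]) {
                sum += votes[i];
                count++;
            }
        *n_inliers = count;
        if (count == 0)
            break; /* everything was pruned: no usable estimate */
        estimate = sum / count;

        /* Pruning step: drop matches that disagree with the current estimate. */
        int removed = 0;
        for (int i = 0; i < n; i++)
            if (inlier[i] && fabs(votes[i] - estimate) > threshold) {
                inlier[i] = 0;
                removed++;
            }
        if (removed == 0)
            break; /* converged: the inlier set is stable */
    }
    return estimate;
}
```

With votes {29.5, 30.2, 30.1, 29.8, 30.4, 120, 95} and a 25-degree threshold, the first mean is pulled to about 52.1 by the two outliers, the outliers are pruned, and the second pass settles on 30.0 with five inliers.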

VRWorks 360 Video SDK 1.1: New and Improved Features

Version 1.1 of the VRWorks 360 Video SDK brings many new features that simplify the 360 stitching process, including more robust automated calibration, as well as significant performance improvements with support for Volta GPUs.

Automatic Calibration

Camera calibration with few or no estimates is a challenging problem, because optimization software relies on initial guesses being reasonably close to the actual parameters; otherwise it can converge to a local minimum with incorrect parameters. The VRWorks 360 Calibration SDK tackles this problem by automatically applying a series of heuristics and following up on the parameters that seem promising. These heuristics include automatic fisheye radius detection, focal length probing, and assumptions about common configurations of cameras in a rig. Various sets of initial parameters are fed through the standard calibration pipeline (feature detection and matching, iterative pruning, and optimization), and the parameters that yield the best result are chosen. If the user supplies some initial parameters, the calibration API takes advantage of them to converge more quickly.

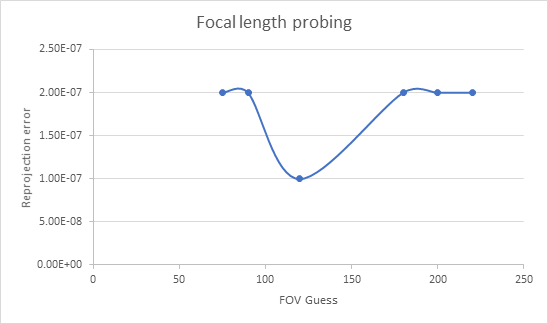

Figure 7 shows a technique called Focal Length Probing, used to compute the focal length automatically. Several focal length guesses are tried, covering fields of view from 75 to 220 degrees, while the other camera parameters, such as rotation, are optimized. The focal length with the minimum residual error is then selected as the initial estimate.

Rig Balancing

This feature automatically detects a dominant axis and aligns the up vector of the calibrated rig with that axis; if no axis is sufficiently dominant, the first camera is used to define the up axis. The assumption is that the cameras are distributed mostly around the equator, possibly with some tilt. Averaging (inertial balancing) can eliminate wobble. Note that rig balancing does not perform horizon detection: the balancing is purely a function of the distribution of the cameras in the rig, not of the content they capture.

You can override the default auto-balancing in the calibration API by choosing the freeze-first-camera orientation value instead of auto-balance:

```c
// Select how the panorama orientation is determined.
// uint32_t orientationType = NVCALIB_ORIENT_PANO_AUTO_BALANCE; // default
uint32_t orientationType = NVCALIB_ORIENT_PANO_FREEZE_FIRST_CAMERA;
nvcalibResult result = nvcalibSetOption(hInstance, NVCALIB_OPTION_PANORAMA_ORIENTATION,
                                        NVCALIB_DATATYPE_UINT32, 1, &orientationType);
```

Automatic Fisheye Radius Detection

If the fisheye radius has not been provided, the SDK attempts to derive it from the source images. This works reliably for full-frame fisheye and cropped fisheye sources. We have observed a high degree of accuracy in our experiments, although lens flares, vignetting, and other lighting characteristics of the source images can affect detection accuracy.

Results and Conclusions

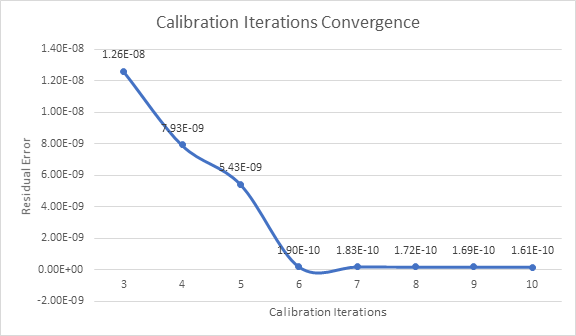

Figure 9 shows how calibration in the VRWorks 360 Video SDK 1.1 converges, given no initial estimates, for the ramen shop footage in Figure 10.

The residual error decreases to less than 2e-10 after six iterations.

For example, the residual error after iteration 3 is 1.26e-08, indicating that the camera parameters are not yet well calibrated. This is apparent from the misaligned overlap in the left image of Figure 10.

After ten iterations, the residual error drops to 1.6087e-10 and the calibration parameters are accurate, producing a much better aligned overlap. The following video shows the final 360 video result.

Try NVIDIA VRWorks 360 Video SDK 1.1 Today!

Are you building your own camera rig or creating editing software? Would you like to add 360 stitching to your toolset? NVIDIA VRWorks 360 Video SDK 1.1 is available now, free for registered developers. Look forward to future updates, and feel free to send us feedback at nvstitch-support@nvidia.com or reach out using the comments below!