This post was originally published on the Mellanox blog.

At Red Hat Summit 2018, NVIDIA Mellanox announced an open network functions virtualization infrastructure (NFVI) and cloud data center solution. The solution combined Red Hat Enterprise Linux cloud software with in-box support of NVIDIA Mellanox NIC hardware. Our close collaboration and joint validation with Red Hat yielded a fully integrated solution that delivers high performance and efficiency, and which is easy to deploy. The solution includes open source datapath acceleration technologies, including Data Plane Development Kit (DPDK) and Open vSwitch (OvS) acceleration.

Private cloud and communication service providers are transforming their infrastructure to achieve the agility and efficiency of hyperscale public cloud providers. This transformation is based on two fundamental tenets: disaggregation and virtualization.

Disaggregation decouples the network software from the underlying hardware. Server and network virtualization drive higher efficiencies through the sharing of industry-standard servers and networking gear using a hypervisor and overlay networks. These disruptive capabilities offer benefits such as flexibility, agility, and software programmability. However, they also impose significant network performance penalties, because the kernel-based hypervisor and virtual switching consume host CPU cycles inefficiently for network packet processing. Over-provisioning CPU cores to compensate for degraded network performance leads to high CapEx, defeating the goal of gaining hardware efficiency through server virtualization.

To address these challenges, Red Hat and NVIDIA Mellanox brought to market a highly efficient, hardware-accelerated, and tightly integrated NFVI and cloud data center solution combining Red Hat Enterprise Linux OS with NVIDIA Mellanox ConnectX-5 network adapters running DPDK and the Accelerated Switching and Packet Processing (ASAP2) OvS offload technologies.

ASAP2 OvS offload acceleration

An OvS hardware offload solution accelerates the slow software-based virtual switch packet performance by an order of magnitude. Essentially, OvS hardware offloads offer the best of both worlds: hardware acceleration of the data path along with an unmodified OvS control path for flexibility and programming of match-action rules. NVIDIA Mellanox is a pioneer of this groundbreaking technology and has led the open architecture needed to support this innovation within the OvS, Linux kernel, DPDK, and OpenStack open-source communities.

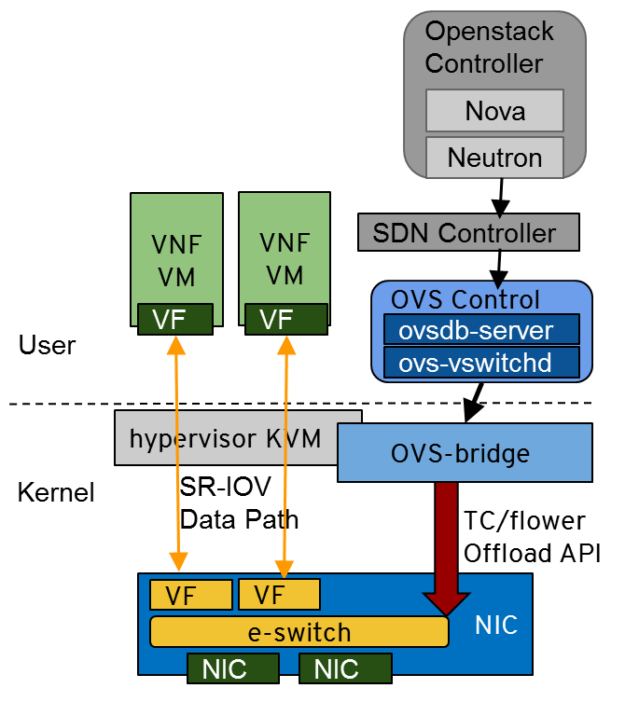

Figure 1 shows the NVIDIA Mellanox open ASAP2 OvS offload technology. It fully and transparently offloads virtual switch and router datapath processing to the NIC embedded switch (e-switch). NVIDIA Mellanox contributed to the upstream development of the core framework and APIs such as TC Flower, making them available in the Linux kernel and OvS versions. These APIs dramatically accelerate networking functions such as overlays, switching, routing, security, and load balancing.

As verified during the performance tests conducted in Red Hat labs, NVIDIA Mellanox ASAP2 technology delivered near-100G line-rate throughput for large virtual extensible LAN (VXLAN) packets without consuming any CPU cycles. For small packets, ASAP2 boosted the OvS VXLAN packet rate by more than 10X, from 5 million packets per second using 12 CPU cores to 55 million packets per second consuming zero CPU cores.
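To put the small-packet figures in perspective, here is a minimal arithmetic sketch that uses only the numbers quoted above. The function names are illustrative, and the linear-scaling assumption in `cores_to_match` is ours, not a measured result; software switching rarely scales perfectly with core count.

```python
# Figures quoted in the text (OvS VXLAN, small packets).
SOFTWARE_MPPS = 5.0    # software OvS packet rate, million packets per second
SOFTWARE_CORES = 12    # CPU cores consumed by the software data path
OFFLOAD_MPPS = 55.0    # ASAP2-offloaded packet rate, million packets per second


def speedup(baseline_mpps: float, accelerated_mpps: float) -> float:
    """Return how many times faster the accelerated path is."""
    return accelerated_mpps / baseline_mpps


def mpps_per_core(mpps: float, cores: int) -> float:
    """Return the packet rate each CPU core sustains, in Mpps."""
    return mpps / cores


def cores_to_match(target_mpps: float, baseline_mpps: float,
                   baseline_cores: int) -> float:
    """Cores the software path would need to reach target_mpps.

    Assumes perfectly linear scaling with core count (a simplifying
    assumption made for illustration only).
    """
    return target_mpps / mpps_per_core(baseline_mpps, baseline_cores)


if __name__ == "__main__":
    print(f"Speedup: {speedup(SOFTWARE_MPPS, OFFLOAD_MPPS):.1f}x")
    print(f"Software rate per core: "
          f"{mpps_per_core(SOFTWARE_MPPS, SOFTWARE_CORES):.2f} Mpps")
    print(f"Cores needed in software at linear scaling: "
          f"{cores_to_match(OFFLOAD_MPPS, SOFTWARE_MPPS, SOFTWARE_CORES):.0f}")
```

Under these figures the offloaded rate is 11 times the software rate, which is why the comparison is usually framed in terms of CPU cores freed rather than raw throughput alone.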

Cloud providers, communications service providers, and enterprises can achieve total infrastructure efficiency from an ASAP2-based, high-performance solution while freeing up CPU cores to pack more virtual network functions (VNFs) and cloud-native applications onto the same server. This benefits you by reducing server footprint and achieving substantial CapEx savings. ASAP2 has been available as a tech preview since Red Hat OpenStack Platform (OSP) 13 and RHEL 7.5, and has been generally available since OSP 16.1 and RHEL 8.2.

OVS-DPDK acceleration

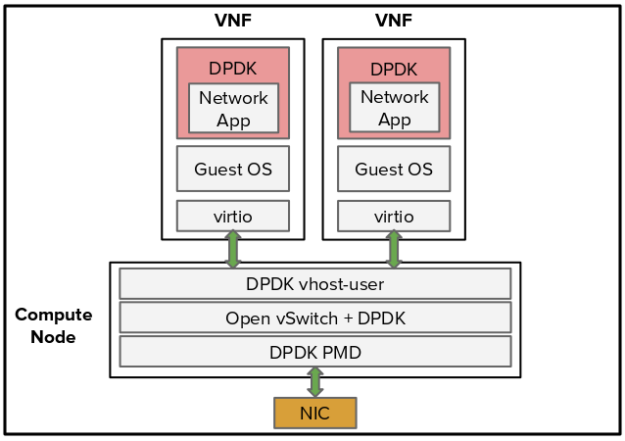

If you want to maintain the existing slower OvS virtio data path but still need some acceleration, you can use the NVIDIA Mellanox DPDK solution to boost OvS performance. Figure 2 shows that the OvS over DPDK solution uses DPDK software libraries and the poll mode driver (PMD) to substantially improve the packet rate at the expense of consuming CPU cores.

Using open source DPDK technology, NVIDIA Mellanox ConnectX-5 NICs deliver the industry’s best bare-metal packet rate of 139 million packets per second for running OvS, VNF, or cloud applications over DPDK. They are fully Red Hat–supported for RHEL 7.5.
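For context on that packet rate, the following sketch computes the theoretical packet-rate ceiling of an Ethernet link. The 100 Gb/s link speed and 64-byte frame size are assumptions chosen for illustration; the text does not state the conditions under which the 139 million packets per second figure was measured.

```python
# Extra bytes each frame occupies on the wire beyond the frame itself:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
ETHERNET_OVERHEAD_BYTES = 20

LINK_BPS = 100e9      # assumption: a single 100 Gb/s Ethernet port
FRAME_BYTES = 64      # assumption: minimum-size Ethernet frames
QUOTED_PPS = 139e6    # packet rate quoted in the text


def max_packet_rate(link_bps: float, frame_bytes: int) -> float:
    """Return the theoretical maximum packets per second for a link."""
    bits_on_wire = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


if __name__ == "__main__":
    ceiling = max_packet_rate(LINK_BPS, FRAME_BYTES)
    print(f"Theoretical ceiling: {ceiling / 1e6:.1f} Mpps")
    print(f"Quoted rate as a share of the ceiling: {QUOTED_PPS / ceiling:.1%}")
```

If those assumptions hold, the quoted rate sits close to the wire-speed limit for minimum-size frames; with larger frames or a different link speed the ceiling changes accordingly.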

Network architects often face many options when choosing the technology that best fits their IT infrastructure needs. When it comes to deciding between ASAP2 and DPDK, the decision is thankfully much easier because of the substantial benefits of ASAP2 over DPDK.

Because they use the SR-IOV data path, ASAP2 OvS offloads achieve dramatically higher performance than OvS over DPDK, which uses the traditional, slower virtio data path. Further, ASAP2 saves CPU cores by offloading flows to the NIC, whereas DPDK consumes CPU cores to process the packets sub-optimally. Like DPDK, ASAP2 OvS offload is an open-source technology that’s fully supported in the open source communities and is gaining wider adoption in the industry.

Summary

NVIDIA Mellanox is an open networking company and is among the top 10 contributors in the Linux kernel community. Through our cutting-edge NIC technologies and joint innovation with open software leaders such as Red Hat, we have eliminated the performance barriers associated with deploying modern cloud data center and NFV solutions. These groundbreaking performance numbers are achieved without sacrificing valuable server resources or ease of deployment. The intelligence and parallel flow processing capabilities of the NVIDIA Mellanox ConnectX family of Ethernet adapters impose minimal burden on precious CPU and memory resources, empowering NFV platforms to do what they are supposed to do: network services and application processing, rather than handling packet I/O.

For more information, see the following resources: