NVIDIA Aerial CUDA-Accelerated RAN

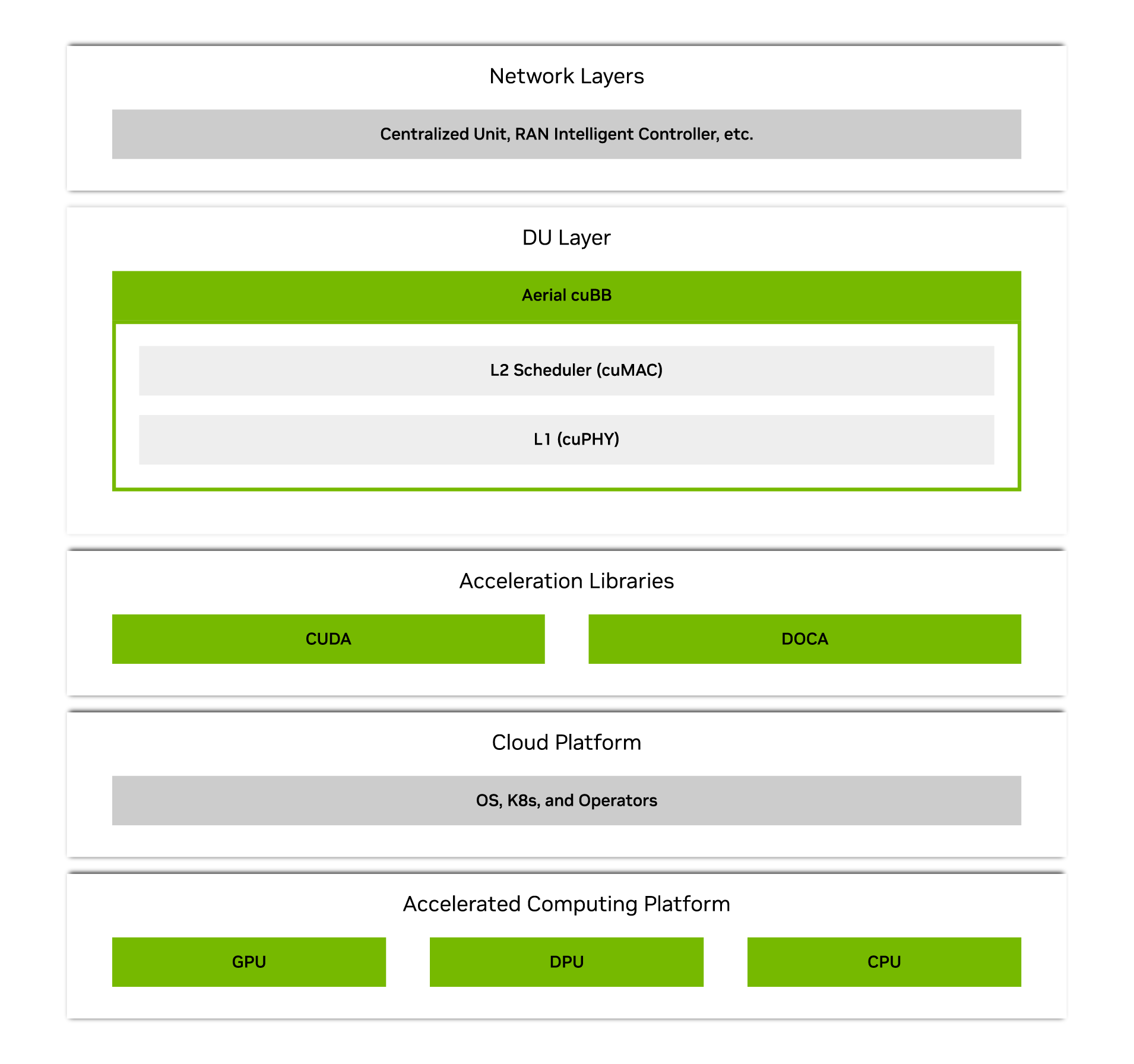

NVIDIA Aerial™ CUDA®-Accelerated RAN is an application framework for building commercial-grade, software-defined, GPU-accelerated, cloud-native 5G and 6G networks. The platform supports full-inline GPU acceleration of layers 1 (L1) and 2 (L2) of the 5G stack. The Aerial CUDA-Accelerated RAN platform is the key building block for the accelerated 5G virtualized distributed unit (vDU) and has been deployed in commercial and research networks.

Join the NVIDIA 6G Developer Program to access Aerial CUDA-Accelerated RAN software code and documentation.

Key Features

100% Software Defined

The NVIDIA Aerial CUDA-Accelerated RAN platform is a fully software-defined, scalable, and highly programmable 5G RAN acceleration platform for L1 and L2+ layers.

High Performance and AI Ready

With GPU-accelerated processing, complex computations run faster than on non-GPU solutions. Performance is improved for L1 and L2 functions, and future AI RAN techniques will run more efficiently on the same GPU platform.

Scalable and Multi-Tenant

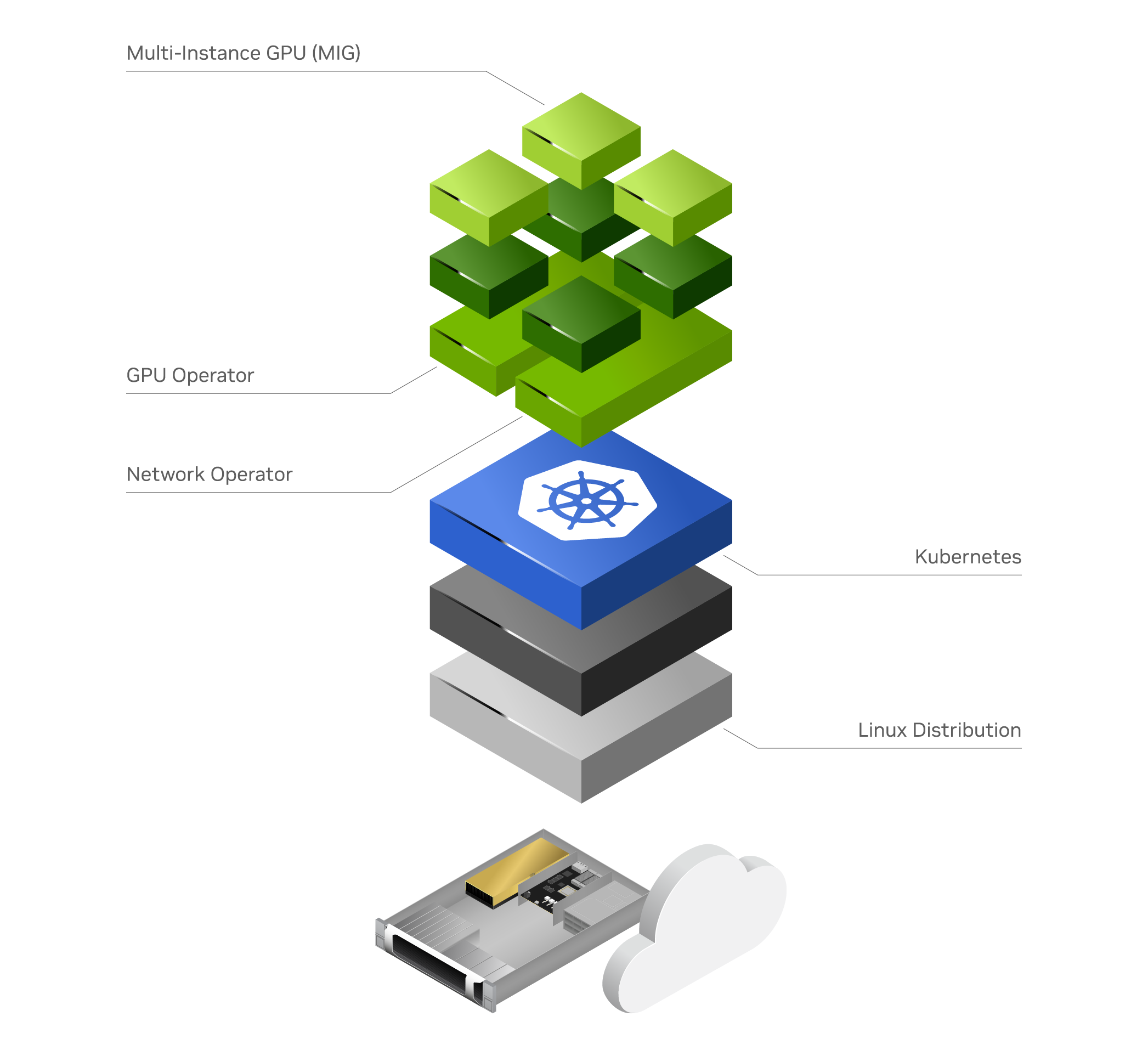

The platform is scalable, based on Kubernetes, and deployable in the same shared infrastructure as generative AI applications. This optimizes resource utilization and enables cost-effective, energy-efficient deployment.

Platform Components

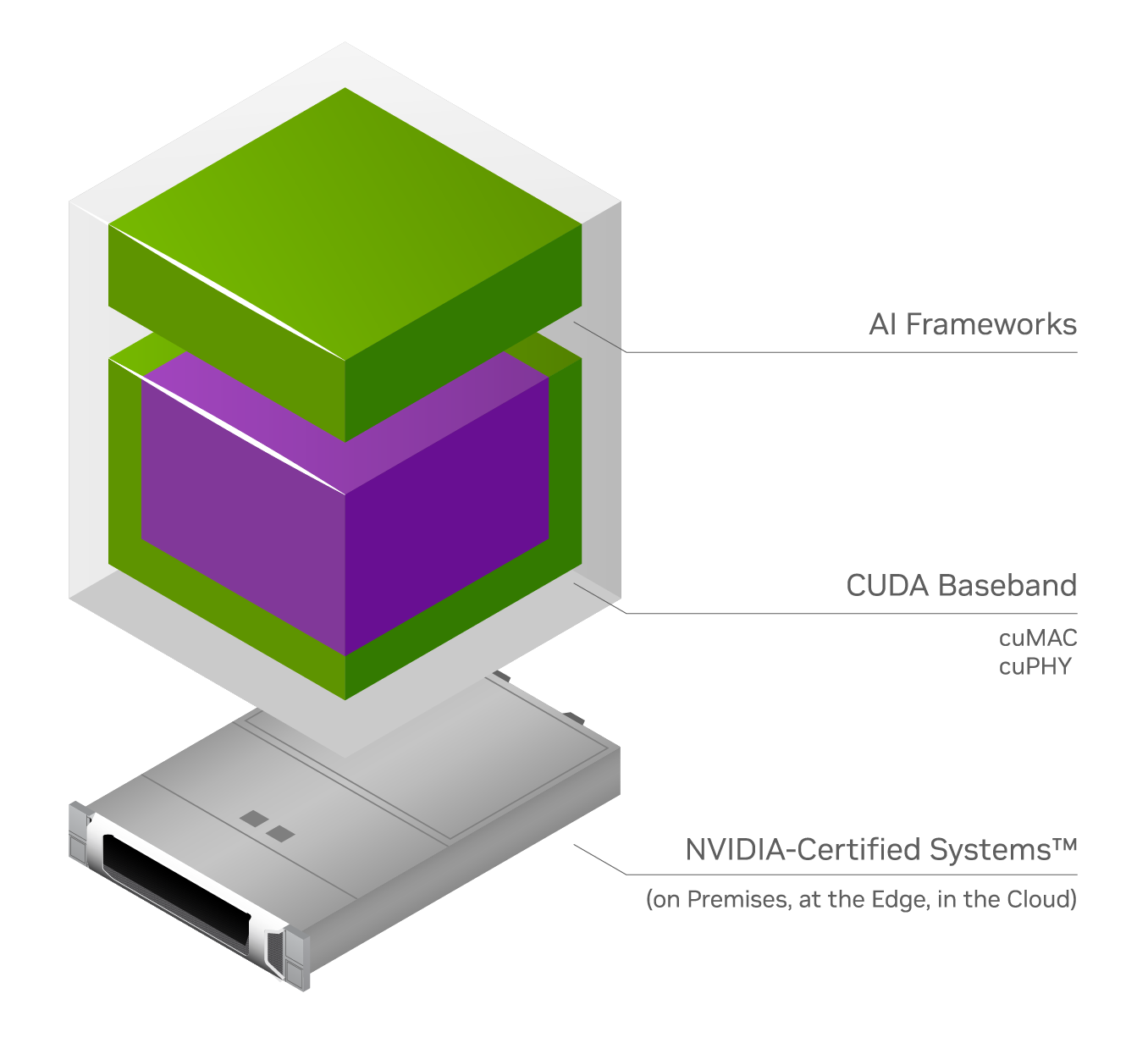

CUDA Baseband (cuBB)

cuBB provides GPU-accelerated baseband processing for L1 and L2. It delivers unprecedented throughput and efficiency by keeping all physical (PHY) layer processing within high-performance GPU memory. cuBB supports 5G today and will evolve to 6G in the future. cuBB includes two GPU-accelerated libraries:

cuPHY is a 3GPP-compliant, real-time, GPU-accelerated, full-inline implementation of the data and control channels for the RAN PHY L1. It provides an L1 high-PHY library, which offers unparalleled scalability by using the GPU’s massive computing power and high degree of parallelism to process the compute-intensive parts of the L1. It also supports fronthaul extension and multi-user multiple-input multiple-output (MU-MIMO) technology.
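To illustrate why L1 processing suits a highly parallel processor, here is a minimal sketch of single-tap zero-forcing equalization, a generic textbook PHY step. It is not cuPHY code and does not use any cuPHY API; the function name `equalize` and its arguments are assumptions made for illustration. The point is that each subcarrier is corrected independently of the others, so on a GPU each element could in principle be handled by its own thread.

```python
from typing import List


def equalize(received: List[complex], channel: List[complex]) -> List[complex]:
    """Single-tap zero-forcing equalization: x_hat[k] = y[k] / h[k].

    Hypothetical helper for illustration only. Every subcarrier k is
    processed independently, which is what makes this step easy to
    parallelize across many GPU threads.
    """
    if len(received) != len(channel):
        raise ValueError("received and channel must have the same length")
    # No iteration depends on any other: an embarrassingly parallel loop.
    return [y / h for y, h in zip(received, channel)]


if __name__ == "__main__":
    sent = [1 + 1j, -1 + 1j]          # transmitted symbols (QPSK-like)
    h = [0.5 + 0j, 2j]                # assumed per-subcarrier channel gains
    y = [x * g for x, g in zip(sent, h)]  # noiseless received samples
    print(equalize(y, h))
```

A production PHY would also handle noise, multiple antennas, and channel estimation; this sketch only shows the per-element independence.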

cuMAC is a CUDA-based platform for offloading scheduler functions from the medium access control (MAC) L2 stack in centralized and distributed units (CU/DUs) and accelerating the functions with GPUs. cuMAC provides a C/C++ API to enable implementations of advanced scheduler algorithms that improve overall spectral efficiency for both standard MIMO and massive MIMO RAN deployments. cuMAC extends GPU-accelerated processing beyond L1 to enable coordinated multicell scheduling (CoMS) in the 5G MAC scheduler.
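As a rough illustration of the kind of scheduler function that could be offloaded, the sketch below implements a generic proportional-fair (PF) allocation in plain Python. It is not the cuMAC API (which is C/C++) and is not taken from the Aerial software; the names `proportional_fair_schedule` and `update_average`, the data layout, and the smoothing factor `alpha` are all assumptions. For each resource block, the scheduler picks the user equipment (UE) with the highest ratio of achievable rate to long-term average throughput.

```python
from typing import Dict, List


def proportional_fair_schedule(
    inst_rate: Dict[str, List[float]],
    avg_throughput: Dict[str, float],
) -> List[str]:
    """Assign each resource block to the UE with the highest PF metric.

    inst_rate[ue][rb] is the rate the UE could achieve on resource block rb
    in this slot; avg_throughput[ue] is its long-term average throughput.
    Hypothetical data layout, chosen only for this sketch.
    """
    ues = sorted(inst_rate)  # sorted order gives deterministic tie-breaking
    num_rbs = len(inst_rate[ues[0]])
    allocation = []
    for rb in range(num_rbs):
        # PF metric: achievable rate divided by historical average throughput.
        best = max(
            ues,
            key=lambda ue: inst_rate[ue][rb] / max(avg_throughput[ue], 1e-9),
        )
        allocation.append(best)
    return allocation


def update_average(avg: float, served_rate: float, alpha: float = 0.1) -> float:
    """Exponential moving average of throughput, fed back into the PF metric."""
    return (1.0 - alpha) * avg + alpha * served_rate


if __name__ == "__main__":
    rates = {"ue_a": [10.0, 2.0], "ue_b": [4.0, 4.0]}
    averages = {"ue_a": 1.0, "ue_b": 1.0}
    print(proportional_fair_schedule(rates, averages))
```

The per-resource-block decisions are independent of one another, and a multicell scheduler repeats them across many cells and UEs, which is one reason this kind of workload is a candidate for GPU acceleration.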

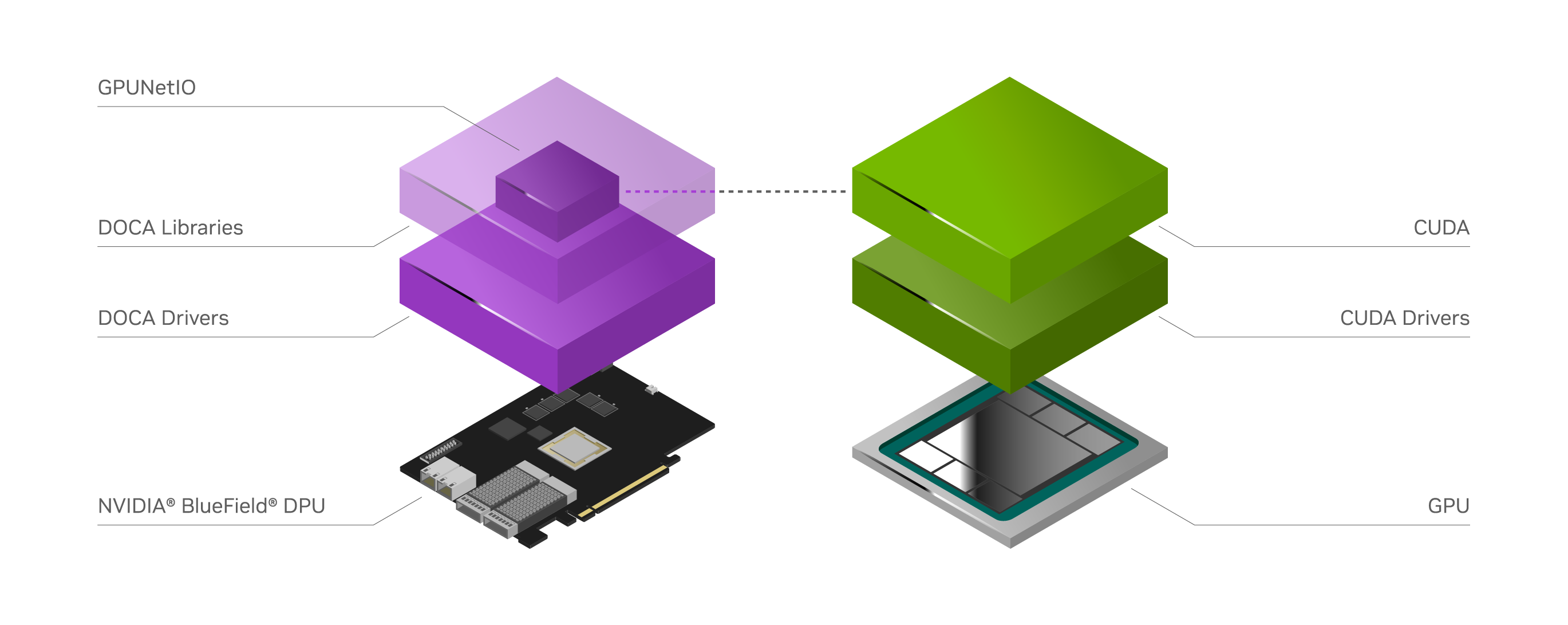

DOCA GPUNetIO

DOCA GPUNetIO enables GPU-centric solutions that remove the CPU from the critical path. Used in Aerial, it provides optimized IO and packet processing by exchanging packets directly between GPU memory and a GPUDirect®-capable NVIDIA DPU. This direct memory access (DMA) path enables fast IO processing and unleashes the full potential of inline acceleration.

Read Blog: DOCA GPUNetIO for Aerial Inline Processing.

Aerial Cloud-Native Technologies

The NVIDIA Aerial platform’s cloud-native architecture allows RAN functions to be realized as microservices in containers orchestrated and managed by Kubernetes.

The NVIDIA GPU Operator uses the operator framework within Kubernetes to automate the management of all NVIDIA software components needed to provision a GPU. The NVIDIA Network Operator works with the GPU Operator to enable GPUDirect RDMA on compatible systems. The goal of the Network Operator is to manage the networking-related components, while enabling execution of RDMA and GPUDirect RDMA workloads in a Kubernetes cluster.
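Once GPUs are provisioned in a cluster, a workload typically asks for them through the `nvidia.com/gpu` extended resource advertised by the NVIDIA device plugin. The sketch below builds a minimal Pod manifest with that request and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `gpu_pod_manifest`, the Pod name, and the container image are placeholders chosen for illustration; they are not Aerial artifacts.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests NVIDIA GPUs.

    Hypothetical helper: name and image are placeholders supplied by the caller.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resource exposed by the NVIDIA device plugin.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder names; replace with a real image before applying.
    manifest = gpu_pod_manifest("l1-worker", "example.com/ran/l1-worker:latest")
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it would schedule the Pod only onto a node with a free GPU, assuming the device plugin is running there.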

Cloud-native vDU and vCU RAN software suites are designed to be fully open and automated for deployment and consolidated operation, supporting 3GPP and O-RAN interfaces on private, public, or hybrid cloud infrastructure. They leverage the benefits of cloud-native architecture, including horizontal and vertical scaling, autohealing, and redundancy.

Use Cases

Public Telco vRAN

Public telco vRAN is a full-stack solution built on NVIDIA Aerial CUDA-Accelerated RAN and running on NVIDIA accelerated computing hardware. This high-performance, software-defined, fully programmable, cloud-native, AI-enabled solution can support multiple O-RAN configurations with the same hardware.

Private 5G for Enterprises

Using the NVIDIA Aerial CUDA-Accelerated RAN platform, enterprises and communication service providers (CSPs) can deploy AI on 5G, simplifying deployment of AI applications over private 5G networks. NVIDIA accelerated computing provides a unified platform that brings together developments in AI and 5G at the edge to accelerate the digital transformation of enterprises across all industries.

AI and 5G Data Center

The Aerial CUDA-Accelerated RAN platform supports multi-tenant workloads like generative AI and 5G in the same infrastructure. With this solution, telcos and CSPs can improve utilization by running generative AI and RAN in the same data center. This realizes the vision of “RAN-in-the-Cloud,” delivering the best flexibility, total cost of ownership, and return on investment.

6G Research

Aerial CUDA-Accelerated RAN is part of the NVIDIA 6G Research Cloud Platform, which provides access to a suite of tools and frameworks for academic and industry-based researchers. A primary focus for the 6G Research Cloud Platform is to facilitate research that brings AI and machine learning to all layers of the telecom stack, enabling research spanning RAN L1, L2, and core nodes.

Resources

Join the 6G Developer Program

Developers and researchers in the 6G Developer Program get access to software, documentation, and resources.