NVIDIA AI Aerial

NVIDIA AI Aerial™ is a suite of accelerated computing platforms, software libraries, and tools to build, train, simulate, and deploy AI-native wireless networks. It enables developers and researchers to go from rapid prototyping to commercial development of AI-RAN solutions for 5G and 6G that telcos can deploy.

NVIDIA AI Aerial Software

Explore open-source software libraries and tools for building, training, simulating, and deploying AI-native wireless networks. NVIDIA Aerial software is available on GitHub, and NVIDIA Sionna™ is available through the Sionna Developer page.

NVIDIA Sionna

A GPU-accelerated, differentiable, open-source library for 5G and 6G communications research. It features a lightning-fast ray tracer for radio propagation, a link-level simulator, and system-level simulation capabilities.

Read Sionna Documentation

NVIDIA Aerial Framework

A framework for generating high-performance, CUDA®-accelerated 5G/6G pipelines from Python or MATLAB. It includes a runtime for executing the pipelines on NVIDIA Aerial™ RAN computer platforms.

Read Aerial Framework Documentation

NVIDIA Aerial CUDA-Accelerated RAN

NVIDIA CUDA libraries for layer 1 (L1) and layer 2 (L2) RAN, to build commercial-grade and software-defined 5G and 6G radio access networks.

Read Aerial CUDA-Accelerated RAN Documentation

NVIDIA Aerial Omniverse Digital Twin

A platform that uses NVIDIA Omniverse™ to create next-generation network digital twins, enabling physically accurate virtual 5G and 6G wireless networks, from single towers to full cities.

Hardware Platforms

NVIDIA AI Aerial Research Platforms

These systems enable the building, training, and over-the-air testing of AI-native wireless innovations for 5G and 6G.

Sionna Research Kit

Built on an open-source foundation and powered by NVIDIA’s state-of-the-art, GPU-accelerated libraries, the Sionna Research Kit makes rapid prototyping, training, and deployment of cutting-edge 5G and 6G algorithms achievable for everyone, from seasoned professionals to students.

Aerial Testbed (ARC-OTA)

An end-to-end, over-the-air system that combines Aerial CUDA-Accelerated RAN with open-source software (OAI L2+ and 5G Core), running on NVIDIA GH200 or DGX Spark. It is used for product development and performance optimization of commercial-grade and software-defined AI-RAN solutions.

Learn More About ARC-OTA

NVIDIA AI Aerial Deployment Platforms

The Aerial RAN Computer (ARC) family delivers high-performance, scalable, and accelerated computing platforms for telecom networks, enabling commercial AI-RAN deployments.

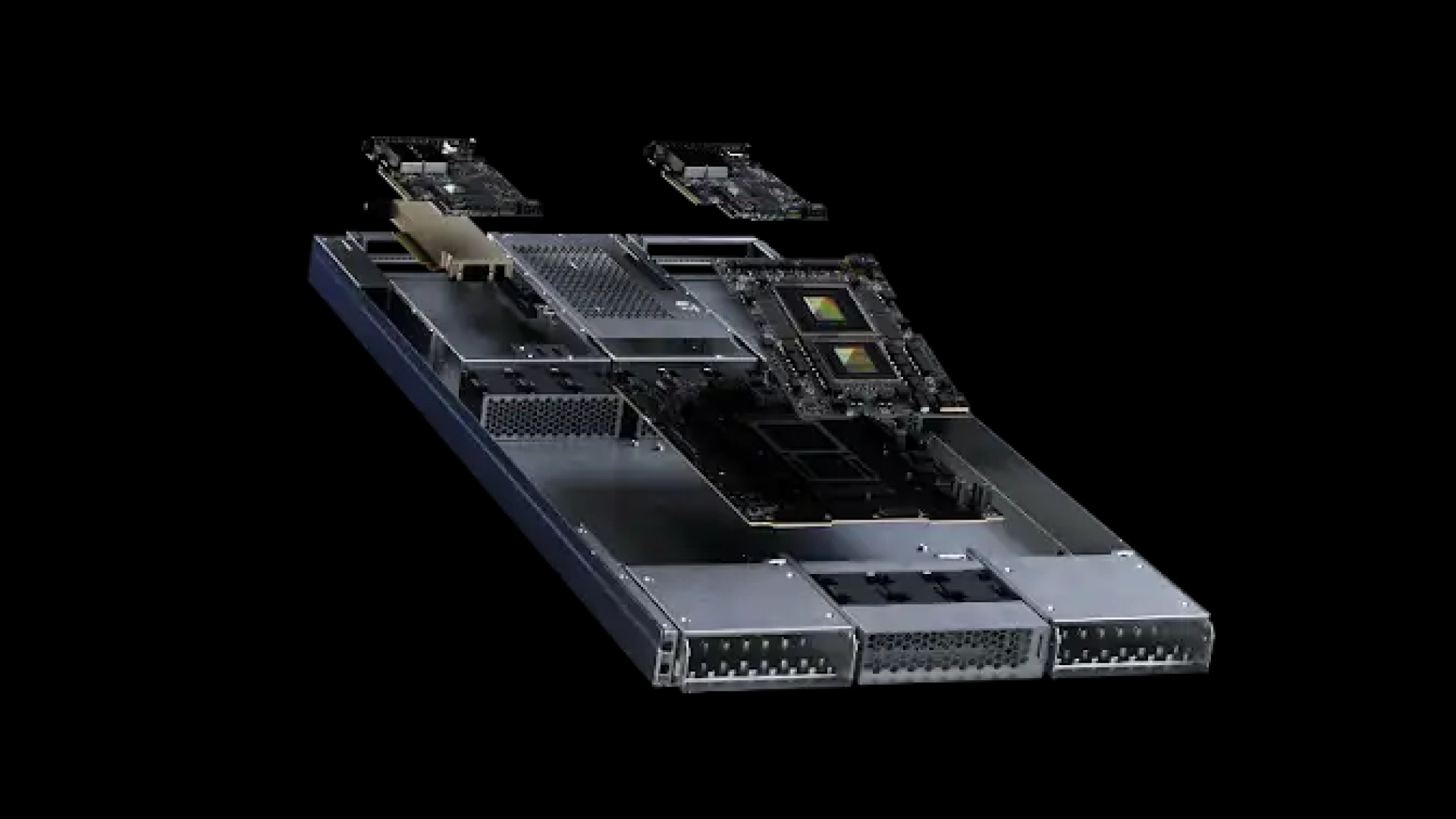

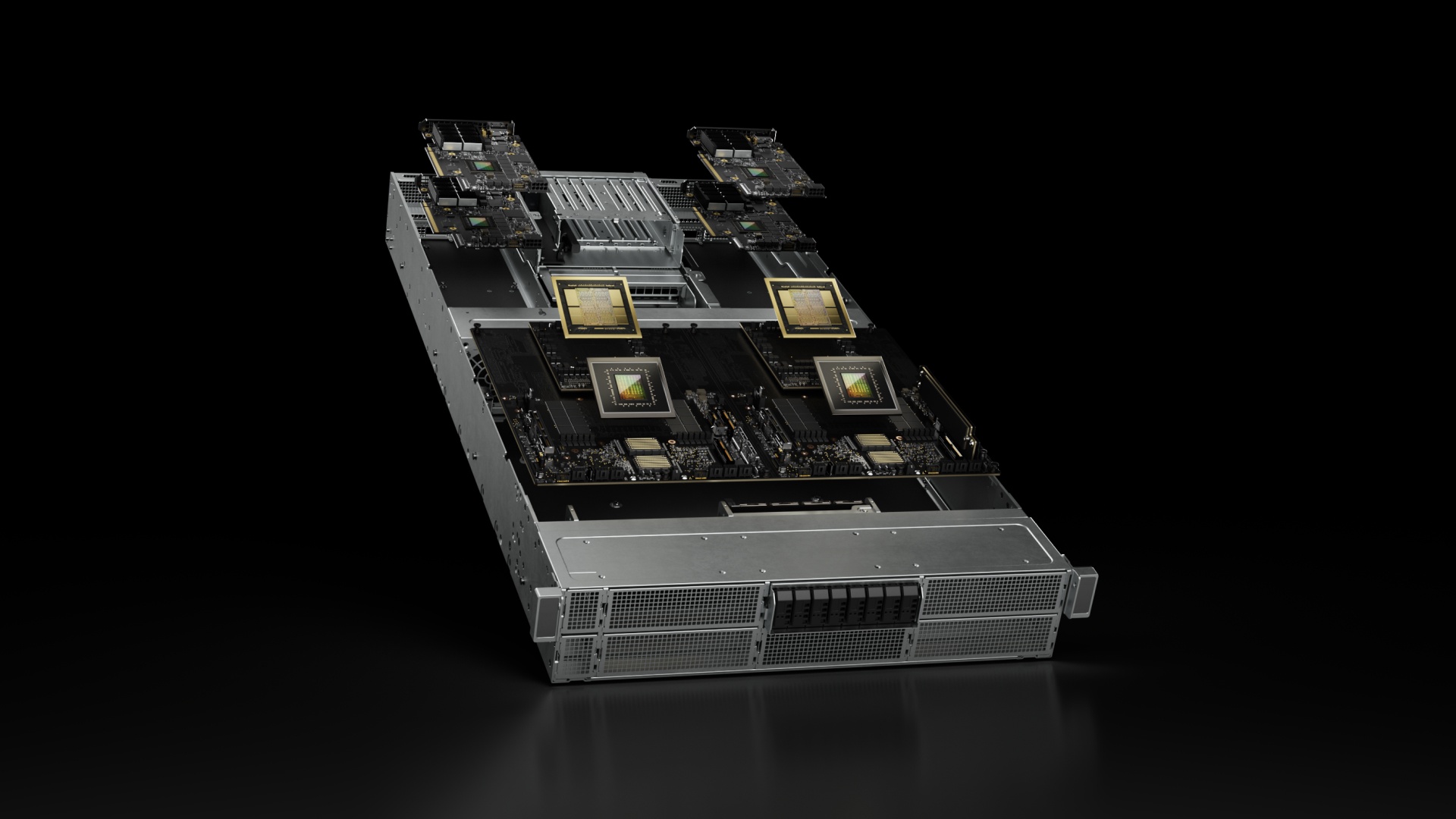

Aerial RAN Computer-1

A modular and high-performance AI-RAN platform for high-density deployments, suited for AI-centric workloads and designed to scale from distributed RAN (D-RAN) to centralized RAN (C-RAN) at mobile switching offices.

Learn More About Aerial RAN Computer-1

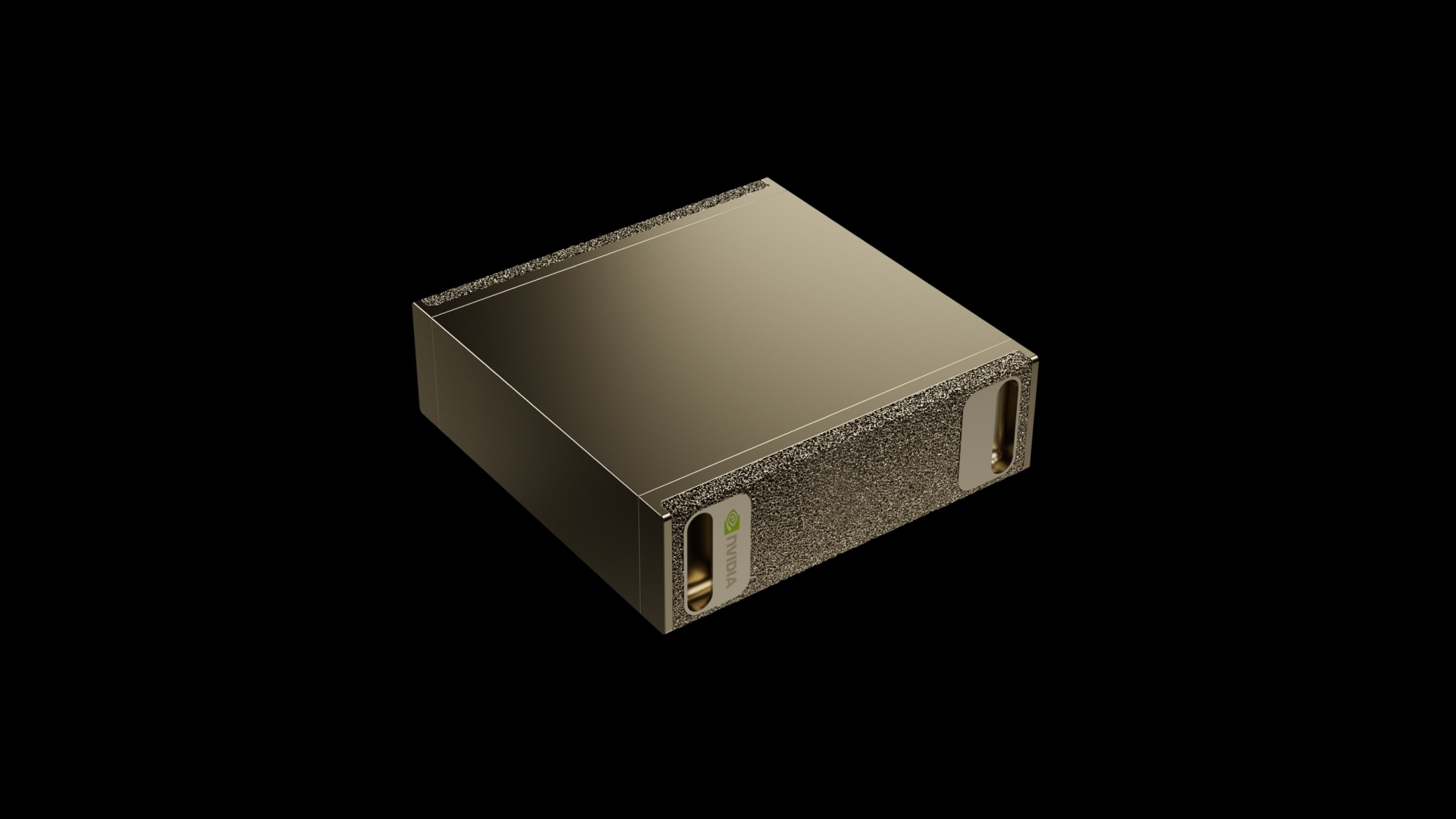

ARC-Compact

An energy-efficient and high-performance AI-RAN platform for cell sites, suited for RAN-centric workloads and designed to meet the form-factor and environmental requirements for distributed RAN deployments.

Learn More About ARC-Compact

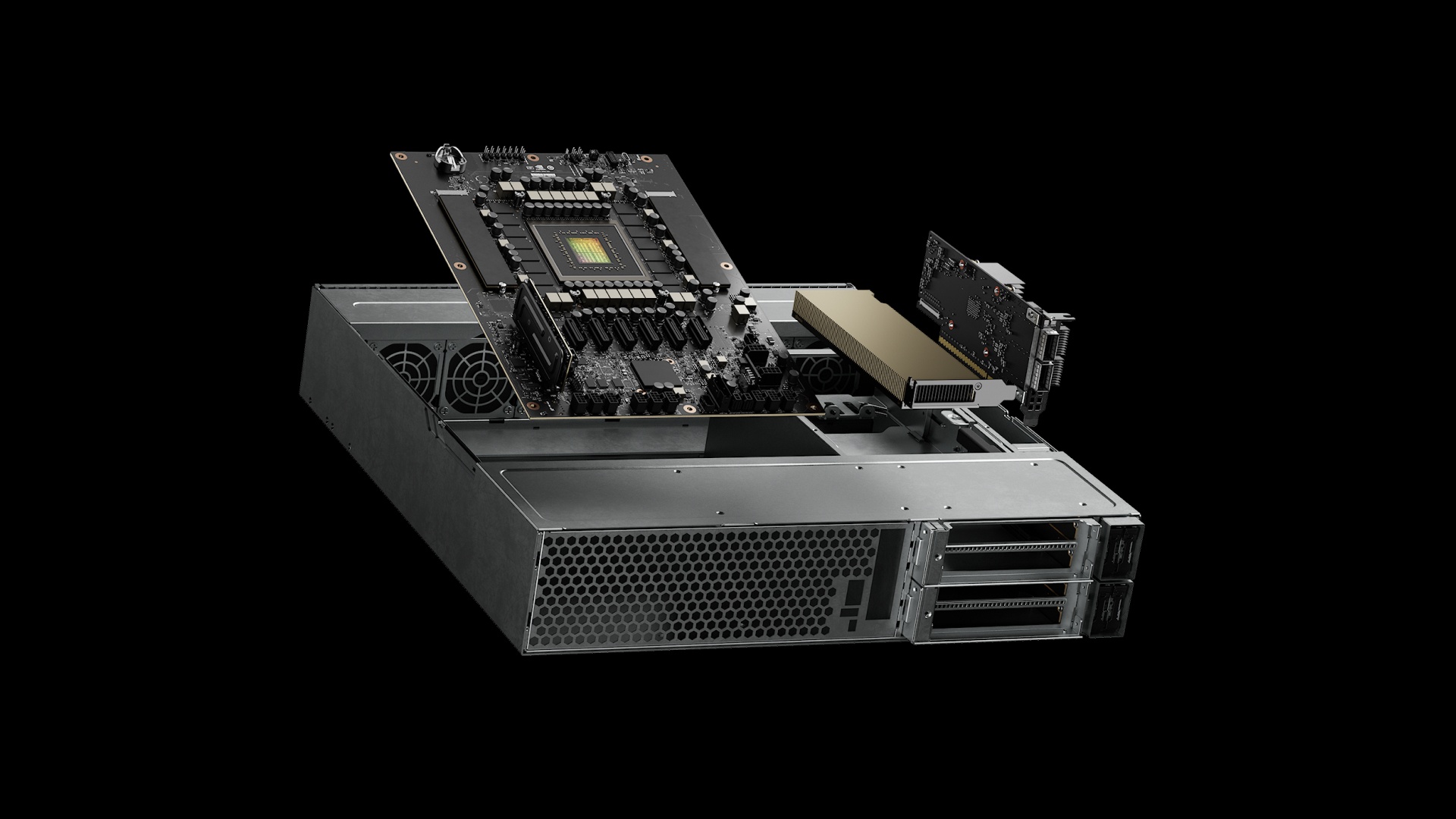

ARC-Pro

A high-performance, energy-efficient AI-RAN platform featuring NVIDIA Blackwell RTX PRO™ GPUs, designed for on-ramping to AI-native 5G and 6G with advanced computing, connectivity, and sensing. Its telco-optimized form factor is suitable for upgrading existing sites or for greenfield deployments.

Learn More About ARC-Pro

Get Started With NVIDIA AI Aerial

Build and Train

Build and train AI/ML models for RAN, physical (PHY), and media access control (MAC) layers.

Simulate

Run large-scale, photorealistic 5G and 6G wireless network scenarios.

Deploy

Implement and validate in live and edge networks at scale.

Learning Library

NVIDIA AI Aerial Ecosystem

Academia and industry leaders are collaborating to advance AI-native wireless networks and drive 6G research.

Next Steps

Join the 6G Developer Program

Get access to NVIDIA AI Aerial software resources.

NVIDIA AI Aerial FAQ

NVIDIA AI Aerial is a suite of accelerated computing platforms, software libraries, and tools for building, training, simulating, and deploying AI-native wireless networks. AI Aerial supports both advanced wireless research and commercial-grade cellular deployment. It enables developers to go from rapid prototyping to commercial development of AI-RAN solutions for 5G and 6G that telcos can deploy.

AI Aerial includes a variety of hardware and software resources for wireless research and commercial deployment. Hardware ranges from small to large accelerated computing platforms, comprising various GPUs, CPUs, and networking components. Software libraries range from open-source Sionna for rapid prototyping to Aerial CUDA-Accelerated RAN, Aerial Framework, and Aerial Omniverse Digital Twin for building CUDA-accelerated, software-defined RAN.

Developers and researchers can access Aerial CUDA-Accelerated RAN and Aerial Framework on GitHub. Aerial Omniverse Digital Twin is available by joining the NVIDIA 6G Developer Program, and Sionna is available via the Sionna Developer page. Documentation, SDKs, and hardware platforms are available for rapid onboarding and experimentation.

Compatible hardware includes Aerial Testbed (ARC-OTA), Aerial RAN Computer-1, ARC-Compact, ARC-Pro, and the Sionna Research Kit. These are built on various accelerated computing platforms: NVIDIA Jetson Orin, DGX Spark, and Grace Hopper 200 for research, and Grace Blackwell 300, L4 GPUs, and RTX PRO GPUs for commercial deployments.

AI Aerial supports centralized and distributed AI-RAN deployments for public and private 5G and future 6G networks. Aerial CUDA-Accelerated RAN is O-RAN 7.2x compliant and supports O-RAN Distributed Unit (O-DU) functionality.