NVIDIA NeMo Retriever

NVIDIA NeMo™ Retriever is a collection of industry-leading Nemotron RAG models delivering 50% better accuracy, 15x faster multimodal PDF extraction, and 35x better storage efficiency, enabling enterprises to build retrieval-augmented generation (RAG) pipelines that provide real-time business insights. NeMo Retriever, part of the NVIDIA NeMo software suite for managing the AI agent lifecycle, ensures data privacy and seamlessly connects to proprietary data wherever it resides, empowering secure, enterprise-grade retrieval. NeMo Retriever serves as a core component for NVIDIA AI-Q—a blueprint for building intelligent AI agents—and the NVIDIA RAG blueprint, enabling access to knowledge from enterprise AI data platforms. It provides a reliable foundation for scalable, production-ready retrieval pipelines supporting advanced AI applications.

NeMo Retriever microservices set a new standard for enterprise RAG applications, leading the industry with first-place performance across top visual document retrieval leaderboards (ViDoRe V1, ViDoRe V2, MTEB, and MMTEB VisualDocumentRetrieval).

Documentation

Build world-class information retrieval pipelines and AI query engines with scalable data extraction and high-accuracy embedding and reranking.

Ingestion

Rapidly ingest massive volumes of data and extract text, graphs, charts, and tables at the same time for highly accurate retrieval.

Embedding

Boost text question-and-answer retrieval performance, providing high-quality embeddings for many downstream natural language processing (NLP) tasks.

Reranking

Enhance retrieval performance further with a fine-tuned reranking model, finding the most relevant passages to provide as context when querying a large language model (LLM).

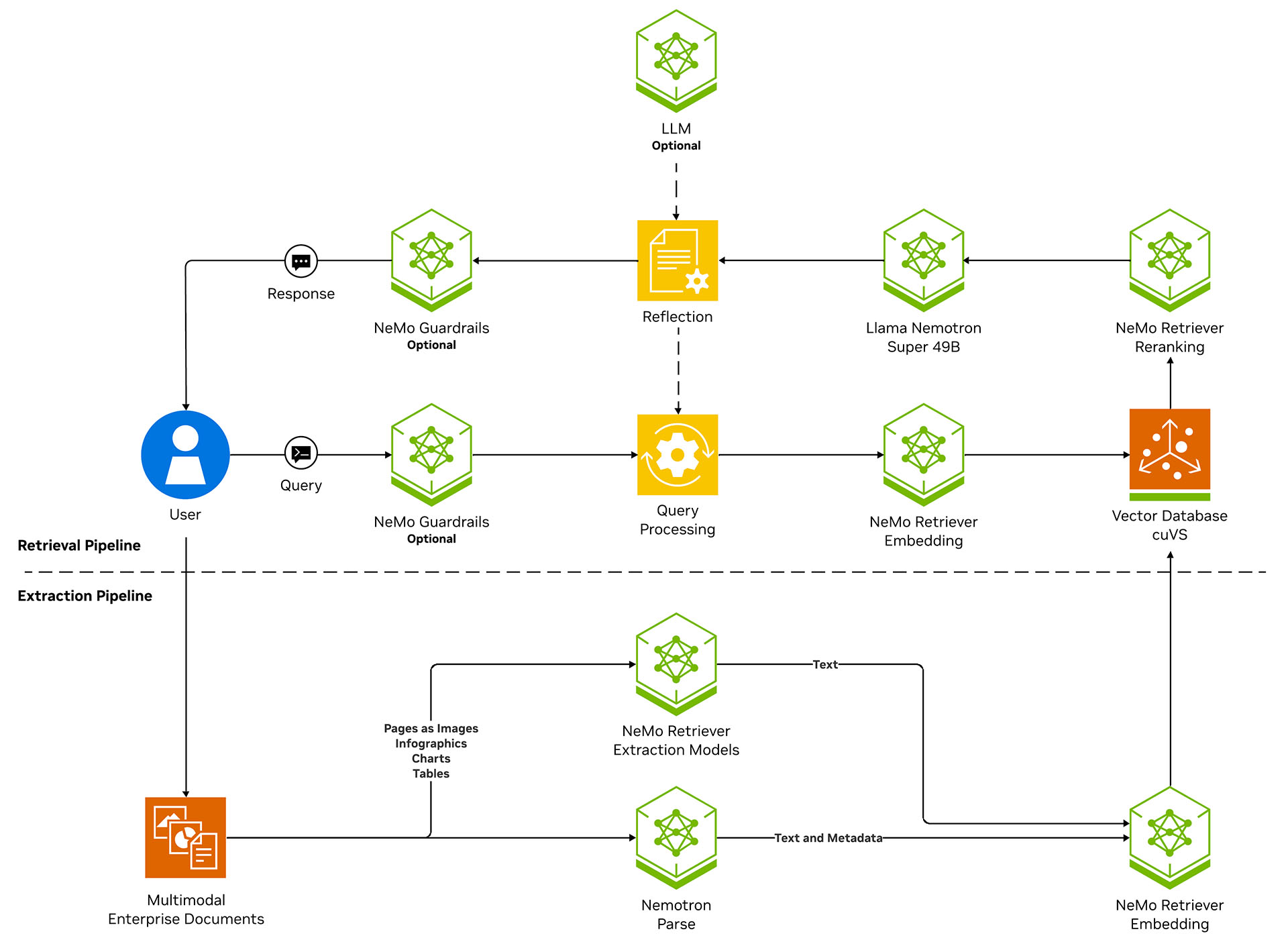

How NVIDIA NeMo Retriever Works

NeMo Retriever provides components for building data extraction and information retrieval pipelines. The pipeline extracts structured and unstructured data (for example, text, charts, and tables), converts it to text, and filters out duplicates. A NeMo Retriever embedding NIM then converts the resulting text chunks into embeddings and stores them in a vector database accelerated by NVIDIA cuVS, which speeds up both indexing and search.
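The indexing flow described above can be sketched in a few lines of plain Python. This is only an illustration of the deduplicate, embed, and store steps, not NeMo Retriever code: `toy_embed`, `deduplicate`, and `build_index` are hypothetical names, the hashed bag-of-words vector is a stand-in for a real embedding model, and an in-memory list stands in for a vector database.

```python
import hashlib
import math
from collections import Counter

DIM = 64  # toy embedding width; real embedding models use far larger vectors


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token, count in Counter(text.lower().split()).items():
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def deduplicate(chunks: list[str]) -> list[str]:
    """Drop duplicates after case and whitespace normalization, keeping first occurrences."""
    seen, unique = set(), []
    for chunk in chunks:
        key = " ".join(chunk.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(chunk)
    return unique


def build_index(chunks: list[str]) -> list[tuple[str, list[float]]]:
    """Return (chunk, embedding) pairs; the list is a stand-in for a vector database."""
    return [(chunk, toy_embed(chunk)) for chunk in deduplicate(chunks)]


chunks = [
    "Revenue grew 12% in Q3.",
    "revenue grew 12%  in Q3.",  # duplicate once case and spacing are normalized
    "Table 2 lists GPU memory per node.",
]
index = build_index(chunks)
print(len(index))  # 2
```

In a real deployment the embedding call and the vector store would be network services; the order of operations is the part this sketch is meant to show.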

When a query is submitted, the system retrieves relevant information using vector similarity search, and then a NeMo Retriever reranking NIM reranks the results for accuracy. With the most pertinent information, an LLM NIM generates a response that’s informed, accurate, and contextually relevant. You can use various LLM NIM microservices from the NVIDIA API catalog to enable additional capabilities, such as synthetic data generation.
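The two-stage query flow (a broad vector similarity search followed by a narrower reranking pass) might look like the following sketch. Everything here is an assumption made for illustration: `embed`, `retrieve`, and `rerank` are hypothetical helpers, term counts stand in for learned embeddings, and a simple term-coverage score stands in for a fine-tuned reranking model. No NeMo Retriever or NIM API is being called.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: sparse term counts."""
    return Counter(text.lower().replace("?", "").replace(".", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int) -> list[str]:
    """Stage 1: keep the k passages most similar to the query."""
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


def rerank(query: str, candidates: list[str], top_n: int) -> list[str]:
    """Stage 2 (toy): reorder candidates by the fraction of query terms they cover."""
    q_terms = set(embed(query))

    def score(passage: str) -> float:
        return len(q_terms & set(embed(passage))) / len(q_terms)

    return sorted(candidates, key=score, reverse=True)[:top_n]


passages = [
    "The reranker reorders retrieved passages by relevance.",
    "Embeddings are stored in a vector database.",
    "Charts and tables are extracted from PDF files.",
]
query = "Where are embeddings stored?"

candidates = retrieve(query, passages, k=2)
context = rerank(query, candidates, top_n=1)

# The reranked passages become the context handed to the LLM.
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + query
print(prompt)
```

Retrieving more candidates than are finally needed and letting the second stage trim them is the usual reason for splitting the work this way: the first stage is cheap and broad, the second is slower but more precise.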

Introductory Resources

Learn more about building efficient information-retrieval pipelines with NeMo Retriever.

Introductory Blog

Understand the function of embedding and reranking models in information retrieval pipelines, top considerations, and more.

Introductory Webinar

Improve the accuracy and scalability of text retrieval for production-ready generative AI pipelines and deploy at scale.

AI Blueprint for RAG

Learn best practices for connecting AI apps to enterprise data using industry-leading embedding and reranking models.

Introductory GTC Session

Learn about the latest models, tools, and techniques for creating agentic and RAG pipelines for multimodal data ingestion, extraction, and retrieval.

World-Class Information-Retrieval Performance

NeMo Retriever microservices accelerate multimodal document extraction and real-time retrieval with lower RAG costs and higher accuracy. They support reliable, multilingual, and cross-lingual retrieval, and optimize storage, performance, and adaptability for data platforms, enabling efficient vector database expansion.

50% Fewer Incorrect Answers

NeMo Retriever Multimodal Extraction Recall@5 Accuracy

Compared with NeMo Retriever off (open-source alternative). Hardware: 1x H100.
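For readers unfamiliar with the metric, Recall@5 is commonly computed as the share of a query's relevant documents that appear among the top five retrieved results, averaged over all queries. The sketch below uses that common definition; the exact evaluation protocol behind the figure above is not stated on this page, and the document IDs are made up for illustration.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int = 5) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


# Each entry: (ranked retrieval results, ground-truth relevant IDs); IDs are illustrative.
runs = [
    (["d3", "d7", "d1", "d9", "d4", "d2"], {"d1", "d2"}),  # d2 is ranked 6th, so it misses the cut
    (["d5", "d6", "d8", "d0", "d2"], {"d2"}),
]

mean_recall = sum(recall_at_k(results, truth) for results, truth in runs) / len(runs)
print(mean_recall)  # 0.75
```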

3X Higher Embedding Throughput

NeMo Retriever Llama 3.2 Multilingual Text Embedding

15X Higher Multimodal Data Extraction Throughput

NeMo Retriever Extraction NIM Microservices

35X Improved Data Storage Efficiency

Multilingual, Long-Context, Text Embedding NIM Microservice

Ways to Get Started With NVIDIA NeMo Retriever

Use the right tools and technologies to build and deploy generative AI applications that require secure and accurate information retrieval to generate real-time business insights for organizations across every industry.

Download

Experience NeMo Retriever NIM microservices through a UI-based portal for exploring and prototyping with NVIDIA-managed endpoints, available for free through NVIDIA’s API catalog and deployable anywhere.

Try

Jump-start building your AI solutions with NVIDIA Blueprints, customizable reference applications, available on the NVIDIA API catalog.

Starter Kits

Start building information retrieval pipelines and generative AI applications for multimodal data ingestion, embedding, reranking, retrieval-augmented generation, and agentic workflows by accessing NVIDIA Blueprints, tutorials, notebooks, blogs, forums, reference code, comprehensive documentation, and more.

AI Agent for Enterprise Research

Develop AI agents that continuously process and synthesize multimodal enterprise data, reason, plan, and refine to generate comprehensive reports.

Enterprise RAG

Connect secure, scalable, reliable AI applications to your company’s internal enterprise data using industry-leading embedding and reranking models for information retrieval at scale.

Streaming Data to RAG

Unlock dynamic, context-aware insights from streaming sources like radio signals and other sensor data.

Evaluating and Customizing RAG Pipelines

Evaluate pretrained embedding models on data and queries similar to your users’ needs using NVIDIA NeMo microservices to optimize RAG performance.

NVIDIA NeMo Retriever Learning Library

More Resources

Ethical AI

NVIDIA’s platforms and application frameworks enable developers to build a wide array of AI applications. Consider potential algorithmic bias when choosing or creating the models being deployed. Work with the model’s developer to ensure that it meets the requirements for the relevant industry and use case; that the necessary instruction and documentation are provided to understand error rates, confidence intervals, and results; and that the model is being used under the conditions and in the manner intended.