NVIDIA Metropolis for Developers

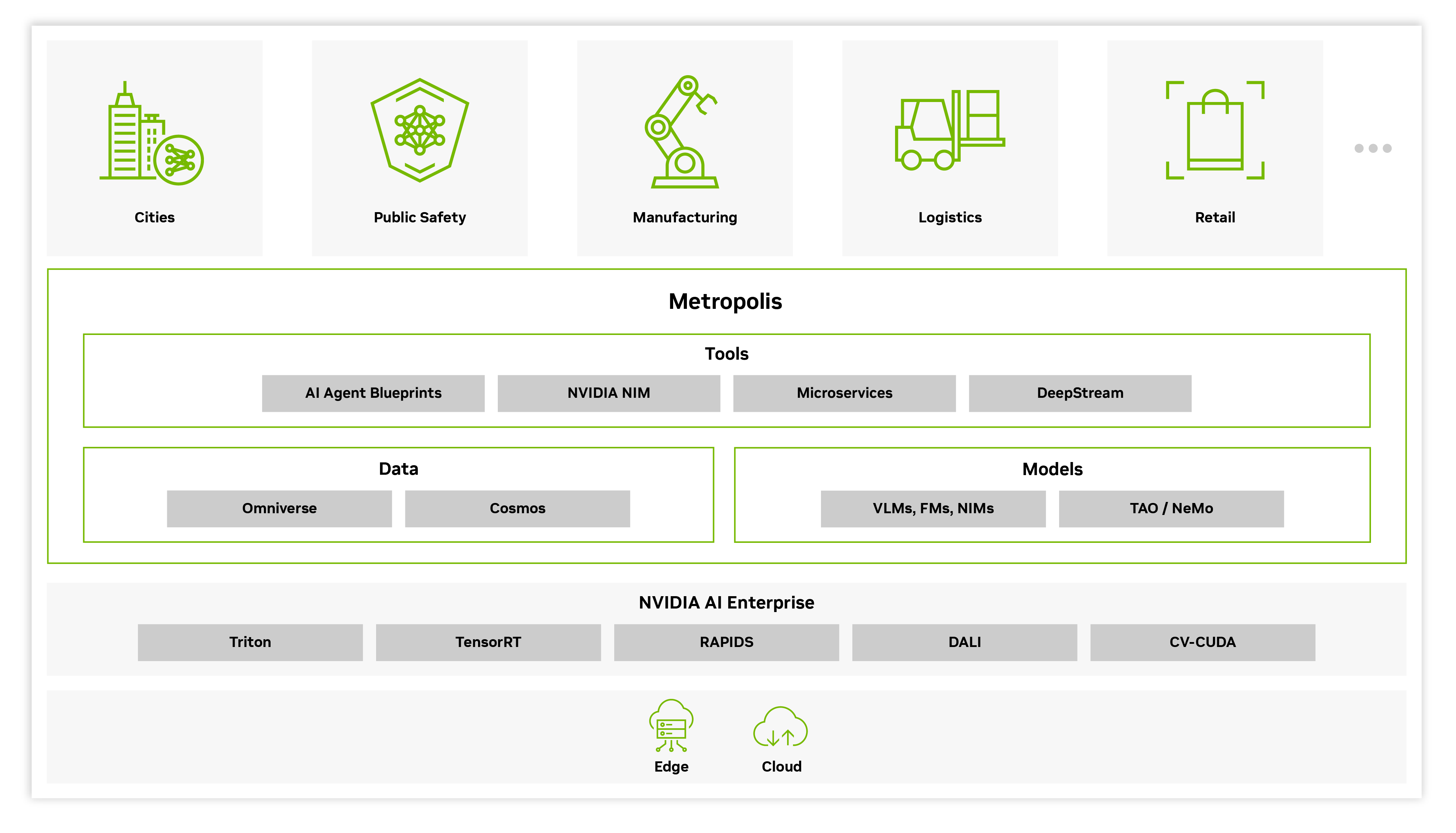

Discover an advanced collection of developer blueprints, AI models, and tools that deliver exceptional scale, throughput, cost-effectiveness, and faster time to production. Metropolis provides everything you need to build, deploy, and scale vision AI agents and applications, from the edge to the cloud.

Get Started

Explore All the Benefits

Faster Builds

Use and tune high-performance vision language models and vision foundation models to streamline AI training for your unique industry. NVIDIA Blueprints and cloud-native modular microservices are designed to help you accelerate development.

Lower Cost

Powerful SDKs—including NVIDIA TensorRT™, DeepStream, and TAO—reduce overall solution cost. Generate synthetic data, boost accuracy with model customization, and maximize inference throughput on NVIDIA infrastructure.

More Flexible Deployments

Deploy with flexibility using NVIDIA Inference Microservices (NIM™), cloud-native Metropolis microservices, and containerized applications offering options for on-premises, cloud, or hybrid deployments.

Powerful Tools for AI-Enabled Video Analytics

The Metropolis suite of SDKs provides a variety of starting points for AI application development and deployment.

State-of-the-Art Vision Language Models and Vision Foundation Models

Vision language models (VLMs) are multimodal, generative AI models that can understand and process video, images, and text. Computer vision foundation models, including vision transformers (ViTs), analyze and interpret visual data to create embeddings or perform tasks like object detection, segmentation, and classification.

Cosmos Reason offers you an open and fully customizable world foundation model designed for video reasoning. It enables efficient training data curation for robotics and autonomous vehicles (AVs), and powers spatio-temporal understanding to accelerate automation across smart cities and industrial environments.

Explore

.jpg)

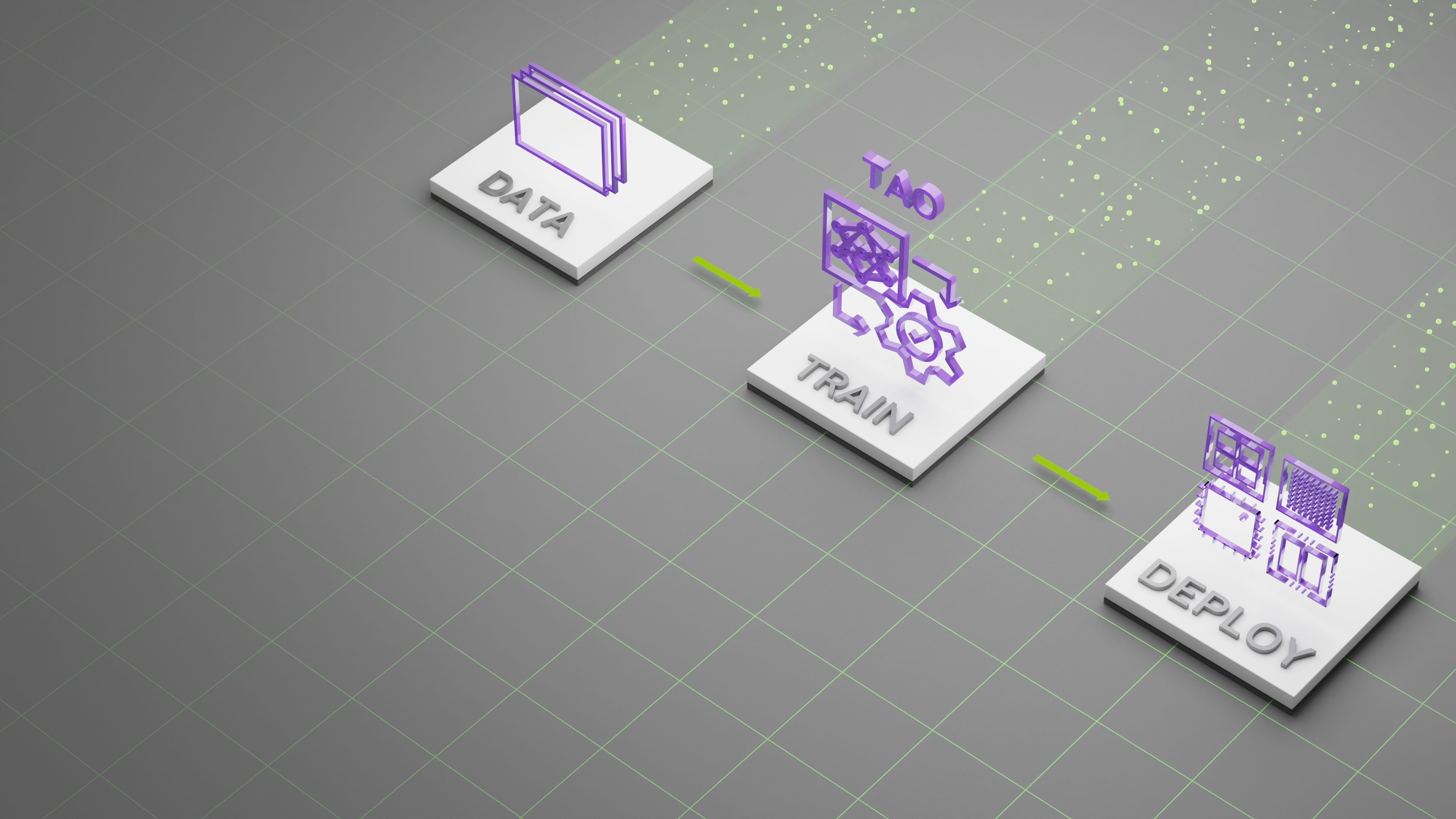

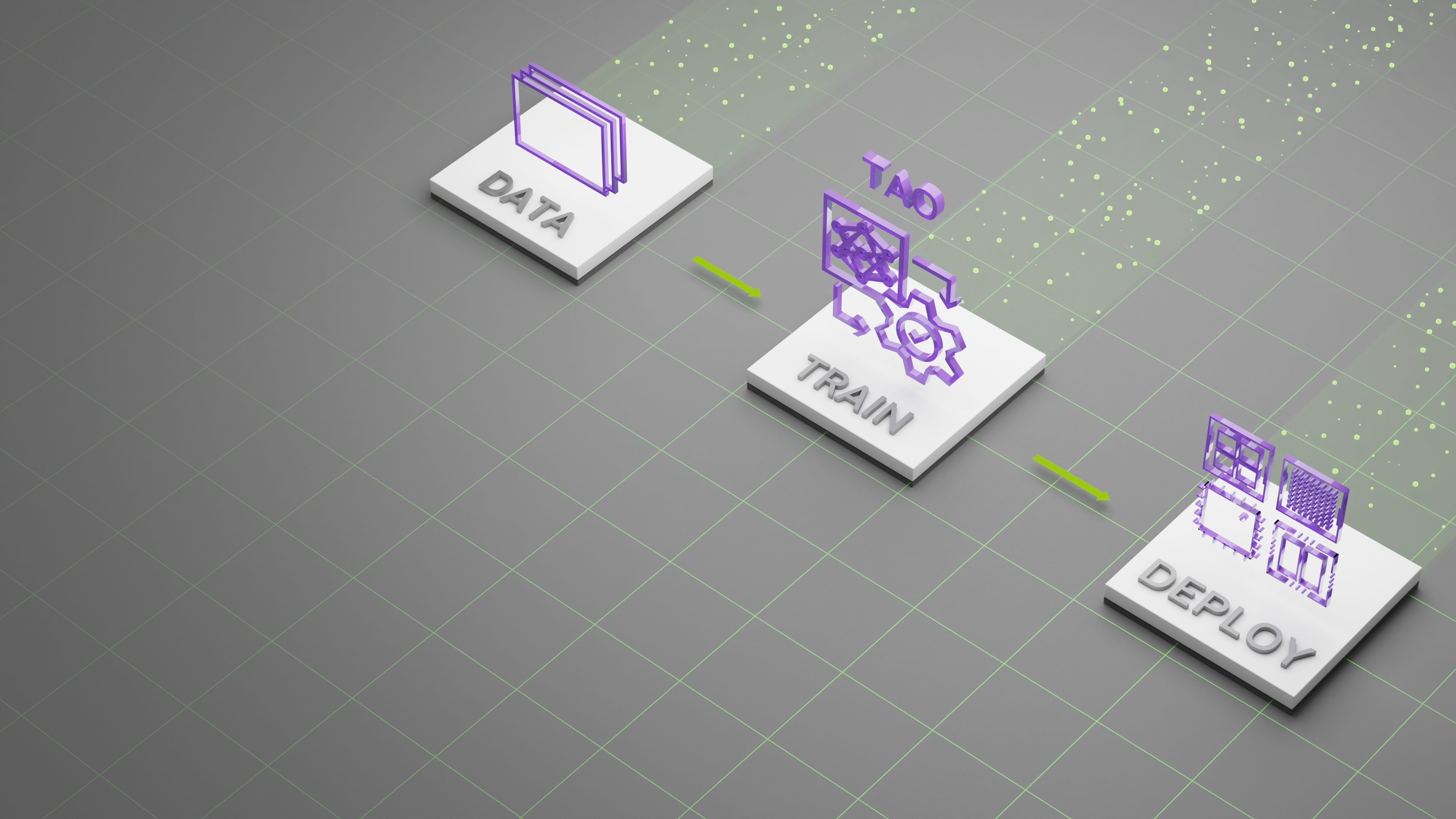

TAO

The Train, Adapt, and Optimize (TAO) toolkit is a low-code AI model development solution for developers. It lets you use the power of transfer learning to fine-tune NVIDIA computer vision models and vision foundation models with your own data and optimize for inference—without AI expertise or a large training dataset.

Learn More About TAO

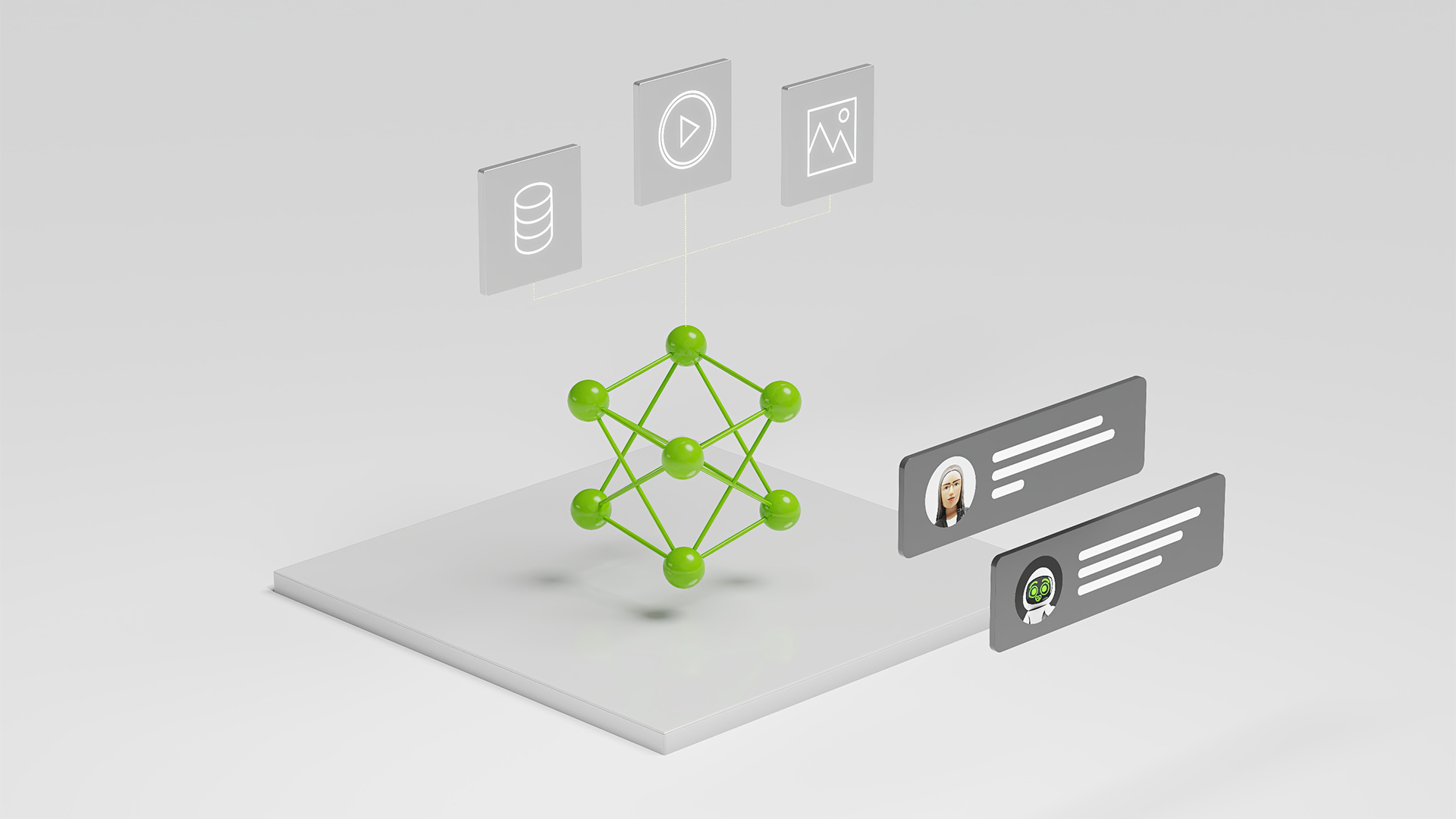

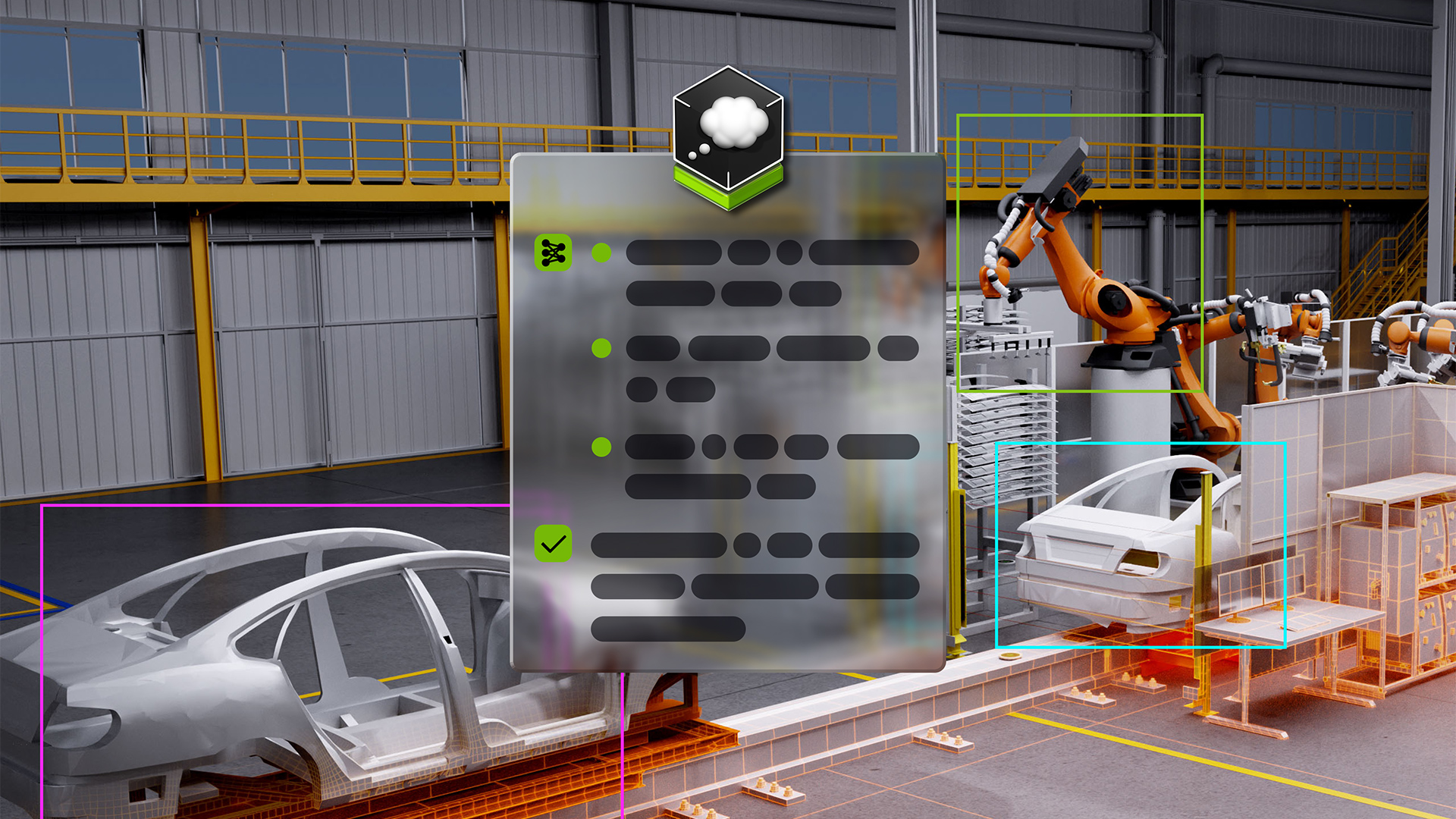

AI Agent Blueprints

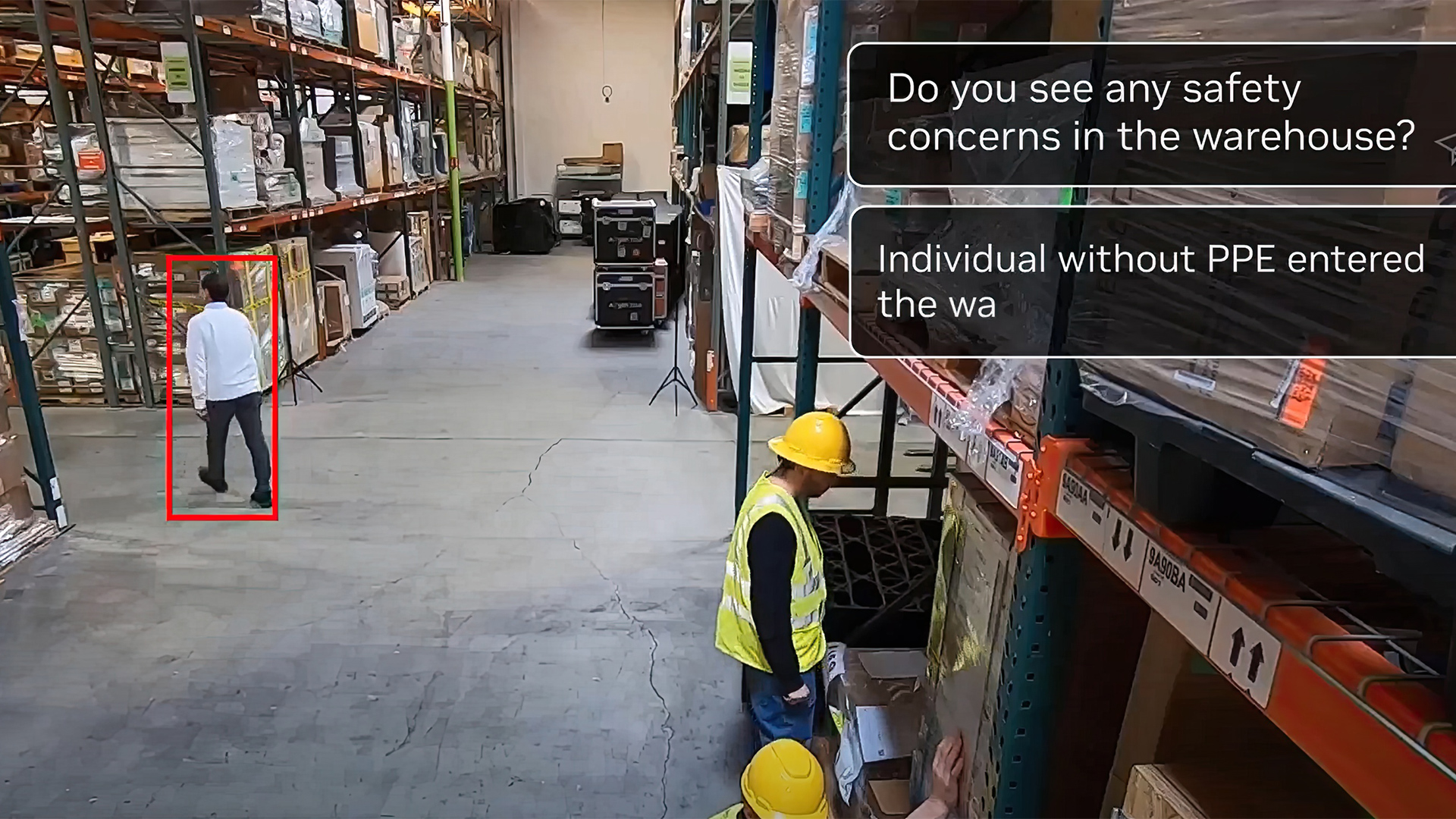

The NVIDIA AI Blueprint for video search and summarization (VSS) makes it easy to build and customize video analytics AI agents using generative AI, VLMs, LLMs, and NVIDIA NIM. The video analytics AI agents are given tasks through natural language and can analyze, interpret, and process vast amounts of video data to provide critical insights that help a range of industries optimize processes, improve safety, and cut costs.

VSS enables seamless integration of generative AI into existing computer vision pipelines—enhancing inspection, search, and analytics with multimodal understanding and zero-shot reasoning. Easily deploy from the edge to the cloud on platforms including NVIDIA RTX PRO™ 6000, DGX™ Spark, and Jetson Thor™.

Explore NVIDIA AI Blueprint for Video Search and Summarization

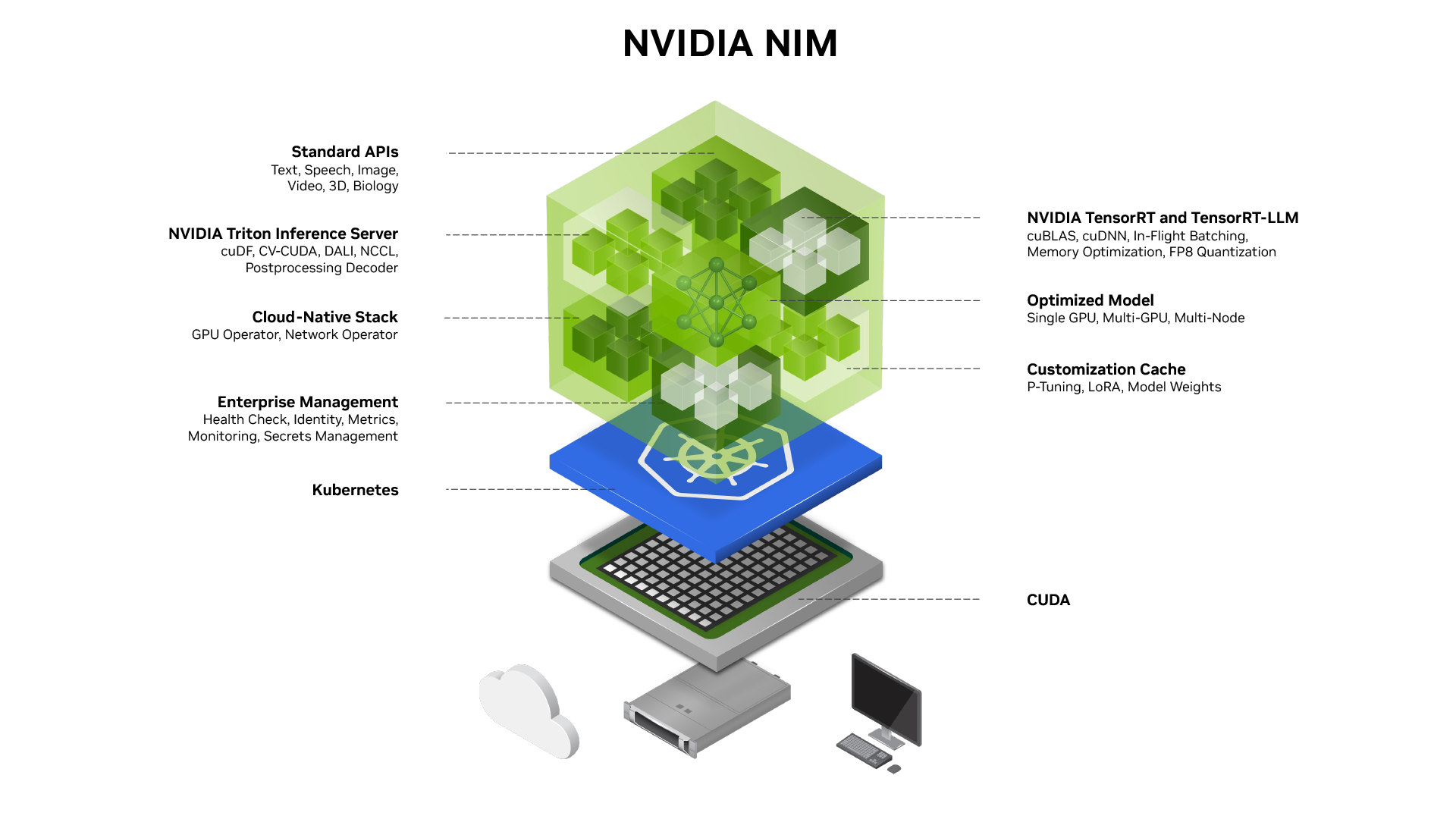

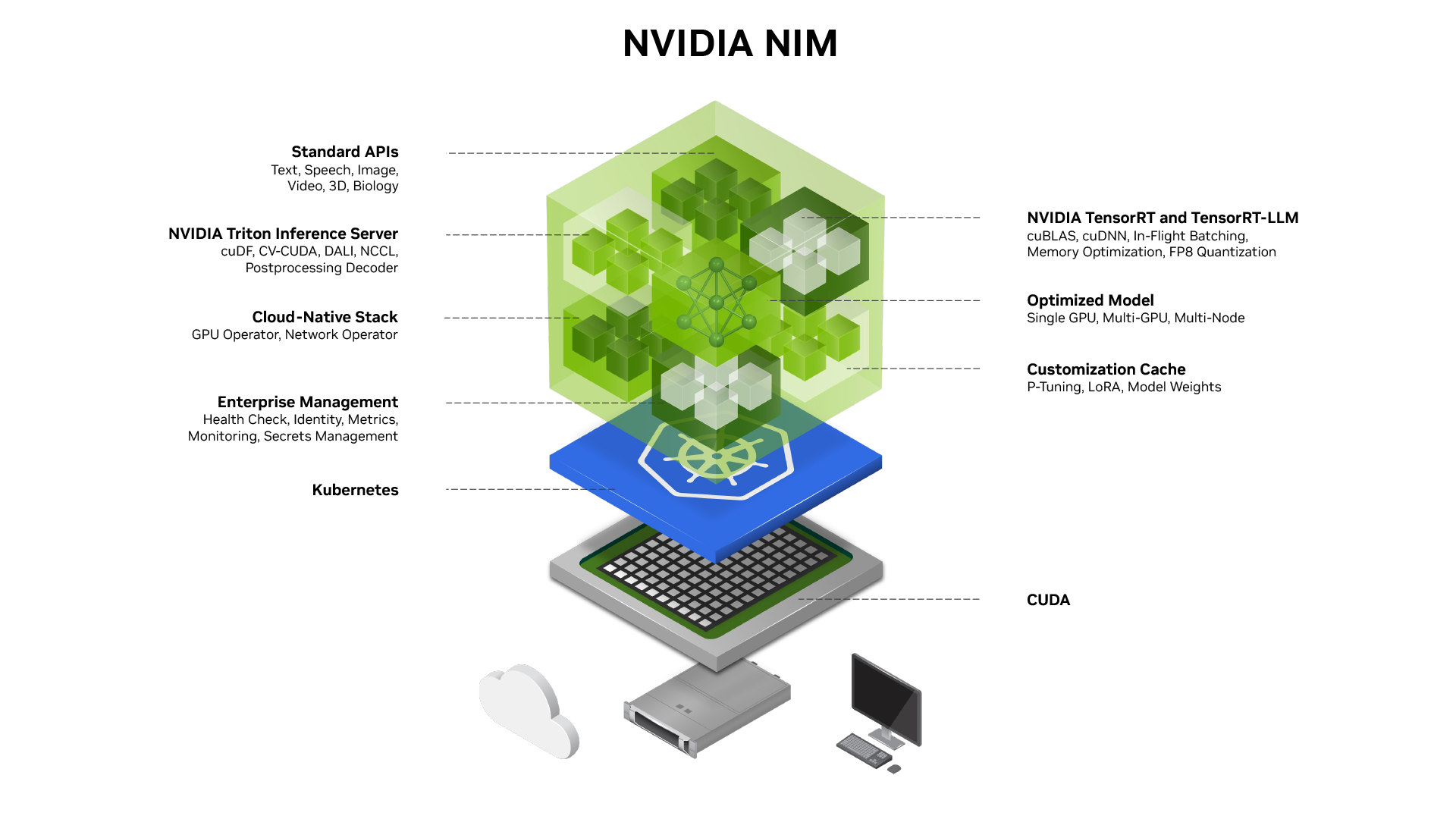

NVIDIA NIM

NVIDIA NIM is a set of easy-to-use microservices designed for secure, reliable deployment of high-performance AI model inferencing across the cloud, data center, and workstations. Supporting a wide range of AI models—including foundation models, LLMs, VLMs, and more—NIM ensures seamless, scalable AI inferencing, on-premises or in the cloud, using industry-standard APIs.

Explore NVIDIA NIM for Vision

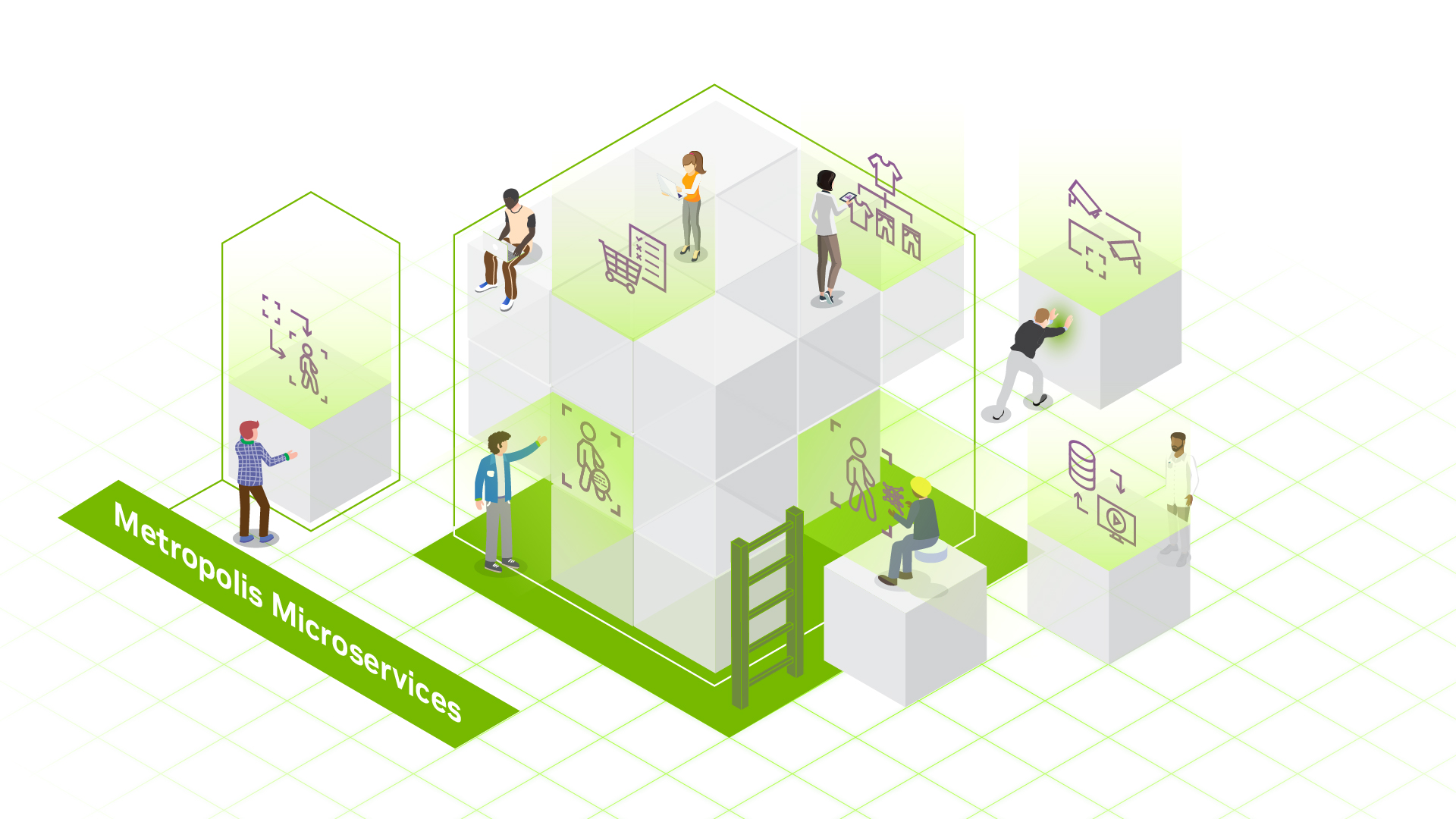

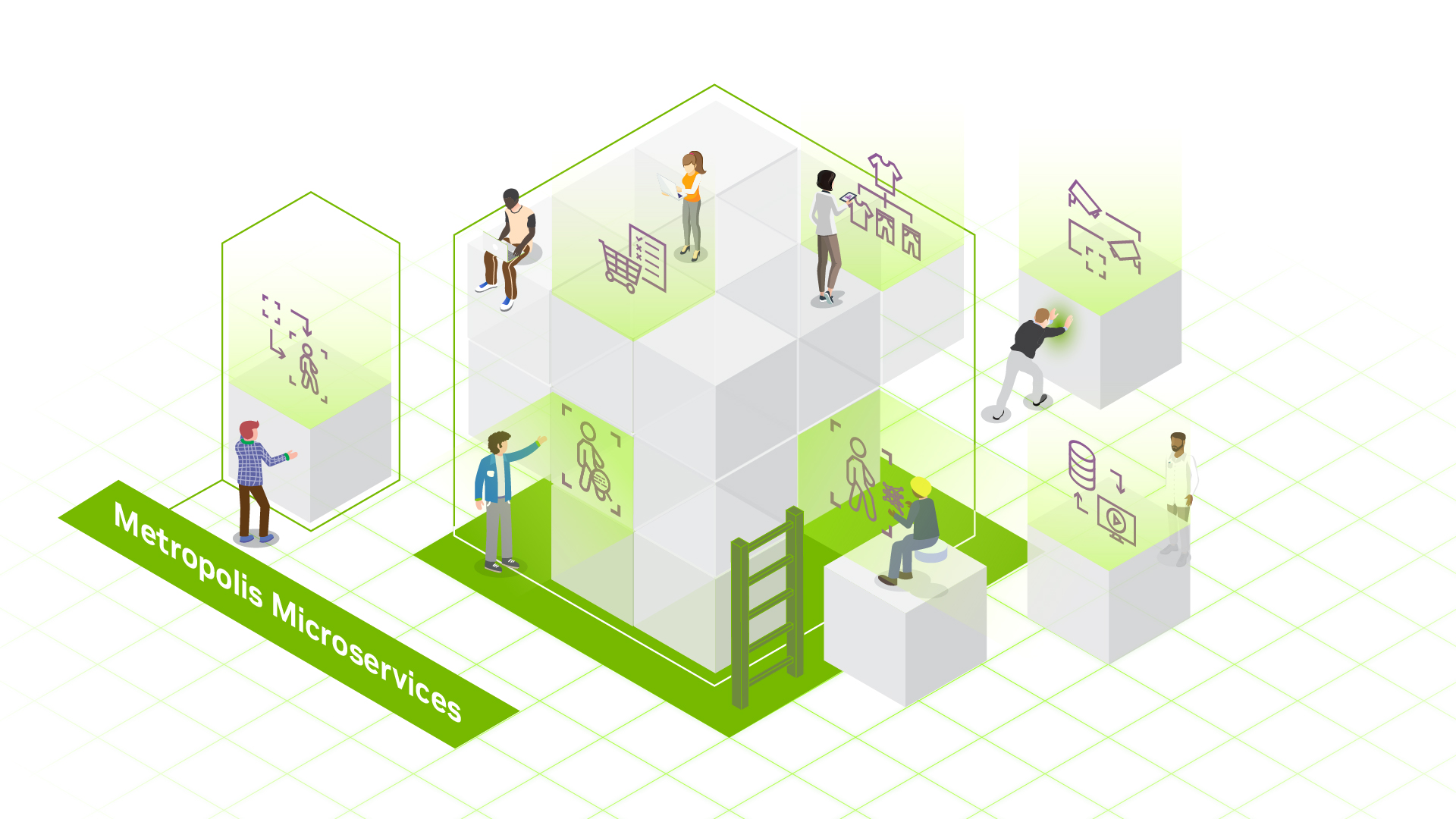

Metropolis Microservices

Metropolis microservices provide powerful, customizable, cloud-native building blocks for developing vision AI agents, applications, and solutions. They’re built to run on NVIDIA cloud and data center GPUs, as well as the NVIDIA Jetson Orin™ edge AI platform.

Learn More

DeepStream SDK

NVIDIA DeepStream SDK is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing, video, audio, and image understanding. It’s ideal for vision AI developers, software partners, startups, and OEMs building IVA apps and services. DeepStream 8.0 will include multi-camera tracking and a low-code inference builder.

Learn More About DeepStream SDK

NVIDIA Omniverse

NVIDIA Omniverse™ helps you integrate OpenUSD, NVIDIA RTX™ rendering technologies, and generative physical AI into existing software tools and simulation workflows to develop and test digital twins. You can use it with your own software for building AI-powered robot brains that drive robots, Metropolis perception from cameras, equipment, and more for continuous development, testing, and optimization.

Omniverse Replicator makes it easier to generate physically accurate 3D synthetic data at scale, or build your own synthetic data tools and frameworks. Bootstrap perception AI model training and achieve accurate Sim2Real performance without having to manually curate and label real-world data.

Learn More About Omniverse Replicator

NVIDIA Cosmos

NVIDIA Cosmos™ is a platform of state-of-the-art generative world foundation models (WFMs), advanced tokenizers, guardrails, and an accelerated data processing and curation pipeline. It's purpose-built to accelerate the development of physical AI systems.

Learn More About NVIDIA Cosmos

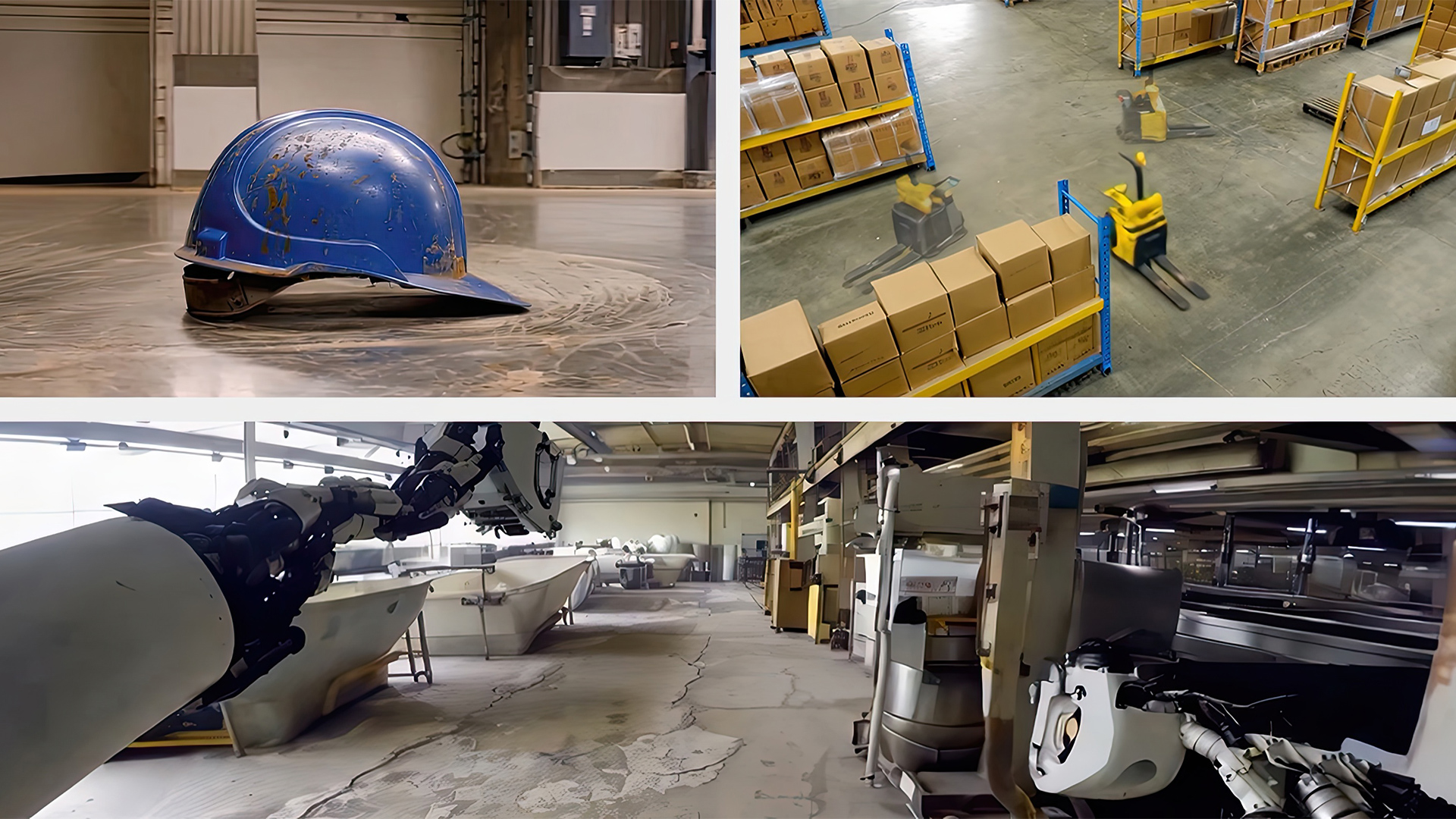

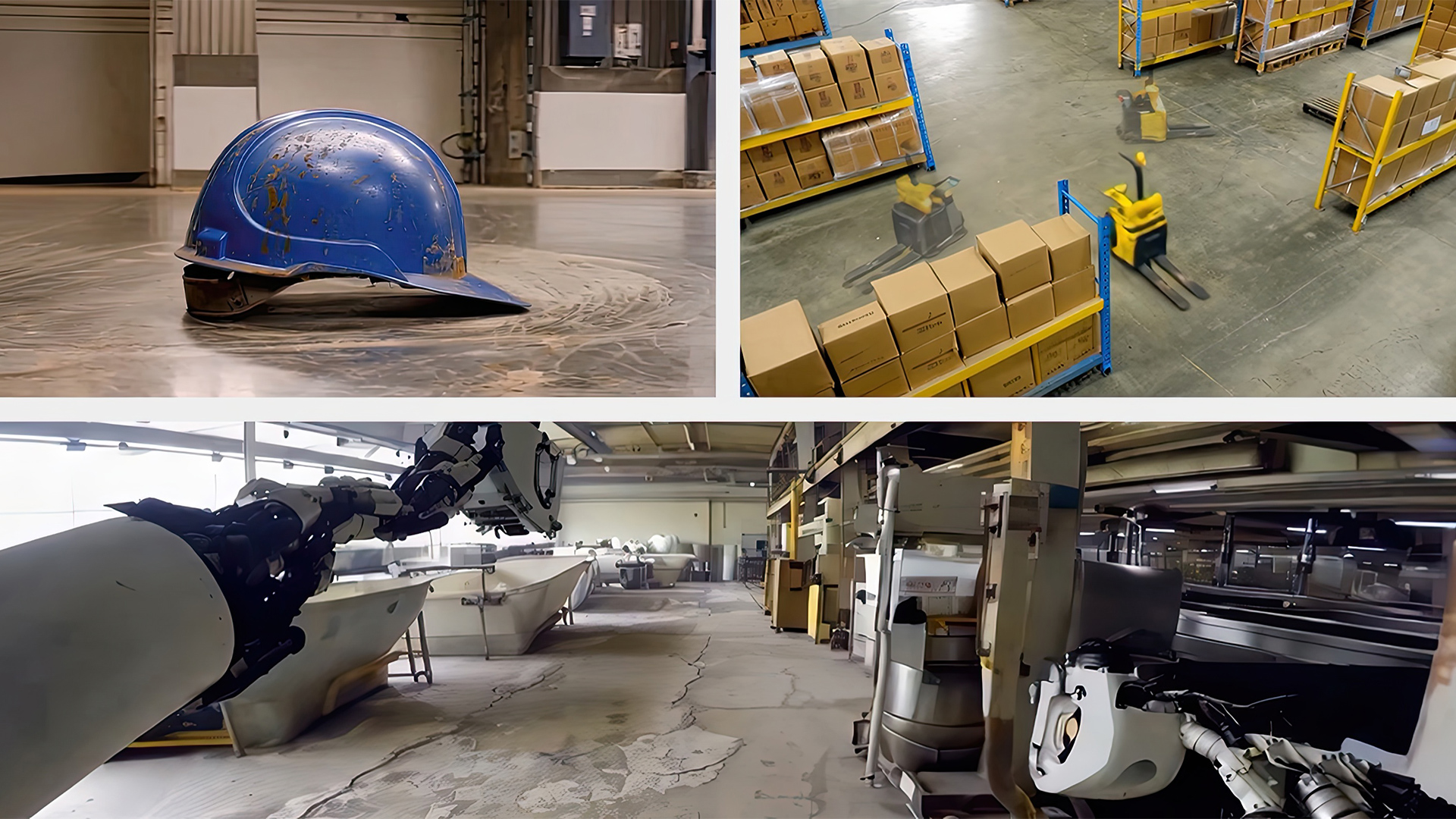

NVIDIA Physical AI Dataset

Unblock data bottlenecks with this open-source dataset for training vision AI applications to understand industrial facilities, smart cities, robots, and autonomous vehicle development. The unified collection is composed of validated data used to build NVIDIA physical AI solutions—now available for free to developers on Hugging Face.

Explore the NVIDIA Physical AI Dataset

NVIDIA Isaac SIM

Developers need training data that mimics what cameras would capture in complex, dynamic 3D spaces such as industrial facilities and smart cities. Action and Event Data Generation is a reference application on NVIDIA Isaac Sim™. It lets developers generate synthetic image and video data in a physically accurate virtual environment to train custom vision AI models.

These include tools to simulate actors like humans and robots, create objects with domain randomization, and generate incident-based scenarios for various vision AI models. Use the VLM scene captioning tool to automatically generate image-caption pairs and accelerate the annotation process

Get started With Event and Actor Generation on Isaac SIM

Use and Fine-Tune Optimized AI Models

State-of-the-Art Vision Language Models and Vision Foundation Models

Vision language models (VLMs) are multimodal, generative AI models that can understand and process video, images, and text. Computer vision foundation models, including vision transformers (ViTs), analyze and interpret visual data to create embeddings or perform tasks like object detection, segmentation, and classification.
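Embeddings are what make visual search practical: once a foundation model maps each image or clip to a vector, finding similar content reduces to a nearest-neighbor lookup. The sketch below is a minimal, generic illustration of that idea using cosine similarity. The clip names and three-dimensional vectors are made up for clarity (real foundation-model embeddings have hundreds or thousands of dimensions), and no NVIDIA API is involved.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 1) -> list[tuple[str, float]]:
    """Return the k entries in `index` most similar to `query`, best match first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings standing in for real model outputs.
clip_index = {
    "forklift_aisle_3": [0.9, 0.1, 0.0],
    "person_at_dock": [0.1, 0.9, 0.2],
    "empty_parking_lot": [0.0, 0.2, 0.9],
}
query_embedding = [0.8, 0.2, 0.1]  # hypothetical query vector
print(top_k(query_embedding, clip_index, k=2))
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common default for comparing embeddings; at production scale the linear scan would typically be replaced by a vector index.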

Cosmos Reason is an open, fully customizable world foundation model designed for video reasoning. It enables efficient training data curation for robotics and autonomous vehicles (AVs) and provides the spatio-temporal understanding needed to accelerate automation in smart cities and industrial environments.

Explore

TAO

The Train, Adapt, and Optimize (TAO) toolkit is a low-code AI model development solution for developers. It uses transfer learning to fine-tune NVIDIA computer vision models and vision foundation models with your own data and optimize them for inference, without requiring deep AI expertise or a large training dataset.

Learn More About TAO

Build Powerful AI Applications

AI Agent Blueprints

The NVIDIA AI Blueprint for video search and summarization (VSS) makes it easy to build and customize video analytics AI agents using generative AI, VLMs, LLMs, and NVIDIA NIM. These agents take tasks in natural language and can analyze, interpret, and process vast amounts of video data, providing critical insights that help a range of industries optimize processes, improve safety, and cut costs.

VSS enables seamless integration of generative AI into existing computer vision pipelines—enhancing inspection, search, and analytics with multimodal understanding and zero-shot reasoning. Easily deploy from the edge to the cloud on platforms including NVIDIA RTX PRO™ 6000, DGX™ Spark, and Jetson Thor™.
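An agent that processes long recordings generally cannot pass an entire video to a VLM at once. One common approach, and an assumption here rather than a description of VSS internals, is to split the video into fixed-length, slightly overlapping chunks, caption each chunk with a VLM, and then let an LLM summarize or search those captions. The sketch below computes only the chunk boundaries; the function name and the `chunk_s` and `overlap_s` parameters are hypothetical.

```python
def chunk_spans(duration_s: float, chunk_s: float, overlap_s: float = 0.0) -> list[tuple[float, float]]:
    """Split [0, duration_s) into windows of chunk_s seconds; neighbors overlap by overlap_s.

    Hypothetical helper: each (start, end) span would be captioned separately by a VLM.
    """
    if chunk_s <= 0 or not 0 <= overlap_s < chunk_s:
        raise ValueError("need chunk_s > 0 and 0 <= overlap_s < chunk_s")
    step = chunk_s - overlap_s  # how far each window advances
    spans = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)  # clip the last window to the video length
        spans.append((start, end))
        if end >= duration_s:
            break  # the video is fully covered; avoid a redundant tail window
        start += step
    return spans


# A 100 s clip in 30 s chunks with 5 s of overlap between neighbors.
print(chunk_spans(100, 30, 5))
```

The overlap means an event that straddles a boundary appears whole in at least one chunk, at the cost of captioning a few seconds twice.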

Explore NVIDIA AI Blueprint for Video Search and Summarization

NVIDIA NIM

NVIDIA NIM is a set of easy-to-use microservices designed for secure, reliable deployment of high-performance AI model inferencing across the cloud, data center, and workstations. Supporting a wide range of AI models—including foundation models, LLMs, VLMs, and more—NIM ensures seamless, scalable AI inferencing, on-premises or in the cloud, using industry-standard APIs.
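NIM microservices for LLMs and VLMs expose an OpenAI-compatible HTTP API, so a client usually sends a chat-completions request. The sketch below uses only the Python standard library to build such a request body containing one inline image and a text prompt. The model ID, the `http://localhost:8000/v1/chat/completions` URL, and the exact image-input format a particular vision NIM accepts are assumptions; check them against that NIM's documentation.

```python
import base64
import json

# Hypothetical values: replace with your NIM's actual endpoint and model ID.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_ID = "example/vision-model"


def build_vlm_request(image_bytes: bytes, prompt: str, mime: str = "image/jpeg",
                      max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat-completions body with the image inlined as a data URL."""
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime};base64,{image_b64}"}},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


# Placeholder bytes (a JPEG header) instead of a real image.
body = build_vlm_request(b"\xff\xd8\xff", "Is anyone standing in the loading zone?")
payload = json.dumps(body).encode("utf-8")

# Sending it requires a running NIM, for example:
# import urllib.request
# req = urllib.request.Request(NIM_URL, data=payload,
#                              headers={"Content-Type": "application/json"})
# print(json.load(urllib.request.urlopen(req))["choices"][0]["message"]["content"])
```

Keeping request construction separate from transport makes the payload easy to inspect and lets the same body be sent with any HTTP client, including the official OpenAI SDK pointed at the NIM's base URL.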

Explore NVIDIA NIM for Vision

Metropolis Microservices

Metropolis microservices provide powerful, customizable, cloud-native building blocks for developing vision AI agents, applications, and solutions. They’re built to run on NVIDIA cloud and data center GPUs, as well as the NVIDIA Jetson Orin™ edge AI platform.

Learn More

DeepStream SDK

NVIDIA DeepStream SDK is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing and video, audio, and image understanding. It’s ideal for vision AI developers, software partners, startups, and OEMs building intelligent video analytics (IVA) apps and services. DeepStream 8.0 will include multi-camera tracking and a low-code inference builder.
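Because DeepStream is built on GStreamer, an application is a pipeline of elements that decode, batch, infer, draw overlays, and render. The sketch below assembles a `gst-launch-1.0` pipeline description modeled on the single-stream layout shown in DeepStream's documentation (`nvv4l2decoder`, `nvstreammux`, `nvinfer`, `nvdsosd`). The file name and `nvinfer` config path are hypothetical placeholders, and element properties can vary across DeepStream versions and platforms, so treat the output as a starting point rather than a verified command.

```python
def deepstream_pipeline(video_path: str, infer_config: str,
                        width: int = 1920, height: int = 1080) -> str:
    """Return a gst-launch-1.0 description: one H.264 file through one nvinfer model."""
    elements = [
        f"filesrc location={video_path}",    # read the encoded file
        "h264parse",                          # parse the H.264 elementary stream
        "nvv4l2decoder",                      # hardware-accelerated decode
        # "m.sink_0" links the decoder to request pad sink_0 of the muxer named "m",
        # which batches frames (batch-size=1 for a single stream).
        f"m.sink_0 nvstreammux name=m batch-size=1 width={width} height={height}",
        f"nvinfer config-file-path={infer_config}",  # run the model on the batch
        "nvvideoconvert",                     # convert the format for the on-screen display
        "nvdsosd",                            # draw boxes and labels from metadata
        "fakesink",                           # discard output (headless run)
    ]
    return " ! ".join(elements)


print(deepstream_pipeline("sample_720p.h264", "config_infer_primary.txt", 1280, 720))
```

The printed string can be passed to `gst-launch-1.0` on a system with DeepStream installed; swapping `fakesink` for a display sink renders the annotated video instead.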

Learn More About DeepStream SDK

Augment Training With Simulation and Synthetic Data

NVIDIA Omniverse

NVIDIA Omniverse™ helps you integrate OpenUSD, NVIDIA RTX™ rendering technologies, and generative physical AI into existing software tools and simulation workflows to develop and test digital twins. Combined with your own software, it supports continuous development, testing, and optimization of AI-powered robot brains, Metropolis perception from cameras, equipment, and more.

Omniverse Replicator makes it easier to generate physically accurate 3D synthetic data at scale, or build your own synthetic data tools and frameworks. Bootstrap perception AI model training and achieve accurate Sim2Real performance without having to manually curate and label real-world data.

Learn More About Omniverse Replicator

NVIDIA Cosmos

NVIDIA Cosmos™ is a platform of state-of-the-art generative world foundation models (WFMs), advanced tokenizers, guardrails, and an accelerated data processing and curation pipeline. It's purpose-built to accelerate the development of physical AI systems.

Learn More About NVIDIA Cosmos

NVIDIA Physical AI Dataset

Ease data bottlenecks with this open-source dataset for training vision AI applications for industrial facilities, smart cities, robotics, and autonomous vehicle development. The unified collection is composed of validated data used to build NVIDIA physical AI solutions and is available for free to developers on Hugging Face.

Explore the NVIDIA Physical AI Dataset

NVIDIA Isaac Sim

Developers need training data that mimics what cameras would capture in complex, dynamic 3D spaces such as industrial facilities and smart cities. Action and Event Data Generation is a reference application on NVIDIA Isaac Sim™. It lets developers generate synthetic image and video data in a physically accurate virtual environment to train custom vision AI models.

The application includes tools to simulate actors such as humans and robots, create objects with domain randomization, and generate incident-based scenarios for various vision AI models. Its VLM scene captioning tool automatically generates image-caption pairs to accelerate the annotation process.
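Domain randomization varies scene properties such as object placement, lighting, and camera pose across generated frames, so a model trained on synthetic data does not overfit to one particular rendering. The sketch below shows only the sampling side in plain Python. The `SceneSample` fields and their ranges are hypothetical, and applying each sample to a scene would go through Isaac Sim or Omniverse Replicator APIs, which are not shown here.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneSample:
    """One randomized scene configuration (hypothetical fields and units)."""
    object_x_m: float
    object_y_m: float
    object_yaw_deg: float
    light_intensity: float
    camera_height_m: float


def sample_scenes(n: int, seed: int = 0) -> list[SceneSample]:
    """Draw n scene configurations; a fixed seed makes the synthetic dataset reproducible."""
    rng = random.Random(seed)  # private generator, independent of global random state
    return [
        SceneSample(
            object_x_m=rng.uniform(-5.0, 5.0),          # position on the floor plane
            object_y_m=rng.uniform(-5.0, 5.0),
            object_yaw_deg=rng.uniform(0.0, 360.0),     # object orientation
            light_intensity=rng.uniform(500.0, 3000.0), # arbitrary units
            camera_height_m=rng.uniform(2.5, 6.0),      # typical ceiling-mount range (assumed)
        )
        for _ in range(n)
    ]


for scene in sample_scenes(3, seed=42):
    print(scene)
```

Recording the seed alongside the generated images makes it possible to regenerate exactly the same randomized scenes later, for example when debugging a model failure on a specific synthetic frame.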

Get Started With Event and Actor Generation on Isaac Sim

Developer Resources

VLM Reference Workflows

Check out advanced workflows for building multimodal visual AI agents.

VLM Prompt Guide

Learn how to effectively prompt a VLM for single-image, multi-image, and video-understanding use cases.

Post-train NVIDIA Cosmos Reason

Learn how to fine-tune NVIDIA Cosmos Reason VLM for physical AI and robotics.

View all Metropolis technical blogs

Explore NVIDIA GTC Talks On-Demand

Develop, deploy, and scale AI-enabled video analytics applications with NVIDIA Metropolis.