NVIDIA DGX Cloud for Developers

NVIDIA DGX Cloud accelerates AI workloads in the cloud, including pretraining, fine-tuning, inference, and the deployment of physical and industrial AI applications.

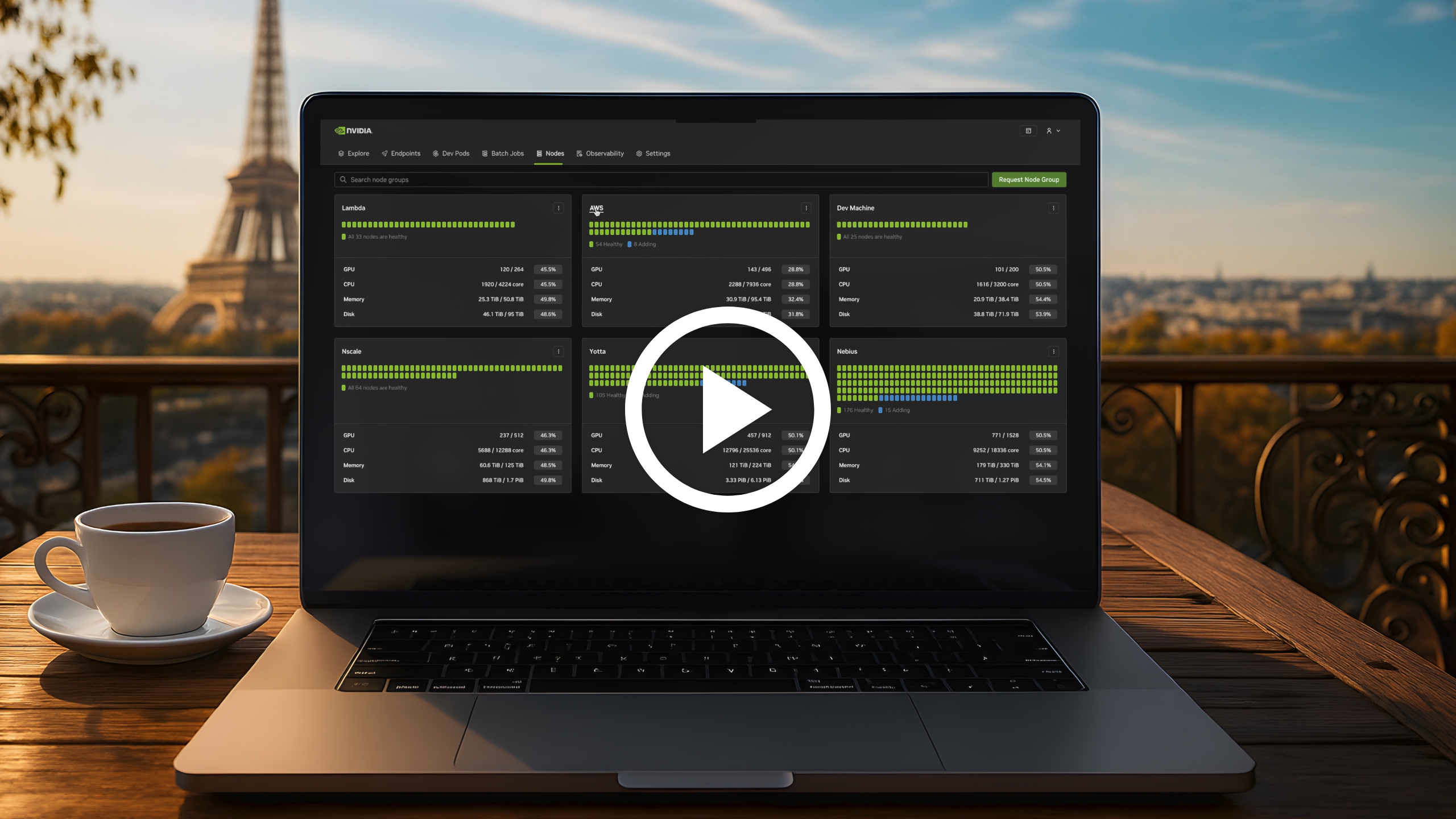

See a Part of DGX Cloud in Action

NVIDIA DGX Cloud Lepton, now available for early access, provides an integrated platform for development (SSH, Jupyter, VS Code), training and fine-tuning (batch jobs), and scalable inference (NVIDIA NIM™ endpoints).

How NVIDIA DGX Cloud Works

DGX Cloud offers a comprehensive suite of cloud-native solutions that include:

- NVIDIA DGX Cloud Lepton: Bring or discover compute from NVIDIA Cloud Partners (NCPs) and Cloud Service Providers (CSPs). Deploy across multiple clouds, decoupled from infrastructure, and focus on building apps from a single UI, using DGX Cloud Lepton’s integrated platform for development, training, fine-tuning, and scalable inference.

- NVIDIA DGX Cloud for CSPs: Build and fine-tune AI foundation models on scalable, optimized GPU infrastructure co-engineered with leading cloud providers, with flexible access to contiguous, preconfigured, high-performance clusters and the latest GPUs.

- NVIDIA Cloud Functions (NVCF): Scale deployment of AI workloads on a serverless AI inference platform that offers fully managed, auto-scaling, event-driven deployment across multiple clouds, on-prem, and existing compute environments.

- DGX Cloud Benchmarking: Get detailed metrics on end-to-end AI workload performance, scaling efficiency, precision format impact, and multi-GPU behavior to help gauge which platform can deliver the fastest time-to-train and what GPU scale is required to achieve an outcome within a given time period.

- NVIDIA Omniverse™ on DGX Cloud: Deploy streaming applications for industrial digitalization and physical AI simulations on a fully managed platform, utilizing optimized NVIDIA L40 GPUs that deliver NVIDIA RTX™ rendering and low-latency streaming directly to Chromium-based browsers or custom web-based applications.

- NVIDIA Cosmos™ Curator on DGX Cloud: Fine-tune NVIDIA world foundation models with proprietary data, then manage the entire video data pipeline on DGX Cloud with fully GPU-accelerated pipelines for 89x faster processing and 11x higher throughput for captioning—accelerating AI development for robotics, AV, and video AI applications.
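The DGX Cloud Benchmarking item in the list above mentions scaling efficiency and the GPU scale needed to finish within a given time period. The sketch below shows one common way such figures are computed from measured throughput. It is an illustration only: the function names, the tokens-per-second metric, and all numbers are hypothetical and are not taken from DGX Cloud Benchmarking recipes or tooling.

```python
import math


def scaling_efficiency(base_gpus, base_throughput, scaled_gpus, scaled_throughput):
    """Fraction of ideal linear speedup achieved when scaling up.

    Throughput can be any consistent unit (here: tokens per second).
    """
    ideal_throughput = base_throughput * (scaled_gpus / base_gpus)
    return scaled_throughput / ideal_throughput


def time_to_train_hours(total_tokens, tokens_per_second):
    """Wall-clock hours to process total_tokens at a sustained throughput."""
    return total_tokens / tokens_per_second / 3600


def gpus_needed(total_tokens, per_gpu_tokens_per_second, efficiency, deadline_hours):
    """Smallest GPU count that meets the deadline.

    Simplifying assumption: efficiency stays constant at the target scale,
    which real clusters rarely guarantee.
    """
    required_throughput = total_tokens / (deadline_hours * 3600)
    effective_per_gpu = per_gpu_tokens_per_second * efficiency
    return math.ceil(required_throughput / effective_per_gpu)


if __name__ == "__main__":
    # Hypothetical measurements: 8 GPUs at 80k tokens/s, 64 GPUs at 560k tokens/s.
    eff = scaling_efficiency(8, 80_000, 64, 560_000)
    hours = time_to_train_hours(1e12, 560_000)
    count = gpus_needed(1e12, 10_000, eff, deadline_hours=240)
    print(f"scaling efficiency: {eff:.3f}")
    print(f"time to train on 64 GPUs: {hours:.1f} h")
    print(f"GPUs needed for a 240 h deadline: {count}")
```

In practice, efficiency tends to change with cluster size, so an estimate like this is a starting point to check against measurements at the target scale.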

NVIDIA DGX Cloud Lepton

Build or deploy AI applications across multi-cloud environments through a unified experience.

NVIDIA DGX Cloud on CSPs

Build and fine-tune models with a turnkey, full-stack optimized platform on leading cloud providers with flexible term lengths.

NVIDIA Cloud Functions (NVCF)

Deploy AI workloads with auto-scaling, cost-efficient GPU utilization, and multi-cloud flexibility.

NVIDIA DGX Cloud Benchmarking

Benchmarking Service

Follow evolving performance optimizations and workload-specific recipes to get the most out of AI infrastructure.

NVIDIA Omniverse on DGX Cloud

Scale deployment of streaming applications for industrial digitalization and physical AI simulation.

NVIDIA Cosmos Curator on DGX Cloud

Efficiently process, fine-tune, and deploy video and world foundation models with DGX Cloud managed services.

Get Started With NVIDIA DGX Cloud

Provision and operate environments optimized for AI training, fine-tuning, and inference on NVIDIA DGX Cloud.

NVIDIA DGX Cloud Lepton

Tap into global GPU compute to discover, procure, develop, customize, and deploy AI applications across multiple cloud providers.

Sign Up

NVIDIA DGX Cloud on CSPs

Access optimized accelerated computing clusters for AI training and fine-tuning on any leading cloud.

Learn More About DGX Cloud on CSPs

NVIDIA Cloud Functions

Easily package, deploy, and scale inference pipelines or data preprocessing workflows in containers optimized for NVIDIA GPUs.

NVIDIA DGX Cloud Benchmarking

Access benchmarking recipes, tools, and services to identify AI workload performance gaps and optimize any NVIDIA AI infrastructure.

NVIDIA Omniverse on DGX Cloud

Scale deployment of streaming applications for industrial digitalization and physical AI simulations on one fully managed platform.

Learn More About Omniverse on DGX Cloud

NVIDIA Cosmos Curator on NVIDIA DGX Cloud

Efficiently process, fine-tune, and deploy world foundation models on a managed platform for large-scale video curation and model customization.

Explore GitHub

NVIDIA DGX Cloud Starter Kits

Start experimenting on build.nvidia.com, accelerated by DGX Cloud, or download DGX Cloud Benchmarking recipes to optimize your workload on NVIDIA GPUs.

AI Development, Customization, and Deployment Across Clouds

DGX Cloud Lepton enables GPU provisioning in specific regions and supports the full AI development lifecycle, including training, fine-tuning, and inference across multi-cloud environments.

Multi-Node AI Training and Fine-Tuning Platform

With DGX Cloud Create, take cloud-native AI training to leading clouds with the latest NVIDIA AI architecture and software.

Auto-Scaled Deployment on NVIDIA GPUs

Package and deploy inference pipelines or data preprocessing workflows in containers optimized for NVIDIA GPUs, without worrying about the underlying infrastructure.

NVIDIA DGX Cloud Benchmarking for AI Workloads

Produce AI training and inference performance results from a range of AI models, including Llama and DeepSeek, with recipes provided by DGX Cloud Benchmarking.

Scalable Deployment of Streamed Applications for Physical AI

Stream OpenUSD applications and digital twins directly on NVIDIA Omniverse from a fully managed platform.

Large-Scale Video Curation

Efficiently process, fine-tune, and deploy video and world foundation models with NVIDIA Cosmos Curator accelerated by NVIDIA DGX Cloud.

DGX Cloud Learning Library

More Resources

Ethical AI

NVIDIA believes trustworthy AI is a shared responsibility, and we have established policies and practices to support the development of AI across a wide array of applications. When downloading or using this model in accordance with our terms of service, developers should work with their supporting model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.

For more detailed information on ethical considerations for this model, please see the Model Card++ Explainability, Bias, Safety & Security, and Privacy Subcards. Please report security vulnerabilities or NVIDIA AI Concerns here.