NVIDIA Isaac ROS

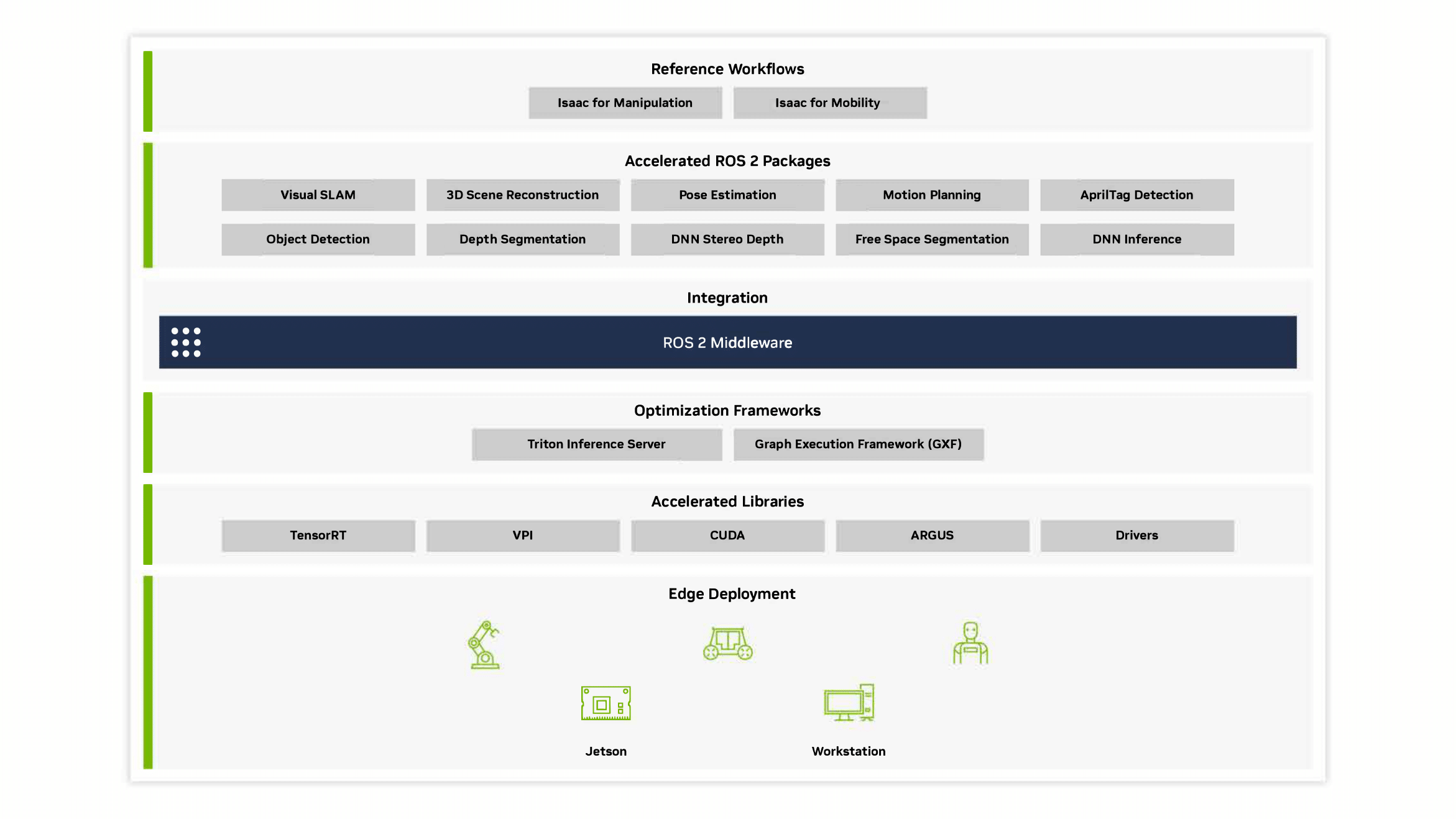

NVIDIA Isaac™ ROS (Robot Operating System) is a collection of NVIDIA® CUDA®-accelerated computing packages and AI models designed to streamline and expedite the development of advanced AI robotics applications.

How NVIDIA Isaac ROS Works

Isaac ROS gives you a powerful toolkit for building robotic applications. It offers ready-to-use packages for common tasks like navigation and perception, uses NVIDIA frameworks for optimal performance, and can be deployed on both workstations and embedded systems like NVIDIA Jetson™.

Quick Start Guide

Learn what you need to get started and how to set up the Isaac ROS suite to tap into the power of NVIDIA acceleration on NVIDIA Jetson.

Introductory Talk

Isaac ROS offers modular packages for robotic perception and easy integration into existing ROS 2-based applications. This talk covers Isaac ROS GEMs and how to use multiple GEMs in your robotics pipeline.

Introductory Webinars

Check out a series of Isaac ROS webinars covering various topics, from running your own ROS 2 benchmarks to harnessing the power of NVIDIA NITROS.

What’s New—Isaac ROS 3.2

Check out the latest Isaac ROS update to boost your robot’s capabilities with advanced AI-based perception and manipulation.

Key Features

ROS is a trademark of Open Robotics

Open Ecosystem

Built on ROS

NVIDIA Isaac ROS is built on the open-source ROS 2™ software framework. This means the millions of developers in the ROS community can easily take advantage of NVIDIA-accelerated libraries and AI models to fast track their AI robot development and deployment workflows.

Hardware Acceleration

NVIDIA Isaac Transport for ROS

NITROS (NVIDIA Isaac Transport for ROS) is the NVIDIA implementation of ROS 2 type adaptation and type negotiation. NITROS pipelines are ROS processing pipelines made up of Isaac ROS hardware-accelerated modules (a.k.a. GEMs). NITROS lets ROS 2 applications take full advantage of GPU hardware acceleration, potentially achieving higher performance and more efficient use of computing resources across the entire ROS 2 graph.
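
To make the idea of type negotiation concrete, here is a minimal conceptual sketch in plain Python. It is not the NITROS API (NITROS is built on the ROS 2 type adaptation and type negotiation features, REP-2007 and REP-2009), and the function name `negotiate_format` and the format strings are hypothetical, chosen only for illustration. The sketch assumes each endpoint advertises the data formats it supports and that the pair settles on the first publisher-preferred format the subscriber also accepts, falling back to a standard message type otherwise.

```python
from typing import Sequence


def negotiate_format(
    publisher_formats: Sequence[str],
    subscriber_formats: Sequence[str],
    fallback: str = "sensor_msgs/Image",
) -> str:
    """Return the first publisher-preferred format the subscriber accepts.

    Formats are listed in order of preference. If the two endpoints share
    no format, the standard (CPU-side) message type is used instead.
    """
    accepted = set(subscriber_formats)
    for fmt in publisher_formats:
        if fmt in accepted:
            return fmt
    return fallback


# Hypothetical format names, for illustration only.
accelerated_publisher = ["gpu_image_nv12", "gpu_image_rgb8", "sensor_msgs/Image"]
accelerated_subscriber = ["gpu_image_rgb8", "sensor_msgs/Image"]
standard_subscriber = ["sensor_msgs/Image"]

# Two accelerated nodes agree on a GPU-resident format.
print(negotiate_format(accelerated_publisher, accelerated_subscriber))

# A standard ROS 2 node still receives an ordinary message.
print(negotiate_format(accelerated_publisher, standard_subscriber))
```

The fallback branch is the design point worth noticing: under this assumption, accelerated nodes can exchange data without leaving the GPU, while nodes that know nothing about the accelerated formats keep working with ordinary messages.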


High-Throughput Perception

Isaac ROS delivers a rich collection of individual ROS packages (GEMs) and complete pipelines (NITROS) optimized for NVIDIA GPUs and NVIDIA Jetson™ platforms. This helps you achieve more with reduced development times.

Modular, Flexible Packages

Plug and play with a selection of packages—for computer vision, image processing, robust object detection, collision detection, and trajectory optimization—and easily go to production.

The Power of NVIDIA AI

Isaac ROS is compatible with all ROS 2 nodes, making it easier to integrate into existing applications. Develop robotic applications using NVIDIA AI and pretrained models from robotics-specific datasets for faster development.

Getting Started on NVIDIA Isaac ROS

System Setup

Tap into NVIDIA-accelerated libraries and AI models to speed up your AI robot workflows. Check your system requirements and set up your system.

Plug-and-Play ROS Packages

Read through the Isaac ROS concepts and easily move to production with a selection of advanced packages.

Deployment on the Edge With Partner Kits

NVIDIA Jetson provides hardware acceleration, optimized AI software, a robust ecosystem, and energy efficiency, making it an ideal platform to deploy your Isaac ROS applications. Nova Carter and the Nova Orin™ developer platforms also help you accelerate AMR development.

NVIDIA Isaac for Manipulation

CUDA-accelerated libraries and AI models give you a faster, easier way to develop AI-powered robotic arms that can seamlessly perceive, understand, and interact with their environments.

NVIDIA Isaac for Mobility

Accelerate the development of advanced autonomous mobile robots (AMRs) that can perceive, localize, and operate in unstructured environments like warehouses or factories.

High-Performance Perception With NITROS Pipelines

ROS 2 graphs using NITROS-based, NVIDIA-accelerated Isaac ROS packages can significantly increase performance.

You can find a complete performance summary here.

| Node | Input Size | AGX Orin | Orin NX | x86_64 w/ RTX 4090 |
|---|---|---|---|---|
| AprilTag Node | 720p | 249 fps 4.5 ms @ 30 Hz | 116 fps 9.3 ms @ 30 Hz | 596 fps 0.97 ms @ 30 Hz |
| Freespace Segmentation Node | 576p | 2120 fps 1.7 ms @ 30 Hz | 2490 fps 1.6 ms @ 30 Hz | 3500 fps 0.52 ms @ 30 Hz |
| Depth Segmentation Node | 576p | 45.8 fps 79 ms @ 30 Hz | 28.2 fps 99 ms @ 30 Hz | 105 fps 25 ms @ 30 Hz |
| TensorRT Node PeopleSemSegNet | 544p | 460 fps 4.1 ms @ 30 Hz | 348 fps 6.1 ms @ 30 Hz | - |
| Triton Node PeopleSemSegNet | 544p | 304 fps 4.8 ms @ 30 Hz | 206 fps 6.5 ms @ 30 Hz | - |
| DNN Stereo Disparity Node Full | 576p | 103 fps 12 ms @ 30 Hz | 42.1 fps 26 ms @ 30 Hz | 350 fps 2.3 ms @ 30 Hz |
| H.264 Decoder Node | 1080p | 197 fps 8.2 ms @ 30 Hz | - | 596 fps 4.2 ms @ 30 Hz |
| H.264 Encoder Node I-frame Support | 1080p | 402 fps 13 ms @ 30 Hz | - | 409 fps 3.4 ms @ 30 Hz |
| H.264 Encoder Node P-frame Support | 1080p | 473 fps 11 ms @ 30 Hz | - | 596 fps 2.1 ms @ 30 Hz |
| Nvblox Node | - | 4.87 fps 35.9 ms | 4.95 fps -1.43 ms | 4.95 fps 195 ms |
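
One way to read these figures is against the time budget of a 30 Hz sensor. The short Python sketch below works through that arithmetic for the AprilTag Node row. It assumes the "fps" value is the node's maximum throughput and the "ms @ 30 Hz" value is its latency at a 30 Hz input rate; the helper names `frame_budget_ms` and `throughput_headroom` are hypothetical and exist only for this example.

```python
def frame_budget_ms(rate_hz: float) -> float:
    """Time available per frame at a given input rate, in milliseconds."""
    return 1000.0 / rate_hz


def throughput_headroom(max_fps: float, input_rate_hz: float) -> float:
    """How many times faster than the input rate the node can run."""
    return max_fps / input_rate_hz


# (throughput in fps, latency in ms) copied from the AprilTag Node row (720p).
apriltag = {
    "AGX Orin": (249.0, 4.5),
    "Orin NX": (116.0, 9.3),
    "x86_64 w/ RTX 4090": (596.0, 0.97),
}

INPUT_RATE_HZ = 30.0
budget = frame_budget_ms(INPUT_RATE_HZ)  # about 33.3 ms per frame

for platform, (fps, latency_ms) in apriltag.items():
    headroom = throughput_headroom(fps, INPUT_RATE_HZ)
    share = latency_ms / budget
    print(
        f"{platform}: {headroom:.1f}x the input rate, "
        f"latency uses {share:.0%} of the {budget:.1f} ms frame budget"
    )
```

Under these assumptions, a headroom well above 1x suggests the node leaves compute time free for the rest of the graph when fed by a single 30 Hz camera.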

Starter Kits

Start developing your robotics and AI applications on Isaac ROS with the help of forums, release notes, and comprehensive documentation.

Localization and Mapping

Isaac ROS Visual SLAM provides a high-performance, best-in-class ROS 2 package for VSLAM (visual simultaneous localization and mapping).

3D Scene Reconstruction

Isaac ROS Nvblox uses RGB-D data to create a dense 3D map, including unforeseen obstacles, to generate a temporal costmap for navigation.

Pose Estimation and Tracking

NVIDIA’s FoundationPose is a state-of-the-art foundation model for 6D pose estimation and tracking of novel objects.

Motion Planning

Isaac ROS cuMotion is an NVIDIA CUDA-accelerated library for solving robot motion planning problems at scale by running multiple trajectory optimizations simultaneously to return the best solution.
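
The idea of running several optimizations and keeping the best result can be illustrated with a toy multi-start optimizer in plain Python. This is not cuMotion code and does not use its API: the one-dimensional cost function, the gradient-descent routine, and the names `descend` and `best_of_seeds` are all assumptions made for this sketch. The seeds are also processed one after another here, whereas a GPU implementation would evaluate them in parallel.

```python
from typing import Iterable, Tuple


def cost(x: float) -> float:
    """Toy non-convex cost with one local and one global minimum."""
    return x**4 - 3.0 * x**2 + x


def cost_gradient(x: float) -> float:
    """Analytic derivative of the toy cost."""
    return 4.0 * x**3 - 6.0 * x + 1.0


def descend(seed: float, step: float = 0.01, iterations: int = 500) -> float:
    """Run plain gradient descent from a single starting point."""
    x = seed
    for _ in range(iterations):
        x -= step * cost_gradient(x)
    return x


def best_of_seeds(seeds: Iterable[float]) -> Tuple[float, float]:
    """Optimize from every seed and return the lowest-cost solution."""
    candidates = [descend(seed) for seed in seeds]
    best = min(candidates, key=cost)
    return best, cost(best)


# Seeds on both sides of the cost landscape; some end in the local minimum.
solution, solution_cost = best_of_seeds([-2.0, -0.5, 0.5, 2.0])
print(f"best x = {solution:.3f}, cost = {solution_cost:.3f}")
```

A single run started at a positive seed settles in the shallower minimum of this toy cost, which is why comparing the results from many starting points can return a better solution than any one run alone.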

Testing and Validation in Simulation

Virtually train, test, and validate robotics systems using NVIDIA Isaac Sim and NVIDIA Isaac Lab.

Isaac ROS Learning Library

More Resources

Get Started

Accelerate your robotic application development and get started today with NVIDIA Isaac ROS.