Deep learning models are making great strides in research papers and industrial deployments alike, but joining this frontier is easier with a guide and a toolkit. This post orients researchers, engineers, and machine learning practitioners on how to incorporate deep learning into their own work. It pairs an introduction to model structure and learned features, for general understanding, with an overview of the Caffe deep learning framework, for practical know-how. References highlight recent and historical research for perspective on current progress. The framework survey points out key elements of the Caffe architecture, reference models, and worked examples. Through collaboration with NVIDIA, drop-in integration of the cuDNN library accelerates Caffe models. Follow this post to join the active deep learning community around Caffe.

Automating Perception by Deep Learning

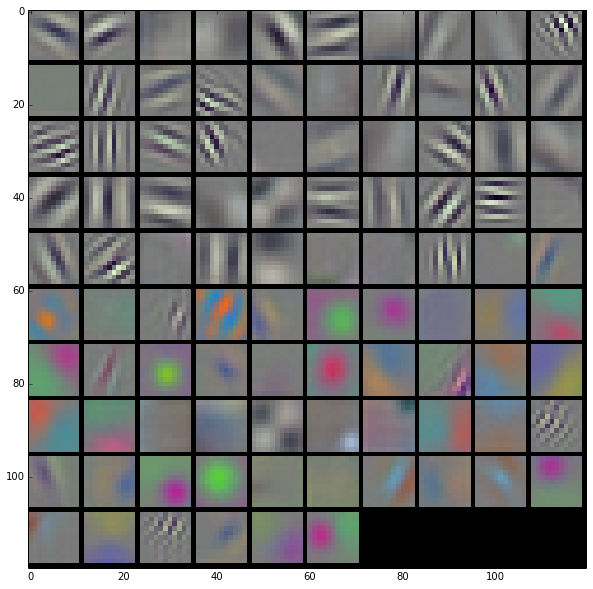

Deep learning is a branch of machine learning that is advancing the state of the art for perceptual problems like vision and speech recognition. We can pose these tasks as mapping concrete inputs such as image pixels or audio waveforms to abstract outputs like the identity of a face or a spoken word. The “depth” of deep learning models comes from composing functions into a series of transformations from input, through intermediate representations, and on to output. The overall composition gives a deep, layered model in which each layer encodes progress from low-level details to high-level concepts. This yields a rich, hierarchical representation of the perceptual problem. Figure 1 shows the kinds of visual features captured in the intermediate layers of the model between the pixels and the output. A simple classifier can recognize a category from these learned features, whereas a classifier on the raw pixels faces a far more complex decision.

![Figure 1: Visualization of deep features by example. Each 3 x 3 array shows the nine image patches from a standard data set that maximize the response of a given feature from a low-level (left) and high-level (right) layer of the popular Zeiler-Fergus network [8]. Similarly rich features are found in concurrent work by Girshick et al. [4]. The low-level features capture color, simple shapes, and similar textures. The high-level features respond to parts like eyes and wheels, flowers in different colors, and text in various styles.](/blog/wp-content/uploads/2014/10/caffe_dnn_low-high-624x323.png)

![Figure 2: Projection of low-level “shallow” features (left) and high-level “deep” features (right) from a vision model, where related objects group together in the deep representation. Points that are close in this visualization are close in the model representation. Each point represents the feature extracted from an image, and the color marks the general category of its contents. The model was trained on precise object classes like “espresso” and “chickadee” but learned features that group dogs, birds, and even animals as a whole together despite their visual differences [2].](/blog/wp-content/uploads/2014/10/caffe_feature_projection-624x326.png)

Convolutional Neural Networks (CNNs) are a particular type of deep model responsible for many exciting recent results in computer vision. Originally proposed in the 1980s by Kunihiko Fukushima as the Neocognitron [3] and then refined by Yann LeCun and collaborators as LeNet [7], CNNs gained fame through LeNet's success on the challenging task of handwritten digit recognition in 1989 and the comprehensive 1998 journal paper that followed. It took a couple of decades for CNNs to generate another breakthrough in computer vision, beginning with AlexNet [6], which won the worldwide ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012.

In a CNN, the key computation is the convolution of a feature detector with an input signal. Convolution with a collection of filters, like the learned filters in Figure 3, enriches the representation: at the first layer of a CNN, the features go from individual pixels to simple primitives like horizontal and vertical lines, circles, and patches of color. Unlike conventional single-channel image processing filters, CNN filters are computed across all of the input channels. Because the same filter is applied at every position, convolution is translation-equivariant: it yields a high response wherever its feature appears, and shifting the input shifts the response by the same amount.
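To make the multi-channel computation concrete, here is a minimal pure-Python sketch (not Caffe code) of one filter applied to a two-channel input. Like most CNN frameworks it computes a cross-correlation (the filter is not flipped), with stride 1 and no padding; the toy input, the edge-detecting filter, and the helper names `conv2d` and `shift_right` are made up for illustration. Shifting the input one pixel to the right moves the response by one column.

```python
# Minimal multi-channel 2D convolution, computed as cross-correlation
# (no filter flip), stride 1, no padding ("valid" output size).

def conv2d(image, kernel):
    """image: [C][H][W] nested lists; kernel: [C][K][K]. Returns [H-K+1][W-K+1]."""
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    k = len(kernel[0])
    out_h, out_w = height - k + 1, width - k + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            total = 0.0
            # One filter response sums over every input channel, not just one.
            for c in range(channels):
                for i in range(k):
                    for j in range(k):
                        total += image[c][y + i][x + j] * kernel[c][i][j]
            out[y][x] = total
    return out


def shift_right(image, dx):
    """Shift every channel right by dx pixels, filling with zeros on the left."""
    return [[[0.0] * dx + row[:len(row) - dx] for row in channel] for channel in image]


# A 2-channel 6 x 6 input with a vertical edge in channel 0 only.
image = [
    [[0, 0, 1, 1, 1, 1] for _ in range(6)],
    [[0.0] * 6 for _ in range(6)],
]
# A 2 x 2 vertical-edge detector that reads channel 0 and ignores channel 1.
kernel = [
    [[-1, 1], [-1, 1]],
    [[0, 0], [0, 0]],
]

response = conv2d(image, kernel)
shifted = conv2d(shift_right(image, 1), kernel)
print(response[0])  # [0.0, 2.0, 0.0, 0.0, 0.0]
print(shifted[0])   # [0.0, 0.0, 2.0, 0.0, 0.0]
```

The peak sits where the edge is and moves with it, which is the property that lets one learned filter detect its feature anywhere in the image.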

Caffe: a Fast Open-Source Framework for Deep Learning

The Caffe framework from UC Berkeley is designed to let researchers create and explore CNNs and other Deep Neural Networks (DNNs) easily, while delivering the high speed needed for both experiments and industrial deployment [5]. Caffe provides state-of-the-art modeling for advancing and deploying deep learning in research and industry, with support for a wide variety of architectures and efficient implementations of prediction and learning.

Caffe models and optimization are defined by plain-text schemas for ease of experimentation. For instance, a convolutional layer with 20 filters of size 5 x 5 is defined by the following text:

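```
layers {
  name: "conv1"          # unique name of this layer
  type: CONVOLUTION      # layer type from the LayerType enumeration
  bottom: "data"         # input blob
  top: "conv1"           # output blob
  convolution_param {
    num_output: 20       # number of learned filters
    kernel_size: 5       # each filter is 5 x 5
    stride: 1            # step between filter applications
  }
}
```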
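The conv1 definition fixes the output depth and filter size; the spatial size of its output follows from the input. The sketch below does that arithmetic under stated assumptions: a single-channel 28 x 28 input (the size of the MNIST digits used in the LeNet example), no padding, and one bias per filter. The helper names are hypothetical, and the round-down formula is the usual convolution output-size rule.

```python
# Output-size and parameter-count arithmetic for the conv1 layer above.
# Assumptions: single-channel 28 x 28 input, pad = 0, one bias per filter.

def conv_output_size(in_size, kernel_size, stride=1, pad=0):
    """Spatial output size of a convolution, rounding down."""
    return (in_size + 2 * pad - kernel_size) // stride + 1


def conv_param_count(in_channels, num_output, kernel_size, bias=True):
    """Weights (one kernel_size x kernel_size slice per input channel, per
    output filter) plus an optional bias per output filter."""
    weights = num_output * in_channels * kernel_size * kernel_size
    return weights + (num_output if bias else 0)


side = conv_output_size(28, kernel_size=5, stride=1)
print(side)                        # 24
print((20, side, side))            # (20, 24, 24): 20 feature maps of 24 x 24
print(conv_param_count(1, 20, 5))  # 520 = 20 * 1 * 5 * 5 weights + 20 biases
```

Changing `stride` or adding `pad` in this helper shows how those settings trade spatial resolution against computation without any manual bookkeeping in the layer definition itself.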

Every model layer is defined in this way. The LeNet tutorial included in the Caffe examples walks through defining and training Yann LeCun’s famous model for handwritten digit recognition [7]. It can reach 99% accuracy in less than a minute with GPU training.

Here’s a first sip of Caffe coding that loads a model and classifies an image in Python.

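```python
import caffe

# Hypothetical file names: substitute your own model definition, weights, and image.
model_definition = 'deploy.prototxt'
model_parameters = 'model.caffemodel'
image = caffe.io.load_image('example.jpg')

net = caffe.Classifier(model_definition, model_parameters)
net.set_phase_test()  # test = inference, train = learning
net.set_mode_gpu()    # gpu or cpu with the same model
scores = net.predict([image])  # one row of class scores per input image
```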

Caffe includes a general `caffe.Net` interface for working with any Caffe model. As a next step, check out the worked example of feature extraction and visualization.
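`net.predict` returns one row of class scores per input image, and a common next step is ranking them. This small plain-Python sketch shows top-k selection; the scores and label names are made up, standing in for `scores[0]` and a real label list, and `top_k` is a hypothetical helper rather than part of Caffe.

```python
# Rank one image's class scores and keep the k best labels.

def top_k(class_scores, labels, k=5):
    """Return the k (label, score) pairs with the highest scores, best first."""
    ranked = sorted(range(len(class_scores)), key=lambda i: class_scores[i], reverse=True)
    return [(labels[i], class_scores[i]) for i in ranked[:k]]


labels = ["espresso", "chickadee", "tabby cat", "golden retriever"]
class_scores = [0.05, 0.70, 0.15, 0.10]
print(top_k(class_scores, labels, k=2))  # [('chickadee', 0.7), ('tabby cat', 0.15)]
```

Because it only indexes into the scores, the same helper works on a NumPy row as well as a Python list.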

The Caffe Layer Architecture

In Caffe, the code for a deep model follows its layered and compositional structure for modularity. A Net is composed of Layers, and the Net delegates its computations to those Layers. All deep model computations are framed as layer types like convolution, pooling, nonlinearities, and so on. Encapsulating the details of each operation in a layer provides modularity: a layer type is implemented once and then instantiated wherever it is needed. To afford this encapsulation, the layers must follow a common protocol:

- Setup and Reshape: the layer setup does one-time initialization like loading parameters while reshape handles the input-output configuration of the layer so that the user does not have to do dimension bookkeeping.

- Forward: compute the output given the input.

- Backward: given the gradient of the loss with respect to the output, compute the gradient with respect to the input and, if the layer has parameters, with respect to those parameters.
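The protocol can be illustrated with a toy, pure-Python model. The class and method names are hypothetical stand-ins chosen for readability; Caffe's actual Layer is a C++ class operating on Blobs with bottom and top vectors. The sketch shows a Net that only sequences its layers, a layer with one learned parameter, and backward passes that turn the gradient with respect to each output into gradients with respect to inputs and parameters.

```python
# Toy model of the Setup / Reshape / Forward / Backward layer protocol.

class Layer:
    def setup(self):
        """One-time initialization, e.g. creating learned parameters."""

    def reshape(self, bottom):
        """Size the output from the input; elementwise layers keep the length."""
        self.top_size = len(bottom)

    def forward(self, bottom):
        raise NotImplementedError

    def backward(self, top_diff, bottom):
        """Given dLoss/dOutput, return dLoss/dInput (and store parameter grads)."""
        raise NotImplementedError


class ScaleLayer(Layer):
    """y_i = w * x_i with a single learned scalar parameter w."""

    def setup(self):
        self.w = 2.0       # fixed initial value, for a reproducible example
        self.w_diff = 0.0  # gradient of the loss with respect to w

    def forward(self, bottom):
        return [self.w * x for x in bottom]

    def backward(self, top_diff, bottom):
        self.w_diff = sum(d * x for d, x in zip(top_diff, bottom))  # dL/dw
        return [d * self.w for d in top_diff]                       # dL/dx_i


class ReLULayer(Layer):
    """y_i = max(0, x_i); no parameters, so the inherited setup is a no-op."""

    def forward(self, bottom):
        return [max(0.0, x) for x in bottom]

    def backward(self, top_diff, bottom):
        return [d if x > 0 else 0.0 for d, x in zip(top_diff, bottom)]


class Net:
    """Sequences its layers; every computation is delegated to them."""

    def __init__(self, layers):
        self.layers = layers
        for layer in layers:
            layer.setup()

    def forward(self, data):
        self.bottoms = []  # each layer's input, kept for the backward pass
        x = data
        for layer in self.layers:
            layer.reshape(x)
            self.bottoms.append(x)
            x = layer.forward(x)
        return x

    def backward(self, top_diff):
        diff = top_diff
        for layer, bottom in zip(reversed(self.layers), reversed(self.bottoms)):
            diff = layer.backward(diff, bottom)
        return diff


net = Net([ScaleLayer(), ReLULayer()])
out = net.forward([-1.0, 0.5, 3.0])
grad = net.backward([1.0, 1.0, 1.0])  # as if dLoss/dOutput were all ones
print(out)                   # [0.0, 1.0, 6.0]
print(grad)                  # [0.0, 2.0, 2.0]
print(net.layers[0].w_diff)  # 3.5
```

The Net never touches the math itself, which is what lets a new layer type slot in by implementing only these four methods.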

The Layer class follows this protocol in its public method definitions. Each layer type is a derived class that declares these same methods, as in the cuDNN convolution class declaration. The implementations are split across .cpp and .cu files. For example, the CuDNNConvolutionLayer Setup / Reshape methods are implemented in cudnn_conv_layer.cpp, and its GPU-mode Forward / Backward methods are implemented in cudnn_conv_layer.cu. CuDNNConvolutionLayer is a strategy class for ConvolutionLayer: it does not declare the {Forward,Backward}_cpu variants of Forward / Backward, so it inherits the standard Caffe CPU mode instead. The GPU-mode CUDA code is optional for CPU-only layers or prototyping; without it, GPU mode falls back to the CPU implementation, with automatic communication between the host and device as needed. All layer classes follow this .cpp + .cu arrangement.
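The inheritance and fallback rules in that paragraph can be modeled in a few lines. This is a hypothetical Python analogue, not Caffe source: `forward_cpu` and `forward_gpu` stand in for the C++ Forward_cpu and Forward_gpu methods, the layers return tags instead of doing real math, and host-device transfers are not modeled.

```python
# Toy mode dispatch: GPU mode uses forward_gpu if a class provides one,
# otherwise it falls back to forward_cpu.

class Layer:
    def forward(self, bottom, mode):
        if mode == "gpu":
            return self.forward_gpu(bottom)
        return self.forward_cpu(bottom)

    def forward_gpu(self, bottom):
        # Default when a layer has no GPU code: reuse the CPU implementation.
        return self.forward_cpu(bottom)


class ConvolutionLike(Layer):
    def forward_cpu(self, bottom):
        return ("cpu", sum(bottom))


class CuDNNConvolutionLike(ConvolutionLike):
    # Strategy subclass: overrides only the GPU path, inherits forward_cpu.
    def forward_gpu(self, bottom):
        return ("gpu", sum(bottom))


class CPUOnlyLayer(Layer):
    def forward_cpu(self, bottom):
        return ("cpu", max(bottom))


data = [1, 2, 3]
print(CuDNNConvolutionLike().forward(data, "gpu"))  # ('gpu', 6)
print(CuDNNConvolutionLike().forward(data, "cpu"))  # ('cpu', 6)
print(CPUOnlyLayer().forward(data, "gpu"))          # ('cpu', 3)
```

The strategy subclass only adds what differs, and a CPU-only layer still runs in a GPU-mode network.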

Once implemented, the layer needs to be included in the model schema. This schema is coded in the Protocol Buffer format in the caffe.proto master definition. To include a layer in the schema:

- Register its type in the LayerType enumeration, like CONVOLUTION.

- Define a field to hold the layer’s configuration parameters, if any, like the convolution_param field.

- Define the actual layer parameter message, like ConvolutionParameter.
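As a rough Python analogue of how those three schema pieces relate (the real definitions are Protocol Buffer messages in caffe.proto, not Python), this sketch uses an Enum for the type registry, a dataclass for the parameter message, and an optional field on the layer definition, then mirrors the conv1 example from earlier. The `check_layer` helper and the `REQUIRED_PARAM_FIELD` table are invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class LayerType(Enum):
    """Step 1: the enumeration of registered layer types."""
    CONVOLUTION = auto()
    RELU = auto()


@dataclass
class ConvolutionParameter:
    """Step 3: the layer's own parameter message."""
    num_output: int
    kernel_size: int
    stride: int = 1


@dataclass
class LayerParameter:
    """A layer definition; step 2 adds the field holding its parameters."""
    name: str
    type: LayerType
    bottom: str
    top: str
    convolution_param: Optional[ConvolutionParameter] = None


# Hypothetical table: which parameter field each layer type requires.
REQUIRED_PARAM_FIELD = {LayerType.CONVOLUTION: "convolution_param"}


def check_layer(layer: LayerParameter) -> bool:
    """Raise if a layer's type requires a parameter field that was left unset."""
    field = REQUIRED_PARAM_FIELD.get(layer.type)
    if field is not None and getattr(layer, field) is None:
        raise ValueError(f"{layer.name}: {layer.type.name} layer needs {field}")
    return True


conv1 = LayerParameter(
    name="conv1", type=LayerType.CONVOLUTION, bottom="data", top="conv1",
    convolution_param=ConvolutionParameter(num_output=20, kernel_size=5, stride=1),
)
relu1 = LayerParameter(name="relu1", type=LayerType.RELU, bottom="conv1", top="conv1")
print(check_layer(conv1), check_layer(relu1))  # True True
```

Keeping each layer's configuration in its own message means a layer type without settings, like the ReLU here, simply leaves that field unset.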

While this layer crash course identifies the main points, the Caffe development wiki has a full guide for layer development.

Drop-in Acceleration with cuDNN

Deep networks require intense computation, so Caffe has taken advantage of both GPU and CPU processing from the project’s beginning. A single machine with GPU(s) can train state-of-the-art models quickly without the engineering overhead or cost of a CPU cluster. The bundled Caffe reference models and many experiments were trained and run over millions of iterations and images on NVIDIA GPUs. On a single K40 GPU, Caffe can classify over 60 million images a day with the ILSVRC12-winning AlexNet model [6] and the CaffeNet variant. This level of performance is crucial for exploring new models and tasks.
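For a sense of scale, the 60-million-images-a-day figure converts to a per-second rate and an average time per image. This is arithmetic on the quoted number only; it assumes uniform throughput over a full day and says nothing about batch size or single-image latency.

```python
# Back-of-the-envelope rates implied by "over 60 million images a day".
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

images_per_day = 60_000_000
images_per_second = images_per_day / SECONDS_PER_DAY
ms_per_image = SECONDS_PER_DAY * 1000 / images_per_day  # average, not latency

print(round(images_per_second))  # 694
print(round(ms_per_image, 2))    # 1.44
```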

The new cuDNN library provides implementations, tuned and tested by NVIDIA, of the most computationally demanding routines needed for CNNs. For the CaffeNet model, cuDNN speeds up Caffe by 1.38x for training and 1.50x for testing (Table 1), with layer-wise speedups of 1.2-3x. The cuDNN paper preprint [1] details the computational approach of the library and its integration in Caffe. Caffe + cuDNN lets you define your models just as before, as plain text, while taking advantage of these speedups through drop-in integration. cuDNN or pure Caffe computation can be selected per layer to pick the fastest implementation for a given architecture. cuDNN integration is now included in the release candidate version of Caffe in its master branch. We are excited about these latest developments and are already using cuDNN to train models faster than before.

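Table 1: CaffeNet training and testing times with Caffe alone and with Caffe + cuDNN.

| | Caffe | Caffe + cuDNN | Speedup |
|---|---|---|---|
| Training | 1325 ms | 960 ms | 1.38x |
| Testing | 100 ms | 66.7 ms | 1.50x |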
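The Speedup column is the ratio of plain Caffe time to Caffe + cuDNN time. Recomputing it from the table, and restating it as the fraction of time saved, is a quick consistency check; the `speedup` helper is just for illustration.

```python
# Recompute the Speedup column of the table and the share of time saved.

def speedup(baseline_ms, accelerated_ms):
    """How many times faster the accelerated run is than the baseline."""
    return baseline_ms / accelerated_ms


table_1 = {"Training": (1325.0, 960.0), "Testing": (100.0, 66.7)}

for phase, (caffe_ms, cudnn_ms) in table_1.items():
    time_saved = 1 - cudnn_ms / caffe_ms
    print(f"{phase}: {speedup(caffe_ms, cudnn_ms):.2f}x, {time_saved:.0%} less time")
# Training: 1.38x, 28% less time
# Testing: 1.50x, 33% less time
```

A 1.5x speedup therefore removes about a third of the measured time, while 1.38x removes a bit over a quarter.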

Brew Your Own Deep Neural Networks with Caffe and cuDNN

Here are some pointers to help you learn more and get started with Caffe. Sign up for the DIY Deep learning with Caffe NVIDIA Webinar (Wednesday, December 3, 2014) for a hands-on tutorial on incorporating deep learning in your own work. To start exploring deep learning today, check out the Caffe project code with bundled examples and models on GitHub. Caffe is a popular framework with an active user and open-source development community of over 1,200 subscribers and over 600 forks on GitHub. It is used by universities, industry, and startups, and several participants in this year’s ImageNet Large Scale Visual Recognition Challenge built their submissions on the framework. Subscribe to the caffe-users mailing list for questions on installation, examples, and usage. Welcome to brewing deep networks with Caffe!

References

[1] S. Chetlur, C. Woolley, P. Vandermersch, J. Cohen, J. Tran, B. Catanzaro, and E. Shelhamer. “cuDNN: Efficient primitives for deep learning”. arXiv preprint arXiv:1410.0759, 2014.

[2] J. Donahue, Y. Jia, O. Vinyals, J. Hoffman, N. Zhang, E. Tzeng, and T. Darrell. “DeCAF: A deep convolutional activation feature for generic visual recognition”. ICML, 2014.

[3] K. Fukushima. “Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position”. Biological Cybernetics, 1980.

[4] R. Girshick, J. Donahue, T. Darrell, and J. Malik. “Rich feature hierarchies for accurate object detection and semantic segmentation”. CVPR, 2014.

[5] Y. Jia, E. Shelhamer, J. Donahue, S. Karayev, J. Long, R. Girshick, S. Guadarrama, and T. Darrell. “Caffe: Convolutional architecture for fast feature embedding”. arXiv preprint arXiv:1408.5093, 2014.

[6] A. Krizhevsky, I. Sutskever, and G. Hinton. “ImageNet classification with deep convolutional neural networks”. NIPS, 2012.

[7] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. “Gradient-based learning applied to document recognition”. Proceedings of the IEEE, 1998.

[8] M. Zeiler and R. Fergus. “Visualizing and understanding convolutional networks”. ECCV, 2014.