Deep learning has made enormous leaps forward thanks to GPU hardware. But much Big Data analysis is still done with classical methods on sparse data. Tasks like click prediction, personalization, recommendation, search ranking, etc. still account for most of the revenue from commercial data analysis. The role of GPUs in that realm has been less clear. In the BIDMach project (part of the BID Data Project at UC Berkeley), we have been exploring general machine learning with GPUs. The results are remarkable: not only do we see order-of-magnitude speedups for most problems, but our system also outperforms today’s cluster computing systems running up to several hundred nodes on typical workloads. As well as the incentives to adopt GPU technology for deep learning tasks, there is now a strong incentive for organizations to migrate to GPUs for the remainder of their analytics workload.

Roofline Design

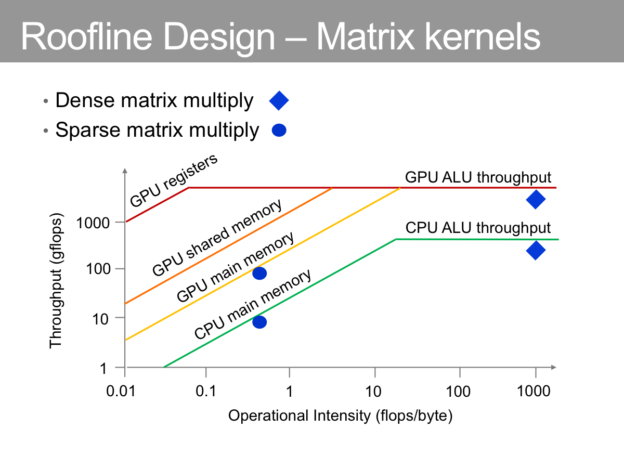

To build the fastest system, we borrowed the approach of roofline design from computer architecture. Roofline design involves designing to fundamental limits (e.g. ALU throughput, memory speed, network speed, I/O speed etc). A rooflined system is fast, and no other system can be much faster, since both have to respect the same hardware limits. A roofline diagram for matrix multiply is shown below:

The y-axis shows the potential throughput in arithmetic operations/second. The x-axis is “operational intensity” which is the number of operations applied to each data value (in units of operations per byte). The intensity is much lower for sparse operations – e.g. sparse matrix multiply typically involves only a multiply and add for each input datum, while dense matrix multiply uses each datum many times. The horizontal lines reflect the maximum ALU throughput for each type of processor (the graph is drawn for Intel i7 and NVIDIA GeForce GTX 680 processors). GPUs have much higher ALU throughput since the GPU chip area is almost entirely ALU. For dense matrix multiply, GPUs are10x faster thanks to this higher computing area.

The diagonal lines reflect memory bandwidth. Since bandwidth is flow in bytes/second it defines a linear relationship between the x-axis (flops/byte) and the y-axis (in flops/sec). A less well-known feature of GPUs is their higher main-memory bandwidth. This leads to a (potential) 10x gap in sparse matrix operations, which are the most important for many machine learning tasks. CuSparse achieves this ceiling for typical scientific data, but we found it was less well fit to very sparse data (text, web logs etc.). We wrote our own sparse kernels and were able to get them close to the roofline limits over a full range of sparseness. These kernels form the basis for the high throughput in most of BIDMach’s algorithms.

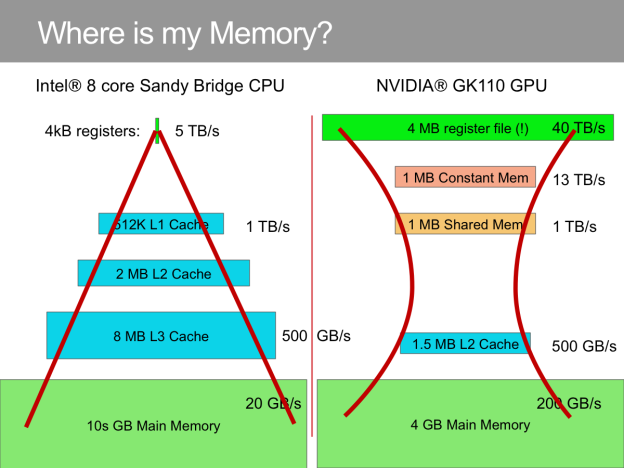

Another important contrast between CPUs and GPUs is memory capacity. A GPU has several forms of memory of varying speeds and capacities. A CPU also has other memory types but they are provisioned only as caches, not directly accessible to the programmer, and enticing the CPU to use them can lead to very complex code. The other memory types in a GPU are exposed to the programmer, and this makes them applicable to a wider variety of situations.

One of the most interesting features for us in NVIDIA GPUs is the massive amount of register storage (4MB in the example). Calculations that rely mostly on registers can run at the full speed of the machine, and this can lead to massive speedups. This is what makes dense matrix operations fast on GPUs, but under the right conditions it can be used for sparse operations as well. In 1 we designed a natural language parser based on the Berkeley Parser, which is one of the most accurate parsers today. The Berkeley parser uses a probabilistic grammar with 1.5 million rules and around 1000 symbols. Applying all the rules involves a tensor product (think of it as a matrix multiply in one dimension higher) whose density is only 0.1%. Nevertheless, we were able to achieve a net throughput of 900 GFLOP/s on NVIDIA GeForce GTX 680 GPUs for this operation. This required some exotic design: the grammar was decomposed into blocks (similar to blocking in dense matrix operations), the symbols were held in registers and constants were complied into the code. Thanks to some cleverness from the compiler, each grammar rule compiled into a single line of assembly code. The resulting program had 10^5^x the throughput compared to the java version of the original parser. It “maxed out” all of the available resources on the GPU: ALUs, registers, main memory, L1 cache, instruction cache and constant memory. We think other register-heavy solutions exist with similar potential speedups.

Benchmarks

In the table below we include some recent benchmarks of BIDMach with other state-of-the-art toolkits. We chose a collection of problems that we felt was a good cross-section of the ML workloads most typical in industry: text classification, click prediction, personalization, clustering, topic models and random forests. Spark is the v1.1 release of Apache Spark, and Graphlab is the public domain system developed at CMU. The commercial version of Graphlab doesn’t yet support distributed computing. Spark-XX is a Spark v1.1 cluster with XX cores, and similarly for GraphLab-XXX. Yahoo-1000 is a 1000-node cluster with an unspecified number of cores, designed expressly for LDA model-building. VW is Vowpal Wabbit running on a single 8-core machine. BIDMach was always run on a single machine with 8 CPU cores and an NVIDIA GeForce GTX 680 GPU or equivalent.

BIDMach’s benchmark page includes many other comparisons. We have tested many different systems, and the table below is just a sketch. We include only one single-machine system in the table: VW, since we found it was faster than any of the other single-machine toolkits.

Table : Performance Comparison between BIDMach and Other toolkits (See https://github.com/BIDData/BIDMach/wiki/Benchmarks for more).

| Systems A/B | Algorithm | Dataset (Size) | Dim | Time (s) | Cost (\$) | Energy(KJ) |

|---|---|---|---|---|---|---|

| Spark-72/BIDMach | Logistic Reg. | RCV1 (0.5GB) | 1/103 | 30/14 | 0.07/0.002 | 120/3 |

| VW/BIDMach | Logistic Reg. | RCV1 (0.5GB) | 103 | 130/14 | 0.02/0.002 | 30/3 |

| Spark-128/BIDMach | Logistic Reg. | Criteo (12 GB) | 1 | 400/81 | 1.00/0.01 | 2500/6 |

| Spark-384/BIDMach | K-Means | MNIST (24GB) | 4096 | 1100/735 | 9.00/0.12 | 22000/140 |

| GraphLab-576/BIDMach | Matrix Fact. | Netflix (4 GB) | 100 | 376/90 | 16/0.015 | 10,000/20 |

| Yahoo-1000/BIDMach | LDA (Gibbs) | News (100 GB) | 1024 | 220k/300k | 40k/100 | 4E10/6E7 |

| Spark-64/BIDMach | RandomForest | YearPred(16GB) | 1 | 280/320 | 0.48/0.05 | 480/60 |

RCV1 is a Reuters News classification task. Criteo is a large-scale click prediction task from Kaggle. MNIST is a handwritten digit dataset. Netflix is the Netflix Prize dataset. News was a synthetic dataset created by the authors of 2. YearPred is a dataset available from UCI.

Costs are based on the cost/time for Amazon EC2 instances (m3.xlarge for Spark, g2.xlarge for BIDMach and c3.8xlarge for Graphlab). The scripts for these benchmarks are all available as part of the BIDMach distribution.

We note that the running times for BIDMach are very competitive, and generally lower than those for mid-to-large sized clusters. Cost is generally two orders of magnitude lower for BIDMach, and the energy consumption ratio is somewhat higher than the cost ratio. For large clusters, these gaps widen to approximately three orders of magnitude.

The difference between GPU and CPU rooflines suggests we might see an order of magnitude difference in the table, but what we observe is much larger. Why is this? The simple answer is that most toolkits (VW is a notable exception) are well below their CPU rooflines. But this still seems surprising. We explore this more in the next section.

The Explosive Growth of O(1)

Most other systems we benchmarked are not close to their CPU roofline limits. It’s interesting to understand why that is. We argue that its due to the “explosive growth of O(1)”. To be more precise, a basic arithmetic operation like this:

C = A + B (1)

would normally be compiled into a single register-to-register instruction. On the other hand, an array access like this:

D = X[i] (2)

would typically involve a (random) main memory access. On current GPU hardware, the difference in execution time for those two instructions is more than 10^5^ (we’re assuming parallelism in the execution of 1, which is not possible for 2). By a slight abuse of notation, we use O(1) to mean this ratio between the slowest and fastest “unit” operations. Our use is deliberately ironic: O(1) is supposed to represent a small constant factor. Both operations are supposed to take constant time, and so their ratio is supposed to be constant as well. But in fact, operation 1 continues to get faster each year (parallelism permitting) thanks to new generations of GPU, while main memory access times are stagnant. So not only is O(1) as we defined it not “reasonably small” but its also growing exponentially with time. Hence the “explosive growth” in our section title.

Programmers are trained to treat those two operations as equivalent, but clearly they have drastically different running times. Much of the hardware in modern CPUs is devoted to trying to mitigate memory access overhead. But as data structures grow, CPU caches overload and the code sees the full main-memory access latency. Using Intel’s VTune analyzer (which allows flop counting for running programs), we have profiled many machine learning systems on large datasets, and typically see throughputs in the range 10-100 MFLOP/s, or 10-100 nsecs per flop. That’s the time frame of main-memory access, not ALU latency, which is 1000x lower on a CPU. The systems that do better than this (like BIDMach and Vowpal Wabbit) rely on matrix kernels applied to blocks of data. One must still take great care that no step in an algorithm becomes an unintentional bottleneck. BIDMach displays estimated GFLOP/s throughput whenever it trains a model. This quickly exposes bottlenecks and allows them to be tracked down.

BIDMach’s Architecture

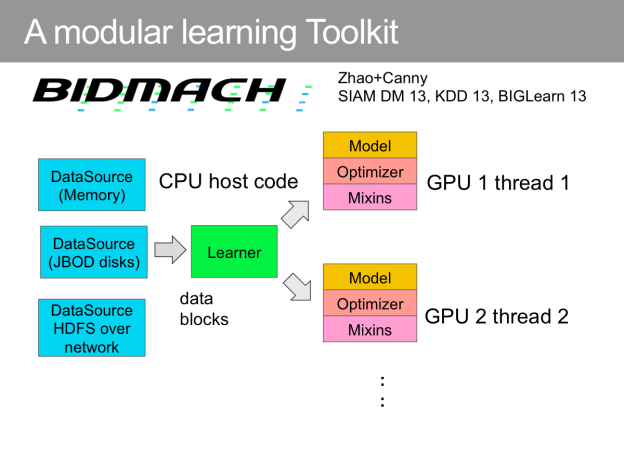

It was important for us to build not just an ML toolkit but a framework for developing and tailoring ML algorithms. We wanted to make it extremely easy to add a new model or modify an existing one. Figure 3 shows the organization of BIDMach. To be able to process very large datasets, we use a datasource abstraction. Data is pulled from the datasource in small blocks, and then processed by one or more worker tasks on either CPU or GPU. The model is normally stored GPU memory so that only data blocks move across the CPU/GPU boundary.

We separate the functions of model, optimizer, regularizers and “mixins”. Regularizers including L1 and L2 can be applied to any model. Optimization is usually done with ADAGRAD, a fast and robust minibatch optimizer. CG (Conjugate Gradient) is used for some models. Mixins are custom likelihood functions that can be combined with the main model and regularizers to further tailor a model. Examples of mixins are cluster quality measures, cluster size, and topic entropy.

Very little new code is required to implement a new learning algorithm in BIDMach. You just need to write a gradient function and an evaluation function to score the model on a data block. Our goal is to allow coding new algorithms in a day or two, and that has been true for several of the most recent additions.

BIDMach has implementations of the following algorithms.

- Logistic and Linear Regression

- Support Vector Classification

- Factorization Machines

- Sparse Factor Analysis (equivalent to Alternating Least Squares)

- Topic Models: Latent Dirichlet Allocation

- Non-negative Matrix Factorization

- Simple Deep Neural Networks

- K-Means

- Random Forests

- IPTW estimators (a causal influence estimator)

We believe BIDMach’s implementation of all of these algorithms is the fastest available, with two exceptions. Our DNN implementation is minimal, and has no performance advantage over e.g. Caffe or Torch which are fully functional DNN packages. (In fact, we are working on a wrapper for Caffe from BIDMach that should be available soon.) Second, BIDMach’s RandomForest implementation is not faster than the fastest CPU in-memory RF algorithms, e.g. Wise-IO.

On the other hand, BIDMach’s RF is a scalable RF implementation. It scans data directly from disk, and can build much larger trees than is possible with in-memory implementations. It is also faster than the fastest cluster implementation we know of on 8 worker nodes. Most BIDMach models can be run on dense or sparse data, on CPU or GPU, in single or double precision. The programmer writes generic code and doesn’t need to be aware of those differences.

Interacting with BIDMach

One interacts with BIDMach at an interactive command prompt, similar to Scipy or Matlab. BIDMach uses the Scala language, which has a number of advantages over alternatives like Python, Lua or Julia. The most important ones for us are:

- Integration with the Java Virtual Machine, and with the entire Java code ecosystem. BIDMach integrates smoothly with Cluster Frameworks like Hadoop and Yarn, which are also JVM-based. Any existing Java class can be used in BIDMach.

- Open syntax. Scala is often used to develop DSLs (Domain-Specific Languages) because of its flexible syntax – almost any character sequence can be used to define new methods. In BIDMach we use Unicode characters like

etc to represent the mathematical operators they normally define. This gives us the most syntactically complete “computational math” tool.

- High performance. Scala at this time is still at least 3x faster than any of the languages we tested (Python, Lua, Julia). For memory-bound operations (e.g. sparse matrix operations), Scala performance is indistinguishable from C code, and we use several sparse Scala matrix kernels for CPU computing.

- Platform portability. Our goal is to support BIDMach on any variant of the major OSes (Windows, Linux and MacOS), and even certain mobile platforms in the future. Java already runs on all these platforms, and provides a standard environment for BIDMach to run on. We still use native code extensively (e.g. CUDA C++ code) but by delegating system functions (I/O, threading etc.), we avoid tricky native code specialization.

From the Scala prompt, loading and training on a dataset looks like this:

scala\> val d = loadFMat(“somedata.txt”) scala\> val (nn, opts) = KMeans.learner(d, 128) scala\> nn.train

The first line loads a dataset, which we assume here is a tab-separated table of ASCII numeric data. The second line creates a Learner nn (a model, optimizer and datasource) with a custom set of options in the opts instance. It also sets the KMeans number of clusters to 128. Other options can be tailored in the opts object. This design makes it easier to do exploratory data analysis. You can run many training runs, modifying a few options each time.

BIDMach also supports notebook interaction using IScala and IPython. Starting BIDMach by typing “bidmach notebook” on a machine that has IPython installed will bring up a notebook within which you can type BIDMach commands interleaved with Markdown text. We have several IScala tutorials in the BIDMach distribution to help you go further. The main project page includes API docs and general documentation.

That’s it. If you have a Fermi-or-later generation GPU, download BIDMach and give it a try. If you use or design machine learning algorithms at small or large scale, you should find that it’s the fastest way to get things done. Enjoy, and tell us how to make it better!

Learn more at the GPU Technology Conference

If you’d like to learn more about BIDMach, come see my talk “Extending the Limits of Machine Learning with GPUs” at the 2015 GPU Technology Conference, on March 18 in Room 210A of the San Jose Convention Center.

With dozens of sessions on Machine Learning and Deep Learning, you’ll find that GTC is the place to learn about machine learning in 2015! Readers of Parallel Forall can use the discount code GM15PFAB to get 20% off any conference pass! Register Now!

References

[1] J. Canny, D. Hall, and D. Klein, “A multi-teraflop constituency parser using GPUs,” in EMNLP, 2013

[2] A. Ahmed, M. Aly, J. Gonzalez, S. Narayanamurthy, and A. J. Smola. “Scalable inference in latent variable models”. In Proceedings of the fifth ACM international conference on Web search and data mining, pages 123–132. ACM, 2012.