NVIDIA NIM for Developers

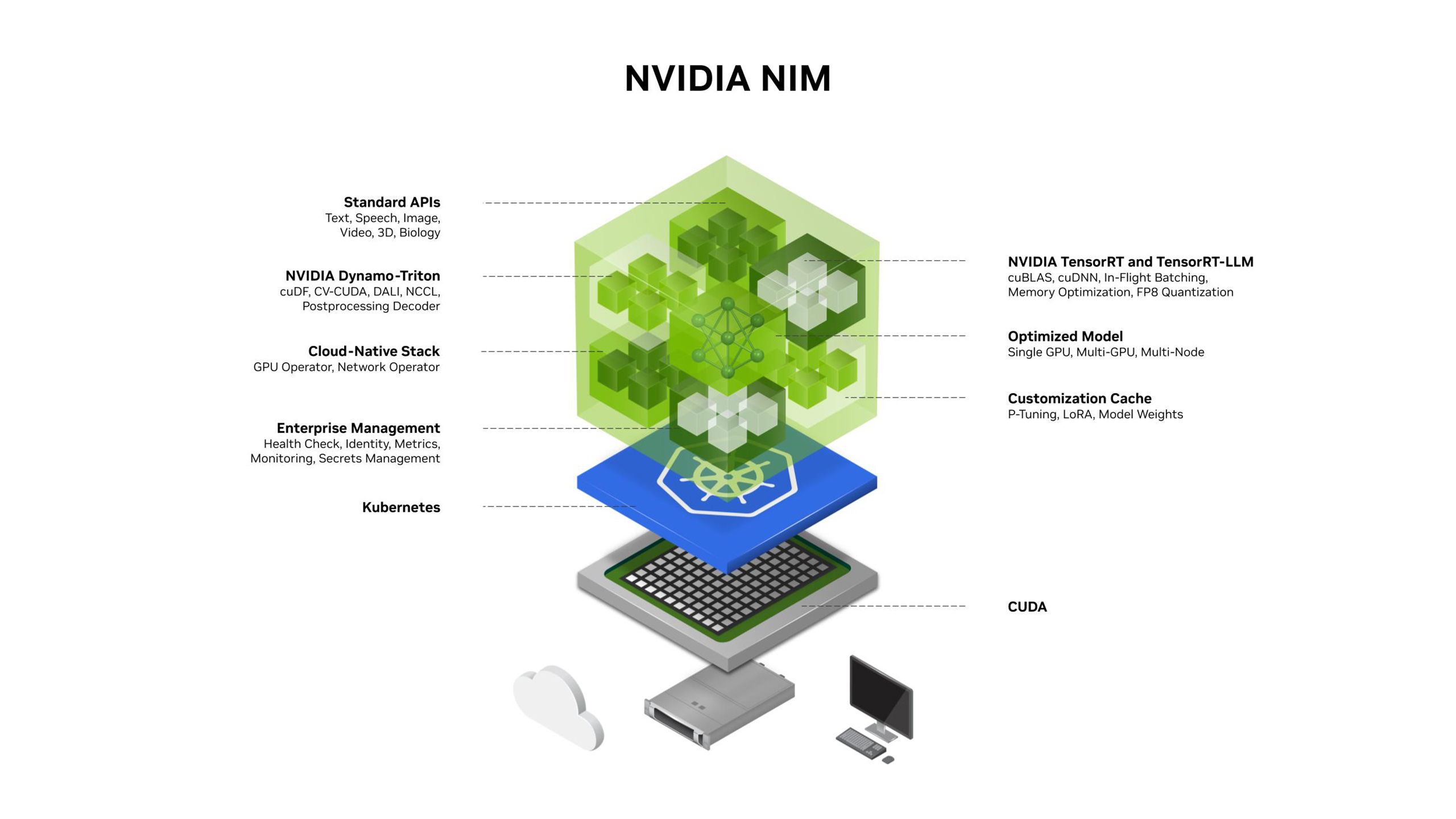

NVIDIA NIM™ provides containers to self-host GPU-accelerated inferencing microservices for pretrained and customized AI models across clouds, data centers, and RTX™ AI PCs and workstations. NIM microservices expose industry-standard APIs for simple integration into AI applications, development frameworks, and workflows, and they optimize response latency and throughput for each combination of foundation model and GPU.

How It Works

NVIDIA NIM simplifies the journey from experimentation to deploying enterprise AI applications by providing enthusiasts, developers, and AI builders with pre-optimized models and industry-standard APIs for building powerful AI agents, co-pilots, chatbots, and assistants. With inference engines built on leading frameworks from NVIDIA and the community, including TensorRT, TensorRT-LLM, vLLM, SGLang, and more, NIM is engineered to facilitate seamless AI inferencing for the latest AI foundation models on NVIDIA GPUs.
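To make "industry-standard APIs" concrete, here is a minimal sketch of what a request to a self-hosted NIM might look like. It assumes the container serves an OpenAI-compatible chat completions route on local port 8000. The base URL, the model id, and the helper names `build_chat_request` and `send` are illustrative assumptions, not values taken from this page; check the documentation for the NIM you deploy.

```python
import json
import urllib.request

# Assumption: a NIM LLM container is listening locally on port 8000 and
# serves an OpenAI-compatible chat completions route under /v1.
BASE_URL = "http://localhost:8000/v1"
MODEL = "meta/llama-3.1-8b-instruct"  # hypothetical model id, for illustration


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL, max_tokens=64):
    """Build (but do not send) a chat completions HTTP request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the first completion's text.

    Assumes the response follows the OpenAI chat completions schema.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize what an inference microservice does.")
    print(req.full_url)
    # Uncomment once an endpoint is actually reachable:
    # print(send(req))
```

Building the request separately from sending it keeps the payload easy to inspect and test without a running GPU service.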

Introductory Blog

Learn about NIM architecture, key features, and components.

Documentation

Access guides, reference information, and release notes for running NIM on your infrastructure.

Introductory Video

Learn how to deploy NIM on your infrastructure using a single command.

Deployment Guide

Get step-by-step instructions for self-hosting NIM on any NVIDIA accelerated infrastructure.

Build With NVIDIA NIM

Optimized Model Performance

Improve AI application performance and efficiency with accelerated engines from NVIDIA and the community, including TensorRT, TensorRT-LLM, vLLM, SGLang, and more—prebuilt and optimized for low-latency, high-throughput inferencing on specific NVIDIA GPU systems.

Run AI Models Anywhere

Maintain security and control of applications and data with prebuilt microservices that can be deployed on NVIDIA GPUs anywhere, from RTX AI PCs and workstations to data centers and the cloud. Download NIM inference microservices for self-hosted deployment, or take advantage of dedicated endpoints on Hugging Face to spin up instances in your preferred cloud.

Choose Among Thousands of AI Models and Customizations

Deploy a broad range of LLMs supported by vLLM, SGLang, or TensorRT-LLM, including community fine-tuned models and models fine-tuned on your data.

Maximize Operationalization and Scale

Get detailed observability metrics for dashboarding, and access Helm charts and guides for scaling NIM on Kubernetes.
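As a rough illustration of feeding metrics into a dashboard, the sketch below parses metrics text into a dictionary. It assumes the service publishes metrics in the Prometheus text exposition format. The metric names in `EXAMPLE` and the function name `parse_metrics` are hypothetical and only show the shape of the data.

```python
import re

# Matches sample lines such as: metric_name{label="x"} 12.5
# Simplification: label values containing "}" are not handled.
_SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)')


def parse_metrics(text):
    """Return {series: value} for each sample line in Prometheus text format."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE.match(line)
        if match is None:
            continue
        name, labels, value = match.groups()
        samples[name + (labels or "")] = float(value)
    return samples


# Hypothetical payload; real metric names depend on the deployed service.
EXAMPLE = """\
# HELP requests_running Number of requests currently being processed.
# TYPE requests_running gauge
requests_running{model="example"} 3
# TYPE request_latency_seconds_sum counter
request_latency_seconds_sum 12.5
"""

if __name__ == "__main__":
    for series, value in parse_metrics(EXAMPLE).items():
        print(series, value)
```

In practice a Prometheus server would scrape the endpoint directly; a hand-rolled parser like this is mainly useful for quick checks and smoke tests.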

NVIDIA NIM Examples and Blueprints

Build Accelerated Generative AI Applications Including RAG, Agentic AI, and More

Get started building AI applications powered by NIM using NVIDIA-hosted NIM API endpoints and generative AI examples from GitHub. See how easy it is to deploy retrieval-augmented generation (RAG) pipelines, agentic AI workflows, and more.
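The core idea of a RAG pipeline, retrieving relevant passages and placing them in the prompt, can be sketched in a few lines. This toy version ranks passages by keyword overlap, which stands in for the embedding-based retrieval a real pipeline would use. The function names and the `DOCS` list are illustrative assumptions, and the output is a chat-style message list that could be sent to a chat completions endpoint.

```python
def score(query, passage):
    """Count the lowercase whitespace-separated terms shared by both texts."""
    query_terms = set(query.lower().split())
    passage_terms = set(passage.lower().split())
    return len(query_terms & passage_terms)


def retrieve(query, passages, k=2):
    """Return the k passages with the highest keyword overlap."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    return ranked[:k]


def build_messages(query, passages, k=2):
    """Assemble chat messages that ground the answer in retrieved context."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return [
        {
            "role": "system",
            "content": "Answer using only the context below.\n" + context,
        },
        {"role": "user", "content": query},
    ]


# Illustrative corpus built from statements on this page.
DOCS = [
    "Helm charts are available for scaling NIM on Kubernetes.",
    "Blueprints are predefined, customizable AI workflows.",
    "NIM microservices expose industry-standard APIs.",
]

if __name__ == "__main__":
    for message in build_messages("How do I scale NIM on Kubernetes?", DOCS, k=1):
        print(message["role"], "->", message["content"])
```

The generative AI examples on GitHub and the blueprints show complete pipelines; this sketch only shows where retrieval fits relative to generation.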

Jump-Start Development With Blueprints

NVIDIA AI Blueprints are predefined, customizable AI workflows for creating and deploying AI agents and other generative AI applications. Build and operationalize custom AI applications—creating data-driven AI flywheels—using blueprints along with NVIDIA AI and Omniverse™ libraries, SDKs, and microservices. Explore blueprints co-developed with leading agentic AI platform providers including CrewAI, LangChain, and more.

Explore NVIDIA Blueprints

Simplify Development With NVIDIA AgentIQ Toolkit

Weave NIM microservices into agentic AI applications with the NVIDIA AgentIQ library, a developer toolkit for building AI agents and integrating them into custom workflows.

Get Started With NVIDIA NIM

Explore different options for experimenting, building, and deploying optimized AI applications using the latest models with NVIDIA NIM.

Try

Get free access to NIM API endpoints for unlimited prototyping, powered by DGX Cloud. Membership in the NVIDIA Developer Program gives you access to NVIDIA-hosted NIM APIs and containers for development and testing (see the FAQ).

Visit the NVIDIA API Catalog

Build

Get a head start on development with sample applications built with NIM and partner microservices. NVIDIA Blueprints can be deployed in one click with NVIDIA Launchables, downloaded for local deployment on PCs and workstations, or used for development in your data center or private cloud.

Explore NVIDIA Blueprints

Deploy

Deploy on your own infrastructure for development and testing. When ready for production, get the assurance of security, API stability, and support that comes with NVIDIA AI Enterprise, or access dedicated enterprise-grade NIM endpoints through NVIDIA partners.

Run NVIDIA NIM Anywhere

NVIDIA NIM Learning Library

More Resources

Ethical AI

NVIDIA’s platforms and application frameworks enable developers to build a wide array of AI applications. Consider potential algorithmic bias when choosing or creating the models being deployed. Work with the model’s developer to ensure that it meets the requirements for the relevant industry and use case; that the necessary instruction and documentation are provided to understand error rates, confidence intervals, and results; and that the model is being used under the conditions and in the manner intended.

Learn about the latest NVIDIA NIM models, applications, and tools.