NVIDIA Cloud Functions (NVCF)

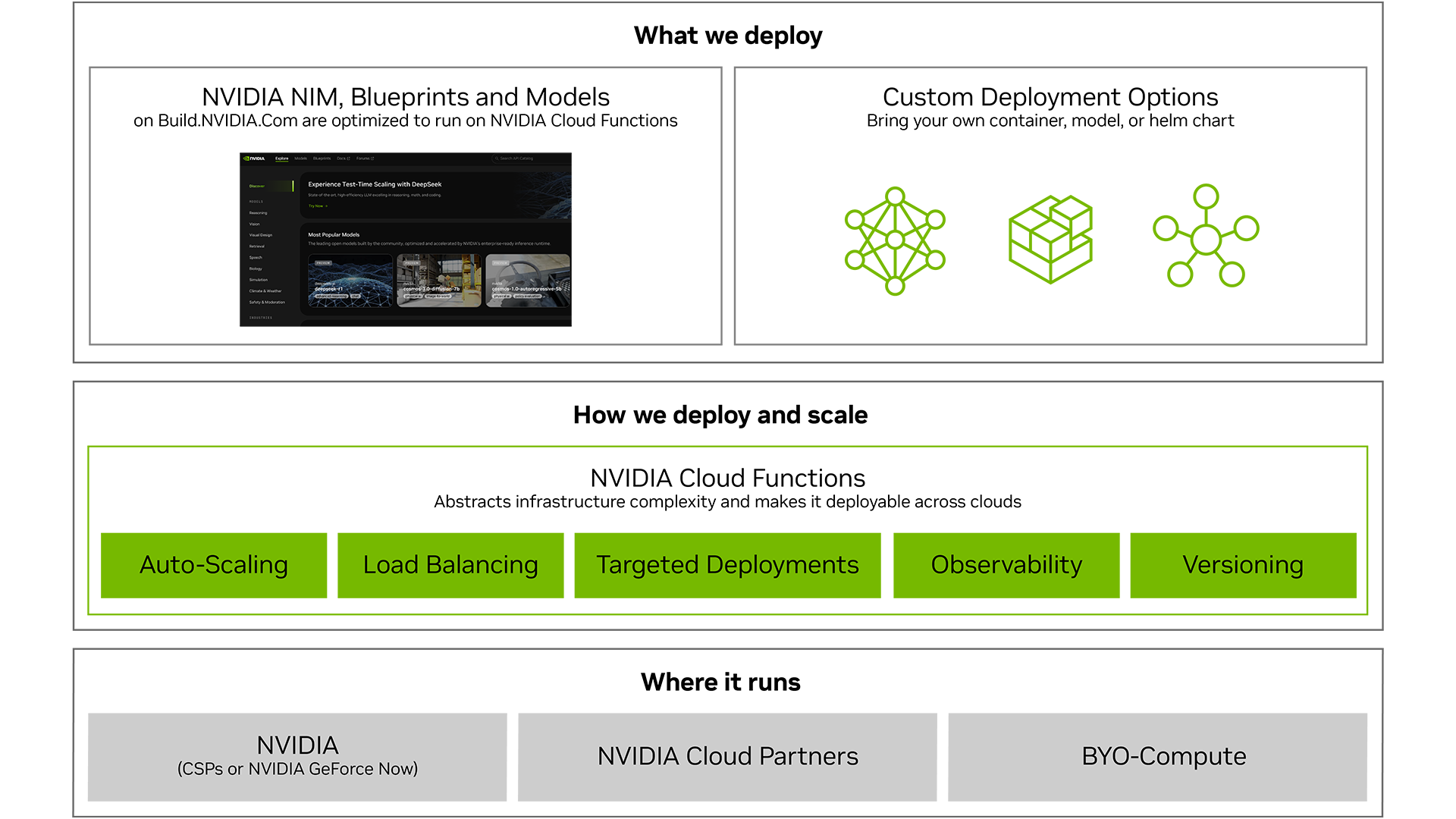

NVIDIA Cloud Functions (NVCF) is a high-performance, serverless AI inference solution that accelerates AI innovation with auto-scaling, cost-efficient GPU utilization, and multi-cloud flexibility.

NVIDIA Cloud Functions Demo Video

Simplify and Scale Inference

See how NVCF simplifies AI workload deployment across multiple regions with seamless auto-scaling, load balancing, and event-driven execution. You can bring your own models, containers, or Helm charts and instantly integrate with NVIDIA GPUs in DGX™ Cloud or a cloud partner's infrastructure.

How NVIDIA Cloud Functions Works

AI builders can package and deploy inference pipelines or data preprocessing workflows in containers optimized for NVIDIA GPUs, without worrying about the underlying infrastructure. With flexible deployment options via API, CLI, or UI and built-in features like auto-scaling, monitoring, and secret management, NVCF lets you focus on developing and fine-tuning your AI models while it handles resource management.

NVCF can provision and deploy applications and containers on DGX Cloud or through NVIDIA cloud partners (NCPs).

Introductory Blog

Learn how NVCF deploys, manages, and serves GPU-powered containerized applications across multiple regions and in the data center.

Quick-Start Guide

Learn how to deploy an NVIDIA NIM™ microservice in five minutes using NIM APIs across popular application frameworks on NVIDIA Cloud Functions.

Self-Paced Course

Learn how to create an NVIDIA Omniverse™ extension that uses NVCF-powered AI NIM microservice models to generate realistic 3D materials from natural language descriptions.

NVIDIA Cloud Functions Key Features

Auto-Scaling to Zero

With NVCF, you can scale down to zero instances during periods of inactivity to optimize resource utilization and reduce costs. There’s no extra cost for cold-start time, and the system is optimized to minimize it.
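
The effect of a zero-instance minimum can be illustrated with a small sketch. This is not NVCF's actual scaling policy, which this page does not describe. The function `desired_instances` and its parameters (`in_flight_requests`, `concurrency_per_instance`, `min_instances`, `max_instances`) are hypothetical names chosen only to show how a lower bound of zero lets an idle function release all of its GPUs.

```python
import math


def desired_instances(in_flight_requests: int,
                      concurrency_per_instance: int,
                      min_instances: int = 0,
                      max_instances: int = 4) -> int:
    """Return how many instances a toy autoscaler would keep running.

    Hypothetical illustration only; not NVCF's real scaling policy.
    """
    if in_flight_requests < 0:
        raise ValueError("in_flight_requests must be non-negative")
    if concurrency_per_instance <= 0:
        raise ValueError("concurrency_per_instance must be positive")
    if not 0 <= min_instances <= max_instances:
        raise ValueError("need 0 <= min_instances <= max_instances")

    # Instances needed to serve the current load, rounded up.
    needed = math.ceil(in_flight_requests / concurrency_per_instance)

    # Clamp to the configured bounds; min_instances=0 permits scale to zero.
    return max(min_instances, min(needed, max_instances))


if __name__ == "__main__":
    for load in (0, 1, 5, 100):
        print(load, desired_instances(load, concurrency_per_instance=2))
```

In this sketch, setting `min_instances=1` instead would keep one instance warm at all times, trading idle cost for the absence of cold starts.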

BYO Observability

NVCF offers robust observability features. It allows you to integrate your preferred monitoring tools, such as Splunk, for comprehensive insights into your AI workloads.

Broad Workload Support

NVCF offers flexible deployment options for NIM microservices, while allowing you to bring your own containers, models, and Helm charts. By hosting these assets within the NGC™ Private Registry, you can seamlessly create and manage functions tailored to your specific AI workloads.

Targeted Deployment

NVCF supports targeted deployment, providing you with the flexibility to choose instance types with specific characteristics, such as number of GPUs, number of CPU cores, CPU architecture, storage, and geographical location.
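
As a rough illustration of what choosing an instance type by its characteristics might look like, the sketch below filters a made-up catalog on the attributes listed above. Everything in it is an assumption for illustration: the `InstanceType` class, the `select_instances` function, and the catalog entries are hypothetical and do not reflect the NVCF API or real instance offerings.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InstanceType:
    """Hypothetical description of one deployable instance type."""
    name: str
    gpus: int
    cpu_cores: int
    cpu_arch: str      # e.g. "x86_64" or "arm64"
    storage_gb: int
    region: str


def select_instances(catalog: List[InstanceType],
                     min_gpus: int = 1,
                     min_cpu_cores: int = 0,
                     cpu_arch: Optional[str] = None,
                     min_storage_gb: int = 0,
                     region: Optional[str] = None) -> List[InstanceType]:
    """Return the catalog entries that satisfy every stated requirement."""
    return [
        inst for inst in catalog
        if inst.gpus >= min_gpus
        and inst.cpu_cores >= min_cpu_cores
        and (cpu_arch is None or inst.cpu_arch == cpu_arch)
        and inst.storage_gb >= min_storage_gb
        and (region is None or inst.region == region)
    ]


# Made-up catalog; names and regions are placeholders, not real offerings.
CATALOG = [
    InstanceType("example-1gpu", 1, 16, "x86_64", 500, "region-a"),
    InstanceType("example-8gpu", 8, 128, "x86_64", 4000, "region-a"),
    InstanceType("example-arm-1gpu", 1, 72, "arm64", 1000, "region-b"),
]

if __name__ == "__main__":
    for inst in select_instances(CATALOG, min_gpus=1, cpu_arch="arm64"):
        print(inst.name)
```

Leaving a parameter at its default means that characteristic is not constrained, so a caller only states the requirements that matter for the workload.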

Get Started With NVIDIA Cloud Functions

Try

Experience leading models on build.nvidia.com, accelerated by NVIDIA DGX Cloud with NVCF.

NVIDIA Cloud Functions Learning Library

AI Inference

What is AI Inference?

Learn how AI inference generates outputs from a model based on inputs like images, text, or video to enable applications like weather forecasts or LLM conversations.

Build Agentic AI With NVIDIA NIM

Learn more about NVIDIA NIM.

Explore technical documentation to start prototyping and building your enterprise AI applications with NVIDIA APIs, or scale on your own infrastructure with NVIDIA NIM.

NVIDIA Cloud Functions (NVCF)

Learn more about NVCF.

Check out NVIDIA Cloud Functions (NVCF), a serverless API that deploys and manages AI workloads on GPUs with security, scale, and reliability.

NVIDIA-Optimized Code for Popular LLMs

Learn more about NVIDIA AI Foundation models and endpoints.

Get tips in this tech blog for generating code, answering queries, and translating text on Llama, Kosmos-2, and SeamlessM4T with NVIDIA AI Foundation models.

NVIDIA Core SDKs With Direct Access to NVIDIA GPUs

Explore the NVIDIA API catalog.

Visit the NVIDIA API catalog to experience models optimized to deliver the best performance on NVIDIA-accelerated infrastructure directly from your browser or connect to NVIDIA-hosted endpoints.

Gauge AI Workload Performance

Learn about NVIDIA DGX Cloud benchmarking recipes.

Evaluate performance of deep learning models across any GPU-based infrastructure—on premises or in the cloud—with featured recipes in the DGX Cloud Benchmarking Collection.

Ethical AI

NVIDIA believes trustworthy AI is a shared responsibility, and we have established policies and practices to support the development of AI across a wide array of applications. When downloading or using this model in accordance with our terms of service, developers should work with their supporting model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.

For more detailed information on ethical considerations for this model, please see the Model Card++ Explainability, Bias, Safety & Security, and Privacy Subcards. Please report security vulnerabilities or NVIDIA AI Concerns here.