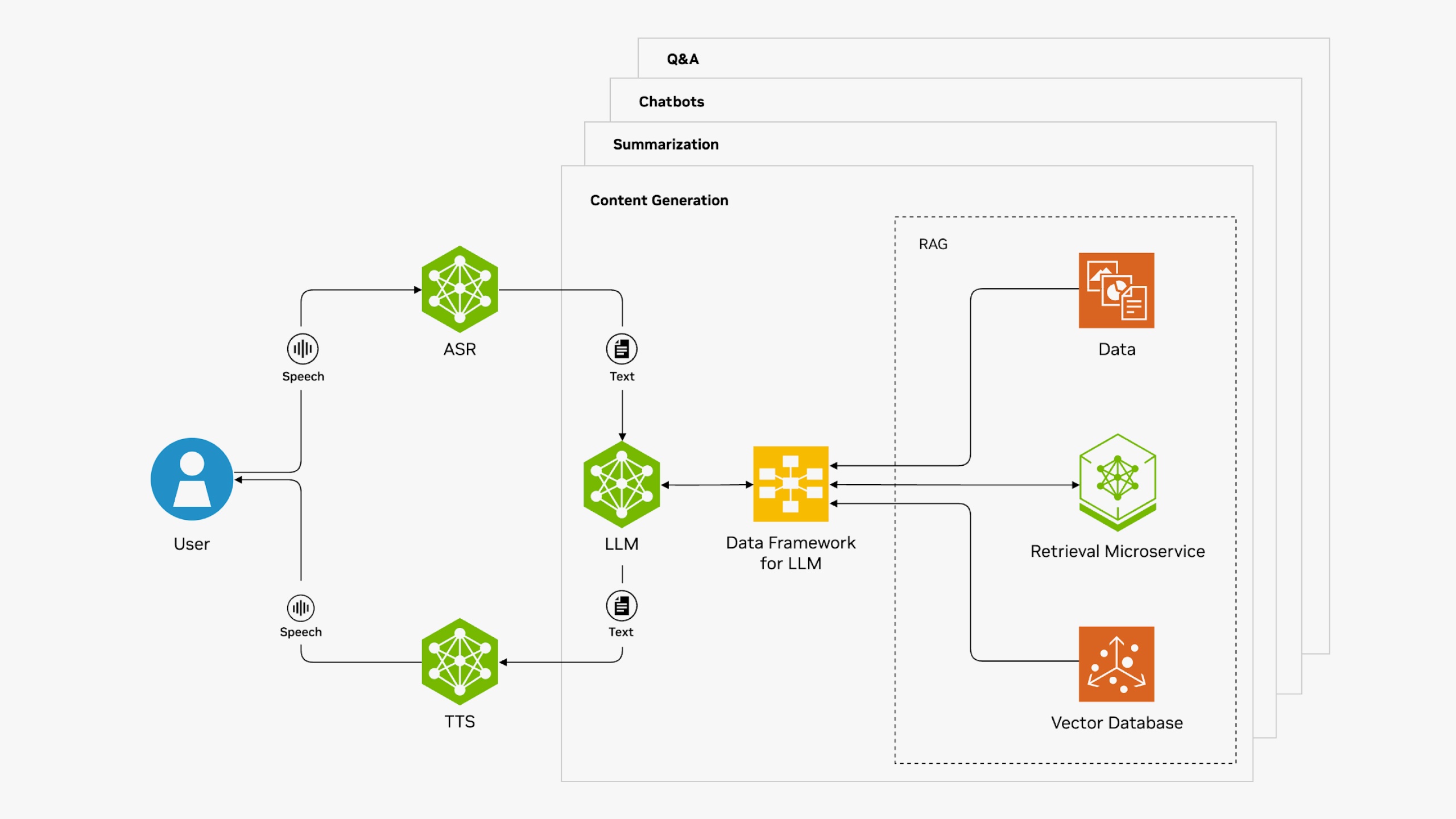

How Conversational AI Works

When you ask an application a question aloud, the automatic speech recognition (ASR) stage converts the speech audio signal into text for processing by subsequent components. The question is then interpreted, and a large language model enhanced with retrieval-augmented generation generates a response. Finally, during the text-to-speech (TTS) stage, also known as speech synthesis, the response text is converted into speech audio for the user.
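The flow described above can be sketched as a chain of four stages. This is only an illustrative outline: `ConversationalPipeline`, `build_prompt`, and the `stub_*` functions are hypothetical names invented for this example, and the stubs stand in for real ASR, retrieval, LLM, and TTS services. None of them are Riva, NeMo, or NIM APIs.

```python
from dataclasses import dataclass
from typing import Callable, List


def build_prompt(question: str, passages: List[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


@dataclass
class ConversationalPipeline:
    """Hypothetical wiring of the stages: ASR -> retrieval -> LLM -> TTS."""
    asr: Callable[[bytes], str]            # speech audio -> text
    retrieve: Callable[[str], List[str]]   # question -> supporting passages
    generate: Callable[[str], str]         # prompt -> response text
    tts: Callable[[str], bytes]            # response text -> speech audio

    def respond(self, audio_in: bytes) -> bytes:
        question = self.asr(audio_in)                 # 1. speech recognition
        passages = self.retrieve(question)            # 2. retrieval for RAG
        prompt = build_prompt(question, passages)     # 3. augment the prompt
        answer = self.generate(prompt)                # 4. generate a response
        return self.tts(answer)                       # 5. speech synthesis


# Stub stages (assumptions for illustration only; real stages would call models).
def stub_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretends the "audio" bytes are already text


def stub_retrieve(question: str) -> List[str]:
    return ["Placeholder passage relevant to the question."]


def stub_generate(prompt: str) -> str:
    question = prompt.split("Question: ")[1].split("\n")[0]
    return f"Stub answer to: {question}"


def stub_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretends encoded text is synthesized audio


pipeline = ConversationalPipeline(stub_asr, stub_retrieve, stub_generate, stub_tts)
audio_out = pipeline.respond(b"what is conversational AI?")
```

Keeping each stage behind a plain callable means any one of them could, in principle, be swapped for a real service without changing the surrounding flow.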

Explore Conversational AI Tools and Technologies

NVIDIA Riva

NVIDIA Riva includes automatic speech recognition (ASR), text-to-speech (TTS), and neural machine translation (NMT).

Get Started With Riva

NVIDIA NeMo

NVIDIA NeMo includes tools for developing and deploying custom generative AI, including large language models (LLMs) and multimodal, vision, speech, and translation AI.

Get Started With NeMo

NVIDIA NIM

NVIDIA NIM™ is a set of easy-to-use microservices designed to accelerate the deployment of generative AI models across any cloud or data center.

Get Started With NIM