NVIDIA NeMo Evaluator for Developers

NVIDIA NeMo™ Evaluator is a scalable solution for evaluating generative AI applications—including large language models (LLMs), retrieval-augmented generation (RAG) pipelines, and AI agents—available as both an open-source SDK for experimentation and a cloud-native microservice for automated, enterprise-grade workflows. NeMo Evaluator SDK supports over 100 built-in academic benchmarks and an easy-to-follow process for adding customizable metrics via open-source contribution. In addition to academic benchmarks, NeMo Evaluator microservice provides LLM-as-a-judge scoring, RAG, and agent metrics that make it easy to assess and optimize models across environments. NeMo Evaluator is a part of the NVIDIA NeMo™ software suite for building, monitoring, and optimizing AI agents across their lifecycle at enterprise scale.

NVIDIA NeMo Evaluator Key Features

NeMo Evaluator is built on one shared core engine that powers both the open-source SDK and the enterprise-ready microservice.

SDK

An open-source SDK for running academic benchmarks with reproducibility and scale. Built on the nemo-evaluator core and launcher, it provides code-native access for experimentation on LLMs, embeddings, and reranking models.

Reproducible by default: Captures configs, seeds, and software provenance for auditable, repeatable results.

Comprehensive benchmarks: Over 100 academic benchmarks across leading harnesses and modalities, continuously updated.

Python-native and ready to run: Configs and containers deliver results directly in notebooks or scripts.

Flexible and scalable: Run locally with Docker or scale out to Slurm clusters.
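To make the "reproducible by default" idea concrete, here is a minimal sketch of recording a run manifest in plain Python. It is not the NeMo Evaluator SDK's own format or API: the function name `build_run_manifest`, the manifest fields, and the example config keys (`benchmark`, `limit`) are all assumptions made for illustration.

```python
import hashlib
import json
import platform


def build_run_manifest(config: dict, seed: int) -> dict:
    """Collect the details needed to repeat an evaluation run.

    Hypothetical helper for illustration only; the field names are assumptions.
    """
    # Serialize with sorted keys so the same settings always hash the same way,
    # regardless of the order in which they were written.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return {
        "config": config,
        "config_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "seed": seed,
        # Basic software provenance: interpreter version and host platform.
        "python_version": platform.python_version(),
        "platform": platform.platform(),
    }


if __name__ == "__main__":
    # "benchmark" and "limit" are made-up config keys, not SDK parameters.
    manifest = build_run_manifest({"benchmark": "demo", "limit": 10}, seed=1234)
    print(json.dumps(manifest, indent=2))
```

Storing a manifest like this next to the results lets a later run confirm that it used the same configuration and seed before scores are compared.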

Microservice

An enterprise-grade, cloud-native REST API that automates scalable evaluation pipelines. Teams can submit jobs, configure parameters, and monitor results centrally—ideal for CI/CD integration and production-ready generative AI operations workflows.

Automates scalable evaluation pipelines with a simple REST API.

Abstracts complexity: Submit “jobs,” configure parameters, and monitor results centrally.

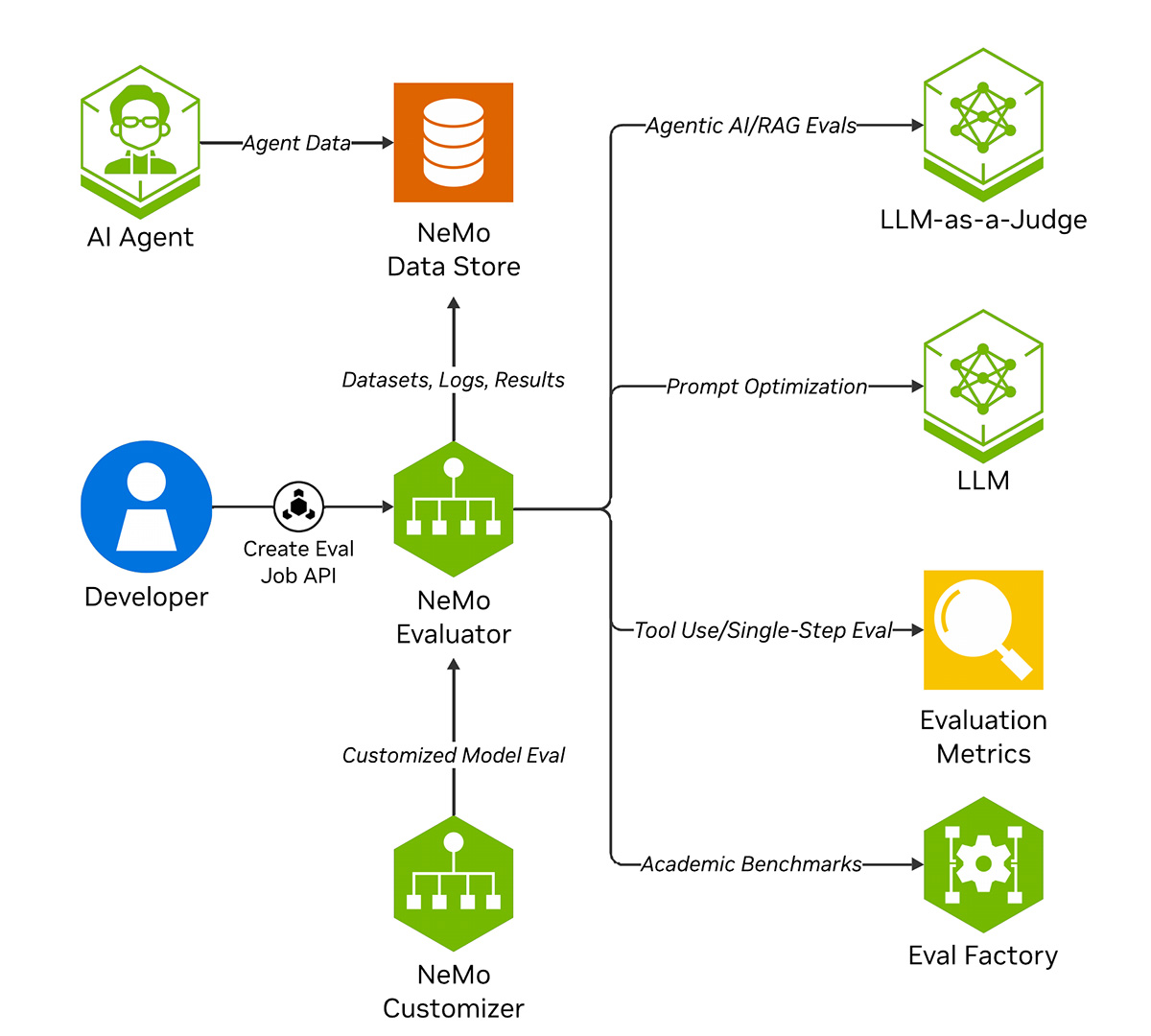

How NVIDIA NeMo Evaluator Microservice Works

The NeMo Evaluator microservice lets users run evaluation jobs for agentic AI applications through a REST API. Supported evaluation flows include academic benchmarking, agentic and RAG metrics, and LLM-as-a-judge scoring. Users can also tune the judge model with the prompt optimization feature.

Introductory Resources

Introductory Blog

Read how the NeMo Evaluator microservice simplifies end-to-end evaluation of generative AI systems.

Tutorial Notebook

Explore tutorials designed to help you evaluate generative AI models with the NeMo Evaluator microservice.

Introductory Webinar

Understand the architecture of data flywheels and their role in enhancing agentic AI systems, and learn best practices for integrating NeMo components to optimize AI agent performance.

How-To Blog

Dive deeper into how NVIDIA NeMo microservices help build data flywheels with a case study and a quick overview of the steps in an end-to-end pipeline.

Ways to Get Started With NVIDIA NeMo Evaluator

Use the right tools and technologies to assess generative AI models and pipelines across academic and custom LLM benchmarks on any platform.

Download

Get free access to the NeMo Evaluator microservice for research, development, and testing.

Access

Get free access to the open-source NeMo Evaluator SDK for research, development, and testing.

Access SDK

Try

Jump-start building your AI solutions with NVIDIA AI Blueprints, customizable reference applications, available on the NVIDIA API catalog.

See NVIDIA NeMo Evaluator Microservice in Action

Watch these demos to see how the NeMo Evaluator microservice simplifies the evaluation and optimization of AI agents, RAG, and LLMs.

Evaluate LLMs With NeMo Evaluator and Docker Compose

This step-by-step guide walks through deploying the NeMo Evaluator microservice using Docker Compose and running custom evaluations.

Scale AI Agent Evaluation With NeMo Evaluator LLM-as-a-Judge

In this step-by-step tutorial, you’ll discover how to scale your AI agent evaluation workflows with NeMo Evaluator LLM-as-a-judge.

Set Up a Data Flywheel to Optimize AI Models and Agents

Get an overview of the data flywheel blueprint, learn how to run model evaluation and cost optimization, explore the evaluation report, and more.

Customizing AI Agents for Tool Calling With NeMo Microservices

Learn how to customize AI agents for precise function calling in this end-to-end example using NeMo microservices.

Starter Kits

LLM-as-a-Judge

Automate subjective evaluation of open-ended responses, RAG systems, or AI agents with structured, consistent scoring.

Similarity Metrics

Measure how well LLMs or retrieval models handle domain-specific queries using F1, ROUGE, or other metrics.
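As a rough illustration of the kind of similarity metric mentioned above, the sketch below computes a token-overlap F1 score between a model answer and a reference answer. It is a generic, simplified version of the metric (whitespace tokenization, lowercasing only) and is not the NeMo Evaluator implementation. The function name `token_f1` is an assumption.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a prediction and a reference answer.

    Simplified sketch: tokens are lowercased, whitespace-separated words.
    """
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()

    # If either side is empty, the score is 1.0 only when both are empty.
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)

    # Multiset intersection counts each shared token at most as often
    # as it appears on both sides.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0

    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(token_f1("the cat", "the cat sat"))
```

In the example call, precision is 1.0 and recall is 2/3, so the score is approximately 0.8. Production metrics usually add normalization steps such as punctuation stripping, which this sketch omits.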

Agent Evaluation

Evaluate whether agents call the right functions with the correct parameters; integrates with CI/CD pipelines.
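The check described above can be sketched in a few lines of Python. This is an illustrative, order-sensitive comparison of predicted and expected tool calls, not the metric the NeMo Evaluator microservice actually computes. The `ToolCall` type, the `tool_call_accuracy` function, and the example tool names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class ToolCall:
    """A single function call made by an agent (hypothetical structure)."""
    name: str
    arguments: dict[str, Any] = field(default_factory=dict)


def call_matches(predicted: ToolCall, expected: ToolCall) -> bool:
    # A call is correct only if both the function name and all arguments match.
    return predicted.name == expected.name and predicted.arguments == expected.arguments


def tool_call_accuracy(predicted: list[ToolCall], expected: list[ToolCall]) -> float:
    """Fraction of positions where the predicted call equals the expected call."""
    if not expected:
        return 1.0 if not predicted else 0.0
    matched = sum(call_matches(p, e) for p, e in zip(predicted, expected))
    # Dividing by the longer list penalizes both missing and extra calls.
    return matched / max(len(predicted), len(expected))


if __name__ == "__main__":
    expected = [
        ToolCall("get_weather", {"city": "Paris"}),
        ToolCall("book_flight", {"to": "Paris"}),
    ]
    predicted = [
        ToolCall("get_weather", {"city": "Paris"}),
        ToolCall("book_flight", {"to": "Rome"}),  # wrong parameter value
    ]
    print(tool_call_accuracy(predicted, expected))
```

A CI job could run a check like this over a fixed test set and fail the build when the score drops below a chosen threshold.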

LLM Benchmarks

Run standardized evaluations of model performance across reasoning, math, coding, and instruction following, with support for regression testing.

Ethical AI

NVIDIA believes Trustworthy AI is a shared responsibility, and we have established policies and practices to enable development for a wide array of AI applications. When downloading or using this model in accordance with our terms of service, developers should work with their internal model team to ensure it meets requirements for the relevant industry and use case and addresses unforeseen product misuse. Please report security vulnerabilities or NVIDIA AI concerns to NVIDIA.

Get started with NeMo Evaluator today.