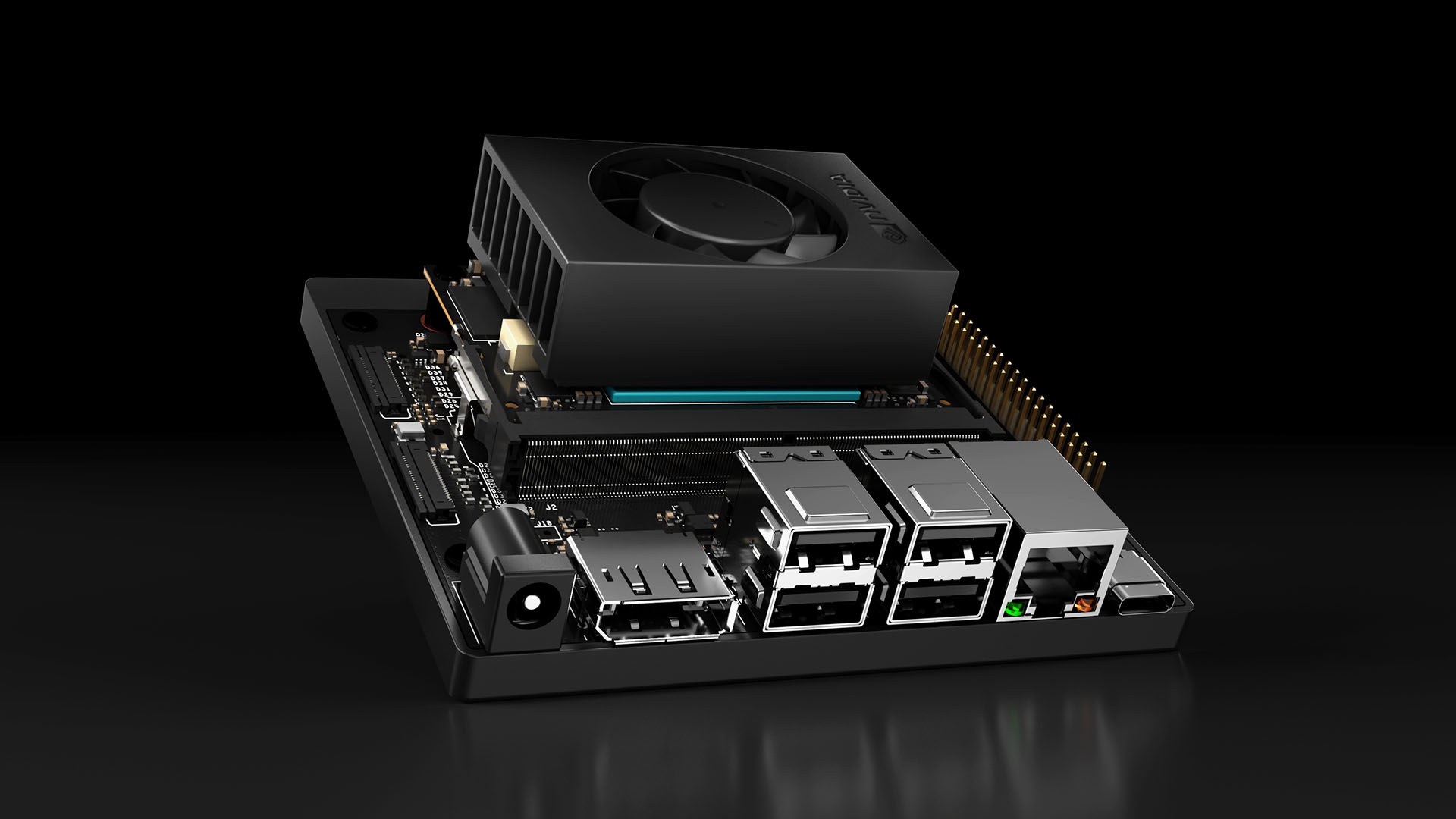

NVIDIA Jetson for Robotics and Edge AI

NVIDIA Jetson™ is a platform for developing edge AI and robotics solutions across industries. It combines compact, energy-efficient modules and developer kits with a robust AI software stack for deploying next-generation physical AI solutions.

Highlights

New NVIDIA® Jetson Thor™ Now Available to Order

Discover the NVIDIA Jetson Orin Nano™ Super Developer Kit

NVIDIA Isaac™ for Robotics Development

NVIDIA Metropolis for Vision AI Agents and Applications

Bring Generative AI to the World With Jetson

Explore Jetson Projects from Our Community

Connect With Other Jetson Developers

Find the Right Jetson Partner for Your Needs
