A-Eye for the Blind

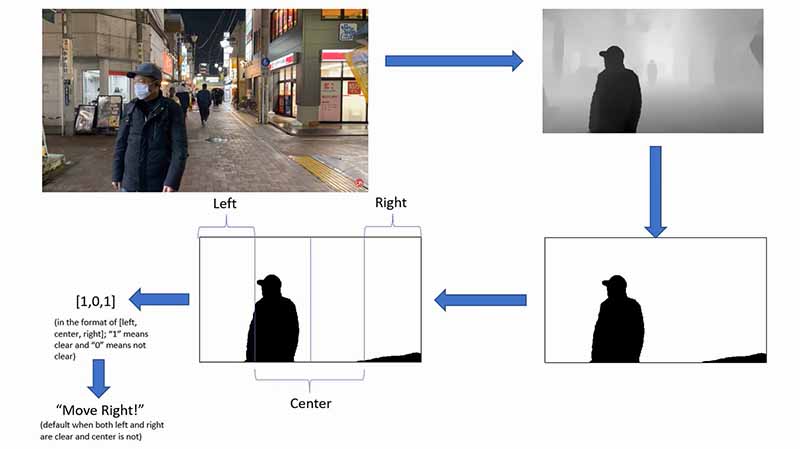

Help visually impaired users stay safe when travelling. The hardware captures pictures of the user's surroundings in real time and passes them through a machine learning model that converts each 2D image into a depth image. The software then analyzes the depths of objects in each image and gives the user audio feedback when their left, center, or right is blocked. Images and timestamps are uploaded to a secured Firebase database, so friends and family can view live images on the project's website and check up on the user to see whether they're okay. The setup uses a Jetson Nano 2GB, a fan, a Raspberry Pi Camera V2, a Wi-Fi dongle, a power bank, and wired headphones.
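The left/center/right check can be pictured as splitting each depth image into three vertical strips and flagging a strip when enough of its pixels are close to the user. The sketch below is one minimal way to do that, not the project's actual code. It assumes the depth map is a grid of distances in meters where smaller means closer. Many monocular depth models instead output relative or inverse depth, in which case the comparison would need to change. The names `blocked_regions` and `feedback_message` and both thresholds are hypothetical.

```python
from typing import Dict, Sequence

# Hypothetical tuning values, not taken from the original project.
NEAR_THRESHOLD_M = 1.0   # assumed: pixels closer than 1 m count as obstacles
BLOCKED_FRACTION = 0.3   # assumed: a strip is "blocked" if >= 30% of its pixels are near


def blocked_regions(
    depth: Sequence[Sequence[float]],
    near_threshold: float = NEAR_THRESHOLD_M,
    blocked_fraction: float = BLOCKED_FRACTION,
) -> Dict[str, bool]:
    """Split a depth map (rows of per-pixel distances, assumed in meters)
    into left/center/right thirds and report which thirds are blocked."""
    width = len(depth[0])
    # Column ranges [start, end) for the three vertical strips.
    bounds = {
        "left": (0, width // 3),
        "center": (width // 3, 2 * width // 3),
        "right": (2 * width // 3, width),
    }
    result: Dict[str, bool] = {}
    for name, (start, end) in bounds.items():
        total = 0
        near = 0
        for row in depth:
            for value in row[start:end]:
                total += 1
                if value < near_threshold:  # smaller value = closer (assumed)
                    near += 1
        # An empty strip (very narrow image) is treated as not blocked.
        result[name] = total > 0 and near / total >= blocked_fraction
    return result


def feedback_message(regions: Dict[str, bool]) -> str:
    """Turn the per-strip flags into a short phrase for audio feedback."""
    blocked = [name for name in ("left", "center", "right") if regions[name]]
    if not blocked:
        return "Path clear"
    return "Blocked: " + ", ".join(blocked)


if __name__ == "__main__":
    # Toy 2x6 depth map: only the middle two columns are close.
    depth = [
        [3.0, 3.0, 0.5, 0.5, 3.0, 3.0],
        [3.0, 3.0, 0.5, 0.5, 3.0, 3.0],
    ]
    print(feedback_message(blocked_regions(depth)))  # prints "Blocked: center"
```

On the device, the returned phrase would still need to be spoken or mapped to a sound through the wired headphones. That audio step is not shown here. A fraction threshold, rather than a single nearest pixel, is one simple way to keep a few noisy depth values from triggering a warning.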
