Ray Tracing

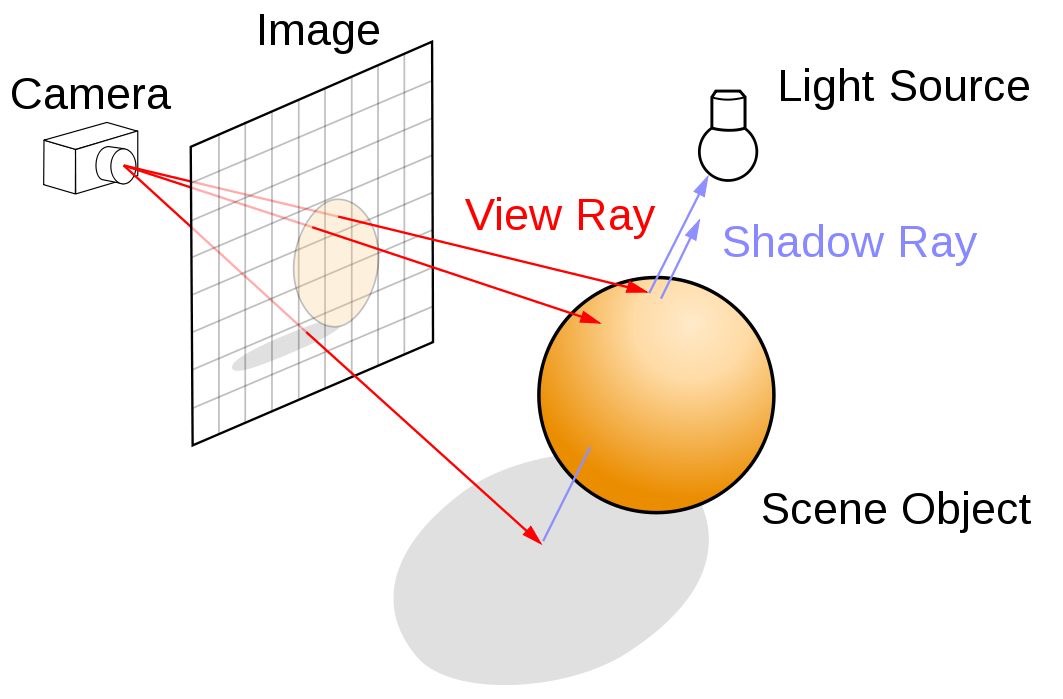

Ray tracing is a rendering technique that can realistically simulate the lighting of a scene and its objects by rendering physically accurate reflections, refractions, shadows, and indirect lighting. Ray tracing generates computer graphics images by tracing the path of light from the view camera (which determines your view into the scene), through the 2D viewing plane (pixel plane), out into the 3D scene, and back to the light sources. As it traverses the scene, the light may reflect from one object to another (causing reflections), be blocked by objects (causing shadows), or pass through transparent or semi-transparent objects (causing refractions). All of these interactions are combined to produce the final color and illumination of a pixel, which is then displayed on the screen. This reverse process, tracing from the eye or camera to the light sources, is used because it is far more efficient than tracing every ray emitted from the light sources in all directions: most of those rays never reach the camera and would contribute nothing to the image.
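The first step described above, sending a ray from the camera through a point on the 2D pixel plane, can be sketched as follows. This is a minimal sketch under illustrative assumptions: a pinhole camera at the origin looking down the negative z axis, a vertical field of view `fov_deg`, and the hypothetical function name `primary_ray`. Real renderers also model camera position, orientation, and lenses.

```python
import math


def primary_ray(px, py, width, height, fov_deg=60.0):
    """Return (origin, direction) of the camera ray through pixel (px, py).

    Assumes a pinhole camera at the origin looking down -z with +y up and a
    vertical field of view of fov_deg degrees (illustrative assumptions).
    Pixel (0, 0) is the top-left corner of the image.
    """
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2.0)
    # Map the pixel center to [-1, 1], then scale by the field of view and
    # aspect ratio to get a point on the image plane at z = -1.
    x = (2.0 * (px + 0.5) / width - 1.0) * aspect * scale
    y = (1.0 - 2.0 * (py + 0.5) / height) * scale
    length = math.sqrt(x * x + y * y + 1.0)
    return (0.0, 0.0, 0.0), (x / length, y / length, -1.0 / length)


# Usage: one primary ray per pixel of a tiny 4x3 image.
rays = [primary_ray(x, y, 4, 3) for y in range(3) for x in range(4)]
print(len(rays))  # 12
```

Each of these rays would then be tested against the scene geometry, as described under ray casting.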

Another way to think of ray tracing is to look around you, right now. The objects you’re seeing are illuminated by beams of light. Now turn that around and follow the path of those beams backwards from your eye to the objects that light interacts with. That’s ray tracing.

The primary application of ray tracing is in computer graphics, both non-real-time (film and television) and real-time (video games). Other applications include those in architecture, engineering, and lighting design.

The following section introduces rendering and ray tracing basics along with commonly used terminology.

Figure 1: Ray Tracing Basics

Ray Tracing Fundamentals

- Ray casting is the process in a ray tracing algorithm that shoots one or more rays from the camera (eye position) through each pixel in an image plane, and then tests to see if the rays intersect any primitives (triangles) in the scene. If a ray passing through a pixel and out into the 3D scene hits a primitive, the distance along the ray from its origin (the camera or eye point) to the primitive is determined; the nearest hit is the visible surface, and its color data contributes to the final color of the pixel. From that hit point, further rays may bounce off or pass through the surface to hit other objects and pick up color and lighting information from them.

- Path Tracing is a more intensive form of ray tracing that traces hundreds or thousands of rays through each pixel. Each ray is followed through numerous bounces off or through objects, in randomly chosen directions, until it reaches a light source, and the color and lighting information collected along all of these paths is averaged to produce the pixel's color.

- Bounding Volume Hierarchy (BVH) is a popular ray tracing acceleration technique that uses a tree-based “acceleration structure” of hierarchically arranged bounding boxes (bounding volumes), each enclosing a different portion of the scene's geometry or primitives. Testing each ray against every primitive in the scene is inefficient and computationally expensive, and a BVH is one of many techniques and optimizations that can be used to accelerate it. The BVH can be organized as different types of tree structures. Each ray is tested against the BVH using a depth-first tree traversal instead of against every primitive in the scene: whenever the ray misses a node's bounding volume, every primitive in that node's subtree can be skipped. Before a scene is rendered for the first time, a BVH must be created from the source geometry (called BVH building). Each subsequent frame requires either a new BVH build or a BVH refit (updating the bounding volumes of the existing tree), depending on how the scene has changed.

- Denoising Filtering is an advanced filtering technique that can improve performance and image quality without requiring additional rays to be cast. Denoising can significantly improve the visual quality of noisy images that might be constructed from sparse data or that contain random artifacts, visible quantization noise, or other types of noise. Denoising is especially effective at reducing the time ray-traced images take to render, because fewer rays can be cast per pixel and the filter removes the resulting noise, producing high-fidelity images that appear visually noiseless. Applications of denoising include real-time ray tracing and interactive rendering, which allows a user to change scene properties dynamically and instantly see the results in the rendered image.
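The intersection test at the heart of ray casting, described in the list above, can be sketched with the Möller-Trumbore ray/triangle algorithm, which is one common choice among several (the text does not prescribe a method). This minimal sketch tests a single ray against a plain list of triangles and keeps the nearest hit, which is exactly the brute-force approach that acceleration structures such as a BVH are designed to avoid. All function names are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersect_triangle(orig, dirn, tri, eps=1e-9):
    """Moller-Trumbore ray/triangle test: distance t to the hit, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(dirn, e2)
    det = dot(e1, p)
    if abs(det) < eps:                # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv_det           # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(dirn, q) * inv_det        # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None     # ignore hits behind the ray origin


def cast_ray(orig, dirn, triangles):
    """Brute force: test every triangle, keep the closest hit as (t, index)."""
    closest = None
    for i, tri in enumerate(triangles):
        t = intersect_triangle(orig, dirn, tri)
        if t is not None and (closest is None or t < closest[0]):
            closest = (t, i)
    return closest


# Usage: two triangles facing the camera at z = -5 and z = -2.
far = ((-1.0, -1.0, -5.0), (1.0, -1.0, -5.0), (0.0, 1.0, -5.0))
near = ((-1.0, -1.0, -2.0), (1.0, -1.0, -2.0), (0.0, 1.0, -2.0))
print(cast_ray((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [far, near]))  # (2.0, 1)
```

The far triangle is also hit (at t = 5.0), but only the nearest hit, the visible surface, is kept.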
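The core idea of path tracing, averaging many randomly sampled light paths per pixel, can be illustrated with a single-bounce Monte Carlo estimator. This is a toy sketch under heavily simplified assumptions: one ideal diffuse (Lambertian) surface lit only by a uniform sky, with every bounce ray assumed to reach that sky, so the exact answer (albedo times sky radiance) is known and the estimate can be checked against it. The names `estimate_radiance` and `sample_uniform_hemisphere` are hypothetical.

```python
import math
import random


def sample_uniform_hemisphere(rng):
    """Pick a random direction, uniformly distributed over the hemisphere around +z."""
    z = rng.random()                      # cos(theta) is uniform on [0, 1)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z)


def estimate_radiance(albedo, sky_radiance, samples, seed=0):
    """Monte Carlo estimate of the light leaving a diffuse surface (normal +z).

    Toy scene (an assumption for illustration): the only light is a uniform
    sky, and every bounce ray escapes to it, so the exact answer is
    albedo * sky_radiance. A full path tracer would keep bouncing instead.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)           # density of uniform hemisphere sampling
    brdf = albedo / math.pi               # Lambertian (ideal diffuse) reflection
    total = 0.0
    for _ in range(samples):
        direction = sample_uniform_hemisphere(rng)
        cos_theta = direction[2]
        total += brdf * sky_radiance * cos_theta / pdf
    return total / samples


# Usage: more samples per pixel give an estimate closer to the exact 0.5.
for n in (4, 64, 4096):
    print(n, estimate_radiance(0.5, 1.0, n))
```

Each individual sample is noisy; the average only converges as the sample count grows, which is why path tracing needs so many rays per pixel.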
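BVH building and depth-first traversal can be sketched as below. This minimal sketch assumes each primitive is represented only by its axis-aligned bounding box and builds the tree with a simple median split along the longest axis; production builders typically use better split heuristics, such as the surface area heuristic (SAH), and refitting is not shown. The function names and the dict-based node layout are hypothetical.

```python
import math


def union(a, b):
    """Smallest box enclosing boxes a and b; a box is (min_xyz, max_xyz)."""
    return (tuple(map(min, a[0], b[0])), tuple(map(max, a[1], b[1])))


def hit_box(orig, inv_dir, box):
    """Slab test: True if the ray hits the axis-aligned box at some t >= 0."""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        ta = (box[0][axis] - orig[axis]) * inv_dir[axis]
        tb = (box[1][axis] - orig[axis]) * inv_dir[axis]
        t_near = max(t_near, min(ta, tb))
        t_far = min(t_far, max(ta, tb))
    return t_near <= t_far


def build(boxes, indices=None, leaf_size=2):
    """Build a BVH node: a dict holding its bounding box plus either two
    children ("left", "right") or a leaf list of primitive indices ("prims")."""
    if indices is None:
        indices = list(range(len(boxes)))
    bounds = boxes[indices[0]]
    for i in indices[1:]:
        bounds = union(bounds, boxes[i])
    if len(indices) <= leaf_size:
        return {"box": bounds, "prims": indices}
    # Median split along the longest axis of the node's bounding box.
    extent = [bounds[1][a] - bounds[0][a] for a in range(3)]
    axis = extent.index(max(extent))
    indices = sorted(indices, key=lambda i: boxes[i][0][axis] + boxes[i][1][axis])
    mid = len(indices) // 2
    return {"box": bounds,
            "left": build(boxes, indices[:mid], leaf_size),
            "right": build(boxes, indices[mid:], leaf_size)}


def traverse(root, boxes, orig, dirn):
    """Depth-first traversal; returns indices of primitives the ray may hit."""
    inv_dir = tuple(1.0 / d if d != 0.0 else math.inf for d in dirn)
    candidates, stack = [], [root]
    while stack:
        node = stack.pop()
        if not hit_box(orig, inv_dir, node["box"]):
            continue                      # the ray misses this whole subtree
        if "prims" in node:
            # A real renderer would run exact ray/primitive tests here.
            candidates.extend(i for i in node["prims"]
                              if hit_box(orig, inv_dir, boxes[i]))
        else:
            stack.append(node["right"])
            stack.append(node["left"])    # visit the left child first
    return sorted(candidates)


# Usage: eight small boxes in a row along x; a ray along +z crosses only one.
boxes = [((float(i), 0.0, 0.0), (i + 0.5, 1.0, 1.0)) for i in range(8)]
root = build(boxes)
print(traverse(root, boxes, (3.2, 0.5, -1.0), (0.0, 0.0, 1.0)))  # [3]
```

In the usage example the ray misses the right half of the tree at its root, so boxes 4 through 7 are never tested individually; this pruning is where a BVH saves work.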
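The basic trade that denoising makes, accepting some blur in exchange for much less noise without casting more rays, can be illustrated with the simplest spatial filter, a box (mean) filter. This is only a stand-in: the denoisers used in real-time ray tracing, including AI-based ones, are far more sophisticated and typically use extra inputs such as surface normals and albedo to avoid blurring across edges. The function name `box_denoise` is hypothetical.

```python
def box_denoise(img, radius=1):
    """Replace each pixel with the mean of its (2*radius+1)^2 neighborhood.

    img is a list of rows of grayscale values; neighbors that fall outside
    the image are skipped, so border pixels average fewer samples.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


# Usage: a checkerboard of +/-0.2 "noise" around a true value of 0.5.
noisy = [[0.5 + (0.2 if (x + y) % 2 else -0.2) for x in range(8)] for y in range(8)]
clean = box_denoise(noisy)
print(max(abs(v - 0.5) for row in clean for v in row))  # about 0.022, versus 0.2 before
```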

Rendering Fundamentals

- Rasterization is a technique used to display three-dimensional objects on a two-dimensional screen. With rasterization, objects on the screen are created from a mesh of virtual triangles, or polygons, of different shapes and sizes. The corners of the triangles, known as vertices, carry a lot of information, including their position in space as well as their color, texture, and “normal,” which is used to determine which way the surface of an object is facing. Computers convert the triangles of the 3D models into pixels, or dots, on a 2D screen. Each pixel can be assigned an initial color value from the data stored in the triangle vertices. Further pixel processing, or “shading,” then adjusts that color based on how the lights in the scene hit the surface and applies one or more textures to generate the final color of the pixel. Rasterization is used in real-time computer graphics because, while still computationally intensive, it is less so than ray tracing.

- Hybrid Rasterization and Ray Tracing is a technique that uses rasterization and ray tracing concurrently to render scenes in games or other applications. Rasterization can determine visible objects and render many areas of a scene well and with high performance. Ray tracing is best utilized for rendering physically accurate reflections, refractions, and shadows. Used together, they are very effective at attaining high quality with good frame rates.
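Rasterization's core step, deciding which pixels a triangle covers and interpolating per-vertex data across them, can be sketched with edge functions and barycentric weights. This is a minimal sketch assuming 2D screen-space vertices and one grayscale color per vertex; it omits depth testing, clipping, perspective-correct interpolation, and the fill rules that real rasterizers apply to pixels on shared edges. The function names are hypothetical.

```python
def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p); its sign says which side of a->b p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(v0, v1, v2, c0, c1, c2, width, height):
    """Return {(x, y): color} for every pixel whose center lies inside the triangle.

    Vertices are 2D screen positions and c0..c2 are per-vertex grayscale
    colors, interpolated across the triangle with barycentric weights.
    """
    area = edge(v0, v1, v2)
    if area == 0:
        return {}                         # degenerate triangle: covers nothing
    pixels = {}
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)        # sample at the pixel center
            w0 = edge(v1, v2, p) / area   # barycentric weight of v0
            w1 = edge(v2, v0, p) / area   # barycentric weight of v1
            w2 = edge(v0, v1, p) / area   # barycentric weight of v2
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                pixels[(x, y)] = w0 * c0 + w1 * c1 + w2 * c2
    return pixels


# Usage: a right triangle on a 4x4 screen, bright only at vertex v1.
covered = rasterize((0, 0), (4, 0), (0, 4), 0.0, 1.0, 0.0, 4, 4)
print(len(covered), covered[(1, 0)])  # 10 0.375
```

Dividing each edge function by the full triangle area makes the weights positive inside the triangle for either vertex winding order.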

Accelerating Ray Tracing with GPUs

Ray tracing is a very computationally intensive technique. Movie makers have traditionally relied on vast numbers of CPU-based render farms, which can still take multiple days to render complex special effects. GPUs can render realistic, movie-quality ray-traced scenes much faster than CPUs, but they were limited by the amount of onboard memory, which determines how complex a scene can be rendered. NVIDIA's Turing GPU architecture adds Tensor Cores for artificial intelligence acceleration, which provide real-time denoising and thereby drastically reduce the number of rays that need to be cast, as well as RT Cores, which accelerate BVH traversal, the most time-consuming part of the ray tracing calculations. These hardware advancements, paired with the powerful software APIs that make up the NVIDIA RTX platform, make real-time ray tracing possible in game engines and digital content creation applications.
