NVIDIA Data Center Deep Learning Product Performance

Reproducible Performance

Training to Convergence

Deploying AI in real-world applications requires training networks to convergence at a specified accuracy. Training to convergence is the best way to test whether an AI system is ready to be deployed in the field and deliver meaningful results.
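As a rough illustration of what "training to convergence at a specified accuracy" means in practice, the sketch below runs epochs until a validation metric reaches a target and reports how many epochs and how much wall-clock time that took. It is a minimal sketch, not NVIDIA's benchmarking harness: `train_to_convergence`, the callbacks, the toy accuracy curve, and the 0.75 target are all hypothetical names and values chosen for the example.

```python
import time
from typing import Callable, Tuple


def train_to_convergence(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    target_accuracy: float,
    max_epochs: int = 100,
) -> Tuple[bool, int, float]:
    """Train until evaluate() reaches target_accuracy or max_epochs is hit.

    Returns (converged, epochs_run, elapsed_seconds).
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        # Convergence is judged on the evaluation metric, not on training loss.
        if evaluate() >= target_accuracy:
            return True, epoch, time.perf_counter() - start
    return False, max_epochs, time.perf_counter() - start


# Toy stand-in for a real model (hypothetical): each "epoch" simply moves
# to the next point on a made-up validation accuracy curve.
_curve = iter([0.52, 0.68, 0.74, 0.76, 0.77])
_state = {"accuracy": 0.0}


def toy_train_one_epoch() -> None:
    _state["accuracy"] = next(_curve)


def toy_evaluate() -> float:
    return _state["accuracy"]


converged, epochs, seconds = train_to_convergence(
    toy_train_one_epoch, toy_evaluate, target_accuracy=0.75, max_epochs=5
)
print(f"converged={converged} epochs={epochs} seconds={seconds:.4f}")
```

The point of the design is that the stopping rule is the accuracy target itself, so the reported time reflects the full cost of reaching a usable model rather than the speed of a single training step.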

AI Inference

Real-world inference demands high throughput and low latency with maximum efficiency across use cases. An industry-leading solution lets customers quickly deploy AI models into production with the highest performance from data center to edge.
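To make the two headline inference metrics concrete, the sketch below times a series of requests and summarizes them as throughput (requests completed per second) and latency percentiles. It assumes a single client issuing requests one at a time; production benchmarks typically add concurrency, batching, and warm-up runs. The names `benchmark`, `summarize`, and `percentile` are hypothetical helpers written for this example, not part of any NVIDIA tool.

```python
import math
import time
from typing import Callable, Dict, List, Sequence


def percentile(sorted_values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of an ascending-sorted sequence."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct / 100.0 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(latencies_s: List[float], wall_time_s: float) -> Dict[str, float]:
    """Reduce per-request latencies to throughput and tail-latency figures."""
    ordered = sorted(latencies_s)
    return {
        "throughput_per_s": len(ordered) / wall_time_s,
        "p50_latency_s": percentile(ordered, 50),
        "p99_latency_s": percentile(ordered, 99),
    }


def benchmark(infer: Callable[[], None], num_requests: int) -> Dict[str, float]:
    """Call infer() num_requests times sequentially and summarize the timings."""
    latencies: List[float] = []
    start = time.perf_counter()
    for _ in range(num_requests):
        t0 = time.perf_counter()
        infer()
        latencies.append(time.perf_counter() - t0)
    return summarize(latencies, time.perf_counter() - start)


# Hypothetical stand-in for a model call: sleep for about one millisecond.
report = benchmark(lambda: time.sleep(0.001), num_requests=20)
print(report)
```

Reporting a tail percentile alongside throughput matters because a system can post high average throughput while still missing latency targets for a small share of requests.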

Conversational AI

NVIDIA Riva is an application framework for multimodal conversational AI services that deliver real-time performance on GPUs.

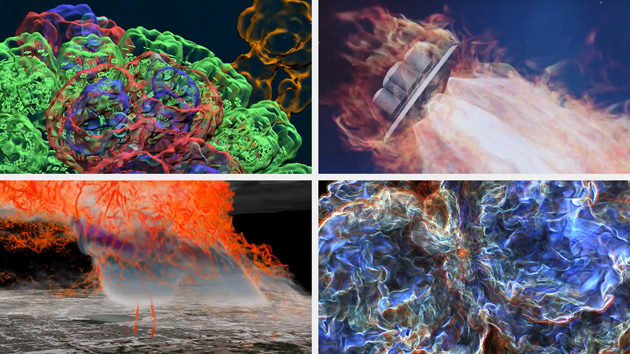

High-Performance Computing (HPC) Acceleration

Modern HPC data centers are crucial for solving key scientific and engineering challenges. NVIDIA Data Center GPUs transform data centers, delivering breakthrough performance with reduced networking overhead, resulting in 5X–10X cost savings.

NVIDIA Blackwell Ultra Delivers up to 50x Better Performance and 35x Lower Cost for Agentic AI

Built to accelerate the next generation of agentic AI, NVIDIA Blackwell Ultra delivers breakthrough inference performance with dramatically lower cost. Cloud providers such as Microsoft, CoreWeave, and Oracle Cloud Infrastructure are deploying NVIDIA GB300 NVL72 systems at scale for low-latency and long-context use cases, such as agentic coding and coding assistants.

This is enabled by deep co-design across NVIDIA Blackwell, NVLink™, and NVLink Switch for scale-out; NVFP4 for low-precision accuracy; and NVIDIA Dynamo and TensorRT™ LLM for speed and flexibility—as well as development with community frameworks SGLang, vLLM, and more.

Deep Learning Product Performance Resources

Explore software containers, models, Jupyter notebooks, and documentation.