By Tomasz Bednarz (GTC 2012 Guest Blogger)

I had been eagerly anticipating the “Inside Kepler” session since GTC 2012 opened. On Wednesday arvo, May 16th, two NVIDIA blokes, Stephen Jones (CUDA Model Lead) and Lars Nyland (Senior Architect), warmly introduced the new Kepler GK110 GPU, proudly announcing that it is all about “performance, efficiency and programmability.”

Lars and Stephen revealed that GK110 has 7.1B transistors (wow!), 15 SMX units, >1 TFLOP fp64, 1.5MB L2 cache, 384-bit GDDR5 and PCI-Express Gen 3. NVIDIA looked high and low to reduce power consumption and increase performance, and after many months of tough design and testing in their labs, the NVIDIA mates emerged with an awesome GPU that greatly exceeds Fermi’s compute horsepower, while consuming less power and generating less heat. Corker of a GPU!

The new SMX uses a power-aware architecture, which simply translates to “effective performance.” Each GK110 SMX unit has 192 single-precision CUDA cores, 64 double-precision units, 32 special function units and 32 load/store units. The resource comparison slide of Kepler GK110 versus Fermi showed a 2-3x increase in floating point throughput and 2x increase in max blocks per SM, 2x register file bandwidth, 2x register file capacity and 2x shared memory bandwidth. Good on ya NVIDIA!

Also, according to Stephen and Lars, a new ISA encoding enables four times more registers to be indexed per thread: 255 compared to 63 in Fermi. The register limit was often a bottleneck for scientific codes, especially those that use double precision floating point; a ripper now! QUDA was mentioned as a great example, with a kernel that used 110 registers per thread. One QUDA kernel with a major register spilling bottleneck on Fermi ran multiple times faster on GK110, where there was no spilling.

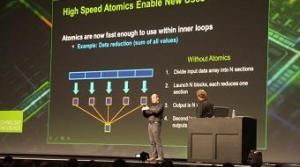

SMX also adds several new high-performance instructions. Examples include SHFL (“shuffle”), for intra-warp data exchange, and atomic instructions with extended functionality and faster execution. Additional compiler-generated high-performance instructions include bit shift, bit rotate, fp32 division and read-only caching. These new instructions were beautifully illustrated using simple examples presented by the speakers. I agree 100% with their opinion that higher-speed atomics will enable many new uses. Stephen presented a data reduction example as a case study of how faster atomics make programming easier.
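To make the shuffle-plus-atomics idea concrete, here is a minimal sketch of a sum reduction. It is not the code Stephen showed; the names `warpReduceSum`, `sumKernel` and `sumOnDevice` are my own, and the launch configuration is an arbitrary assumption. It uses `__shfl_down_sync`, the form required by current CUDA toolkits (Kepler-era toolkits spelled it `__shfl_down`, without the mask argument).

```cuda
#include <cuda_runtime.h>

// Sum the values held by the 32 threads of a warp using shuffle.
// After the loop, lane 0 holds the total for the whole warp.
__inline__ __device__ float warpReduceSum(float val) {
    for (int offset = warpSize / 2; offset > 0; offset /= 2) {
        val += __shfl_down_sync(0xffffffffu, val, offset);
    }
    return val;
}

// Hypothetical kernel: every thread builds a private partial sum,
// warps combine their partials with shuffle, and one atomic per warp
// adds the result to the global total.
__global__ void sumKernel(const float* in, float* out, int n) {
    float val = 0.0f;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += blockDim.x * gridDim.x) {
        val += in[i];
    }
    val = warpReduceSum(val);
    if ((threadIdx.x & (warpSize - 1)) == 0) {
        atomicAdd(out, val);
    }
}

// Hypothetical host helper; error checking omitted for brevity.
float sumOnDevice(const float* hostData, int n) {
    float* dIn = nullptr;
    float* dOut = nullptr;
    float result = 0.0f;
    cudaMalloc(&dIn, n * sizeof(float));
    cudaMalloc(&dOut, sizeof(float));
    cudaMemcpy(dIn, hostData, n * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemset(dOut, 0, sizeof(float));
    // 64 blocks of 256 threads is an assumed configuration; the block
    // size must be a multiple of 32 so that every warp is full.
    sumKernel<<<64, 256>>>(dIn, dOut, n);
    cudaMemcpy(&result, dOut, sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dOut);
    return result;
}
```

Notice there is no shared memory and no `__syncthreads()` anywhere, which is the "easier programming" part. The order of floating point additions is not fixed when atomics are used, so results can differ in the last bits from run to run.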

There was also a big emphasis on the (read-only) texture cache, which now allows global addresses to be fetched and cached without the need to map a texture. Code for this usage is automatically generated by the compiler when pointers declared “const __restrict” are accessed within a kernel.
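As a rough illustration of the read-only path, here is a small SAXPY sketch. The kernels and the `runSaxpy` helper are my own examples, not something from the talk. In CUDA C++ the qualifier is spelled `__restrict__`; whether the compiler actually routes the loads through the read-only cache is its decision, and `__ldg()` (available on compute capability 3.5 and higher) is the explicit way to ask for it.

```cuda
#include <cuda_runtime.h>

// y = a * x + y. Marking x as const and both pointers as __restrict__
// tells the compiler that x is never written and never aliases y,
// which allows it to load x through the read-only data cache.
__global__ void saxpy(int n, float a,
                      const float* __restrict__ x,
                      float* __restrict__ y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        y[i] = a * x[i] + y[i];
    }
}

// Same computation, requesting the read-only load explicitly.
__global__ void saxpyLdg(int n, float a, const float* x, float* y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        y[i] = a * __ldg(&x[i]) + y[i];
    }
}

// Hypothetical host helper; error checking omitted for brevity.
// On return, hy holds the updated values.
void runSaxpy(int n, float a, const float* hx, float* hy) {
    float* dx = nullptr;
    float* dy = nullptr;
    size_t bytes = n * sizeof(float);
    cudaMalloc(&dx, bytes);
    cudaMalloc(&dy, bytes);
    cudaMemcpy(dx, hx, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dy, hy, bytes, cudaMemcpyHostToDevice);
    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    saxpy<<<blocks, threads>>>(n, a, dx, dy);
    cudaMemcpy(hy, dy, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dx);
    cudaFree(dy);
}
```

No texture reference or binding call appears anywhere, which is the whole point.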

There would be no good presentation without a great visualization. The Bonsai GPU Tree Code, which we had seen in the keynote by Jen-Hsun Huang, was displayed again, giving us another few seconds to reflect on the future of our kids and grand-kids. The Bonsai code does lots of reductions and scans, and Stephen mentioned that a 20% speedup was achieved on the main tree traversal kernel by using the SHFL instruction.

GK110 enables a new feature in CUDA, “Dynamic Parallelism”, which allows launching new grids from the GPU. There is no longer a need to launch every kernel from the host side; now GPU threads can launch kernels too. If I understood correctly, the overhead of a GPU-side launch will be about the same as that of a CPU-side launch. The benefits of CUDA Dynamic Parallelism come from the ability to focus computational resources on regions that require higher accuracy, and from the easier programming that results.
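Here is how I imagine a GPU-side launch looks in code; treat it as a sketch under my own assumptions rather than the speakers' example. The kernels `coarsePass` and `refineRegion`, the flag array and the helper `refineFlagged` are all hypothetical, and the "refinement" is just a multiplication standing in for real work. Building it requires relocatable device code (`nvcc -rdc=true`) and a device of compute capability 3.5 or higher.

```cuda
#include <cuda_runtime.h>

// Child kernel: stand-in for the finer work done on one region.
__global__ void refineRegion(float* data, int offset, int count) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < count) {
        data[offset + i] *= 2.0f;
    }
}

// Parent kernel: one thread per region. Only threads whose region is
// flagged launch a child grid, so extra work goes where it is needed.
__global__ void coarsePass(float* data, const int* needsRefinement,
                           int regionSize, int numRegions) {
    int region = blockIdx.x * blockDim.x + threadIdx.x;
    if (region < numRegions && needsRefinement[region]) {
        int threads = 128;
        int blocks = (regionSize + threads - 1) / threads;
        refineRegion<<<blocks, threads>>>(data, region * regionSize,
                                          regionSize);
    }
}

// Hypothetical host helper; error checking omitted for brevity.
// The parent grid is not complete until all of its child grids are,
// so the copy back to the host sees the refined data.
void refineFlagged(float* hData, const int* hFlags,
                   int regionSize, int numRegions) {
    float* dData = nullptr;
    int* dFlags = nullptr;
    size_t dataBytes = (size_t)regionSize * numRegions * sizeof(float);
    size_t flagBytes = numRegions * sizeof(int);
    cudaMalloc(&dData, dataBytes);
    cudaMalloc(&dFlags, flagBytes);
    cudaMemcpy(dData, hData, dataBytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dFlags, hFlags, flagBytes, cudaMemcpyHostToDevice);
    int threads = 64;
    int blocks = (numRegions + threads - 1) / threads;
    coarsePass<<<blocks, threads>>>(dData, dFlags, regionSize, numRegions);
    cudaDeviceSynchronize();
    cudaMemcpy(hData, dData, dataBytes, cudaMemcpyDeviceToHost);
    cudaFree(dData);
    cudaFree(dFlags);
}
```

Without Dynamic Parallelism, the flags would have to travel back to the host, which would then launch one refinement kernel per flagged region.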

Lars and Stephen showed Mandelbrot fractal and jet flow examples. I have often needed something like CUDA Dynamic Parallelism for my CFD simulations of boundary layer flows! The region of interest there is mainly around rigid walls and requires finer adaptive meshing to improve resolution and accuracy.

Q&A revealed that the warp size on GK110 remains the same as on Fermi (32 threads). Someone from the audience also asked for a “configurable warp” size. I wonder if this is possible.

I enjoyed the talk. Good on ya Stephen and Lars! Now I’m looking forward to trying GK110 myself! Be sure to check out the NVIDIA Kepler GK110 White Paper.

Follow this link to watch a streamcast of this presentation: Inside Kepler

About our Guest Blogger:

Tomasz Bednarz works at CSIRO as a Computational Research Scientist, and he is co-organizer of the Sydney GPU Meetup Group.
